Object and fractal-based multi-ocular three-dimensional video compression encoding and decoding method

Stereoscopic video compression coding technology, applied in stereoscopic systems, digital video signal modification, television, etc. It addresses the problems of slow coding speed, a large amount of computation, and difficulty in meeting requirements for both compression time and image quality.

Detailed Description of the Embodiments

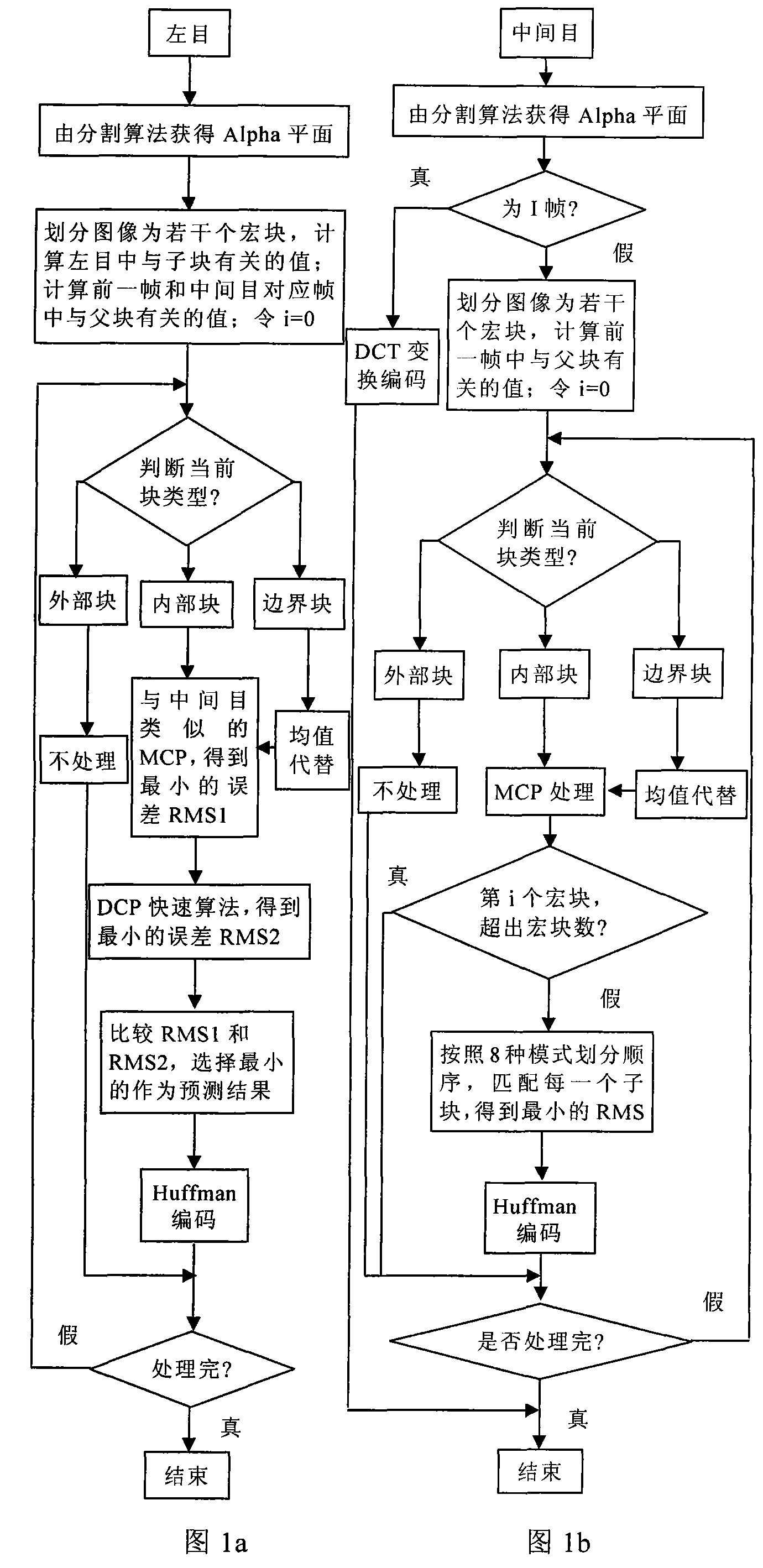

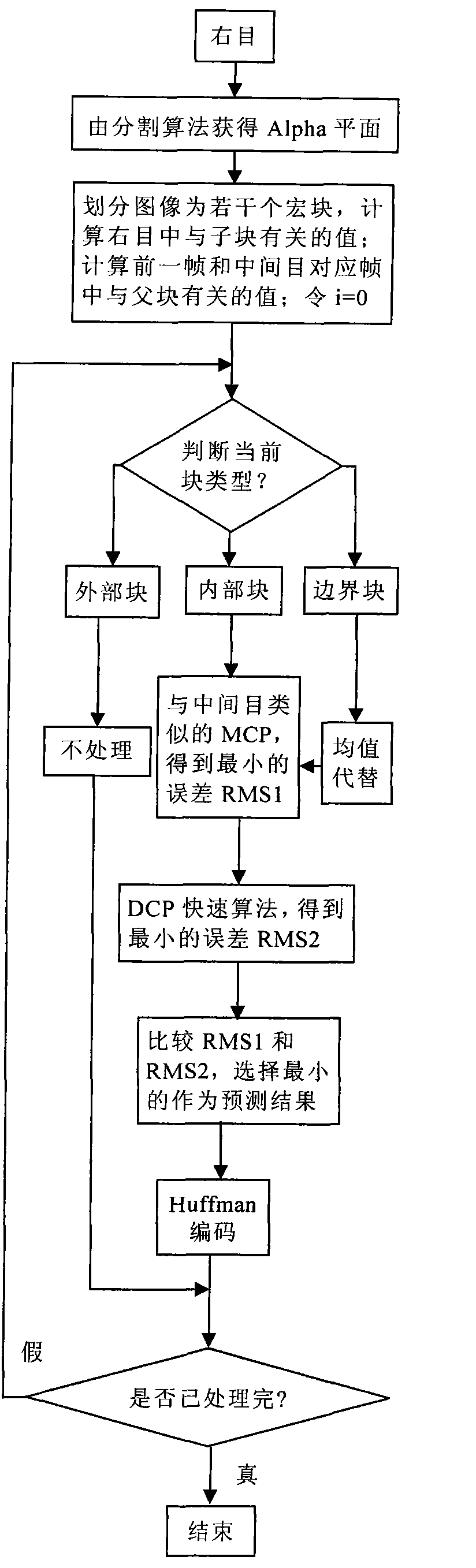

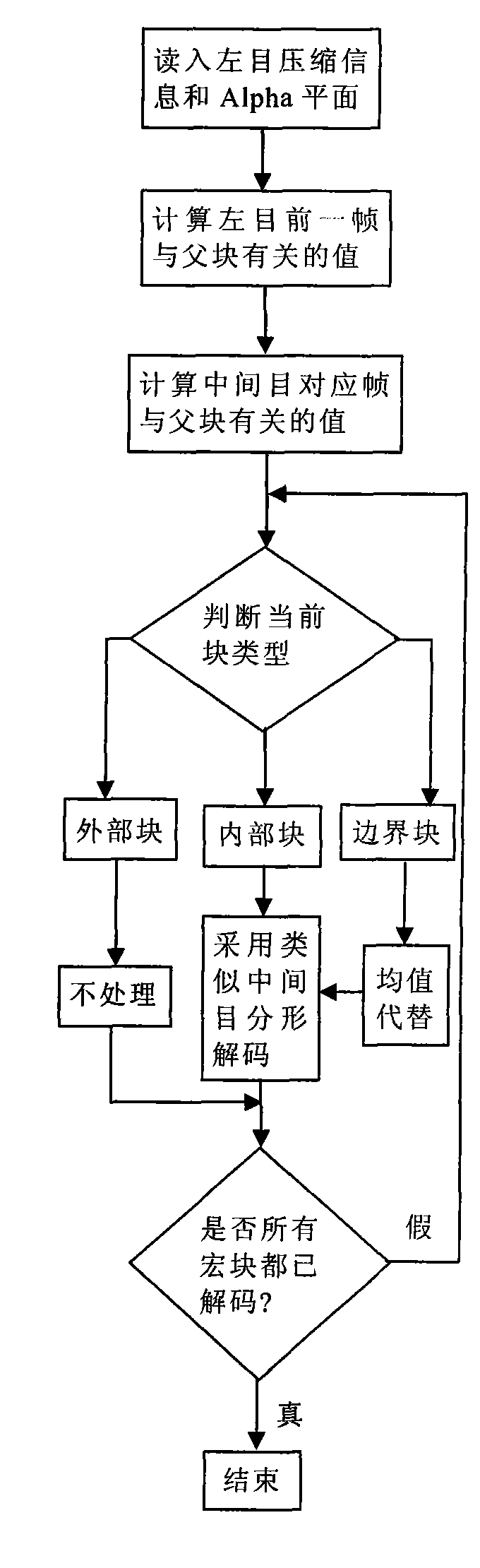

[0075] The method of the present invention is described in further detail below with reference to the accompanying drawings. Only the luminance component Y is used as an example. The compression steps for the chrominance components U and V are the same as those for the luminance component.

[0076] The invention proposes an object- and fractal-based method for compressing and decompressing multi-view (multi-ocular) stereoscopic video. In multi-view stereoscopic video coding, the middle view is selected as the reference view and is compressed using motion compensated prediction (MCP). The other views are compressed using disparity compensated prediction combined with motion compensated prediction (DCP+MCP). Taking trinocular video as an example, the middle-view video serves as the reference view and is encoded with MCP alone. First, a video segmentation method is used to obtain the video object segmentation plane, i.e., the Alpha plane, and block DCT transform coding is applied to the initial frame. ...
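The prediction structure described in [0076] can be illustrated with a minimal sketch under stated assumptions. This is not the patent's implementation. The following are all hypothetical:

- the view labels "L", "C" and "R"
- the function names
- the 4×4 block size and the ±2 search range

The block matcher uses the affine luminance fit r ≈ s·d + o that is common in fractal video coding, because the excerpt does not spell out the patent's actual matching criterion. Pixels outside the video object (Alpha plane value 0) are excluded from the fit, which is one plausible way the object mask could restrict coding. Choosing the lower-error candidate between the MCP and DCP references for side views is also an assumption.

```python
# Hypothetical sketch: object-masked fractal block matching with MCP/DCP reference selection.
from typing import Dict, List, Optional, Tuple

Frame = List[List[float]]
Mask = List[List[int]]  # Alpha plane: 1 = pixel belongs to the video object, 0 = background
FrameId = Tuple[str, int]  # (view label, frame index); labels "L", "C", "R" are hypothetical
Match = Tuple[int, int, float, float, float]  # (dx, dy, s, o, squared error)


def reference_frames(view: str, t: int) -> List[FrameId]:
    """Trinocular structure: middle view 'C' uses MCP only; side views use DCP+MCP."""
    refs = [(view, t - 1)]  # MCP: previous frame of the same view
    if view != "C":
        refs.append(("C", t))  # DCP: same-instant frame of the middle (reference) view
    return refs


def fit_affine(r: List[float], d: List[float]) -> Tuple[float, float, float]:
    """Least-squares scale s and offset o minimizing sum((s*d + o - r)^2)."""
    n = len(r)
    sd, sr = sum(d), sum(r)
    sdd = sum(x * x for x in d)
    sdr = sum(x * y for x, y in zip(d, r))
    denom = n * sdd - sd * sd
    s = 0.0 if denom == 0 else (n * sdr - sd * sr) / denom  # flat domain block: offset only
    o = (sr - s * sd) / n
    err = sum((s * x + o - y) ** 2 for x, y in zip(d, r))
    return s, o, err


def match_block(cur: Frame, alpha: Mask, ref: Frame, bx: int, by: int,
                size: int, search: int) -> Optional[Match]:
    """Search a +/-search window in ref for the block that best predicts cur's object pixels."""
    coords = [(y, x) for y in range(by, by + size)
              for x in range(bx, bx + size) if alpha[y][x]]
    if not coords:
        return None  # block lies entirely in the background: nothing to code
    r = [cur[y][x] for y, x in coords]
    h, w = len(ref), len(ref[0])
    best: Optional[Match] = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            if by + dy < 0 or by + dy + size > h or bx + dx < 0 or bx + dx + size > w:
                continue  # candidate block falls outside the reference frame
            d = [ref[y + dy][x + dx] for y, x in coords]
            s, o, err = fit_affine(r, d)
            if best is None or err < best[4]:
                best = (dx, dy, s, o, err)
    return best


def best_prediction(frames: Dict[FrameId, Frame], view: str, t: int, alpha: Mask,
                    bx: int, by: int, size: int = 4, search: int = 2
                    ) -> Optional[Tuple[FrameId, Match]]:
    """Try each allowed reference (MCP, plus DCP for side views); keep the lowest error."""
    best: Optional[Tuple[FrameId, Match]] = None
    for ref_id in reference_frames(view, t):
        if ref_id not in frames:
            continue  # e.g. no previous frame exists for the initial frame
        m = match_block(frames[(view, t)], alpha, frames[ref_id], bx, by, size, search)
        if m is not None and (best is None or m[4] < best[1][4]):
            best = (ref_id, m)
    return best
```

Consider a right-view frame. Here `best_prediction(frames, "R", t, alpha, bx, by)` compares a temporal match in `("R", t - 1)` with an inter-view match in `("C", t)`. A complete coder would then entropy-code the chosen reference together with (dx, dy, s, o).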
