Self-adaptive pre-reading method based on file system buffer

A file system pre-reading technology, applied in memory systems, special data processing applications, memory address/allocation/relocation, etc., that achieves the effects of keeping little historical information, reducing reads, and using simple calculation logic.

Embodiment Construction

[0025] The implementation of the present invention will be described in detail below in conjunction with the accompanying drawings and preferred embodiments.

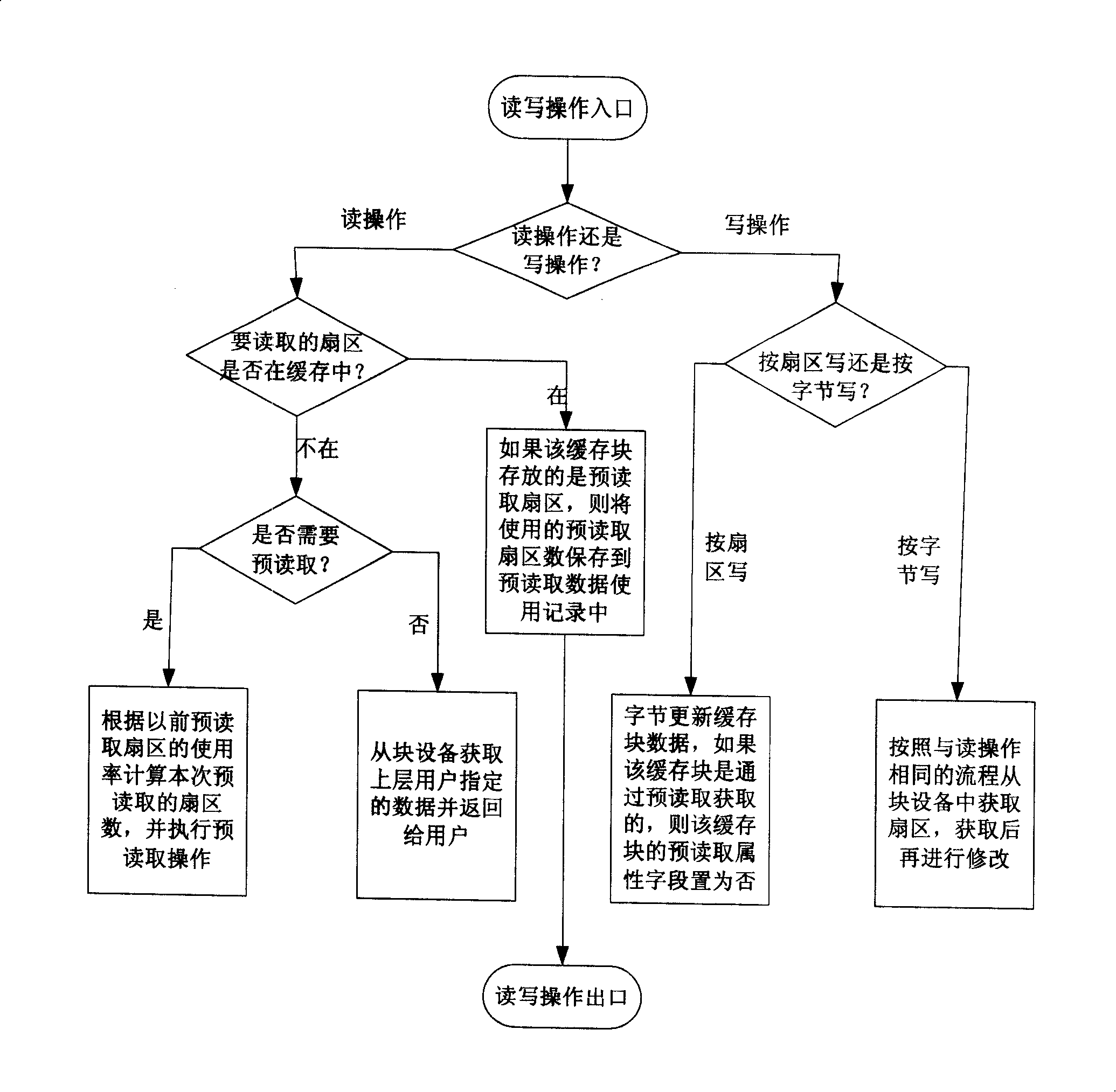

[0026] The method of the present invention can adaptively adjust the pre-read data size based on the file system cache, and it includes the following basic processing steps:

[0027] Create a pre-read sector usage record;

[0028] Each time a pre-read operation is performed, record the number of sectors pre-read in the pre-read sector usage record;

[0029] When pre-read sectors are used, record the number of pre-read sectors used in the pre-read sector usage record;

[0030] Calculate the usage rate of the previous pre-read from the number of sectors that were pre-read and the number of those sectors that were used, and determine the number of sectors for the current pre-read operation according to that usage rate.
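
The four steps above can be sketched roughly as follows. This is only an illustrative sketch, not the patented implementation. The source does not state how the usage rate maps to the next pre-read size, so the thresholds (`GROW_ABOVE`, `SHRINK_BELOW`), the bounds (`MIN_SECTORS`, `MAX_SECTORS`), and the doubling/halving policy are all assumptions. The names `PrereadRecord`, `preread_sectors`, `used_sectors`, `do_preread`, and `mark_used` are hypothetical as well.

```python
from dataclasses import dataclass


@dataclass
class PrereadRecord:
    """Usage record for the most recent pre-read (field names are hypothetical)."""
    preread_sectors: int = 0  # sectors fetched by the last pre-read
    used_sectors: int = 0     # of those, how many were later read from the cache

    def usage_rate(self) -> float:
        # With no previous pre-read there is no history to judge by.
        if self.preread_sectors == 0:
            return 0.0
        return self.used_sectors / self.preread_sectors


# Assumed policy constants; the source gives no thresholds or bounds.
MIN_SECTORS = 8
MAX_SECTORS = 256
GROW_ABOVE = 0.75
SHRINK_BELOW = 0.25


def next_preread_size(record: PrereadRecord) -> int:
    """Pick the sector count for the current pre-read from the previous usage rate."""
    if record.preread_sectors == 0:
        return MIN_SECTORS  # first pre-read: start small
    rate = record.usage_rate()
    size = record.preread_sectors
    if rate >= GROW_ABOVE:
        size *= 2           # assumed: most pre-read data was used, so read more
    elif rate < SHRINK_BELOW:
        size //= 2          # assumed: most pre-read data was wasted, so read less
    return max(MIN_SECTORS, min(MAX_SECTORS, size))


def do_preread(record: PrereadRecord) -> int:
    """Start a new pre-read: choose its size, then reset the record for it."""
    size = next_preread_size(record)
    record.preread_sectors = size
    record.used_sectors = 0
    return size


def mark_used(record: PrereadRecord, sectors: int) -> None:
    """Count sectors served from pre-read data, capped at what was fetched."""
    record.used_sectors = min(record.preread_sectors, record.used_sectors + sectors)
```

Under these assumptions the only history kept is two counters for the previous pre-read, and the decision is a single division and comparison, which is in line with the small-history, simple-logic effect the summary claims.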

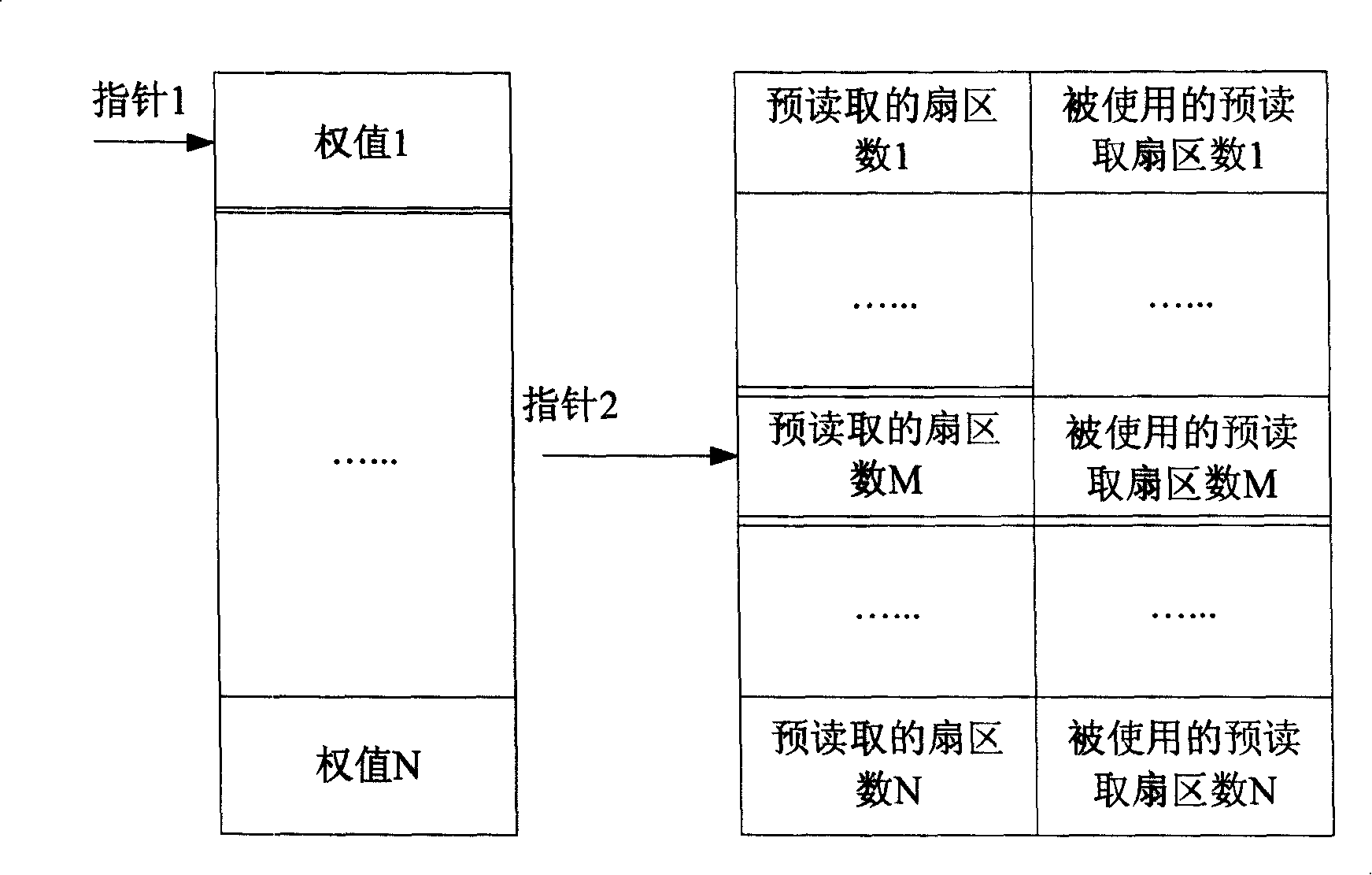

[0031] The data structure of the pre-read sector use record of the present invention at least includes two fi...
