Computing architecture with peripherals

A computing architecture and peripheral technology, applied in the fields of digital storage, memory addressing/allocation/relocation, instruments, etc., that can address severe real-time problems and unwanted timing interference ...

Embodiment Construction

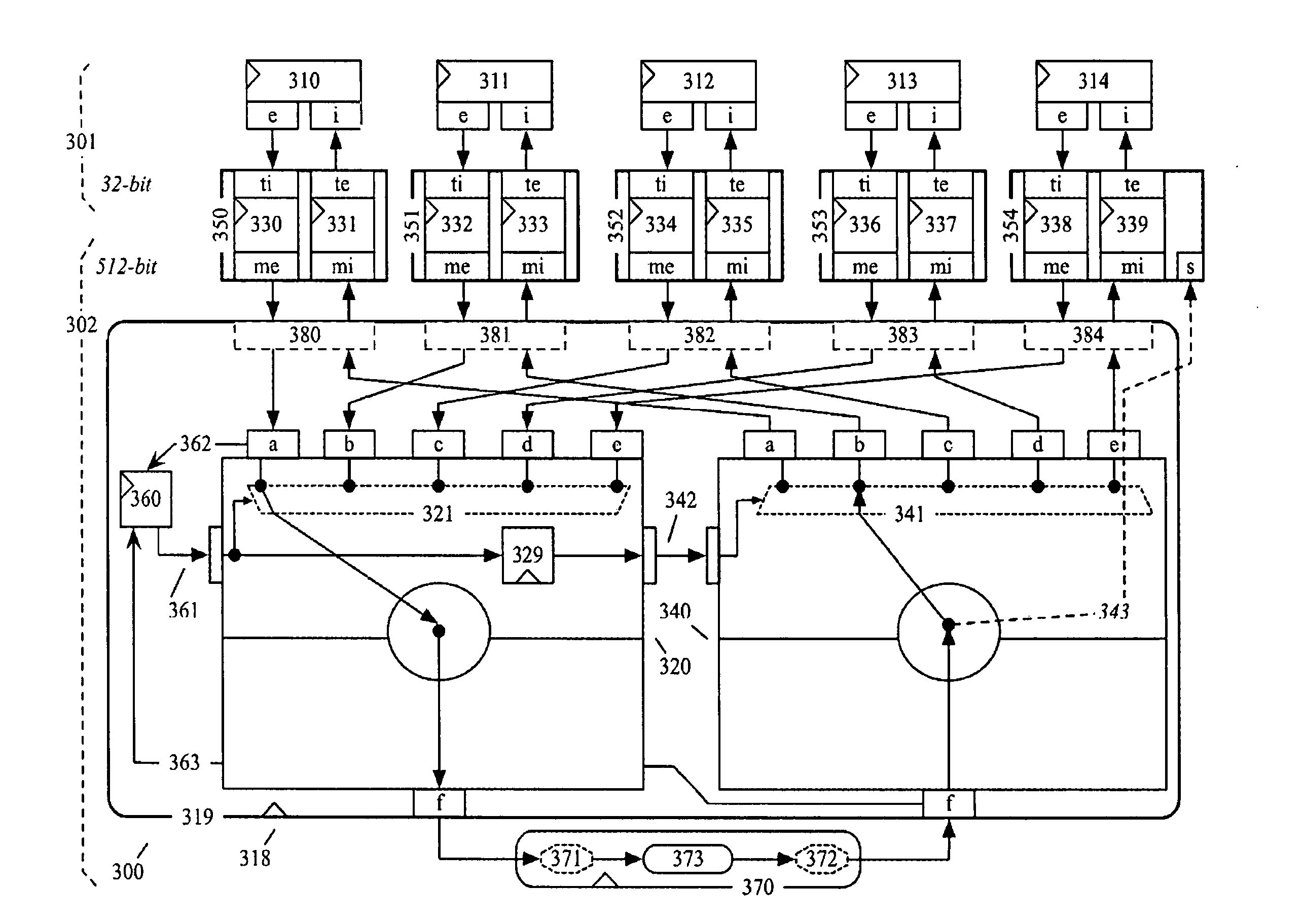

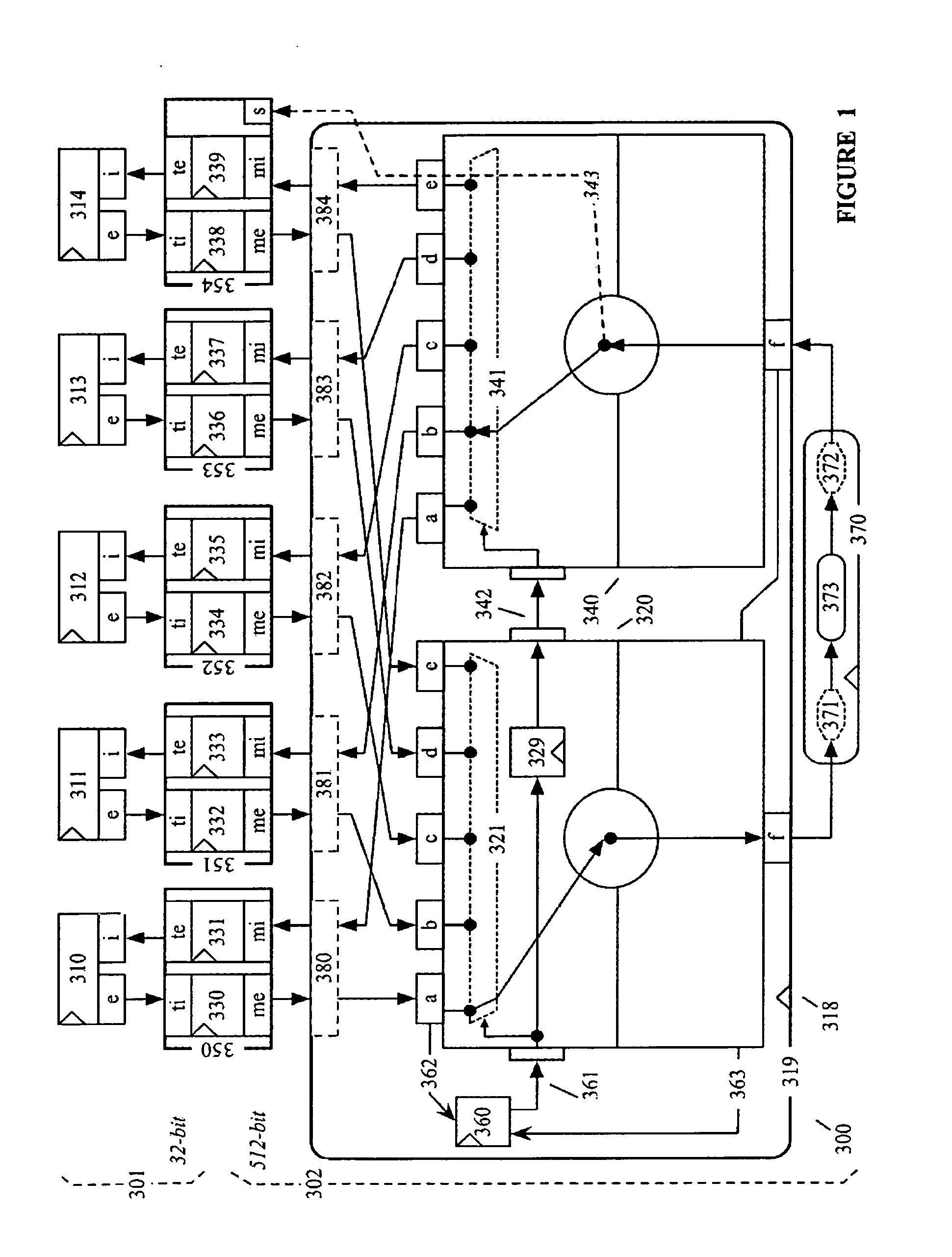

[0150] FIG. 1 is a block schematic diagram illustrating portions of a shared memory computing architecture (300) for preferred embodiments of the present invention. Shared memory computing architecture (300) comprises five unidirectional interconnect bridges (350, 351, 352, 353, 354). Each unidirectional interconnect bridge (350, 351, 352, 353, 354) comprises:

- [0151] an interconnect target port ({350.ti, 350.te}, {351.ti, 351.te}, {352.ti, 352.te}, {353.ti, 353.te}, {354.ti, 354.te}) comprising:
  - [0152] an ingress port (350.ti, 351.ti, 352.ti, 353.ti, 354.ti); and
  - [0153] an egress port (350.te, 351.te, 352.te, 353.te, 354.te);
- [0154] an interconnect master port ({350.mi, 350.me}, {351.mi, 351.me}, {352.mi, 352.me}, {353.mi, 353.me}, {354.mi, 354.me}) comprising:
  - [0155] an ingress port (350.mi, 351.mi, 352.mi, 353.mi, 354.mi); and
  - [0156] an egress port (350.me, 351.me, 352.me, 353.me, 354.me);
- [0157] a memory transfer request module (330, 332, 334, 336, 338) comprising:
  - [0158] an ingress port (350.ti, ...
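The port structure listed above can be pictured as a small data model. The following is a minimal sketch, not the patent's implementation: the class names `Port` and `UnidirectionalBridge` and the helper `make_bridge` are hypothetical, and it assumes each bridge's port labels follow the pattern `N.ti` / `N.te` (target ingress and egress) and `N.mi` / `N.me` (master ingress and egress) for bridge reference numeral `N`. The memory transfer request module is left out because its description is truncated in the source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Port:
    """A port made of one ingress side and one egress side (labels only)."""
    ingress: str
    egress: str


@dataclass(frozen=True)
class UnidirectionalBridge:
    """Hypothetical model of one unidirectional interconnect bridge."""
    ref: int       # reference numeral, e.g. 350
    target: Port   # interconnect target port {N.ti, N.te}
    master: Port   # interconnect master port {N.mi, N.me}


def make_bridge(ref: int) -> UnidirectionalBridge:
    # Assumption: labels follow the N.ti / N.te / N.mi / N.me naming pattern.
    return UnidirectionalBridge(
        ref=ref,
        target=Port(ingress=f"{ref}.ti", egress=f"{ref}.te"),
        master=Port(ingress=f"{ref}.mi", egress=f"{ref}.me"),
    )


# The five bridges named in paragraph [0150].
BRIDGE_REFS = (350, 351, 352, 353, 354)
bridges = [make_bridge(ref) for ref in BRIDGE_REFS]

if __name__ == "__main__":
    for b in bridges:
        print(b.ref, b.target.ingress, b.target.egress,
              b.master.ingress, b.master.egress)
```

The sketch only records the labels and their grouping; it says nothing about how memory transfer requests actually flow through the ports, which the truncated description does not cover.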
