Virtual Fixtures for Improved Performance in Human/Autonomous Manipulation Tasks

a technology of virtual fixtures and tasks, applied in the field of virtual fixtures, can solve the problems of inability of the hip to penetrate the virtual environment objects, the penetration rate is high, and the direct method suffers, so as to save equipment, save time, and save tim

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Benefits of technology

Problems solved by technology

Method used

Image

Examples

example implementation

[0139

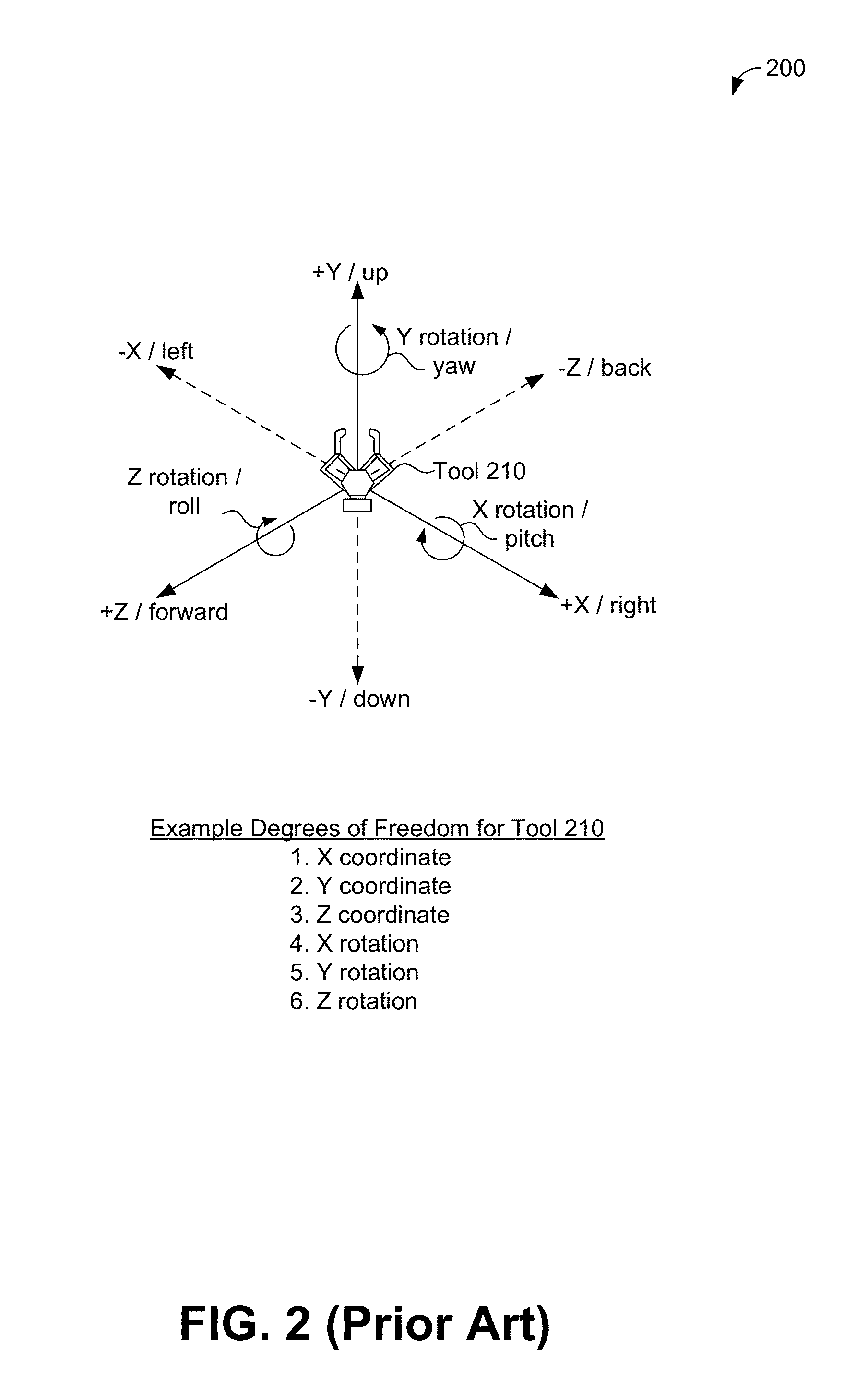

[0140]As an example, the herein-described 6-DOF haptic rendering algorithm was implemented on a desktop computer (AMD Phantom II λ6 with a Radeon HD 6990 GPU) running Ubuntu 11.10. The force was calculated asynchronously at 1000 Hz in a separate thread. During typical interaction, the collision detection algorithm ran at 15 kHz. Point images were filtered and normal vectors were calculated for every point using the GPU.

[0141]Realtime processing was achieved using a neighborhood of 9×9 points for filtering as well as normal vector calculation. The position of the haptic rendering device was both controlled automatically (for purposes of producing accurate results) as well as with a Phantom Omni haptic rendering device. Using the latter, only translational forces could be perceived by the user since the Phantom Omni only provides 3 DOFs of sensation.

[0142]To evaluate the presented haptic rendering method in a noise free environment, a virtual box (with 5 sides but no top) was con...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com