Locality with parallel hierarchical copying garbage collection

A parallel hierarchical copying garbage collection technique, applied in the field of automatic memory management, addresses the problem of computer programs wasting a lot of time and achieves the effect of reducing cache and TLB misses.


Embodiment Construction

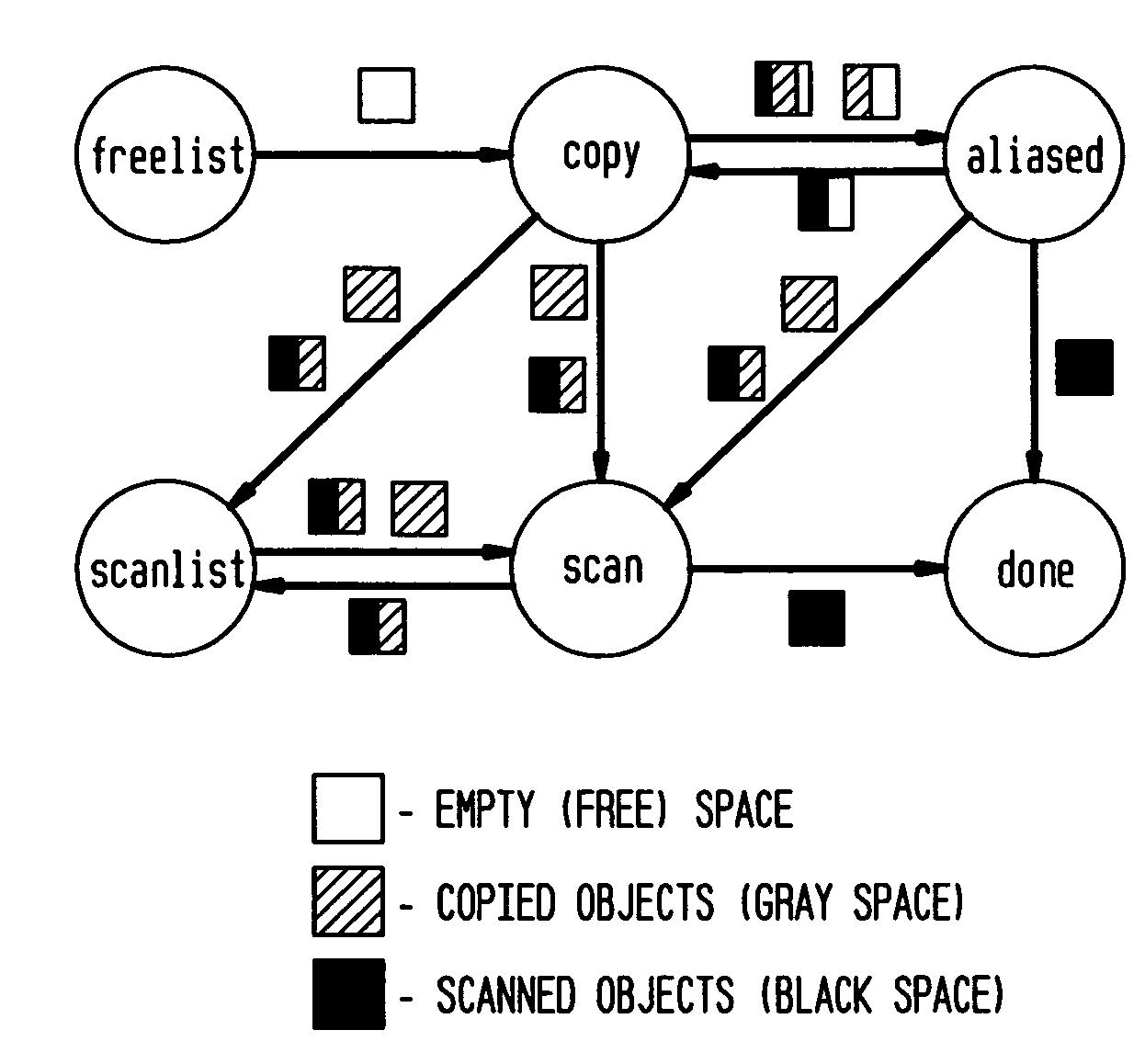

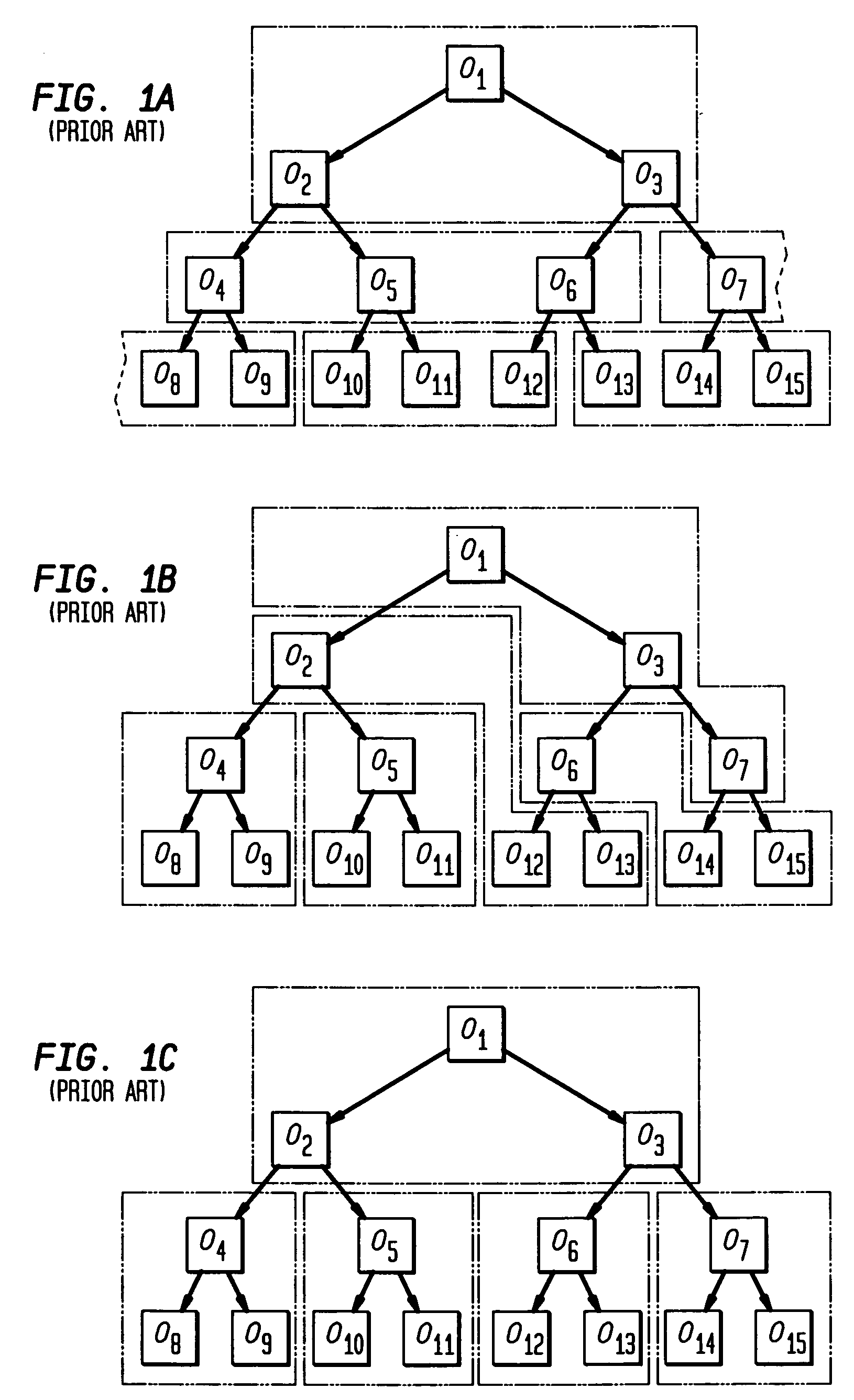

[0025] In accordance with the present invention, a garbage collection algorithm is provided that achieves hierarchical copy order with parallel garbage collection threads. FIGS. 2 and 3 illustrate, as an example, one suitable computer system in which the present invention may be used. This computer system 100, according to the present example, includes a controller/processor 102, which processes instructions, performs calculations, and manages the flow of information through the computer system 100. Additionally, the controller/processor 102 is communicatively coupled with program memory 104. Included within program memory 104 are a garbage collector 106, operating system platform 110, Java Programming Language 112, Java Virtual Machine 114, glue software 116, a memory allocator 202, Java application 204, a compiler 206, and a type profiler 208. It should be noted that while the present invention is demonstrated using the Java Programming Language, it would be obvious to those of ord...
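As a rough illustration of what "hierarchical copy order" means, the following single-threaded sketch compares it with the breadth-first order of a classic copying collector. It is not the parallel algorithm of the invention, which the excerpt above does not spell out. The function names, the dictionary-based object graph, and the `group_size` parameter are all assumptions made for this example. The sketch only simulates the order in which objects would be placed in to-space and counts how many parent-child references end up in the same fixed-size block.

```python
from collections import deque


def build_tree(node_count):
    """Hypothetical object graph: a binary tree stored as {object: [children]}."""
    return {
        i: [c for c in (2 * i + 1, 2 * i + 2) if c < node_count]
        for i in range(node_count)
    }


def breadth_first_order(graph, root):
    """Placement order of a plain breadth-first (Cheney-style) copy."""
    order, seen, queue = [], {root}, deque([root])
    while queue:
        obj = queue.popleft()
        order.append(obj)
        for child in graph[obj]:
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return order


def hierarchical_order(graph, root, group_size):
    """Simplified hierarchical order: copy a small breadth-first group
    (at most group_size objects) rooted at one object, then start new
    groups at the children that did not fit."""
    order, seen = [], {root}
    pending = deque([root])  # roots of groups that still have to be placed
    while pending:
        group = deque([pending.popleft()])
        placed = 0
        while group:
            obj = group.popleft()
            order.append(obj)
            placed += 1
            for child in graph[obj]:
                if child in seen:
                    continue
                seen.add(child)
                if placed + len(group) < group_size:
                    group.append(child)    # still room in the current group
                else:
                    pending.append(child)  # becomes the root of a later group
    return order


def same_block_edges(graph, order, block_size):
    """Count parent-child references whose endpoints share a to-space block."""
    position = {obj: index for index, obj in enumerate(order)}
    return sum(
        1
        for parent, children in graph.items()
        for child in children
        if position[parent] // block_size == position[child] // block_size
    )


if __name__ == "__main__":
    tree = build_tree(15)
    bfs = breadth_first_order(tree, 0)
    hier = hierarchical_order(tree, 0, group_size=3)
    print("breadth-first:", bfs, same_block_edges(tree, bfs, 3))
    print("hierarchical: ", hier, same_block_edges(tree, hier, 3))
```

On this 15-object tree with blocks of three objects, the breadth-first order keeps 2 of the 14 parent-child references inside one block, while the hierarchical order keeps 10. In a real collector the block would correspond to a cache line or page, which is the sense in which a hierarchical order can reduce cache and TLB misses.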
