Method and device for synchronizing portrait mouth shape and audio, and storage medium

This audio and portrait technology is applied in the field of audio and video synthesis. It addresses the problem that existing approaches cannot balance character image quality against production difficulty. Its stated effects are easing production, reducing the difficulty and cost of implementation, and achieving the mouth-shape image effect.


Examples

Embodiment 1

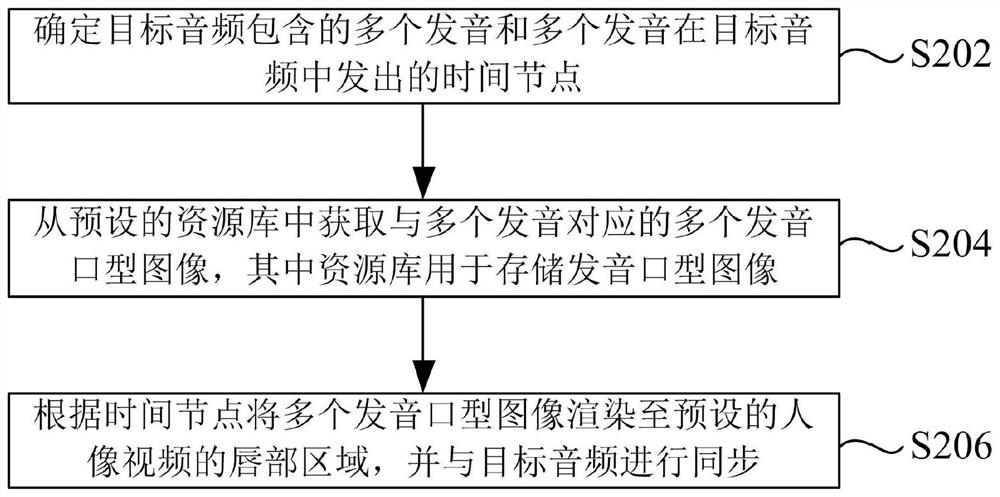

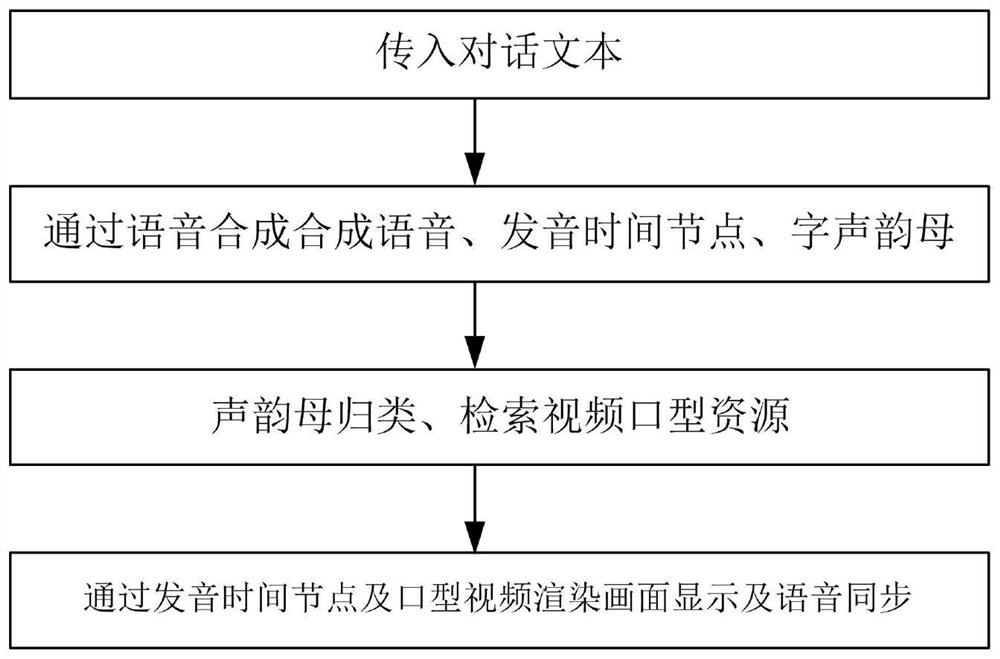

[0021] This embodiment also provides a method for synchronizing a portrait's lip movements with audio. Note that the steps shown in the flowcharts of the accompanying drawings may be executed in a computer system, for example as a set of computer-executable instructions. Although a logical order is shown in the flowcharts, in some cases the steps shown or described may be performed in a different order.

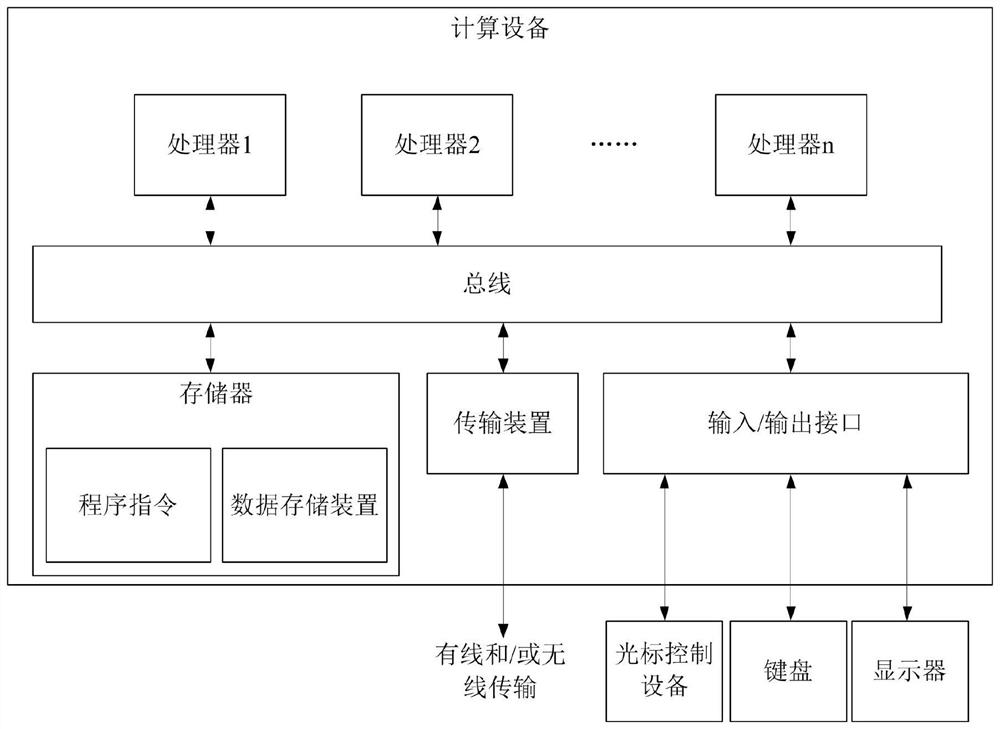

[0022] The method embodiments provided in this embodiment may be executed on a server or a similar computing device. Figure 1 shows a block diagram of the hardware structure of a computing device for implementing the method for synchronizing a portrait's mouth with audio. As shown in Figure 1, the computing device may include one or more processors (which may include, but are not limited to, processing devices such as a microprocessor (MCU) or a programmable logic device such as an FPGA), memory for storing data, and memory for com...

Embodiment 2

[0065] Figure 5 shows an apparatus 500 for synchronizing a portrait's lips with audio according to this embodiment; the apparatus 500 corresponds to the method according to the first aspect of Embodiment 1. Referring to Figure 5, the apparatus 500 includes: a pronunciation determining module 510, configured to determine the multiple pronunciations contained in the target audio and the time nodes at which those pronunciations are emitted in the target audio; a mouth-shape image determining module 520, configured to acquire, from a preset resource library, multiple pronunciation mouth-shape images corresponding to the multiple pronunciations, where the resource library stores pronunciation mouth-shape images; and a synchronous rendering module 530, configured to render the multiple pronunciation mouth-shape images onto the lip region of a preset portrait video according to the time nodes, in synchronization with the target audio.
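The division of work among modules 510, 520 and 530 can be illustrated with a small pipeline. This is a minimal sketch under stated assumptions, not the patent's implementation: the pronunciations and their time nodes are assumed to come from an upstream speech-alignment step that is not shown, the resource library is modelled as a plain dict from pronunciation label to image file name, and "rendering according to the time nodes" is reduced to choosing, for each video frame, the image of the most recent pronunciation. All function names, the file names, the `"rest"` fallback entry and the 25 fps frame rate are hypothetical.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import Dict, List, Optional, Sequence, Tuple


@dataclass(frozen=True)
class Pronunciation:
    label: str    # pronunciation unit, e.g. a phoneme (assumed granularity)
    start: float  # time node in seconds at which it is emitted in the target audio


def determine_pronunciations(aligned: Sequence[Tuple[str, float]]) -> List[Pronunciation]:
    """Role of module 510 (hypothetical stand-in): takes (label, start) pairs from an
    upstream aligner, not shown here, and returns them sorted by time node."""
    prons = [Pronunciation(label, float(start)) for label, start in aligned]
    return sorted(prons, key=lambda p: p.start)


def fetch_mouth_images(prons: Sequence[Pronunciation], library: Dict[str, str],
                       fallback: str = "rest") -> List[str]:
    """Role of module 520: look up one mouth-shape image per pronunciation in the
    resource library; labels missing from the library use the fallback entry."""
    return [library[p.label] if p.label in library else library[fallback] for p in prons]


def schedule_frames(prons: Sequence[Pronunciation], images: Sequence[str], duration: float,
                    fps: int = 25, rest_image: Optional[str] = None) -> List[Optional[str]]:
    """Timing part of module 530: for every video frame, choose the image of the latest
    pronunciation whose time node is <= the frame timestamp (rest_image before the first)."""
    starts = [p.start for p in prons]  # assumed sorted, as determine_pronunciations returns
    schedule = []
    for i in range(int(round(duration * fps))):
        k = bisect_right(starts, i / fps) - 1
        schedule.append(images[k] if k >= 0 else rest_image)
    return schedule


if __name__ == "__main__":
    library = {"m": "mouth_m.png", "a": "mouth_a.png", "o": "mouth_o.png", "rest": "mouth_rest.png"}
    prons = determine_pronunciations([("m", 0.0), ("a", 0.08), ("o", 0.2)])
    images = fetch_mouth_images(prons, library)
    print(schedule_frames(prons, images, duration=0.24, fps=25, rest_image=library["rest"]))
    # prints ['mouth_m.png', 'mouth_m.png', 'mouth_a.png', 'mouth_a.png', 'mouth_a.png', 'mouth_o.png']
```

Using `bisect_right` keeps each mouth shape on screen until the next time node, and a pronunciation that starts exactly on a frame timestamp is shown from that frame onward. The sketch does not interpolate between mouth shapes.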

[0066] Optionally, the mouth shape image determinati...

Embodiment 3

[0073] Figure 6 shows an apparatus 600 for synchronizing a portrait's mouth shape with audio according to this embodiment; the apparatus 600 corresponds to the method according to the first aspect of Embodiment 1. Referring to Figure 6, the apparatus 600 includes: a processor 610; and a memory 620, connected to the processor 610 and configured to provide the processor 610 with instructions for the following processing steps: determining the multiple pronunciations contained in the target audio and the time nodes at which they are emitted in the target audio; acquiring, from a preset resource library that stores pronunciation lip images, multiple pronunciation lip images corresponding to the multiple pronunciations; and rendering the multiple pronunciation lip images onto the lip region of a preset portrait video according to the time nodes, in synchronization with the target audio.
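The final processing step, rendering a lip image onto the lip region of each video frame, can be sketched as a per-frame paste. This is a toy illustration under loud assumptions, not the patent's rendering method: frames and mouth images are small grayscale grids (lists of lists of ints), the lip region is a fixed, hypothetical top-left corner rather than one found by face or landmark detection, and there is no scaling, warping or edge blending. `schedule[i]` is assumed to name the mouth image already chosen for frame i from the time nodes. `LipRegion`, `render_mouth` and `render_video` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Sequence

Image = List[List[int]]  # toy grayscale image: rows of pixel values (assumption)


@dataclass(frozen=True)
class LipRegion:
    top: int   # row of the lip region's top-left corner in the portrait frame (hypothetical)
    left: int  # column of the lip region's top-left corner


def render_mouth(frame: Image, mouth: Image, region: LipRegion,
                 transparent: Optional[int] = None) -> Image:
    """Return a copy of `frame` with `mouth` pasted at `region`; mouth pixels equal to
    `transparent` are skipped so the original frame pixel shows through."""
    h, w = len(mouth), len(mouth[0])
    if (region.top < 0 or region.left < 0
            or region.top + h > len(frame) or region.left + w > len(frame[0])):
        raise ValueError("mouth image does not fit in the frame at this lip region")
    out = [row[:] for row in frame]  # copy so the preset video frame is left untouched
    for y in range(h):
        for x in range(w):
            if transparent is None or mouth[y][x] != transparent:
                out[region.top + y][region.left + x] = mouth[y][x]
    return out


def render_video(frames: Sequence[Image], schedule: Sequence[Optional[str]],
                 library: Dict[str, Image], region: LipRegion,
                 transparent: Optional[int] = None) -> List[Image]:
    """Render frame by frame; schedule[i] is the library key of the mouth image for
    frame i, or None to copy frame i unchanged."""
    return [render_mouth(f, library[key], region, transparent) if key is not None
            else [row[:] for row in f]
            for f, key in zip(frames, schedule)]


if __name__ == "__main__":
    frame = [[0] * 4 for _ in range(4)]
    mouth_o = [[9, 9], [9, -1]]  # -1 marks a transparent pixel in this toy example
    for row in render_mouth(frame, mouth_o, LipRegion(top=2, left=1), transparent=-1):
        print(row)
    # prints four rows: [0, 0, 0, 0], [0, 0, 0, 0], [0, 9, 9, 0], [0, 9, 0, 0]
```

Because only the lip region changes, the rest of the preset portrait video is reused as-is. This is consistent with the stated aim of lowering production difficulty and cost, although the source does not describe its actual compositing method.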

[0074] Optionally, acquiring the multiple pronunciation mouth-shape images corresponding to the multiple pronunciations from the preset resour...
