Text abstract generation method based on input sharing

A text summarization technology in the field of text summary generation based on input sharing, which improves training speed and model performance while reducing memory usage.


Examples

Embodiment 1

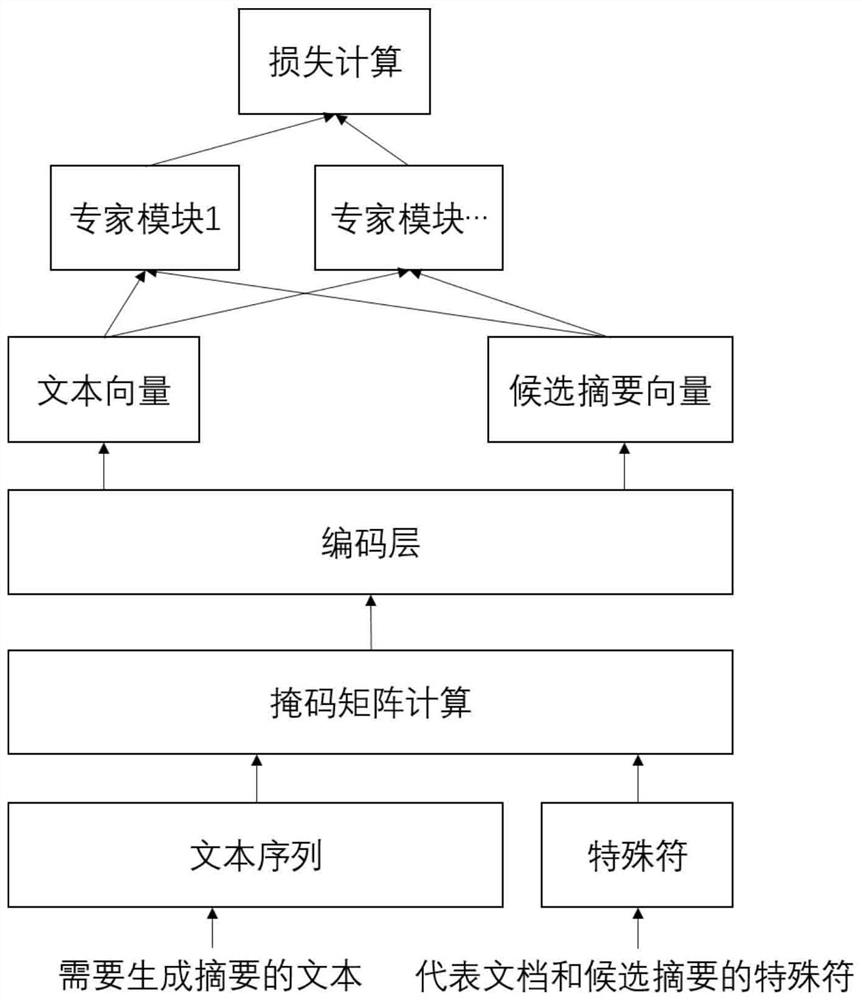

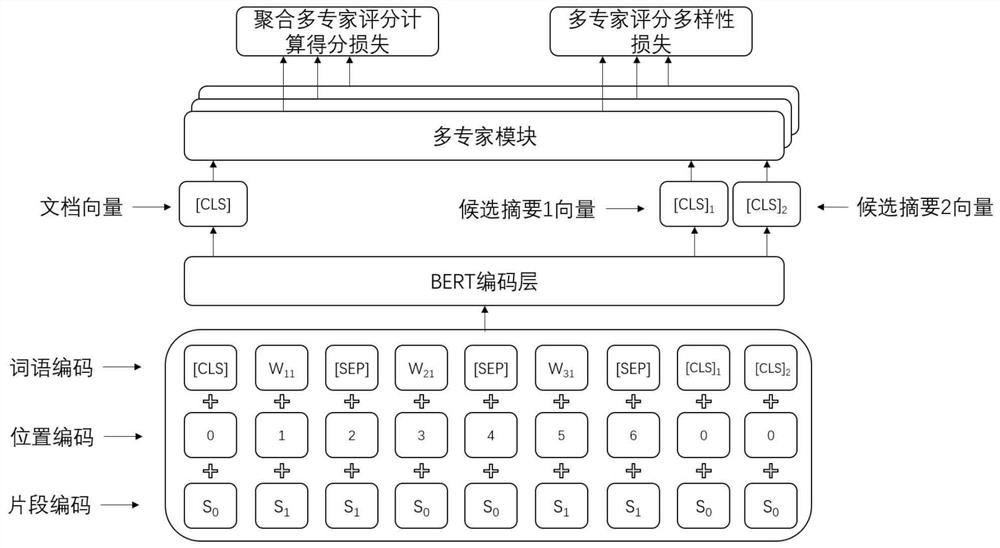

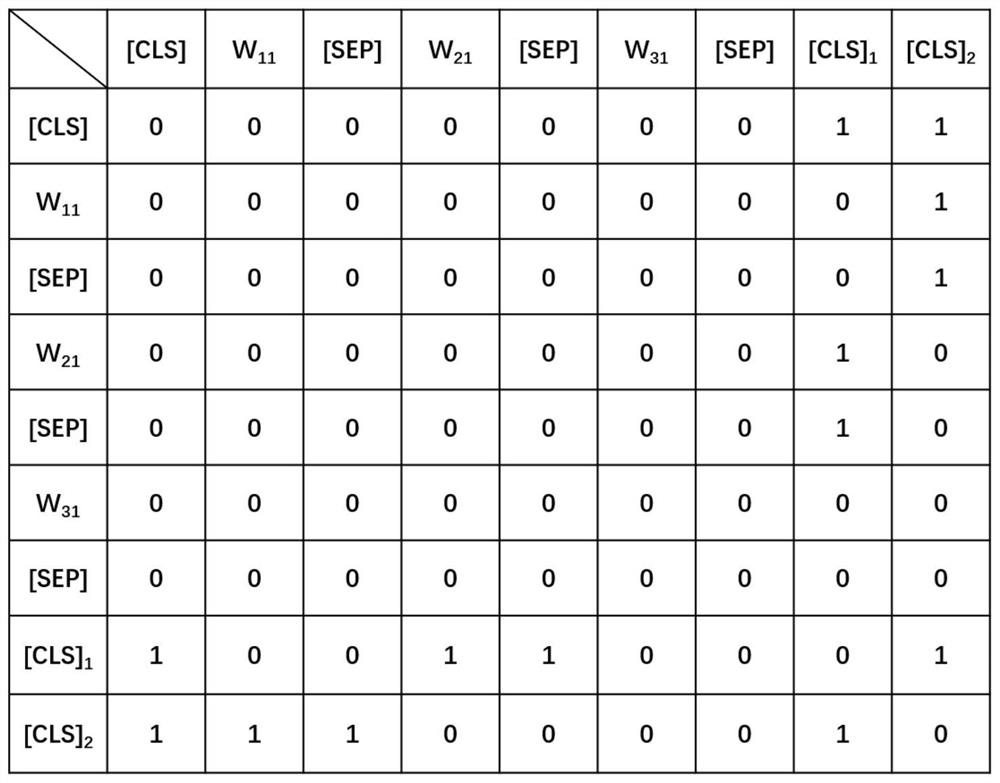

[0063] As shown in Figure 1, a text summarization method based on input sharing comprises the following steps:

[0064] S1. Use a sentence-level extractive summarization algorithm to score the sentences of each text, combine the selected sentences into multiple candidate summary texts, and build a candidate summary data set, as follows:

[0065] Obtain multiple texts and process each one with an open-source sentence-level extractive summarization algorithm; in this embodiment, BertSumExt (Text Summarization with Pretrained Encoders) is used. For each text, select up to 10 of its highest-scoring sentences, then combine the selected sentences in groups of 2 or 3 to obtain the T candidate summary texts corresponding to that text;
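The candidate-generation step can be sketched in Python as below. This is a minimal sketch, assuming the per-sentence scores from the extractive model are already available as a list of numbers and that every 2-sentence and every 3-sentence combination of the selected sentences is kept. The function name `build_candidates`, its parameters, and the choice to keep sentences in their original document order within each candidate are hypothetical, not taken from the patent.

```python
from itertools import combinations
from typing import List, Sequence


def build_candidates(
    sentences: Sequence[str],
    sentence_scores: Sequence[float],
    top_k: int = 10,
    group_sizes: Sequence[int] = (2, 3),
) -> List[str]:
    """Combine the top-scoring sentences into candidate summaries.

    Hypothetical helper: `sentence_scores` stands in for the per-sentence
    scores an extractive model such as BertSumExt would produce.
    """
    # Rank sentence indices by score, highest first, and keep at most top_k.
    ranked = sorted(range(len(sentences)), key=lambda i: sentence_scores[i], reverse=True)
    # Assumption: restore original document order before combining.
    selected = sorted(ranked[:top_k])
    candidates: List[str] = []
    for size in group_sizes:
        # Every combination of `size` selected sentences becomes one candidate.
        for combo in combinations(selected, size):
            candidates.append(" ".join(sentences[i] for i in combo))
    return candidates


# Usage: 4 sentences, keep the top 3 -> 3 pairs + 1 triple = 4 candidates.
print(build_candidates(["A", "B", "C", "D"], [0.9, 0.1, 0.5, 0.7], top_k=3))
```

Keeping the combination logic separate from the scoring model means the same routine serves any extractor that outputs one score per sentence.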

[0066] Obtain the real scores of the T candidate summary texts corresponding to each text, and build a candidate summary data set including the original...

Embodiment 2

[0111] This embodiment differs from Embodiment 1 in that, in step S1, DiscoBert (Discourse-Aware Neural Extractive Text Summarization) is used to process each text and select its 10 highest-ranked sentences; every 2 or every 3 of the selected sentences are then combined to obtain the T candidate summary texts corresponding to the text;
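As a worked example of the candidate count, assume exactly 10 sentences are selected and every 2-sentence and every 3-sentence combination is kept (the patent does not state T explicitly). Then the number of candidates per text is:

$$
T = \binom{10}{2} + \binom{10}{3} = 45 + 120 = 165
$$

Under the same assumption, a text that yields only $n < 10$ sentences gives $T = \binom{n}{2} + \binom{n}{3}$, so shorter texts produce fewer candidates.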

[0112] Obtain the real scores of the T candidate summary texts corresponding to each text, and build a candidate summary data set that contains, for each original text, the text itself, its T candidate summary texts, and the real scores of those T candidates.
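One entry of the candidate summary data set could be represented as below. This is an illustrative sketch: the class `CandidateRecord`, the helper `build_record`, and the `score_fn` callback are hypothetical names, and `score_fn` stands in for whatever procedure produces the real scores.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class CandidateRecord:
    """One data set entry (hypothetical field names)."""
    original_text: str
    candidates: List[str]
    scores: List[float] = field(default_factory=list)


def build_record(
    original_text: str,
    candidates: List[str],
    score_fn: Callable[[str], float],
) -> CandidateRecord:
    # score_fn is a placeholder for the real-score computation,
    # e.g. a ROUGE-based comparison against the reference summary.
    scores = [score_fn(c) for c in candidates]
    return CandidateRecord(original_text, candidates, scores)


# Usage with a toy scorer that counts words in each candidate.
rec = build_record("doc", ["a b", "c"], lambda c: float(len(c.split())))
print(rec.scores)
```

Storing scores alongside candidates keeps index i of `scores` aligned with index i of `candidates`, which is what a downstream ranking model would need.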

[0113] Obtain the reference summary corresponding to the text, compare each candidate summary text with the reference summary, calculate its ROUGE-1, ROUGE-2 and ROUGE-L scores, and take the average of the three as the real s...

Embodiment 3

[0116] This embodiment differs from Embodiment 1 in that, in step S1, Hetformer (Hetformer: Heterogeneous transformer with sparse attention for long-text extractive summarization) is used to process each text and select up to 10 of its highest-ranked sentences; every 2 or every 3 of the selected sentences are then combined to obtain the T candidate summary texts corresponding to the text;
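Because Embodiments 1 to 3 differ only in the extractive model used in step S1 (BertSumExt, DiscoBert or Hetformer), the model can be treated as a swappable component. The sketch below assumes a hypothetical `SentenceScorer` interface; `LengthScorer` is a toy placeholder that scores sentences by word count and is not a wrapper around any of the three published models.

```python
from typing import List, Protocol, Sequence


class SentenceScorer(Protocol):
    """Hypothetical interface for any extractive model that scores sentences."""

    def score(self, sentences: Sequence[str]) -> List[float]:
        ...


class LengthScorer:
    """Toy stand-in for a real extractor: longer sentence -> higher score."""

    def score(self, sentences: Sequence[str]) -> List[float]:
        return [float(len(s.split())) for s in sentences]


def top_sentences(scorer: SentenceScorer, sentences: Sequence[str], k: int = 10) -> List[str]:
    # Select the k highest-scoring sentences, returned in document order.
    scores = scorer.score(sentences)
    ranked = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)[:k]
    return [sentences[i] for i in sorted(ranked)]


print(top_sentences(LengthScorer(), ["a", "a b c", "a b"], k=2))
```

Swapping embodiments would then mean passing a different scorer object, while the candidate combination and scoring steps stay unchanged.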

[0117] Obtain the real scores of the T candidate summary texts corresponding to each text, and build a candidate summary data set that contains, for each original text, the text itself, its T candidate summary texts, and the real scores of those T candidates.

[0118] Obtain the reference summary corresponding to the text, compare each candidate summary text with the reference summary, calculate its ROUGE-1, ROUGE-2 and ROUGE-L scores, and calculate the average...
