Multi-modal dense video event description algorithm based on an interactive Transformer

This event description and multi-modal technology belongs to the field of video algorithms. It addresses problems such as the difficulty of parallelizing training over samples, and it achieves good semantic descriptions, good video segmentation and improved scores on the relevant evaluation metrics.


Embodiment Construction

[0021] In order to make the technical means, creative features, goals and effects achieved by the present invention easy to understand, the present invention will be further described below in conjunction with specific embodiments.

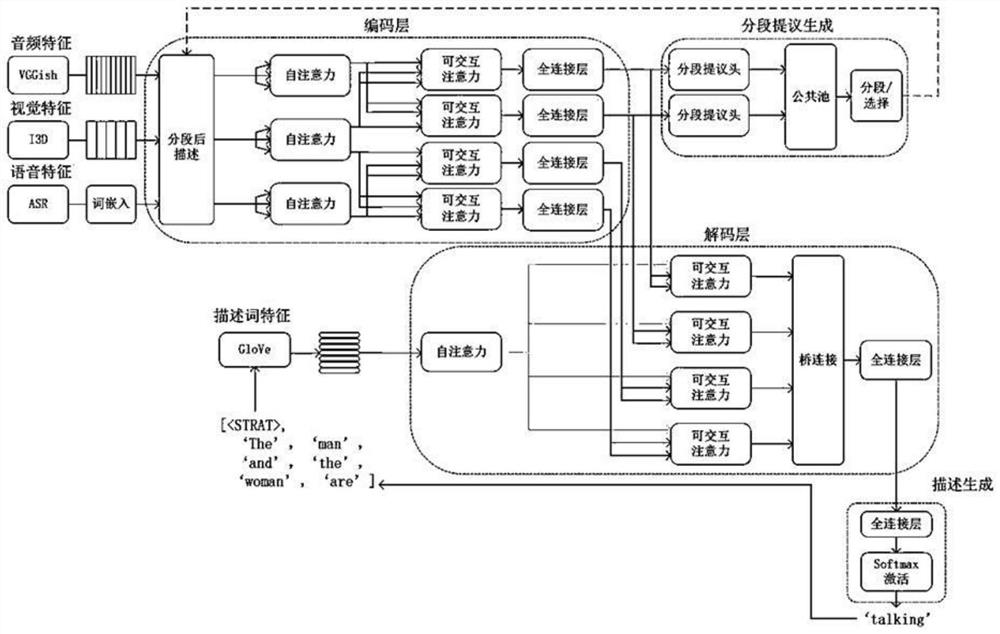

[0022] As shown in Figure 1, the main process of the multi-modal dense video description algorithm based on the interactive Transformer of the present invention is as follows:

[0023] 1. The dense video description task is carried out on the ActivityNet Captions dataset. First, the visual, audio and speech features of the video are extracted with the I3D model, the VGGish model and an ASR (automatic speech recognition) system, respectively. Extracting multi-modal features allows the information in the video to be represented more completely.
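Because the three extractors produce sequences at different temporal resolutions, an event segment given in seconds has to be mapped onto each modality's own sequence before the features can be used together. The following is a minimal sketch of that bookkeeping, not the patent's code. `ModalityFeatures`, `Word` and `words_in_segment` are hypothetical names; the 0.96 s audio stride reflects VGGish's standard 0.96 s input window, while the 0.5 s visual stride is an arbitrary assumption, since the I3D stride depends on how clips are sampled.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class ModalityFeatures:
    """Feature sequence of one modality, one vector per fixed time stride."""
    name: str
    seconds_per_step: float      # stride between consecutive vectors (assumed constant)
    vectors: List[List[float]]   # vectors[i] covers [i * stride, (i + 1) * stride)

    def slice_segment(self, start: float, end: float) -> List[List[float]]:
        """Return the vectors whose time window overlaps [start, end)."""
        first = max(0, math.floor(start / self.seconds_per_step))
        last = math.ceil(end / self.seconds_per_step)
        return self.vectors[first:last]


@dataclass
class Word:
    """One ASR token with start/end timestamps in seconds (hypothetical format)."""
    text: str
    start: float
    end: float


def words_in_segment(words: List[Word], start: float, end: float) -> List[str]:
    """Return the transcribed words whose time span overlaps [start, end)."""
    return [w.text for w in words if w.start < end and w.end > start]


# Usage with made-up data: each vector simply stores its own step index.
visual = ModalityFeatures("visual_i3d", 0.5, [[float(i)] * 4 for i in range(20)])
audio = ModalityFeatures("audio_vggish", 0.96, [[float(i)] * 2 for i in range(11)])
speech = [Word("now", 1.2, 1.5), Word("mix", 2.1, 2.4), Word("slowly", 4.2, 4.8)]

print(len(visual.slice_segment(2.0, 4.0)))   # 4 visual steps (indices 4..7)
print(len(audio.slice_segment(2.0, 4.0)))    # 3 audio steps (indices 2..4)
print(words_in_segment(speech, 2.0, 4.0))    # ['mix']
```

Using overlap rather than strict containment keeps short segments from ending up with no audio vectors when the audio stride is coarser than the segment boundaries.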

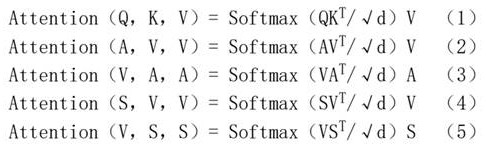

[0024] 2. The extracted features are encoded and decoded with the interactive Transformer.
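This passage does not detail the internals of the interactive Transformer. A common way multi-modal Transformers let modalities interact is cross-modal attention, in which one modality's features act as queries over another modality's features. The sketch illustrates only that idea and makes several assumptions. Both modalities are taken to be already projected to the same width. Learned query/key/value projections, multiple heads, layer normalization and feed-forward sublayers are omitted. `cross_attention` and `interactive_layer` are hypothetical names.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product attention: each query row attends over all key rows."""
    d = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value rows, computed column by column.
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


def interactive_layer(visual: Matrix, audio: Matrix) -> Tuple[Matrix, Matrix]:
    """Hypothetical interaction step: each modality attends to the other,
    and the attended context is added back through a residual connection."""
    v_ctx = cross_attention(visual, audio, audio)
    a_ctx = cross_attention(audio, visual, visual)
    visual_out = [[x + y for x, y in zip(r, c)] for r, c in zip(visual, v_ctx)]
    audio_out = [[x + y for x, y in zip(r, c)] for r, c in zip(audio, a_ctx)]
    return visual_out, audio_out


vis = [[0.5, 0.5], [3.0, -1.0]]   # two visual time steps
aud = [[1.0, 2.0]]                # one audio time step
v_out, a_out = interactive_layer(vis, aud)
print(v_out)  # [[1.5, 2.5], [4.0, 1.0]]: the single audio step receives all attention weight
```

Running attention in both directions lets each modality's representation absorb context from the other. A practical model would stack several such layers with learned weights, for example using a framework's built-in multi-head attention module.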

[0025] 3. The model is trained in two stages. First, the description model is trained on the ground-truth segment proposals, the weight of the trained des...
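In the first training stage, each ground-truth segment and its reference sentence form one captioning sample. The sketch builds those pairs under the assumption that the annotations follow the public ActivityNet Captions JSON layout. In that layout, each video ID maps to a `duration`, a list of `timestamps` given as [start, end] pairs in seconds, and a parallel list of `sentences`. `CaptionSample`, `load_annotations` and `stage1_samples` are hypothetical names, and only the first stage is sketched here.

```python
import json
from typing import Dict, List, NamedTuple


class CaptionSample(NamedTuple):
    video_id: str
    start: float     # segment start in seconds
    end: float       # segment end in seconds
    sentence: str    # reference caption for this segment


def load_annotations(path: str) -> Dict[str, dict]:
    """Read an ActivityNet Captions-style annotation file (assumed layout)."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def stage1_samples(annotations: Dict[str, dict]) -> List[CaptionSample]:
    """Turn every ground-truth (segment, sentence) pair into one training sample."""
    samples = []
    for video_id in sorted(annotations):
        ann = annotations[video_id]
        for (start, end), sentence in zip(ann["timestamps"], ann["sentences"]):
            # Defensive clipping in case an annotated end time exceeds the video length.
            end = min(float(end), float(ann["duration"]))
            if end > start:
                samples.append(CaptionSample(video_id, float(start), end, sentence.strip()))
    return samples


raw = ('{"v_demo": {"duration": 10.0, '
       '"timestamps": [[0.0, 4.5], [3.0, 12.0]], '
       '"sentences": ["A man walks in. ", "He sits down."]}}')
for sample in stage1_samples(json.loads(raw)):
    print(sample)
```

Feeding ground-truth segments at this stage means that caption quality does not depend on proposal quality while the description model is being learned.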
