Semantic segmentation network based on optical and PolSAR feature fusion

A semantic segmentation and feature fusion technology, applied in biological neural network models, neural learning methods, character and pattern recognition, etc. It addresses the problem that optical and PolSAR remote sensing images cannot be effectively fused and jointly applied, with the effects of improving segmentation results and of capturing and restoring spatio-temporal information.


Embodiment Construction

[0041] Specific embodiments of the present invention are described below with reference to the accompanying drawings so that those skilled in the art can better understand the invention. Note that in the following description, detailed descriptions of known functions and designs are omitted where they might obscure the main content of the present invention.

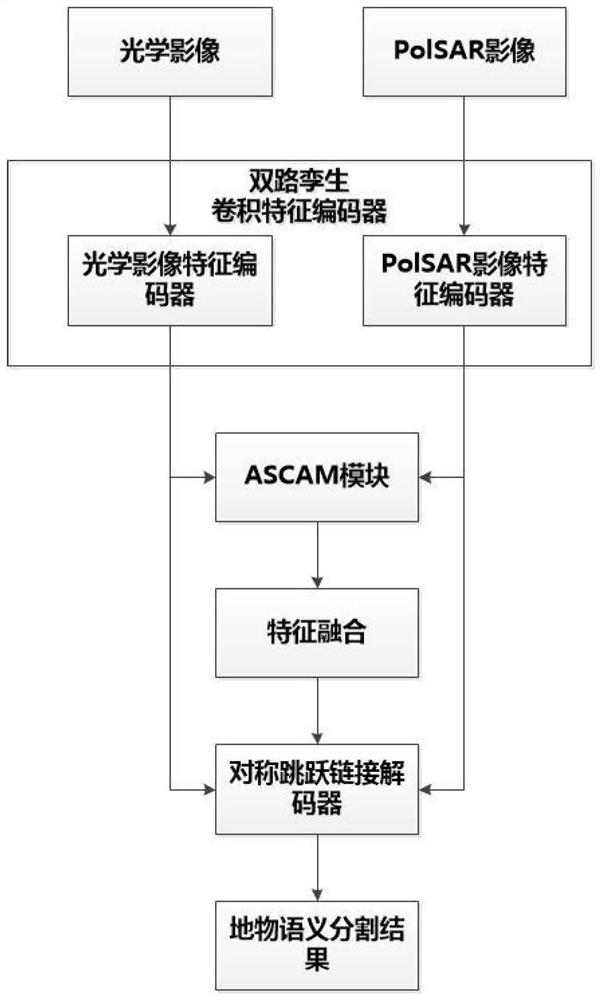

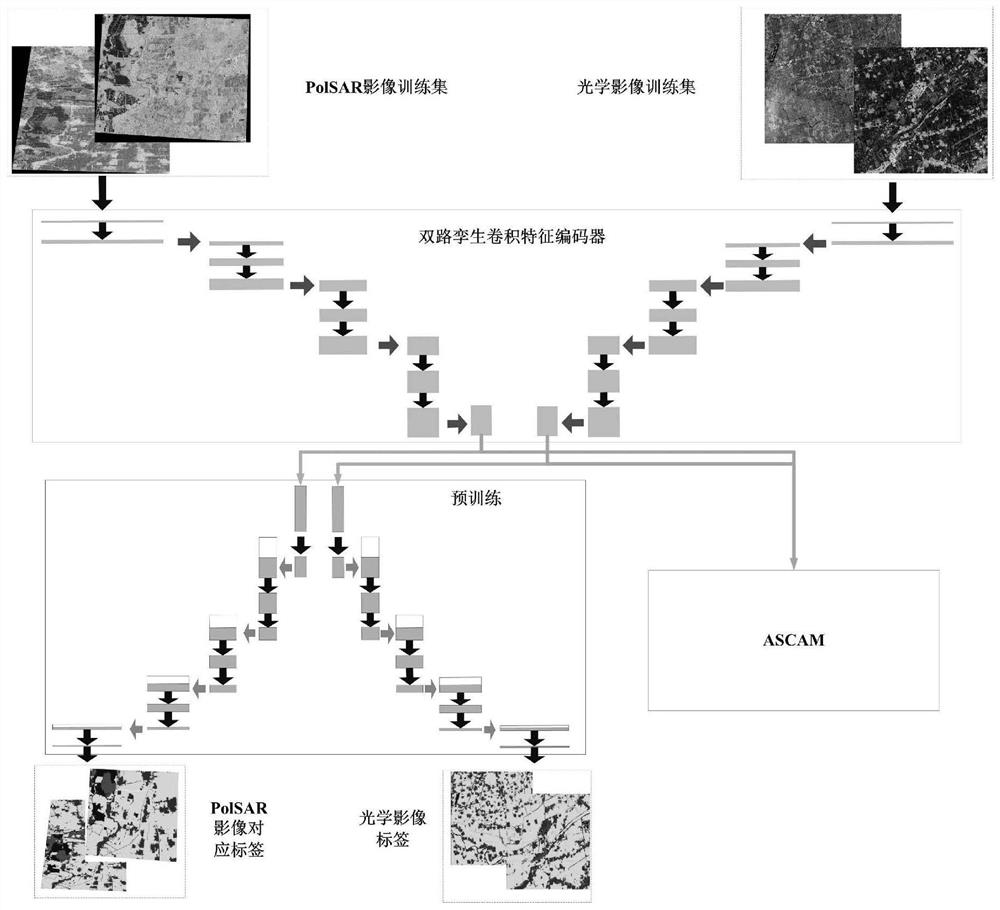

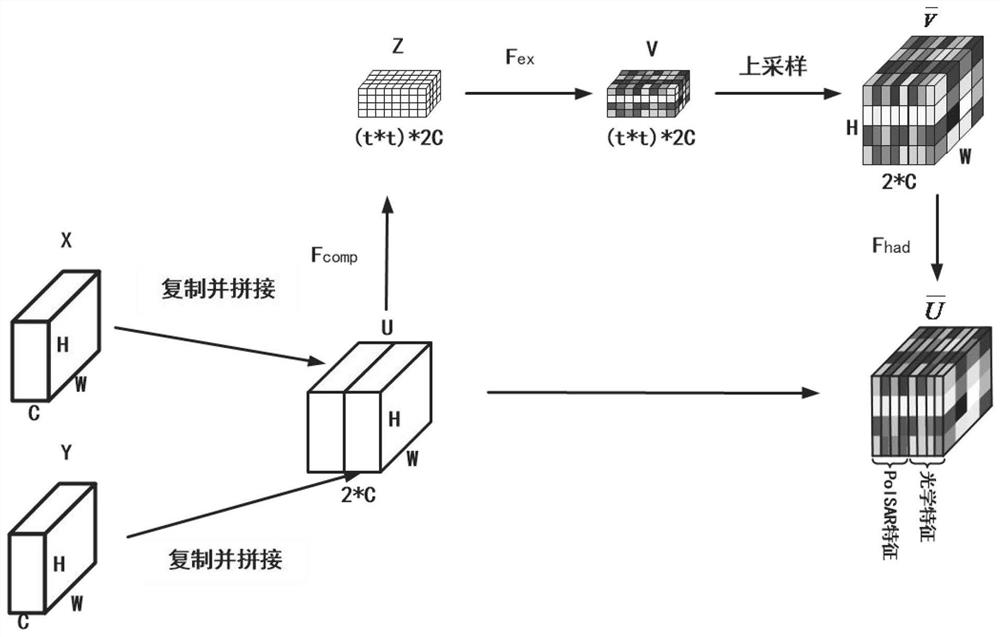

[0042] The present invention designs a new deep learning model, ASCAFNet (Atrous Spatial Channel Attention Fusion Networks), which performs end-to-end object segmentation on fused optical and PolSAR images. ASCAFNet consists of three parts: a two-branch twin (Siamese) convolutional feature encoder, an attention mechanism module ASCAM (Atrous Spatial Channel Attention module), and a symmetric skip-connection decoder. We first designed the two-branch twin convolutional feature encoder and pre-trained each encoder on ImageNet and on a large number of annotated PolSAR and optical images to maximize t...
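The excerpt names the ASCAM module but does not describe its internals. The following minimal NumPy sketch shows one plausible reading of "atrous spatial channel attention" applied to fused encoder outputs. It assumes the following:

- The optical and PolSAR branch features are concatenated along the channel axis.
- Channels are reweighted with a squeeze-and-excitation-style gate.
- A spatial gate is built from parallel dilated (atrous) 3×3 convolutions over channel-wise average and max maps.

The function names, the reduction ratio `r`, the dilation rates (1, 2, 4) and the random weights are hypothetical and only illustrate the data flow. This is not the patented design.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def dilated_conv2d_single(x: np.ndarray, kernel: np.ndarray, dilation: int) -> np.ndarray:
    """'Same'-padded dilated 2-D cross-correlation with one output channel.

    x: (C_in, H, W); kernel: (C_in, k, k) with odd k -> returns (H, W).
    """
    _, h, w = x.shape
    k = kernel.shape[-1]
    pad = dilation * (k - 1) // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros((h, w), dtype=x.dtype)
    for i in range(k):
        for j in range(k):
            # Shifted view of the padded input for kernel tap (i, j), spaced by `dilation`.
            patch = xp[:, i * dilation : i * dilation + h, j * dilation : j * dilation + w]
            out += np.tensordot(kernel[:, i, j], patch, axes=(0, 0))
    return out


def channel_attention(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """SE-style gate (assumed): global average pool -> FC -> ReLU -> FC -> sigmoid."""
    z = x.mean(axis=(1, 2))                      # squeeze: (C,)
    s = sigmoid(w2 @ np.maximum(w1 @ z, 0.0))    # excitation: (C,), values in (0, 1)
    return x * s[:, None, None]


def atrous_spatial_attention(x: np.ndarray, kernels, dilations) -> np.ndarray:
    """Spatial gate (assumed) from parallel dilated convs with different receptive fields."""
    desc = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W) channel descriptor
    logits = sum(dilated_conv2d_single(desc, k, d) for k, d in zip(kernels, dilations))
    return x * sigmoid(logits)[None, :, :]


def fuse_optical_polsar(f_opt: np.ndarray, f_sar: np.ndarray, params: dict) -> np.ndarray:
    """Hypothetical ASCAM-like fusion of the two encoder branches' feature maps."""
    x = np.concatenate([f_opt, f_sar], axis=0)   # (2C, H, W)
    x = channel_attention(x, params["w1"], params["w2"])
    return atrous_spatial_attention(x, params["spatial_kernels"], params["dilations"])


# Usage with random stand-ins for the two encoders' outputs.
rng = np.random.default_rng(0)
C, H, W, r = 8, 16, 16, 4
f_opt = rng.standard_normal((C, H, W))
f_sar = rng.standard_normal((C, H, W))
params = {
    "w1": rng.standard_normal((2 * C // r, 2 * C)) * 0.1,
    "w2": rng.standard_normal((2 * C, 2 * C // r)) * 0.1,
    "spatial_kernels": [rng.standard_normal((2, 3, 3)) * 0.1 for _ in range(3)],
    "dilations": (1, 2, 4),
}
fused = fuse_optical_polsar(f_opt, f_sar, params)
print(fused.shape)  # (16, 16, 16)
```

Both gates are sigmoids, so each fused activation is a damped copy of its input. The different dilation rates let the spatial gate see context at several scales without downsampling. In a full network, this fused map would feed the symmetric skip-connection decoder.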

