Fully automatic high-resolution image matting method

A fully automatic high-resolution image matting technology in the field of image processing. It addresses problems such as strong dependence on prior knowledge, inability to extract semantic features, and insufficient segmentation accuracy and robustness. It provides multiple degrees of freedom, reduces the amount of computation, and captures fine features.


Embodiment Construction

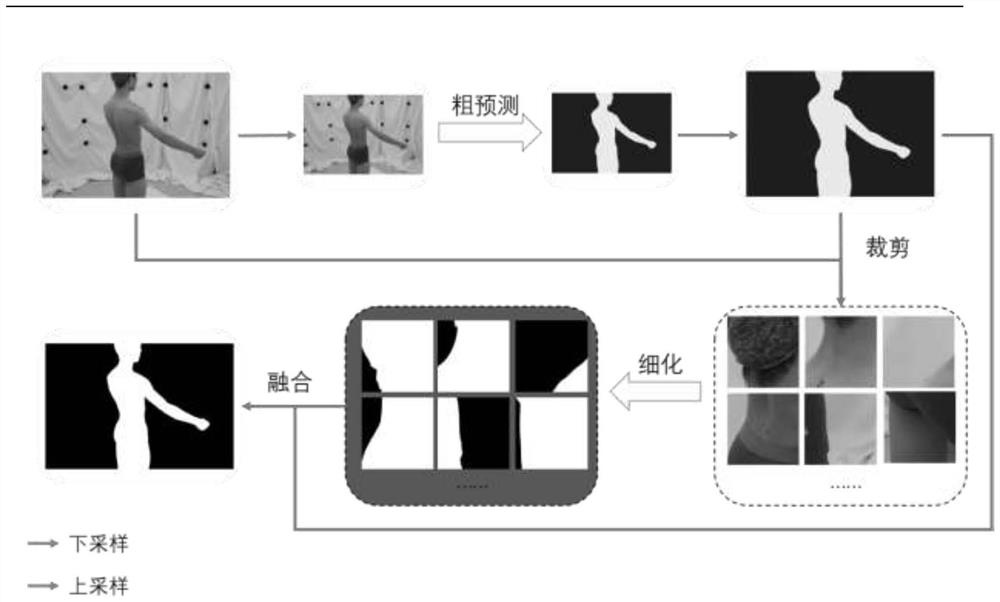

[0034] The invention provides a fully automatic high-resolution image matting method. As shown in Figure 1, the specific steps are:

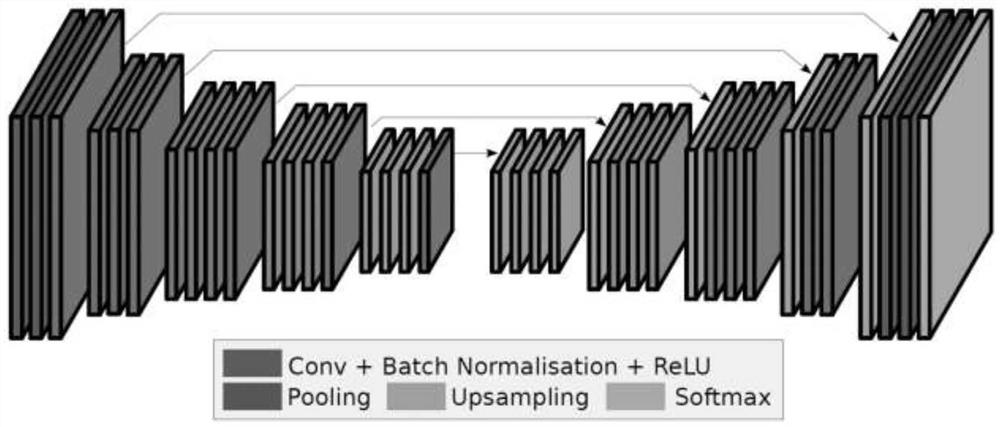

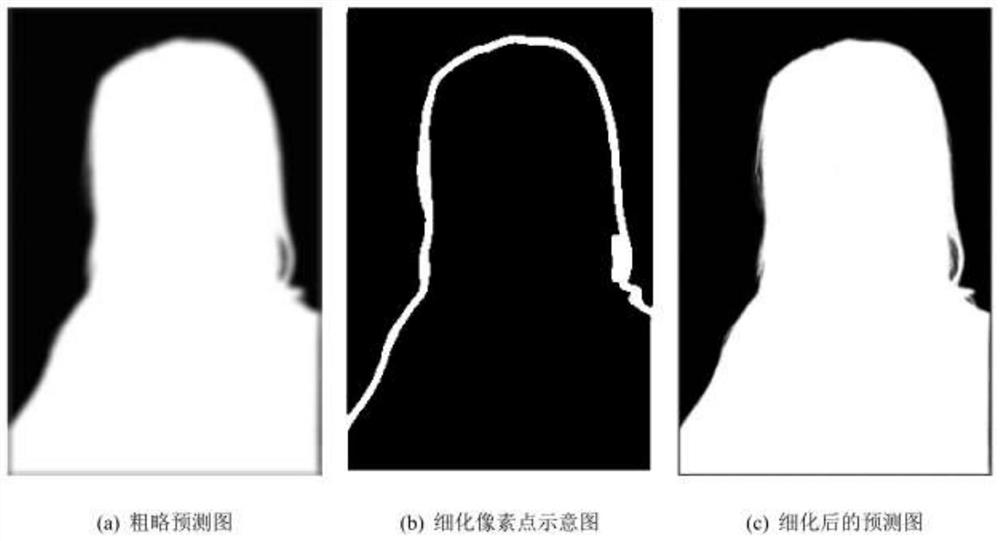

[0035] S1: Obtain the original image and down-sample it to obtain a process image. Input the process image into the encoder-decoder network to obtain a segmented image, which completes the coarse prediction. Then upsample the segmented image by bilinear interpolation to obtain a coarse-grained segmented image with the same resolution as the original image. As shown in Figure 2, the encoder-decoder network includes multiple encoders and decoders connected one after another;
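Step S1 can be sketched as a down-sample, predict, upsample pipeline. This is a minimal illustration under stated assumptions, not the patented implementation: block averaging by an integer factor stands in for the unspecified down-sampling method, bilinear interpolation uses half-pixel centres, and `placeholder_segment` is a hypothetical stand-in for the encoder-decoder network (it simply averages the colour channels). Only the resolution handling reflects the text.

```python
import numpy as np


def downsample(image: np.ndarray, factor: int) -> np.ndarray:
    """Block-average down-sampling by an integer factor (assumed method).

    Works for H x W or H x W x C arrays; edge rows/columns that do not
    fill a whole block are cropped.
    """
    h, w = image.shape[0] // factor, image.shape[1] // factor
    cropped = image[: h * factor, : w * factor]
    blocks = cropped.reshape(h, factor, w, factor, *image.shape[2:])
    return blocks.mean(axis=(1, 3))


def bilinear_resize(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Bilinear interpolation of a 2-D map to (out_h, out_w), half-pixel centres."""
    in_h, in_w = img.shape
    # Map each output pixel centre back to input coordinates, clamped to the image.
    ys = np.clip((np.arange(out_h) + 0.5) * in_h / out_h - 0.5, 0, in_h - 1)
    xs = np.clip((np.arange(out_w) + 0.5) * in_w / out_w - 0.5, 0, in_w - 1)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, in_h - 1), np.minimum(x0 + 1, in_w - 1)
    wy, wx = (ys - y0)[:, None], (xs - x0)[None, :]
    # Interpolate along x on the two neighbouring rows, then along y.
    top = img[y0][:, x0] * (1 - wx) + img[y0][:, x1] * wx
    bottom = img[y1][:, x0] * (1 - wx) + img[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy


def placeholder_segment(process: np.ndarray) -> np.ndarray:
    """HYPOTHETICAL stand-in for the encoder-decoder network.

    Returns a per-pixel value in [0, 1] for inputs in [0, 1]; a real model
    would predict foreground probability / alpha here.
    """
    return process.mean(axis=-1)


def coarse_prediction(original: np.ndarray, factor: int,
                      segment_fn=placeholder_segment) -> np.ndarray:
    """S1 sketch: down-sample, predict at low resolution, upsample back."""
    height, width = original.shape[:2]
    process = downsample(original, factor)          # process image
    segmented = segment_fn(process)                 # low-resolution prediction
    return bilinear_resize(segmented, height, width)  # coarse-grained map


rng = np.random.default_rng(0)
original = rng.random((64, 48, 3))   # toy RGB image with values in [0, 1]
coarse = coarse_prediction(original, factor=4)
print(coarse.shape)  # (64, 48)
```

Running the network on the smaller process image is where the computation saving comes from; only the cheap interpolation step touches every full-resolution pixel.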

[0036] S2: To reduce the amount of computation, set a pixel transparency interval $[\alpha_1, \alpha_2]$ and refine only the pixels whose transparency lies within this interval. Pixels with transparency less than $\alpha_1$ are treated as background, and pixels with transparency greater than $\alpha_2$ are treated as foreground. The larger the coverage of the transparency interval, the ...
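The interval rule in S2 amounts to a three-way partition of the coarse alpha map. The sketch below assumes that boundary values ($\alpha = \alpha_1$ or $\alpha = \alpha_2$) fall inside the interval, since the text only assigns strictly smaller or larger values to background and foreground. `refine_fn` is a hypothetical placeholder for whatever refinement the method applies; here it only receives the in-interval pixels, which is the source of the computation saving.

```python
import numpy as np


def split_by_interval(alpha: np.ndarray, a1: float, a2: float):
    """Partition a coarse alpha map using the interval [a1, a2]."""
    if not 0.0 <= a1 < a2 <= 1.0:
        raise ValueError("expected 0 <= a1 < a2 <= 1")
    # alpha > a2 -> foreground (1.0); everything else starts at 0.0
    # (background, or a placeholder for pixels that will be refined).
    fixed = np.where(alpha > a2, 1.0, 0.0)
    # Pixels inside the closed interval are the only ones refined.
    unknown = (alpha >= a1) & (alpha <= a2)
    return fixed, unknown


def refine_in_interval(alpha: np.ndarray, a1: float, a2: float, refine_fn):
    """Apply a (hypothetical) refinement only to in-interval pixels.

    Returns the refined alpha map and the fraction of pixels that were refined.
    """
    fixed, unknown = split_by_interval(alpha, a1, a2)
    out = fixed.copy()
    out[unknown] = refine_fn(alpha[unknown])
    return out, unknown.mean()


coarse = np.array([[0.05, 0.30],
                   [0.70, 0.95]])
# Identity "refinement" just keeps the coarse values for in-interval pixels.
refined, fraction = refine_in_interval(coarse, 0.1, 0.9, refine_fn=lambda v: v)
print(refined)   # [[0.  0.3] [0.7 1. ]]
print(fraction)  # 0.5
```

The returned fraction makes the trade-off in the choice of $[\alpha_1, \alpha_2]$ visible: it is the share of pixels handed to the refinement stage.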

© 2025 PatSnap. All rights reserved.