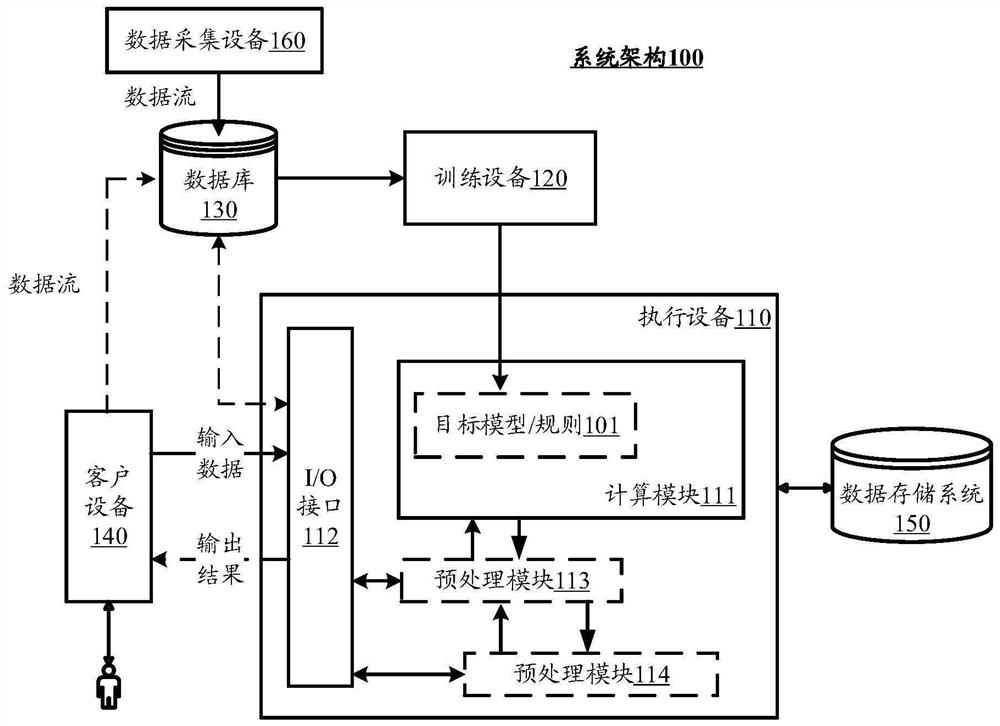

Image fusion method, and training method and device of image fusion model

An image fusion method and image fusion model training technology, applied in the field of computer vision, that addresses three problems: the fusion effect cannot be guaranteed, the quality of the output image is degraded, and details of the output image are lost.

Examples

example 1

[0190] Example 1: Obtaining a color image and an infrared image based on a dichroic prism.

[0191] As shown in Figure 6, the dichroic prism includes a prism 6020 and a filter 6030. The dichroic prism splits the incident light received by the lens 6010 into visible light and near-infrared light. The two are imaged by separate sensors, the color sensor 6040 and the near-infrared sensor 6050, so that a color image and an infrared image are obtained simultaneously.

example 2

[0192] Example 2: Obtaining a color image and an infrared image based on time-sharing and frame interpolation.

[0193] As shown in Figure 7, the supplementary light control unit 7030 periodically turns the infrared supplementary light unit 7010 on and off. This controls the type of light transmitted through the lens 7020 to the surface of the sensor 7040, that is, visible light or infrared light, and each is imaged in turn. It should be understood that the infrared image shown in Figure 7 may also be a composite image of an infrared image and a color image. Under low illumination, the color image contributes little information to the composite image, so the composite image can be used as the infrared image in the embodiments of the present application. A frame interpolation algorithm is then used to obtain the color image and the infrared image at the same moment. Frame interpolation refers to obtaining an image of an intermediate frame through image ...
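The time-sharing scheme can be illustrated with a minimal sketch. It assumes, purely for illustration, that even-indexed frames are captured with the infrared supplementary light off (color) and odd-indexed frames with it on (infrared), and it substitutes a plain linear blend of the two neighboring color frames for the frame interpolation algorithm, which the text above does not specify. The names `split_time_shared`, `blend`, and `paired_frames` are hypothetical, and frames are reduced to single-channel lists of pixel rows.

```python
from typing import List, Tuple

Frame = List[List[float]]  # one channel, stored as rows of pixel intensities


def split_time_shared(frames: List[Frame]) -> Tuple[List[Frame], List[Frame]]:
    """Separate an alternating capture sequence into color and infrared frames.

    Assumption: even indices were captured with the fill light off (color),
    odd indices with it on (infrared).
    """
    return frames[0::2], frames[1::2]


def blend(a: Frame, b: Frame, t: float = 0.5) -> Frame:
    """Linearly interpolate two equally sized frames (t=0 gives a, t=1 gives b)."""
    return [[(1 - t) * pa + t * pb for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


def paired_frames(frames: List[Frame]) -> List[Tuple[Frame, Frame]]:
    """Pair each infrared frame with a color frame estimated for the same moment.

    The color frame is estimated as the midpoint of the color frames captured
    just before and just after the infrared frame. This linear blend is a
    stand-in, not the interpolation method of the source.
    """
    color, infrared = split_time_shared(frames)
    pairs = []
    for i, ir in enumerate(infrared):
        if i + 1 < len(color):  # a color frame is needed on both sides
            pairs.append((blend(color[i], color[i + 1]), ir))
    return pairs
```

A linear blend ignores scene motion, so a practical system would more likely use motion-compensated interpolation; the sketch only shows how time-shared frames can be turned into same-moment color and infrared pairs.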

example 3

[0194] Example 3: Obtaining a color image and an infrared image based on an RGB-near-infrared (RGB-NIR) sensor.

[0195] As shown in Figure 8, an RGB-NIR sensor design obtains the color image and the infrared image simultaneously in a single imaging pass.
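One way to picture a single-pass RGB-NIR capture is as a raw mosaic that is separated into per-channel planes. The sketch below assumes a hypothetical 2x2 tile of R, G, NIR, and B pixels; the text above does not state the actual pixel layout of the sensor, and `PATTERN` and `split_rgb_nir` are illustrative names only.

```python
from typing import Dict, List

Mosaic = List[List[int]]  # raw sensor readout, one value per pixel

# Hypothetical 2x2 tile layout; the real sensor pattern is not given in the source.
PATTERN = (("R", "G"), ("NIR", "B"))


def split_rgb_nir(mosaic: Mosaic) -> Dict[str, Mosaic]:
    """Split a raw RGB-NIR mosaic into four half-resolution channel planes."""
    planes: Dict[str, Mosaic] = {name: [] for row in PATTERN for name in row}
    for y in range(0, len(mosaic) - 1, 2):        # step over complete 2x2 tiles
        rows: Dict[str, List[int]] = {name: [] for name in planes}
        for x in range(0, len(mosaic[y]) - 1, 2):
            for dy in range(2):
                for dx in range(2):
                    rows[PATTERN[dy][dx]].append(mosaic[y + dy][x + dx])
        for name in planes:
            planes[name].append(rows[name])
    return planes
```

The R, G, and B planes would then be combined into the color image, and the NIR plane would serve as the infrared image, both taken from the same exposure.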

[0196] The training method of the image fusion model and the image fusion method of the embodiment of the present application will be described in detail below with reference to the accompanying drawings.

[0197] Figure 9 is a schematic diagram of the image fusion device 600 of the embodiment of the present application. To better understand the method in the embodiment of the present application, the functions of each module in Figure 9 are briefly described below.

[0198] Apparatus 600 may be a cloud service device or a terminal device, such as a computer, server, or other device with enough computing power to train a time series forecasting model, or a system composed of a cloud service...
