Deep learning method for lightweight bottleneck attention mechanism

A deep learning and attention technology, applied in neural learning methods, neural architectures, biological neural network models, etc., that can solve problems such as increasing model overhead and reducing attention mechanism...

Examples

Embodiment 1

[0031] This embodiment uses a real blood cell dataset collected from various organs. The dataset contains three categories: white blood cells, red blood cells, and platelets, with 5000 images in total.

[0032] The deep learning method of the lightweight bottleneck attention mechanism includes the following steps:

[0033] T1. Process the dataset: first, split the dataset into a training set of 4000 samples and a test set of 1000 samples.
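The split in step T1 can be sketched as follows. This is a minimal illustration only: the source does not say how samples are assigned to each set, so the shuffled split, the fixed seed, the `split_dataset` helper and the file names are all assumptions.

```python
import random


def split_dataset(items, n_train, n_test, seed=0):
    """Shuffle items and return (train, test) lists of the requested sizes.

    Assumption: a random shuffle with a fixed seed. The source only
    states the sizes of the two sets, not how they are chosen.
    """
    if n_train + n_test != len(items):
        raise ValueError("split sizes must add up to the dataset size")
    shuffled = list(items)  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


# Hypothetical file names standing in for the 5000 blood cell images.
image_paths = [f"cell_{i:04d}.png" for i in range(5000)]
train_set, test_set = split_dataset(image_paths, n_train=4000, n_test=1000)
print(len(train_set), len(test_set))
```

The same helper would cover Embodiment 2 by passing 3680 items with `n_train=3000` and `n_test=680`.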

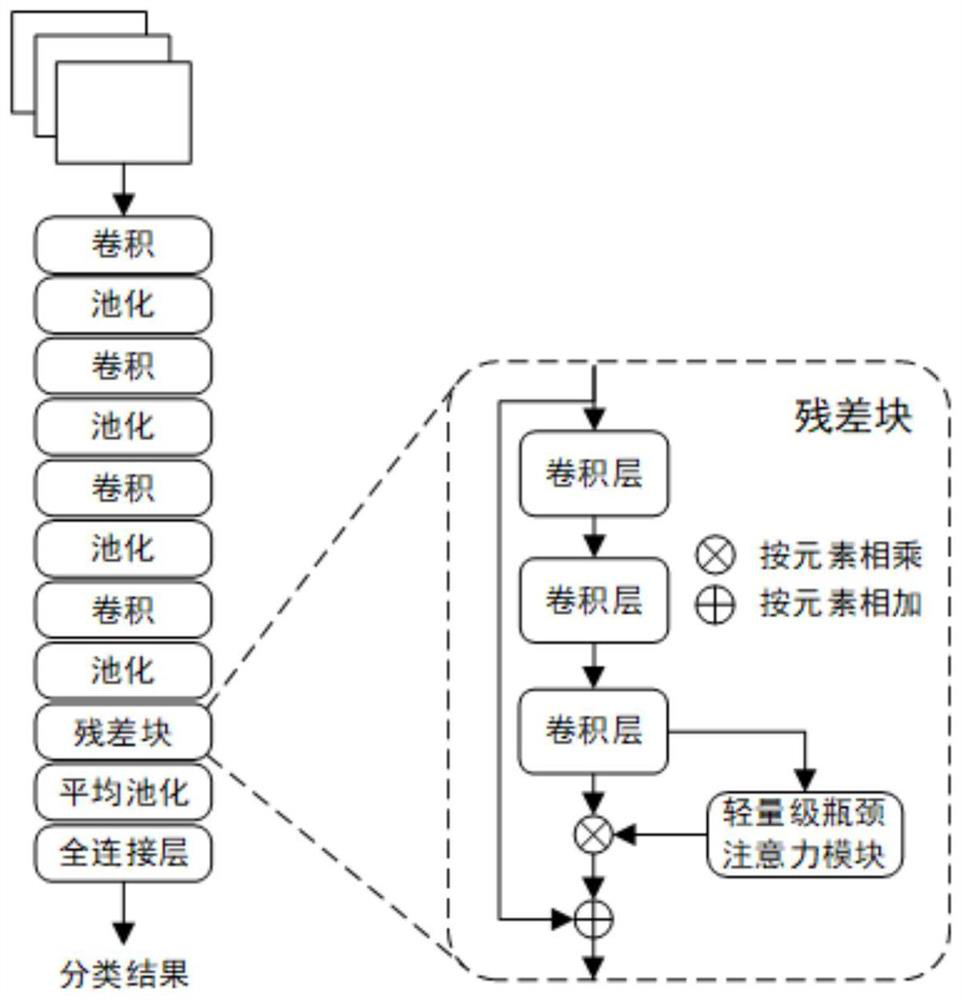

[0034] T2. Construct a convolutional neural network embedded with a lightweight bottleneck attention mechanism. The structure is shown in Figure 2, and the specific structure is as follows:

[0035] An image of size 128×128×3 is input into the network of Figure 2. The network structure includes: 4 convolutional layers, 4 max pooling layers, 1 residual unit layer, 1 average pooling layer and 1 lay...

Embodiment 2

[0047] This embodiment uses a real cat and dog dataset with two categories: cats and dogs. The dataset has 3680 images in total.

[0048] The deep learning method of the lightweight bottleneck attention mechanism includes the following steps:

[0049] T1. Process the dataset: first, split the dataset into a training set of 3000 samples and a test set of 680 samples.

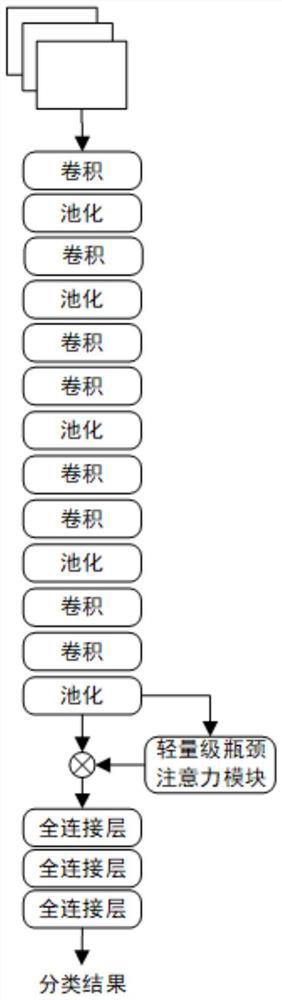

[0050] T2. Construct a VGG network embedded with a lightweight bottleneck attention mechanism. The structure is shown in Figure 3, and the specific structure is as follows:

[0051] T21. The model input is an image of size 224×224×3;

[0052] T22. The input passes through the first convolutional layer, with a kernel size of 3×3, a stride of 1, and 64 kernels, giving an output feature map of 224×224×64;

[0053] T23. The feature map then enters the first pooling layer, where the size of the pooling filter is 2×2, and the ste...
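The feature map size in step T22 can be checked with the standard convolution output formula, out = floor((in + 2·padding − kernel) / stride) + 1. The sketch below is only an illustration: the source does not state the padding or whether the layer has a bias, so `padding=1` and the bias term are assumptions, and `conv_output_size` is a hypothetical helper name.

```python
def conv_output_size(size, kernel, stride, padding):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


# Step T22: 224x224x3 input, 3x3 kernels, stride 1, 64 kernels.
# Assumption: padding of 1 pixel, which keeps the spatial size unchanged.
side = conv_output_size(224, kernel=3, stride=1, padding=1)
out_shape = (side, side, 64)

# Weights: one 3x3x3 kernel per output channel.
weights = 3 * 3 * 3 * 64
# Assumption: one bias per output channel.
params = weights + 64

print(out_shape)  # (224, 224, 64)
print(params)     # 1792
```

With no padding the same formula would give 222 rather than 224, so the 224×224×64 output stated in T22 is consistent with a one-pixel padding.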
