Video retrieval method based on multi-mode and self-supervised representation learning

A multimodal video retrieval technology, applied in the fields of computer technology and image processing, that achieves high accuracy and recall, high information-carrying capacity and robustness, and reduced complexity.


Embodiment Construction

[0022] The method of the present invention will be further described below in conjunction with the accompanying drawings.

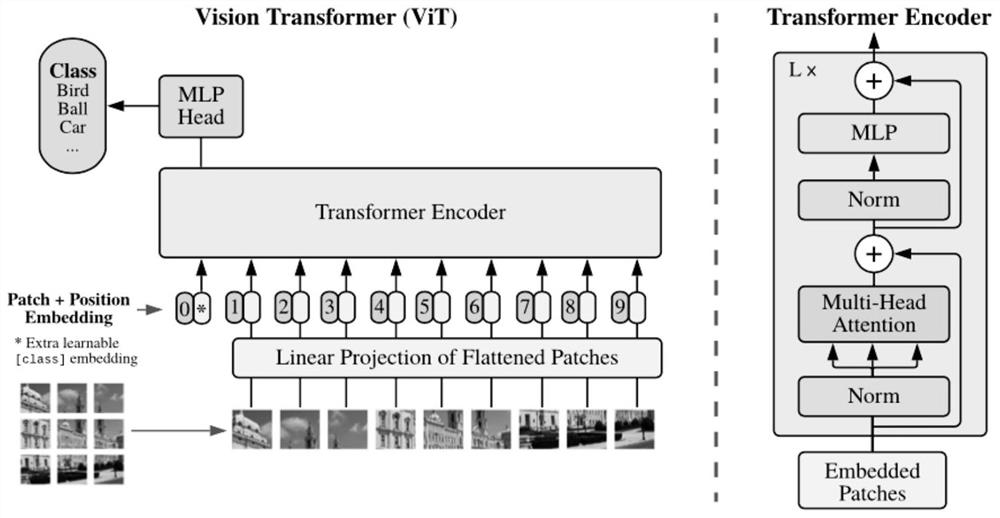

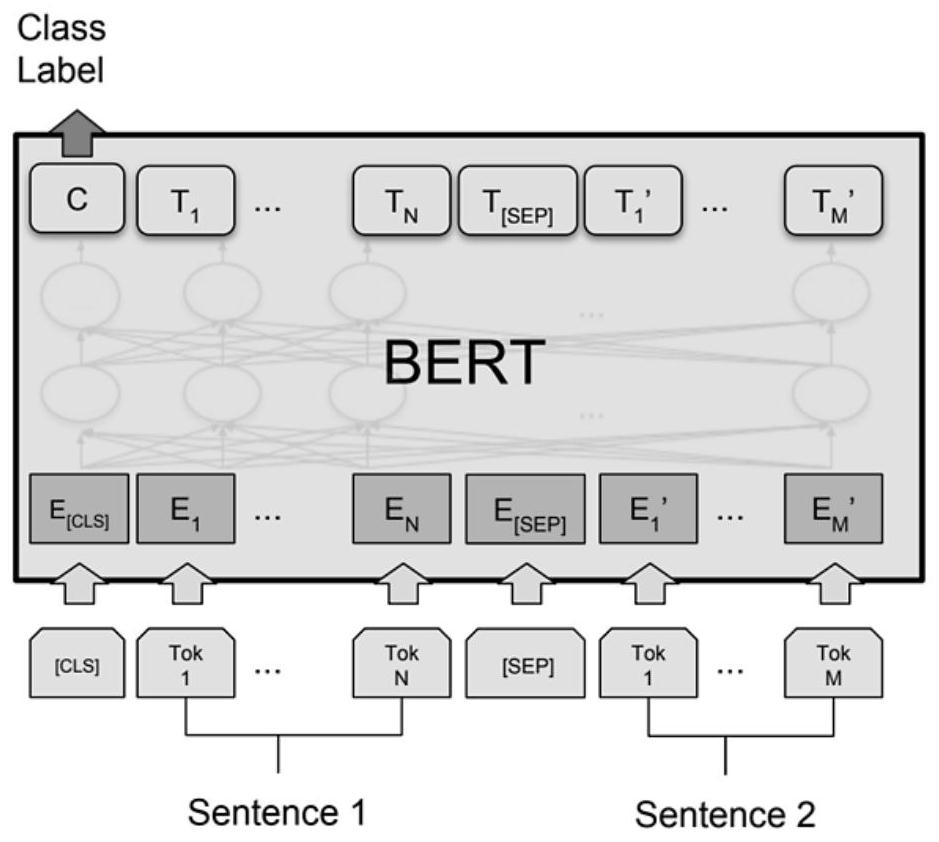

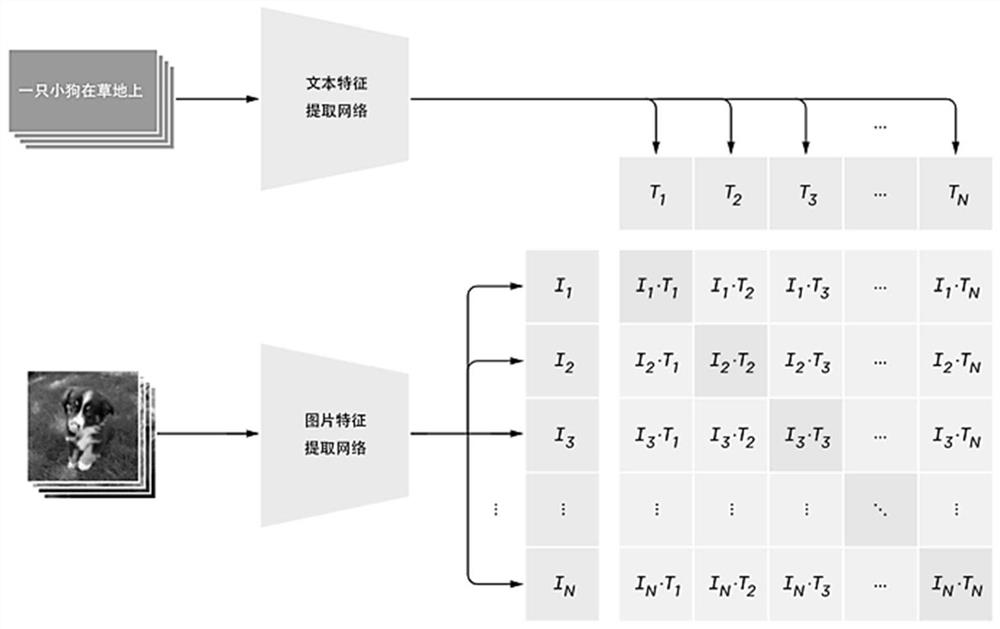

[0023] The present invention proposes a video retrieval method based on multimodal and self-supervised representation learning. The method does not rely on task-specific labeled data; it only requires image data collected from within the platform or from the Internet to train the representation network. Given a query video, videos with similar images or similar events can be found in a video database containing tens of millions of videos. The technology can address problems such as news event aggregation, copyright infringement retrieval, and multimodal retrieval on short-video platforms.
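Paragraph [0023] says similar videos are found in a database of tens of millions of videos, but this excerpt does not say how the learned representations are compared. The following is a minimal sketch under stated assumptions: each video is reduced to one vector by averaging L2-normalized frame embeddings (a hypothetical pooling choice), and candidates are ranked by cosine similarity with a linear scan. The helper names `video_embedding` and `search` and the toy 2-D vectors are illustrative only, not the patent's implementation.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]


def l2_normalize(v: Sequence[float]) -> Vector:
    """Scale a vector to unit length; an all-zero vector is returned unchanged."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def video_embedding(frame_embeddings: Sequence[Sequence[float]]) -> Vector:
    """Hypothetical pooling: mean of normalized frame embeddings, renormalized."""
    normed = [l2_normalize(f) for f in frame_embeddings]
    dim = len(normed[0])
    mean = [sum(f[i] for f in normed) / len(normed) for i in range(dim)]
    return l2_normalize(mean)


def search(query: Vector, database: Dict[str, Vector], k: int = 5) -> List[Tuple[str, float]]:
    """Rank database videos by cosine similarity (dot product of unit vectors)."""
    scored = [(vid, sum(q * d for q, d in zip(query, vec))) for vid, vec in database.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy usage with made-up 2-D frame embeddings.
db = {
    "news_a": video_embedding([[1.0, 0.0], [0.9, 0.1]]),
    "news_b": video_embedding([[0.0, 1.0], [0.1, 0.9]]),
}
query = video_embedding([[1.0, 0.05]])
print(search(query, db, k=1)[0][0])  # news_a
```

The linear scan is used only for clarity; at the scale of tens of millions of videos, an approximate nearest-neighbour index would normally replace it.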

[0024] The specific implementation steps of the video retrieval method based on multimodal and self-supervised representation learning are as follows:

[0025] Step 1: Collect a sufficient number of images and their corresponding text information. The text information includes the title...
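Step 1 pairs each image with its text (such as its title), but this excerpt does not state the training objective. One widely used way to learn from image-text pairs without task labels is a CLIP-style symmetric contrastive (InfoNCE) loss; the sketch below shows that generic technique, not the patent's own method. The embeddings are assumed to come from hypothetical image and text encoders, and `temperature=0.07` is an illustrative value.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two non-zero vectors."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def cross_entropy_diagonal(logits: List[List[float]]) -> float:
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def info_nce(image_embs: Sequence[Sequence[float]],
             text_embs: Sequence[Sequence[float]],
             temperature: float = 0.07) -> float:
    """Symmetric InfoNCE: image i and text i form a positive pair;
    every other item in the batch acts as a negative."""
    n = len(image_embs)
    logits = [[cosine(image_embs[i], text_embs[j]) / temperature for j in range(n)]
              for i in range(n)]
    transposed = [list(col) for col in zip(*logits)]  # text-to-image direction
    return 0.5 * (cross_entropy_diagonal(logits) + cross_entropy_diagonal(transposed))


# Toy batch of two hypothetical image/text embedding pairs.
images = [[1.0, 0.0], [0.0, 1.0]]
texts_aligned = [[0.9, 0.1], [0.1, 0.9]]
texts_swapped = [[0.1, 0.9], [0.9, 0.1]]
print(info_nce(images, texts_aligned) < info_nce(images, texts_swapped))  # True
```

Matched pairs yield a lower loss than mismatched ones, which is the signal that lets collected image-text pairs stand in for task-specific labels.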
