Target attitude estimation method and system fusing planar and three-dimensional information, and medium

A technology for target pose estimation using three-dimensional information, applied in the field of image recognition and target pose recognition in video images. It addresses the difficulty of handling noise in scene point cloud data, slow processing speed, and heavy preparation workload, with the effects of reducing the number of templates, mitigating slow processing, and reducing the burden.

Embodiment 1

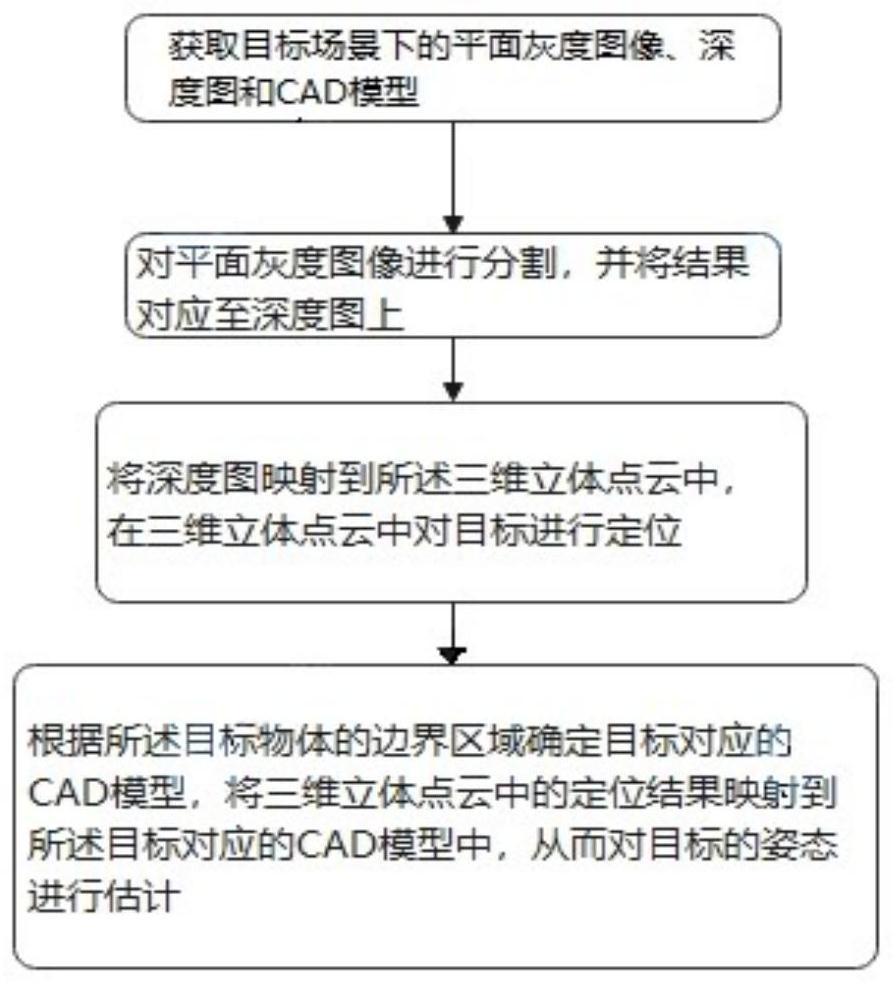

[0024] This embodiment discloses a method for estimating a target pose by fusing planar and three-dimensional information. As shown in Figure 1, the method includes:

[0025] Obtain the planar grayscale image, depth map and CAD model of the target scene.

[0026] A complete color image is composed of three channels: red, green, and blue. Each channel can itself be displayed as a grayscale image, in which different gray levels represent the proportion of that color in the image. A grayscale image ranges from the darkest black to the brightest white, and a color image can be converted into a planar grayscale image by a floating-point method, an integer method, a shift method, or an averaging method.
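The four conversion methods named in [0026] can be illustrated with a minimal sketch. The patent text does not give the weights, so the ITU-R BT.601 luma weights (0.299, 0.587, 0.114) used here are an assumption, as are the function names; the integer and shift variants are simply scaled approximations of the same weights.

```python
def gray_float(r, g, b):
    # Floating-point method: weighted sum with BT.601 weights (assumed, not from the patent).
    return round(0.299 * r + 0.587 * g + 0.114 * b)

def gray_integer(r, g, b):
    # Integer method: same weights scaled by 1000, then integer division.
    return (299 * r + 587 * g + 114 * b) // 1000

def gray_shift(r, g, b):
    # Shift method: weights scaled by 2**8 (77 + 150 + 29 = 256), divided by a right shift.
    return (77 * r + 150 * g + 29 * b) >> 8

def gray_average(r, g, b):
    # Average method: unweighted mean of the three channels.
    return (r + g + b) // 3

def to_gray_image(rgb_image, method=gray_shift):
    # rgb_image is a list of rows, each row a list of (r, g, b) tuples in 0..255.
    return [[method(r, g, b) for (r, g, b) in row] for row in rgb_image]

if __name__ == "__main__":
    image = [[(255, 255, 255), (255, 0, 0)],
             [(0, 0, 0), (0, 0, 255)]]
    for method in (gray_float, gray_integer, gray_shift, gray_average):
        print(method.__name__, to_gray_image(image, method))
```

The weighted variants agree closely with each other, while the average method treats all channels equally and so gives a noticeably different gray level for saturated colors.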

[0027] A depth map, also called a range image, records the distance (depth) from the image acquisition device to each point in the scene. It reflects the geometry of the visible surfaces in the scene.

[0028] Segment the target in the planar grayscale image ...

Embodiment 2

[0039] Based on the same inventive concept, this embodiment discloses a target pose estimation system that fuses planar and three-dimensional information, including:

[0040] An image acquisition module, configured to acquire a planar grayscale image, a depth map and a CAD model of the target scene;

[0041] The model training module is used to segment the planar grayscale image and map the result to the depth map;

[0042] The point cloud positioning module is used to map the depth map into the three-dimensional point cloud, and locate the target in the three-dimensional point cloud;

[0043] The attitude estimation module is used to determine the CAD model corresponding to the target according to the boundary area of the target object, and map the positioning results in the three-dimensional point cloud to the CAD model corresponding to the target, thereby estimating the attitude of the target.
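The data flow between the segmentation, depth-mapping and point cloud modules above can be sketched roughly as follows. This is only an illustration under stated assumptions: the pinhole camera intrinsics (`fx`, `fy`, `cx`, `cy`), the convention that a depth of 0 marks an invalid pixel, and the use of a centroid as a stand-in for "locating" the target are all hypothetical and are not taken from the patent. The CAD model matching step is not shown.

```python
def mask_depth(depth, mask):
    # Keep depth only where the 2D segmentation mask marks the target; 0.0 means "no data" (assumed).
    return [[d if m else 0.0 for d, m in zip(d_row, m_row)]
            for d_row, m_row in zip(depth, mask)]

def depth_to_points(depth, fx, fy, cx, cy):
    # Back-project each valid pixel (u, v) with depth z using an assumed pinhole model.
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z > 0:
                points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points

def centroid(points):
    # Crude localization placeholder: mean position of the target's points.
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))

if __name__ == "__main__":
    depth = [[2.0, 2.0, 2.0],
             [2.0, 2.0, 2.0],
             [2.0, 2.0, 2.0]]
    mask = [[0, 0, 0],
            [0, 1, 1],
            [0, 0, 0]]
    target_points = depth_to_points(mask_depth(depth, mask), fx=2.0, fy=2.0, cx=1.0, cy=1.0)
    print(target_points)
    print(centroid(target_points))
```

Masking the depth map before back-projection means only the target's pixels become 3D points, which is one plausible reason for segmenting in the 2D image first: scene noise outside the mask never enters the point cloud.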

Embodiment 3

[0045] Based on the same inventive concept, this embodiment discloses a computer-readable storage medium on which a computer program is stored. When executed by a processor, the computer program implements the steps of any of the above target pose estimation methods fusing planar and three-dimensional information.

[0046] Those skilled in the art should understand that the embodiments of the present application may be provided as methods, systems, or computer program products. Accordingly, the present application may take the form of an entirely hardware embodiment, an entirely software embodiment, or an embodiment combining software and hardware aspects. Furthermore, the present application may take the form of a computer program product embodied on one or more computer-usable storage media (including but not limited to disk storage, CD-ROM, optical storage, etc.) having computer-usable program code embodied therein.
