Human-computer interaction method and device based on gaze tracking, and computer equipment

A gaze-tracking technology applied in the field of human-computer interaction. It addresses problems such as poor robustness and low precision, and achieves simple equipment, easy integration, and faster speed.


Examples

Embodiment 1

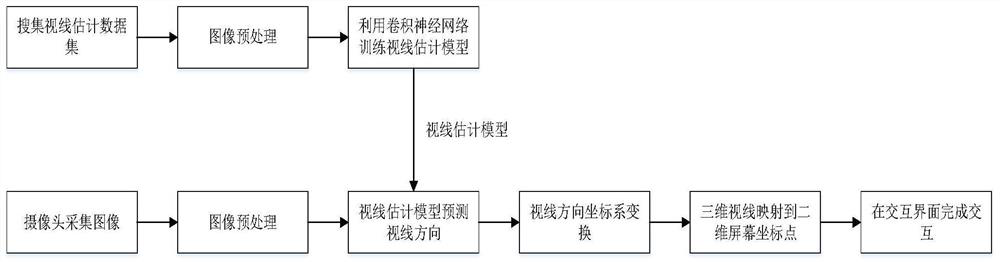

[0035] Figure 1 shows the overall structure of the human-computer interaction method based on eye-tracking. This embodiment provides a human-computer interaction method based on eye-tracking, including the following steps:

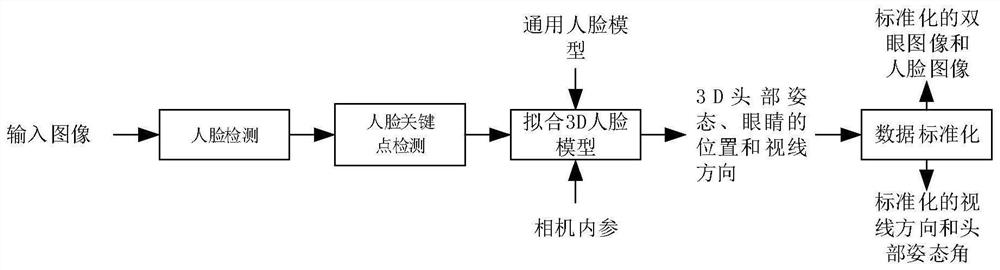

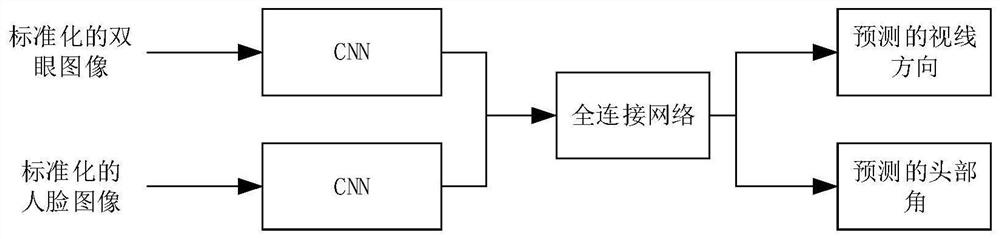

[0036] Step 1: Preprocess the images in the gaze estimation dataset; Figure 2 is a schematic diagram of the image preprocessing used by the gaze-tracking-based human-computer interaction method. Binocular images and face images are extracted as the training data for the gaze estimation model of this embodiment. The RetinaFace algorithm detects the face, the PFLD algorithm then detects the facial key points within the face region, and 4 eye-corner and 2 mouth-corner key points are extracted from the detected facial key points. The estimated head pose is obtained by computing the affine transformation matrix from the generic 3D face keypoint model to the 2D face key...
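The fitting step described above can be illustrated with a minimal sketch. It assumes an affine camera model in which each 2D key point is approximately `A @ X + b` for the matching 3D model point `X`, and solves for the 2x4 matrix `[A | b]` by least squares. The function names and the coordinates in `GENERIC_MODEL_3D` are hypothetical placeholders, not values from the patent, and the patent's actual solver may differ (for example, a perspective PnP solver).

```python
from typing import List, Sequence, Tuple

# HYPOTHETICAL generic 3D face model (mm): 4 eye corners, then 2 mouth corners.
# These coordinates are illustrative only; they are not taken from the patent.
GENERIC_MODEL_3D: List[Tuple[float, float, float]] = [
    (-45.0, 35.0, -10.0), (-15.0, 33.0, 0.0),
    (15.0, 33.0, 0.0), (45.0, 35.0, -10.0),
    (-25.0, -35.0, 5.0), (25.0, -35.0, 5.0),
]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate (e.g. coplanar) key point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_affine_3d_to_2d(
    model_3d: Sequence[Sequence[float]],
    image_2d: Sequence[Sequence[float]],
) -> List[List[float]]:
    """Least-squares fit of a 2x4 affine map so that image ~= A @ model + b.

    Returns two rows [a1, a2, a3, b], one for the image u axis, one for v.
    """
    rows = [[x, y, z, 1.0] for x, y, z in model_3d]
    # Normal equations: (M^T M) p = M^T u, solved once per image axis.
    mtm = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    params = []
    for axis in range(2):
        mtu = [sum(r[i] * p[axis] for r, p in zip(rows, image_2d)) for i in range(4)]
        params.append(solve_linear(mtm, mtu))
    return params
```

With six non-coplanar key points the system is overdetermined (six equations per image axis, four unknowns), which is why a least-squares fit is used here rather than an exact solve.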

Embodiment 2

[0062] The present disclosure proposes a human-computer interaction device based on gaze tracking, which specifically includes:

[0063] A collection module, configured to collect user image data;

[0064] The calibration module is used to calculate the rotation and translation relationship between the camera and the screen according to the collected calibration pictures.

[0065] The preprocessing module is configured to preprocess the collected user images to obtain the input required by the processing module.

[0066] The preprocessing module includes:

[0067] 1. The recognition component is used to perform face detection and key point detection on the user image, and perform fitting according to the detected 2D face key points and the general 3D face key point model to obtain the estimated head pose angle.

[0068] 2. The standardization component is used to transform the original image into a standardized space through perspective transformation, and o...
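As an illustration of how the camera-to-screen rotation and translation produced by the calibration module might be used, the sketch below maps a gaze ray from camera coordinates into screen coordinates and intersects it with the screen plane. This is an assumption about usage, not a step stated in the text above. The function names, the convention that the screen lies in the plane `z = 0` of the screen coordinate system, and the millimetre units are all hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]


def mat_vec(m: Matrix3, v: Sequence[float]) -> List[float]:
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def camera_to_screen(point: Sequence[float], rotation: Matrix3,
                     translation: Sequence[float]) -> List[float]:
    """Map a point from camera coordinates to screen coordinates: R @ p + t."""
    rotated = mat_vec(rotation, point)
    return [rotated[i] + translation[i] for i in range(3)]


def gaze_point_on_screen(origin_cam: Sequence[float], direction_cam: Sequence[float],
                         rotation: Matrix3, translation: Sequence[float],
                         ) -> Optional[Tuple[float, float]]:
    """Intersect a gaze ray with the screen plane z = 0 (assumed convention).

    Returns (x, y) in screen coordinates, or None if the ray is parallel
    to the screen or points away from it.
    """
    origin = camera_to_screen(origin_cam, rotation, translation)
    direction = mat_vec(rotation, direction_cam)  # directions rotate, no translation
    if abs(direction[2]) < 1e-9:
        return None  # ray parallel to the screen plane
    scale = -origin[2] / direction[2]
    if scale < 0:
        return None  # screen is behind the gaze origin
    return (origin[0] + scale * direction[0], origin[1] + scale * direction[1])


# Hypothetical calibration result: camera axes aligned with the screen axes,
# camera located at (100, -10, 0) mm in screen coordinates.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
CAMERA_OFFSET = [100.0, -10.0, 0.0]
```

For example, an eye 500 mm in front of the camera at `(0, 0, 500)` looking along `(0.1, 0.2, -1)` gives `gaze_point_on_screen((0, 0, 500), (0.1, 0.2, -1), IDENTITY, CAMERA_OFFSET)`, the point where that ray meets the screen plane.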

Embodiment 3

[0079] The invention provides a computer device including a memory, a processor, a network interface, a display, and an input device. The processor executes the corresponding computer program; the memory stores that program together with input and output information; the network interface handles communication with external terminals; the display shows the program's processing results; and the input device can be a camera for collecting images. When the processor executes the computer program, it implements the steps of the above embodiments of the eye-tracking-based human-computer interaction method. Alternatively, when the processor executes the computer program, the functions of the modules/units in the above device embodiments are realized.

