Semantic real-scene 3D reconstruction method and system fusing lidar with multi-view cameras

A multi-camera 3D reconstruction technology, applied in the field of semantic real-scene 3D reconstruction fusing lidar with multi-view cameras. It addresses the problem that existing scene reconstruction models cannot meet the requirements of high precision and rich information, and achieves a dense point cloud, easy data acquisition, and high precision.


Examples

Embodiment 1

[0034] First, the sensors (lidar, multi-view cameras, and an IMU, i.e. inertial measurement unit) are mounted with fixed relative poses, as shown in Figure 3. The sensors are then calibrated to obtain the extrinsic parameters between them: between the lidar and the IMU, between the IMU and the i-th camera, and between the lidar and the i-th camera. Each camera is also calibrated to obtain the intrinsic matrix K_i of the i-th camera and its distortion coefficients (k_1, k_2, p_1, p_2, k_3)_i. The calibrated sensors together form a data acquisition system.
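To show how the calibration results fit together, the sketch below chains the lidar-to-IMU and IMU-to-camera extrinsics and then projects a lidar point into camera i using K_i and the five distortion coefficients. It is a minimal illustration under stated assumptions, not the patent's implementation: the frame convention (a 4x4 transform `T_b_a` maps frame-a coordinates into frame b), the OpenCV-style Brown-Conrady distortion model implied by the (k_1, k_2, p_1, p_2, k_3) ordering, zero skew in K_i, and all function names are hypothetical.

```python
# Hypothetical sketch: project a lidar point into camera i.
# Assumed convention: T_b_a is a 4x4 homogeneous transform with p_b = T_b_a * p_a.

def matmul4(A, B):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(A[r][k] * B[k][c] for k in range(4)) for c in range(4)] for r in range(4)]

def transform(T, p):
    """Apply a 4x4 homogeneous transform to a 3D point (x, y, z)."""
    x, y, z = p
    return tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3] for r in range(3))

def distort(xn, yn, dist):
    """Brown-Conrady (OpenCV-style) distortion of normalized coords; dist = (k1, k2, p1, p2, k3)."""
    k1, k2, p1, p2, k3 = dist
    r2 = xn * xn + yn * yn
    radial = 1 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    xd = xn * radial + 2 * p1 * xn * yn + p2 * (r2 + 2 * xn * xn)
    yd = yn * radial + p1 * (r2 + 2 * yn * yn) + 2 * p2 * xn * yn
    return xd, yd

def project_lidar_point(p_lidar, T_cam_imu, T_imu_lidar, K, dist):
    """Return the pixel (u, v) of a lidar point in camera i, or None if it lies behind the camera."""
    T_cam_lidar = matmul4(T_cam_imu, T_imu_lidar)  # chain lidar -> IMU -> camera
    xc, yc, zc = transform(T_cam_lidar, p_lidar)
    if zc <= 0:
        return None
    xd, yd = distort(xc / zc, yc / zc, dist)
    fx, fy, cx, cy = K[0][0], K[1][1], K[0][2], K[1][2]
    return fx * xd + cx, fy * yd + cy  # skew term of K assumed to be zero
```

With identity extrinsics, K = [[500, 0, 320], [0, 500, 240], [0, 0, 1]] and zero distortion, the point (0.2, -0.1, 2.0) lands at about (370, 215). Composing the two calibrated transforms is one way to obtain the lidar-to-camera extrinsic; a directly calibrated lidar-camera extrinsic could be used instead, or compared against the composed one as a consistency check.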

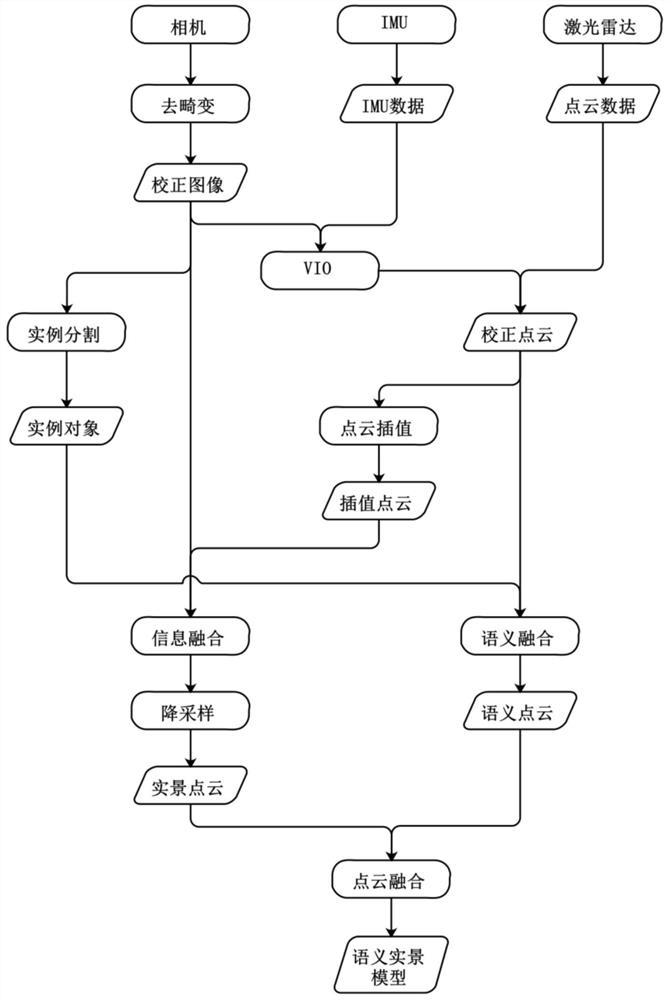

[0035] As shown in Figure 1, this embodiment uses the above data acquisition system to provide a semantic real-scene 3D reconstruction method fusing lidar with multi-view cameras, which comprises the following steps:

[0036] Step 1: Acquire multi-camera images and c...

Embodiment 2

[0062] This embodiment provides a semantic real-scene 3D reconstruction system fusing lidar with multi-view cameras, which comprises the following modules:

[0063] A multi-camera image acquisition and correction module, used to acquire multi-camera images and correct them;

[0064] A laser point cloud data acquisition and correction module, used to acquire laser point cloud data and visual-inertial odometry (VIO) data, and to align the laser point cloud data with the VIO data by timestamp so as to correct the laser point cloud data;

[0065] A real-scene point cloud construction module, used to interpolate the corrected laser point cloud to obtain a dense point cloud, project it onto the imaging plane, and match it to the pixels of the corrected multi-view camera images, yielding a dense point cloud with RGB information for each frame; the per-frame dense point clouds are then superimposed to obtain the real-scene point cloud;

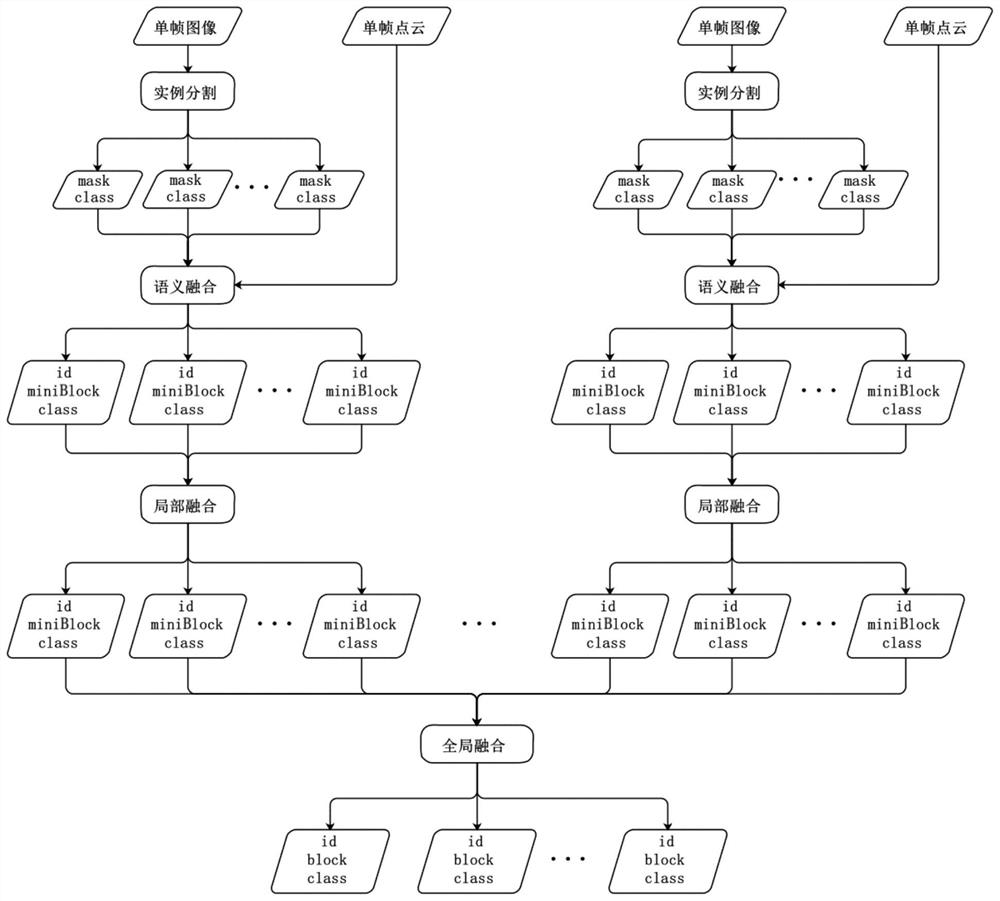

[0066] The semantic fusion modul...
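The modules in paragraphs [0064] and [0065] describe timestamp alignment, point cloud interpolation, and colorization, but the excerpt does not give the exact algorithms. The sketch below shows one generic way to do each, under stated assumptions: VIO positions are linearly interpolated at a lidar timestamp (rotation interpolation such as slerp is omitted), densification is naive linear interpolation along one scan line, and colorization projects points that are already in the camera frame onto an undistorted image with a pinhole model and samples the nearest pixel. All function names and data layouts are hypothetical.

```python
import bisect

def interpolate_position(vio_times, vio_positions, t):
    """Linearly interpolate the VIO position at lidar timestamp t.

    Assumes vio_times is strictly increasing; t outside the range is clamped.
    Rotation is not interpolated in this simplified sketch.
    """
    i = bisect.bisect_left(vio_times, t)
    if i == 0:
        return tuple(vio_positions[0])
    if i >= len(vio_times):
        return tuple(vio_positions[-1])
    t0, t1 = vio_times[i - 1], vio_times[i]
    w = (t - t0) / (t1 - t0)
    p0, p1 = vio_positions[i - 1], vio_positions[i]
    return tuple(a + w * (b - a) for a, b in zip(p0, p1))

def densify_scanline(points, n_between):
    """Insert n_between evenly spaced points between consecutive points of one scan line."""
    dense = []
    for p, q in zip(points, points[1:]):
        dense.append(p)
        for k in range(1, n_between + 1):
            s = k / (n_between + 1)
            dense.append(tuple(a + s * (b - a) for a, b in zip(p, q)))
    if points:
        dense.append(points[-1])
    return dense

def colorize(points_cam, K, image):
    """Attach (r, g, b) to camera-frame points via pinhole projection.

    image is a list of rows of (r, g, b) tuples from an already undistorted image.
    Points behind the camera or projecting outside the image are dropped.
    """
    fx, fy, cx, cy = K[0][0], K[1][1], K[0][2], K[1][2]
    h, w = len(image), len(image[0])
    colored = []
    for x, y, z in points_cam:
        if z <= 0:
            continue
        u = int(round(fx * x / z + cx))  # nearest-pixel column
        v = int(round(fy * y / z + cy))  # nearest-pixel row
        if 0 <= u < w and 0 <= v < h:
            colored.append((x, y, z, *image[v][u]))
    return colored
```

In practice, the interpolated pose would be used to re-express each lidar point in a common frame before densification; per-frame colored clouds can then be transformed into the world frame and concatenated to form the superimposed real-scene point cloud.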

Embodiment 3

[0069] This embodiment provides a computer-readable storage medium storing a computer program which, when executed by a processor, implements the steps of the semantic real-scene 3D reconstruction method fusing lidar with multi-view cameras described in Embodiment 1.

