Map construction method, device and system based on laser radar assisted vision

A lidar-assisted map construction technology, applied in the computer field, that improves positioning accuracy and avoids small differences

Examples

Embodiment 1

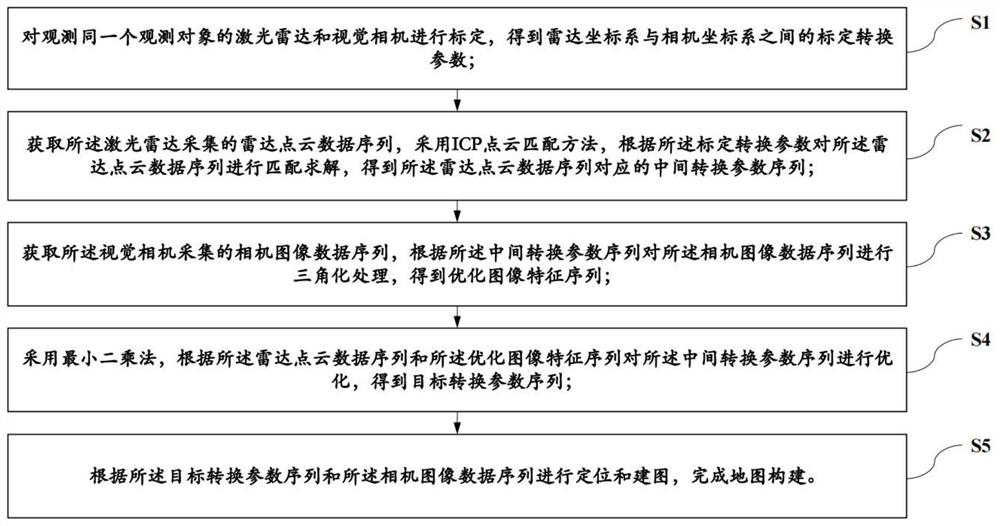

[0030] Embodiment 1: As shown in Figure 1, a map construction method based on lidar-assisted vision includes the following steps:

[0031] S1: Calibrate the lidar and visual camera that observe the same observation object, and obtain the calibration conversion parameters between the radar coordinate system and the camera coordinate system;

[0032] S2: Obtain the radar point cloud data sequence collected by the lidar, match and solve the radar point cloud data sequence with the ICP (iterative closest point) point cloud matching method according to the calibration conversion parameters, and obtain the intermediate conversion parameter sequence corresponding to the radar point cloud data sequence;

[0033] S3: Obtain the camera image data sequence collected by the visual camera, perform triangulation processing on the camera image data sequence according to the intermediate conversion parameter sequence, and obtain an optimized image feature sequence;

[0034] S4: Optimizing the intermediate...
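Step S3 triangulates image features using poses obtained from the lidar matching in S2. As a rough illustration only, the sketch below uses midpoint triangulation of two viewing rays, which is one common two-view technique and not necessarily the one used in this patent. The function name `triangulate_midpoint`, the example camera centers, and the ray directions are all hypothetical; the camera centers are simply assumed to have been derived from the intermediate conversion parameters.

```python
from typing import List, Sequence

Vec3 = Sequence[float]


def _dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(o1: Vec3, d1: Vec3, o2: Vec3, d2: Vec3) -> List[float]:
    """Return the midpoint of the shortest segment between two viewing rays.

    o1, o2: camera centers in a common frame (assumed known from lidar poses).
    d1, d2: ray directions through the matched image feature (need not be unit).
    """
    w = [p - q for p, q in zip(o1, o2)]
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b  # zero when the rays are parallel
    if denom <= 1e-12 * a * c:
        raise ValueError("rays are (nearly) parallel; parallax too small")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    u = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = [o + s * k for o, k in zip(o1, d1)]
    p2 = [o + u * k for o, k in zip(o2, d2)]
    return [(x + y) / 2.0 for x, y in zip(p1, p2)]


# Hypothetical example: two camera centers 1 m apart observing one feature.
landmark = triangulate_midpoint(
    o1=(0.0, 0.0, 0.0), d1=(1.0, 2.0, 10.0),
    o2=(1.0, 0.0, 0.0), d2=(0.0, 2.0, 10.0),
)
```

In this sketch the two rays intersect exactly, so the midpoint coincides with the observed point. With noisy poses or pixel measurements the rays would generally be skew, and the midpoint serves as an initial estimate that a later optimization step could refine.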

Embodiment 2

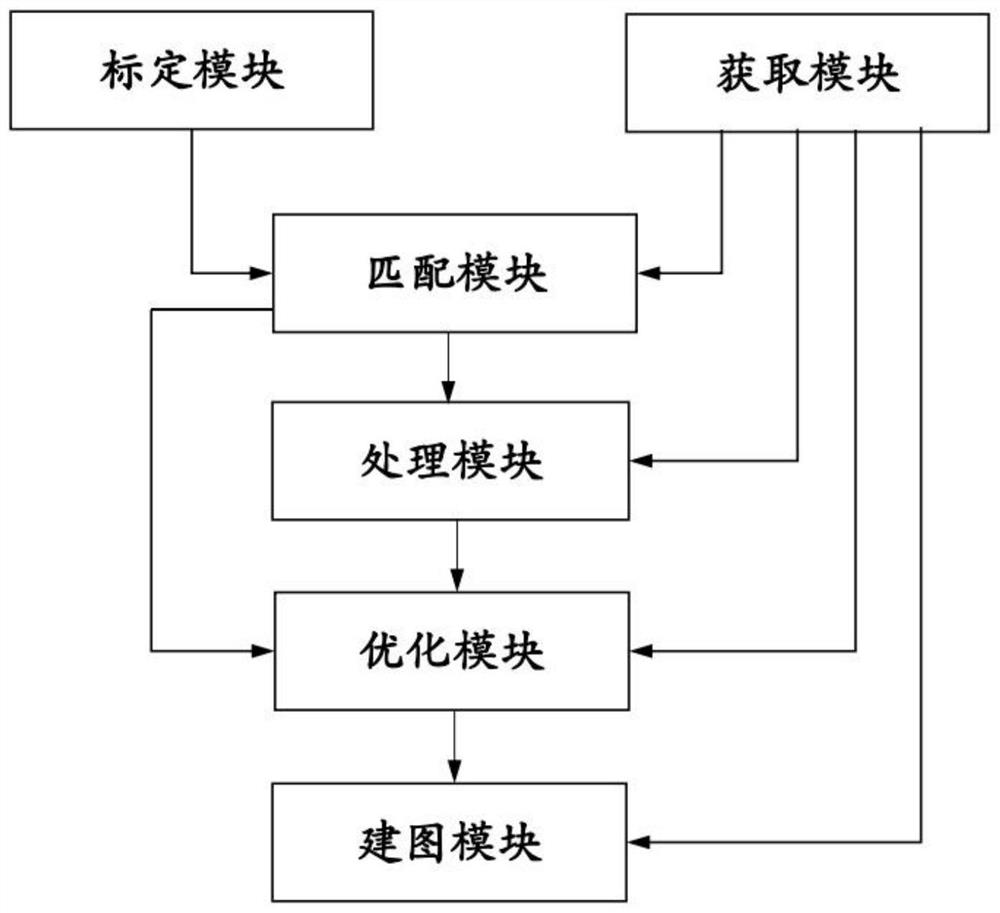

[0087] Embodiment 2: As shown in Figure 2, a map construction device based on lidar-assisted vision applies the map construction method of Embodiment 1 and includes a calibration module, an acquisition module, a matching module, a processing module, an optimization module and a mapping module;

[0088] The calibration module is used to calibrate the lidar and the visual camera that observe the same observation object, so as to obtain the calibration conversion parameters between the radar coordinate system and the camera coordinate system;

[0089] The acquisition module is used to acquire the radar point cloud data sequence collected by the lidar and the camera image data sequence collected by the visual camera;

[0090] The matching module is used to match and solve the radar point cloud data sequence with the ICP point cloud matching method according to the calibration conversion parameters, and obtain the intermediate con...
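The ICP matching performed by the matching module can be illustrated with a deliberately simplified sketch: point-to-point ICP on 2D scans, with brute-force nearest-neighbor search and a closed-form rigid alignment at each iteration. The names `best_rigid_2d` and `icp_2d`, the 2D restriction, and the sample scans are assumptions made for illustration; the sketch does not show how the calibration conversion parameters enter the solve described in the patent.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def best_rigid_2d(src: Sequence[Point], dst: Sequence[Point]) -> Tuple[float, float, float]:
    """Least-squares rotation angle and translation mapping paired src onto dst."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(p[0] for p in dst) / n, sum(p[1] for p in dst) / n
    s_cos = s_sin = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        s_cos += ax * bx + ay * by  # accumulated dot products
        s_sin += ax * by - ay * bx  # accumulated 2D cross products
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def icp_2d(source: Sequence[Point], target: Sequence[Point],
           iterations: int = 20, tol: float = 1e-12) -> Tuple[float, float, float]:
    """Estimate (theta, tx, ty) such that R(theta) * source + t aligns with target."""
    theta, tx, ty = 0.0, 0.0, 0.0
    cur: List[Point] = list(source)
    prev_err = math.inf
    for _ in range(iterations):
        # Correspondence step: brute-force nearest neighbor in the target scan.
        matched = [min(target, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in cur]
        err = sum((q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2
                  for p, q in zip(cur, matched)) / len(cur)
        # Alignment step: closed-form increment, then compose with the running estimate.
        d_theta, d_tx, d_ty = best_rigid_2d(cur, matched)
        c, s = math.cos(d_theta), math.sin(d_theta)
        cur = [(c * x - s * y + d_tx, s * x + c * y + d_ty) for x, y in cur]
        tx, ty = c * tx - s * ty + d_tx, s * tx + c * ty + d_ty
        theta += d_theta
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return theta, tx, ty


# Hypothetical pair of consecutive scans: the second is the first moved by a small motion.
source = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (0.0, 1.0), (0.0, 2.0)]
c0, s0 = math.cos(0.05), math.sin(0.05)
target = [(c0 * x - s0 * y + 0.1, s0 * x + c0 * y - 0.05) for x, y in source]
theta, tx, ty = icp_2d(source, target)
```

Running such a matcher over each pair of consecutive scans would yield one transform per pair, which is one plausible reading of the "intermediate conversion parameter sequence". ICP only converges to the correct alignment when the initial misalignment is small relative to the point spacing, as it is in this example.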

Embodiment 3

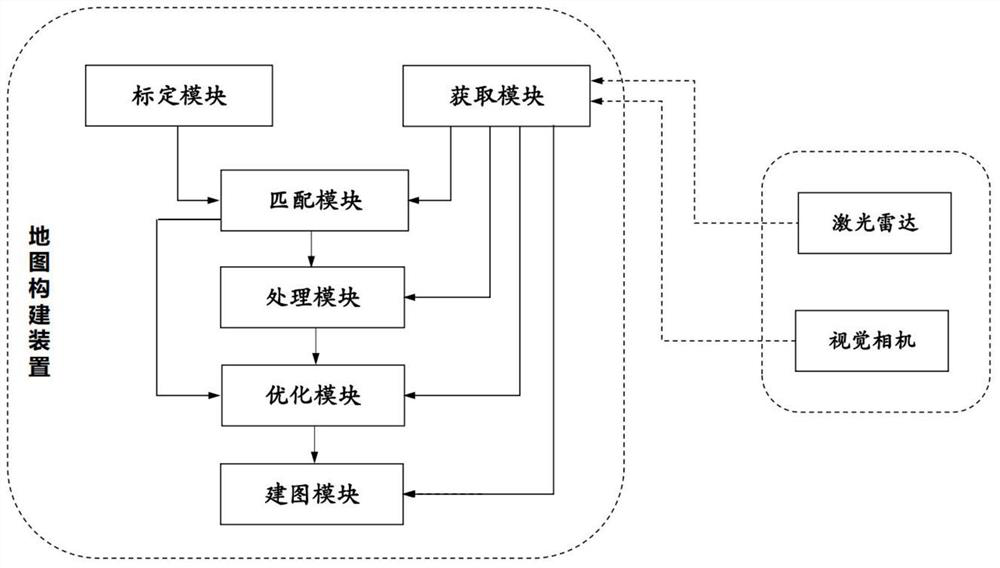

[0134] Embodiment 3: As shown in Figure 3, a map construction system based on lidar-assisted vision includes a lidar, a visual camera, and the map construction device of Embodiment 2. The lidar and the visual camera are fixed together and observe the same observation object; both the lidar and the visual camera are communicatively connected to the map construction device;

[0135] The lidar is used to collect the radar point cloud data sequence of the observed object and output it to the map construction device;

[0136] The visual camera is used to collect the camera image data sequence of the observed object and output it to the map construction device.

[0137] The map construction system based on lidar-assisted vision in this embodiment achieves high-precision, real-time positioning and mapping, and can integrate lidar positioning with machine vision positioning. It reduces the impact of the environment on visual positioning, ma...
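Because the lidar and the visual camera are fixed together, a single set of calibration conversion parameters can relate the radar coordinate system to the camera coordinate system. The sketch below assumes those parameters take the usual form of a rotation matrix and a translation vector, and that the camera follows a pinhole model. The function names, the axis conventions, the offsets, and the intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical values chosen for illustration, not values from the patent.

```python
from typing import List, Sequence, Tuple

Vec3 = Sequence[float]
Mat3 = Sequence[Sequence[float]]


def lidar_to_camera(point: Vec3, rotation: Mat3, translation: Vec3) -> List[float]:
    """Map a point from the radar frame to the camera frame: p_cam = R * p_lidar + t."""
    return [
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    ]


def project_pinhole(p_cam: Vec3, fx: float, fy: float,
                    cx: float, cy: float) -> Tuple[float, float]:
    """Project a camera-frame point to pixel coordinates with a pinhole model."""
    x, y, z = p_cam
    if z <= 0.0:
        raise ValueError("point is behind the camera")
    return fx * x / z + cx, fy * y / z + cy


# Hypothetical extrinsics: lidar axes (x forward, y left, z up) mapped to
# camera axes (x right, y down, z forward), plus a small mounting offset.
R = [[0.0, -1.0, 0.0],
     [0.0, 0.0, -1.0],
     [1.0, 0.0, 0.0]]
t = [0.0, -0.1, 0.05]

p_cam = lidar_to_camera([2.0, 0.5, 0.2], R, t)
u, v = project_pinhole(p_cam, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
```

Expressing lidar points in the camera frame in this way is what lets lidar-derived geometry be associated with image features from the same observed object.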
