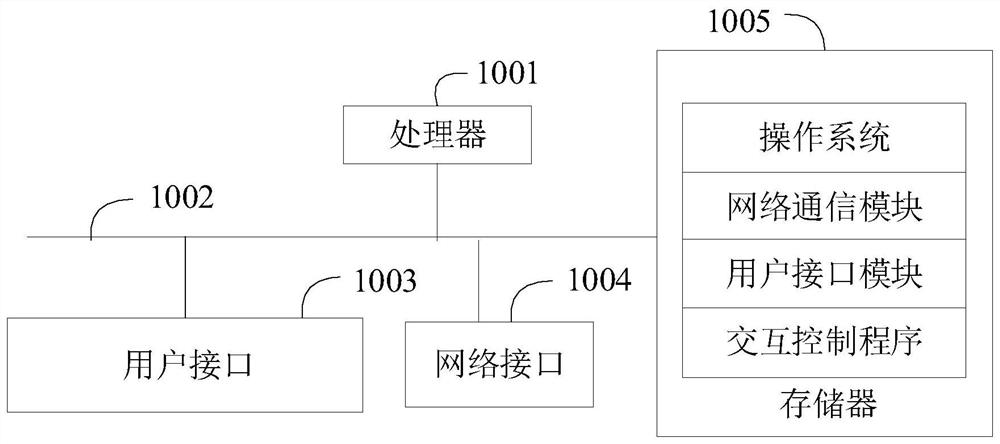

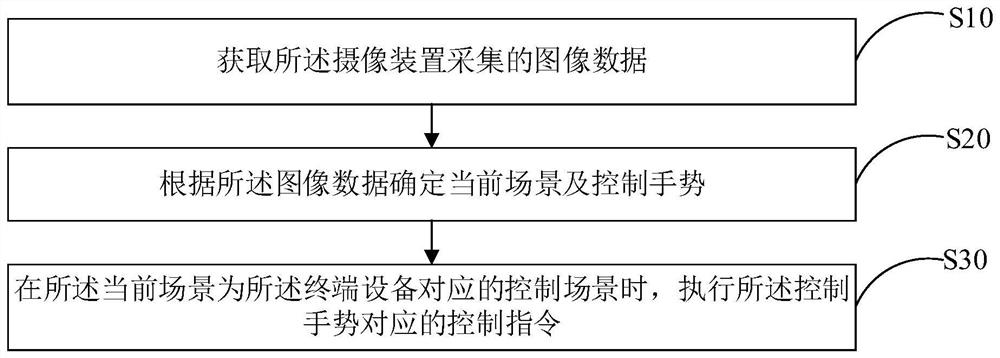

Interaction control method, terminal equipment and storage medium

An interaction control method and terminal device, applied in the field of gesture control. The technology addresses problems such as misdetection and misrecognition of control gestures and inaccurate detection of control input on AR devices, and thereby improves detection accuracy.

Examples

Example 1

[0102] Example 1. In an AR control scenario, the user's actions on other electronic devices should not be misrecognized as control gestures. To avoid this, after the image data is acquired, it may be identified whether the image data contains an electronic device, and whether the current scene is the control scene is then determined from the identification result. When no electronic device is included in the image data, the current scene is defined as the control scene; when an electronic device is included in the image data, the current scene is defined as a scene other than the control scene.

[0103] Specifically, in Example 1, after the image data is collected, the brightness value of each pixel in the image data may be acquired. In this scenario, when the user operates another electronic device, the display screen of that device is lit. In the image data of the lit display screen, the correspond...
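The per-pixel brightness step described in [0103] could look roughly like the sketch below. The source text is cut off before it says how the brightness values are used, so everything past `brightness_map` is an assumption made only for illustration: the function name `bright_fraction`, the threshold of 200, and the idea of measuring the share of bright pixels are hypothetical and are not taken from the patent. The Rec. 601 luma weights are one common way to derive brightness from RGB; the patent does not state which formula it uses.

```python
from typing import List, Tuple

# An RGB pixel with 8-bit channels (assumed representation).
Pixel = Tuple[int, int, int]


def luma(pixel: Pixel) -> float:
    """Approximate brightness of one RGB pixel using Rec. 601 weights."""
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b


def brightness_map(image: List[List[Pixel]]) -> List[List[float]]:
    """Brightness value for every pixel, in the same row/column layout."""
    return [[luma(p) for p in row] for row in image]


def bright_fraction(image: List[List[Pixel]], threshold: float = 200.0) -> float:
    """Hypothetical helper: share of pixels at or above `threshold`.

    The threshold and this use of the brightness values are assumptions;
    the source paragraph is truncated before describing the actual rule.
    """
    values = [v for row in brightness_map(image) for v in row]
    if not values:
        return 0.0
    return sum(1 for v in values if v >= threshold) / len(values)


if __name__ == "__main__":
    frame = [
        [(255, 255, 255), (0, 0, 0)],
        [(10, 10, 10), (250, 250, 250)],
    ]
    print(bright_fraction(frame))
```

A real implementation would more likely look for a contiguous bright region than a global fraction, but that choice also goes beyond what the visible text states.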

Example 2

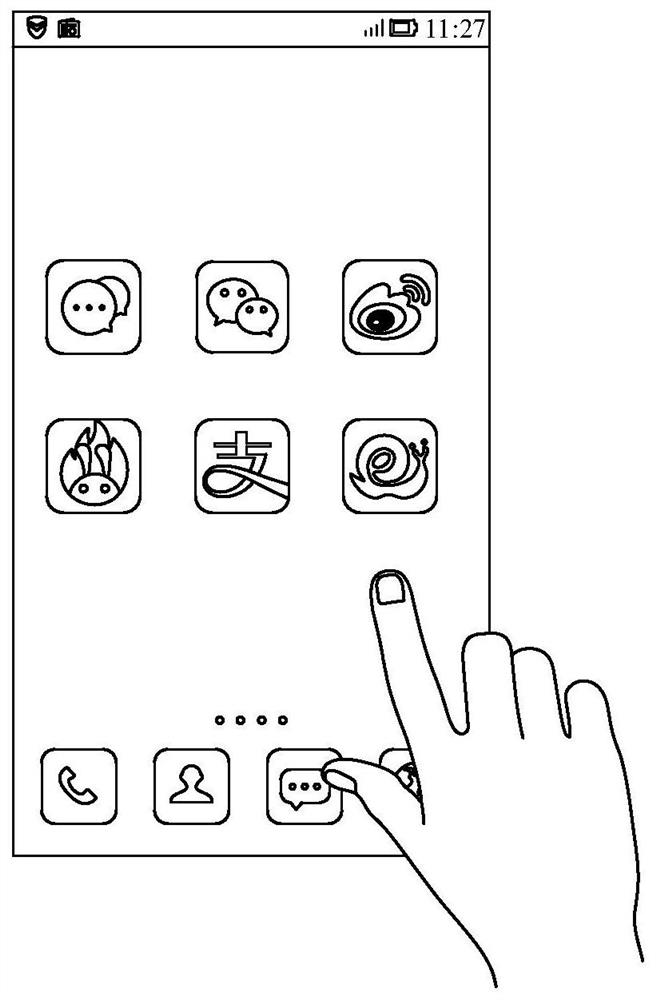

[0105] Example 2, as an optional implementation, builds on Example 1 above. If the current scene is defined as a scene other than the control scene whenever an electronic device is included in the image data, the reliability of the scene judgment is low. To improve the accuracy of the scene judgment, when an electronic device is included in the image data, it may first be determined whether the hand in the image data overlaps the electronic device. Referring to Figure 3, when the hand overlaps the electronic device, the current scene is defined as a scene other than the control scene. Otherwise, referring to Figure 4, when the hand does not overlap the electronic device, the current scene is defined as the control scene.
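The decision rule of Examples 1 and 2 can be sketched as follows. This is a minimal illustration, not the patented implementation: it assumes that an upstream detector (not shown) has already produced axis-aligned bounding boxes for the hand and for any electronic devices, and it treats "overlap" as rectangle intersection. The names `Box`, `boxes_overlap`, and `is_control_scene` are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in pixel coordinates (hypothetical type)."""
    left: float
    top: float
    right: float
    bottom: float


def boxes_overlap(a: Box, b: Box) -> bool:
    """True when the two rectangles share a region of non-zero area."""
    return (
        a.left < b.right
        and b.left < a.right
        and a.top < b.bottom
        and b.top < a.bottom
    )


def is_control_scene(hand: Box, devices: Sequence[Box]) -> bool:
    """Apply the scene rule described in Examples 1 and 2.

    - No electronic device in the image: control scene.
    - Hand overlaps any detected device: not a control scene.
    - Device present but hand does not overlap it: control scene.
    """
    return not any(boxes_overlap(hand, device) for device in devices)


if __name__ == "__main__":
    hand = Box(0, 0, 10, 10)
    print(is_control_scene(hand, []))                     # no device present
    print(is_control_scene(hand, [Box(5, 5, 20, 20)]))    # hand over a device
    print(is_control_scene(hand, [Box(50, 50, 80, 80)]))  # device elsewhere
```

Bounding-box intersection is only one possible overlap test; a segmentation mask or a minimum overlap ratio would be stricter, and the source does not say which criterion is intended.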

[0106] Example 3. In one application scenario, the terminal device is a smart TV. After the image data is acquired, an image recognition algorithm may be used to identify whether the hand ...
