Dense reconstruction method of UAV scene based on VI-SLAM and depth estimation network

A method combining VI-SLAM with a depth estimation network, applied in the field of virtual reality. It addresses the problem that large-scale scenes cannot be reconstructed quickly and densely, and aims to achieve good generalization ability, fast operation and high accuracy.

Embodiment Construction

[0060] The present invention is described in further detail below in conjunction with the accompanying drawings and implementation examples:

[0061] The basic operation of the UAV scene reconstruction method of the present invention is to photograph the three-dimensional environment with a UAV equipped with an IMU, transmit the acquired information to the back end for processing, and output a rendering of the dense reconstructed point cloud of the UAV scene.

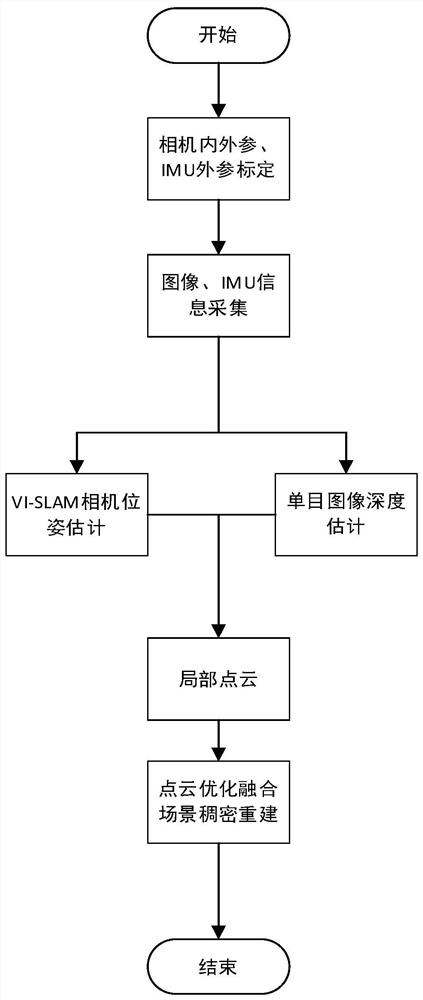

[0062] As shown in Figure 1, the steps of the UAV 3D reconstruction method based on VI-SLAM and a depth estimation network of the present invention are as follows:

[0063] (1) Fix the inertial measurement unit (IMU) to the UAV, and calibrate the intrinsic parameters and extrinsic parameters of the UAV's onboard monocular camera, as well as the extrinsic parameters of the IMU;

[0064] (2) Use the UAV's monocular camera and the IMU to collect an image sequence and IMU data of the UAV scene;

[0065] (3) Use VI-SLAM to process the...
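To illustrate what the calibration in step (1) provides to the later steps, the following is a minimal sketch, not the patented implementation. It assumes a pinhole camera model and an IMU-to-camera convention of p_cam = R * p_imu + t; the class and function names (`Intrinsics`, `Extrinsics`, `imu_to_camera`, `project`) and all numeric values are hypothetical and do not come from the source.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Intrinsics:
    """Pinhole intrinsics (hypothetical container, not from the patent)."""
    fx: float  # focal length in pixels, x axis
    fy: float  # focal length in pixels, y axis
    cx: float  # principal point, x coordinate
    cy: float  # principal point, y coordinate


@dataclass
class Extrinsics:
    """Rigid transform from the IMU frame to the camera frame (assumed convention)."""
    rotation: List[List[float]]  # 3x3 rotation matrix, row-major
    translation: Vec3            # translation in metres


def imu_to_camera(point_imu: Vec3, ext: Extrinsics) -> Vec3:
    """Apply p_cam = R * p_imu + t to a 3D point expressed in the IMU frame."""
    r, t = ext.rotation, ext.translation
    x, y, z = (
        sum(r[i][j] * point_imu[j] for j in range(3)) + t[i] for i in range(3)
    )
    return (x, y, z)


def project(point_cam: Vec3, k: Intrinsics) -> Tuple[float, float]:
    """Project a 3D point in the camera frame onto the image plane."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is not in front of the camera")
    return (k.fx * x / z + k.cx, k.fy * y / z + k.cy)


# Illustrative values only; real values come from the calibration procedure.
example_k = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
example_ext = Extrinsics(
    rotation=[[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]],
    translation=(0.5, 0.0, 0.0),
)
example_pixel = project(imu_to_camera((0.5, 0.0, 2.0), example_ext), example_k)
```

With these two parameter sets, a point known in the IMU frame can be related to a pixel in the image, which is the link a visual-inertial pipeline needs between the two sensors. Lens distortion is deliberately left out of this sketch.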
