Distributed task processing method, device, equipment, storage medium and system

A distributed task processing technique applied in the field of artificial intelligence and deep learning. It addresses the problem that a model training task can only be terminated, and improves the efficiency of task processing.


Examples

Embodiment 1

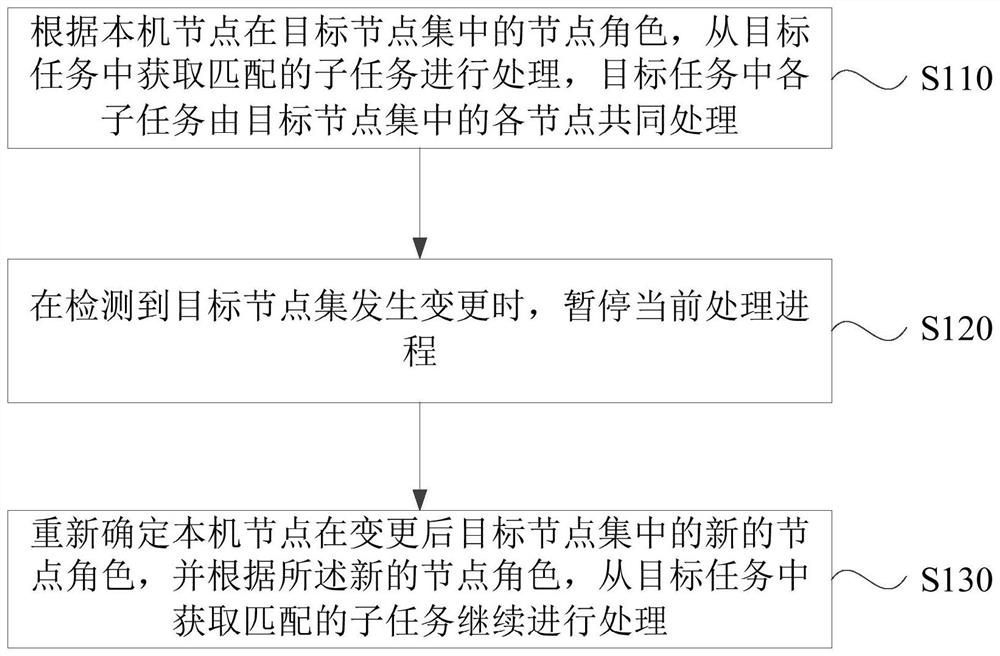

[0043] Figure 1 is a flowchart of a distributed task processing method in Embodiment 1 of the present invention. This embodiment applies to the case where a local node processes training tasks in a distributed training scenario. The method can be performed by a distributed task processing device, which may be implemented in software and/or hardware and integrated in the node.

[0044] As shown in Figure 1, the method includes:

[0045] S110. According to the node role of the local node in the target node set, obtain the matching subtasks from the target task and process them. The subtasks of the target task are processed jointly by the nodes in the target node set.

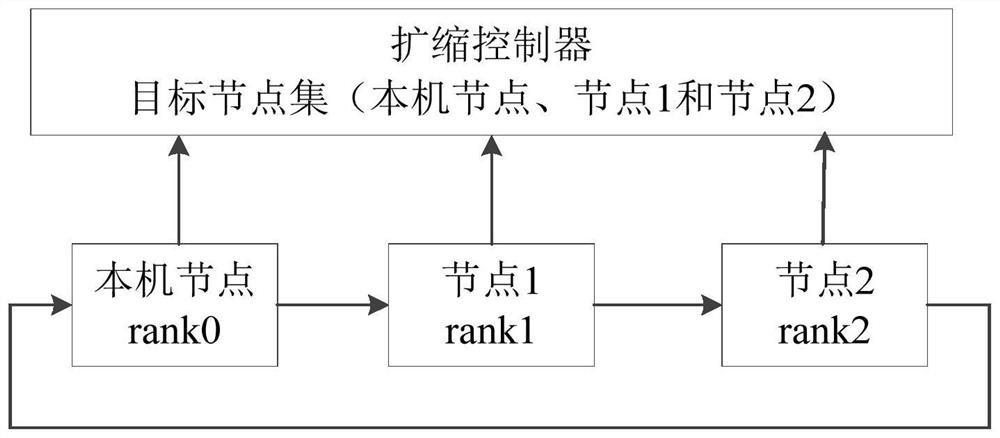

[0046] Here, the local node is a worker node in distributed training. The target node set includes multiple nodes, and every node in the same target node set processes the same target task. The node role indicates the position of the local node in the target node set, and the posi...
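To make step S110 concrete, the following is a minimal sketch of how a node might pick its subtasks from its role. It assumes the role is a zero-based integer position in the target node set and that subtasks are matched to roles round-robin. The visible text does not state the matching rule, so the function name `subtasks_for_role`, its parameters, and the round-robin rule are all hypothetical.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def subtasks_for_role(subtasks: Sequence[T], role: int, set_size: int) -> List[T]:
    """Return the subtasks handled by the node at position `role`.

    Assumption (not from the source): subtasks are dealt out round-robin,
    so the node at position r takes subtasks r, r + set_size, r + 2 * set_size, ...
    """
    if set_size <= 0:
        raise ValueError("set_size must be positive")
    if not 0 <= role < set_size:
        raise ValueError("role must satisfy 0 <= role < set_size")
    # Slicing with a step gives every set_size-th subtask, starting at `role`.
    return list(subtasks[role::set_size])


if __name__ == "__main__":
    shards = [f"shard-{i}" for i in range(7)]
    for role in range(3):
        print(role, subtasks_for_role(shards, role, 3))
```

Under this rule, every subtask belongs to exactly one role, so the nodes in the set jointly cover the whole target task without overlap.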

Embodiment 2

[0055] Figure 2a is a flowchart of a distributed task processing method in Embodiment 2 of the present invention. Building on the above embodiments, this embodiment further refines three processes: determining the node role of the local node in the target node set, processing the target task according to the node role of the local node, and detecting whether the target node set has changed. It also adds the processes of node registration, target node set generation, node shutdown, and node failure handling.

[0056] Correspondingly, the method of this embodiment may include:

[0057] S210. According to the node start instruction of the node controller, acquire the task ID of the target task, wherein all the nodes in the target node set of the target task share the same task ID.

[0058] Here, the node controller starts and shuts down nodes, and the node start instruction is used to instruct the node to start...
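As an illustration of step S210, the sketch below shows one way nodes that receive the same task ID could be grouped into a node set. It assumes the start instruction carries the task ID as a plain string and that nodes register themselves in a shared registry. The `StartInstruction` and `Registry` classes and their methods are hypothetical names introduced only for this example and are not described in the source.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class StartInstruction:
    """Hypothetical start instruction sent by the node controller."""
    task_id: str


class Registry:
    """Hypothetical in-memory registry that groups nodes by task ID."""

    def __init__(self) -> None:
        self._members: Dict[str, List[str]] = defaultdict(list)

    def register(self, node_name: str, instruction: StartInstruction) -> None:
        # The node reads the task ID from its start instruction and
        # registers under it; repeated registration is ignored.
        members = self._members[instruction.task_id]
        if node_name not in members:
            members.append(node_name)

    def node_set(self, task_id: str) -> List[str]:
        # All nodes sharing a task ID form one node set; sorting gives
        # every node the same view of the member order.
        return sorted(self._members.get(task_id, []))
```

Because every node in a set shares one task ID, that ID alone is enough to look up the other members of the set.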

Embodiment 3

[0106] Figure 3 is a flowchart of a distributed task processing method in Embodiment 3 of the present invention. This embodiment applies to the case where a node set is assigned to a training task in a distributed training scenario. The method can be performed by a distributed task processing device, which may be implemented in software and/or hardware and integrated into the node controller.

[0107] As shown in Figure 3, the method includes:

[0108] S310. Acquire a target task, where the target task includes multiple subtasks.

[0109] In the embodiment of the present invention, after acquiring the target task, the node controller allocates the target node set for the target task.

[0110] S320. According to the target task and currently available computing resources, create a target node set matching the target task, and assign the same task identifier to each node in the target node set.

[0111] In the embodiment of the present invention...
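Steps S310 and S320 can be sketched from the node controller's side as follows. The sketch assumes that "currently available computing resources" is a list of idle nodes and that the set size is the smaller of the subtask count and the idle node count. The source does not give a sizing rule, so that rule, the `Node` class, and `create_node_set` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    """Hypothetical worker node record kept by the node controller."""
    name: str
    task_id: Optional[str] = None


def create_node_set(task_id: str, num_subtasks: int, idle_nodes: List[Node]) -> List[Node]:
    """Pick nodes for a task and give each of them the same task ID.

    Assumption (not from the source): use at most one node per subtask,
    capped by the number of idle nodes.
    """
    if num_subtasks <= 0:
        raise ValueError("the target task must contain at least one subtask")
    size = min(num_subtasks, len(idle_nodes))
    if size == 0:
        raise RuntimeError("no idle nodes available")
    chosen = idle_nodes[:size]
    for node in chosen:
        # Every node in the target node set receives the same identifier.
        node.task_id = task_id
    return chosen
```

A call such as `create_node_set("t1", 2, nodes)` with three idle nodes would mark the first two with `"t1"` and leave the third unassigned.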
