A distributed model training system and application method

This distributed model training technology, applied in the field of distributed model training systems, addresses problems such as GPU performance not being fully utilized and limited SSD read and write speed.


Embodiment Construction

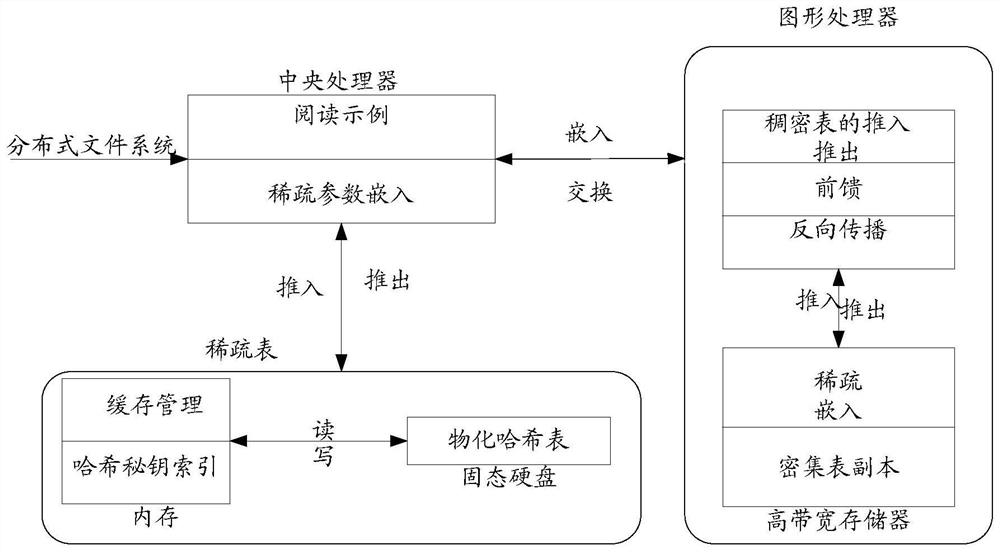

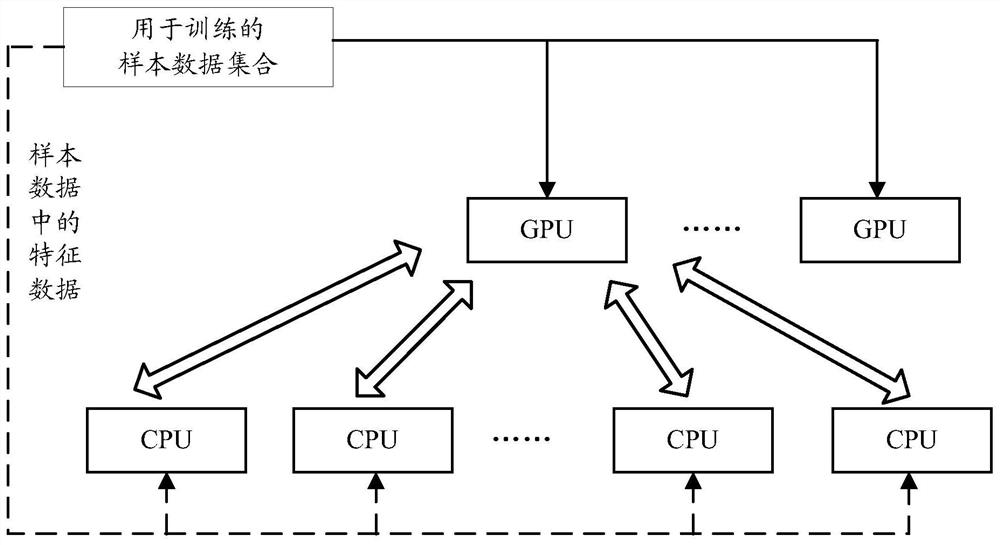

[0050] To make full use of the computing performance of the GPU and improve the efficiency of model training, the embodiments of the present disclosure use a distributed system for model training. Specifically, the model is split into two parts: one part is an embedding (Embedding) service model built on several CPUs, and the other part is a deep neural network built on several GPUs.

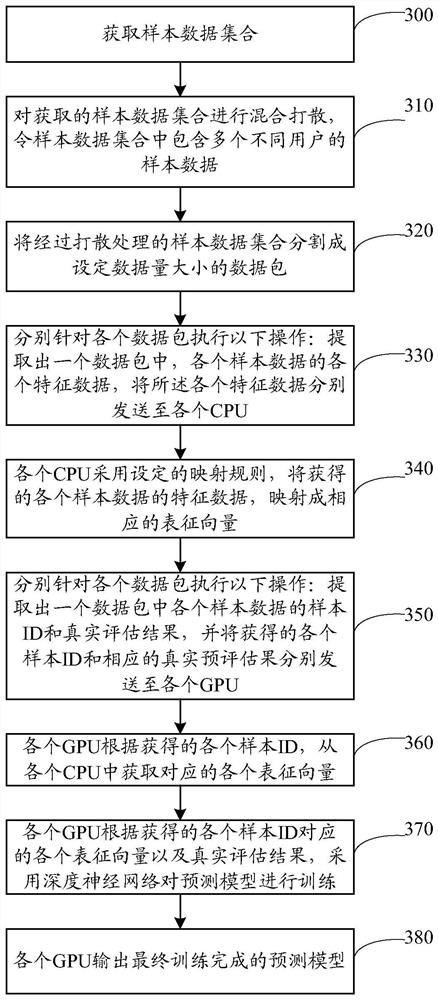

[0051] Referring to Figure 2, the Embedding service model built on several CPUs receives the feature data set from the sample data set used for training, maps the feature data into representation vectors, and sends them to the GPUs. The GPUs receive the sample IDs and real evaluation results from the sample data set and, combining these with the representation vectors obtained from the CPUs, train the prediction model based on the deep neural network until training is complete. During training, each time after the prediction model's parameter adju...
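The data flow in [0050] and [0051] can be illustrated with a minimal, single-process Python sketch. It is not the disclosed implementation. The names `EmbeddingService`, `DenseWorker`, `train`, and `SAMPLES` are hypothetical. A single logistic unit stands in for the deep neural network, no real CPU or GPU placement takes place, and averaging a sample's feature vectors and joining the two sides on the sample ID are assumptions made for illustration. The embedding table is left fixed, because the source text breaks off before describing what follows each parameter adjustment.

```python
import math
import random


class EmbeddingService:
    """Hypothetical stand-in for the CPU-side Embedding service."""

    def __init__(self, dim, seed=0):
        self.dim = dim
        self._rng = random.Random(seed)
        self._table = {}  # feature ID -> stored vector

    def lookup(self, feature_ids):
        """Map a sample's features to one representation vector.

        Assumption: the vector is the element-wise mean of the feature vectors.
        """
        vecs = []
        for fid in feature_ids:
            if fid not in self._table:
                self._table[fid] = [
                    self._rng.uniform(-0.1, 0.1) for _ in range(self.dim)
                ]
            vecs.append(self._table[fid])
        return [sum(col) / len(vecs) for col in zip(*vecs)]


class DenseWorker:
    """Hypothetical stand-in for the GPU-side network (one logistic unit)."""

    def __init__(self, dim, lr=0.5):
        self.w = [0.0] * dim
        self.b = 0.0
        self.lr = lr

    def predict(self, vec):
        z = sum(wi * xi for wi, xi in zip(self.w, vec)) + self.b
        return 1.0 / (1.0 + math.exp(-z))

    def train_step(self, label, vec):
        """Adjust parameters once; return the log loss measured before the update."""
        p = self.predict(vec)
        err = p - label
        self.w = [wi - self.lr * err * xi for wi, xi in zip(self.w, vec)]
        self.b -= self.lr * err
        eps = 1e-12
        return -(label * math.log(p + eps) + (1 - label) * math.log(1 - p + eps))


def train(samples, epochs=200, dim=4):
    cpu = EmbeddingService(dim)
    gpu = DenseWorker(dim)
    # Split each sample: features go to the CPU side,
    # sample ID and real evaluation result (label) go to the GPU side.
    features = {sid: fids for sid, fids, _ in samples}
    labels = {sid: y for sid, _, y in samples}
    history = []
    for _ in range(epochs):
        # CPU side: map feature data to representation vectors.
        vectors = {sid: cpu.lookup(fids) for sid, fids in features.items()}
        total = 0.0
        # GPU side: join vectors with labels by sample ID and train.
        for sid, label in labels.items():
            total += gpu.train_step(label, vectors[sid])
        history.append(total / len(labels))
    return cpu, gpu, history


# Illustrative data only: (sample ID, feature IDs, real evaluation result).
SAMPLES = [
    ("s1", ["user:1", "item:a"], 1.0),
    ("s2", ["user:2", "item:b"], 0.0),
]
```

Calling `train(SAMPLES)` returns the two stand-in components and the mean loss per epoch. In a real deployment the two classes would run as separate services, and the vectors would travel over the network rather than through a dictionary.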
