Image retrieval method based on feature fusion

An image retrieval technology based on feature fusion, applied in still image data retrieval, image coding, image data processing, etc. It addresses the lack of local information in image representations, and achieves reduced storage cost and strong discriminative power.

Examples

Embodiment 1

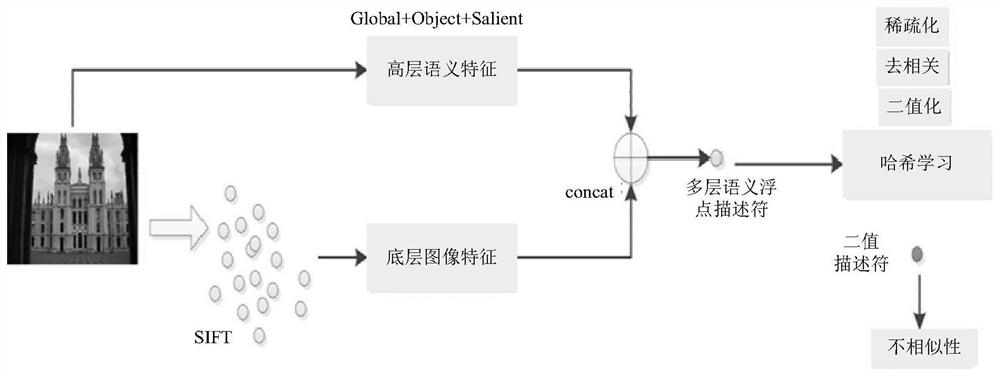

[0058] An image retrieval method based on feature fusion, as shown in Figure 1, comprising:

[0059] Model training step: build a convolutional neural network for extracting image features, and train it on a training image set to obtain a feature extraction network;
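The model training step does not state how the trained network's output is turned into a fixed-length feature vector. The following is a minimal sketch, assuming the last convolutional feature map is reduced by global average pooling and then L2-normalized (a common choice, not confirmed by the source). The nested-list layout and the helper names `global_average_pool`, `l2_normalize` and `cnn_vector` are hypothetical.

```python
import math
from typing import List

# Hypothetical layout: feature_map[channel][row][col], standing in for the
# output of the last convolutional layer of the trained network.
FeatureMap = List[List[List[float]]]


def global_average_pool(feature_map: FeatureMap) -> List[float]:
    """Average each channel over all spatial positions (one value per channel)."""
    pooled = []
    for channel in feature_map:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


def l2_normalize(vec: List[float]) -> List[float]:
    """Scale a vector to unit Euclidean length; zero vectors are returned unchanged."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)


def cnn_vector(feature_map: FeatureMap) -> List[float]:
    """Hypothetical helper: pooled, unit-length vector from a conv feature map."""
    return l2_normalize(global_average_pool(feature_map))


if __name__ == "__main__":
    # Toy 2-channel, 2x2 feature map in place of real network output.
    fmap = [
        [[1.0, 3.0], [5.0, 7.0]],  # channel 0, mean 4.0
        [[0.0, 2.0], [2.0, 4.0]],  # channel 1, mean 2.0
    ]
    print(global_average_pool(fmap))  # [4.0, 2.0]
    print(cnn_vector(fmap))
```

Pooling removes the dependence on input image size, so every image yields a vector of the same length (one entry per channel).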

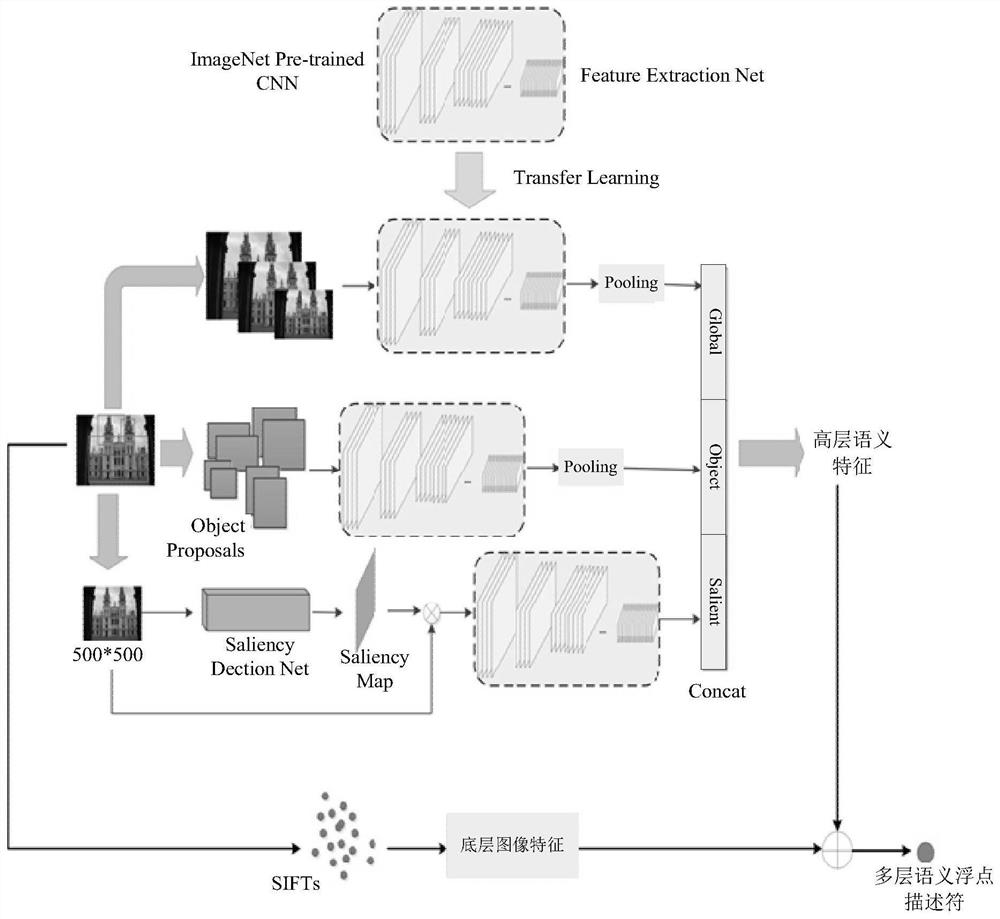

[0060] Multi-layer semantic floating-point descriptor construction step: extract at least one high-level semantic feature and at least one low-level image feature of the image, and fuse them to obtain the multi-layer semantic floating-point descriptor of the image. As shown in Figure 1 and Figure 2, in this embodiment the high-level semantic features include a global descriptor (Global), an object descriptor (Object) and a salient region descriptor (Salient), and the low-level image features include a SIFT descriptor, wherein the global descriptor describes the global features of the image, the object descriptor describes the object inf...
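The fusion rule itself is cut off in the text above. The sketch below shows one plausible way to fuse the Global, Object, Salient and SIFT descriptors into a single floating-point descriptor and use it for retrieval, under stated assumptions: the SIFT local descriptors are mean-pooled into one vector (a stand-in for schemes such as bag of visual words or VLAD), each part is L2-normalized, optionally weighted, and concatenated, and database images are ranked by cosine similarity. None of these choices is confirmed by the source; `aggregate_local`, `fuse` and `rank` are hypothetical names.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Vector = List[float]


def l2_normalize(vec: Sequence[float]) -> Vector:
    """Scale a vector to unit length; zero vectors are returned unchanged."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)


def aggregate_local(local_descs: Sequence[Sequence[float]]) -> Vector:
    """Mean-pool local descriptors (e.g. 128-D SIFT vectors) into one vector.

    Assumption: mean pooling stands in for whatever aggregation the method
    actually uses; the source does not say.
    """
    dim = len(local_descs[0])
    n = len(local_descs)
    return [sum(d[i] for d in local_descs) / n for i in range(dim)]


def fuse(parts: Dict[str, Sequence[float]],
         weights: Optional[Dict[str, float]] = None) -> Vector:
    """Fuse named descriptors into one floating-point descriptor.

    Each part is L2-normalized so no single descriptor dominates, optionally
    weighted, concatenated in a fixed (sorted-name) order, then renormalized.
    """
    weights = weights or {}
    fused: Vector = []
    for name in sorted(parts):
        w = weights.get(name, 1.0)
        fused.extend(w * v for v in l2_normalize(parts[name]))
    return l2_normalize(fused)


def rank(query: Vector, database: Dict[str, Vector]) -> List[Tuple[str, float]]:
    """Rank database images by cosine similarity (dot product of unit vectors)."""
    scores = [(img_id, sum(q * d for q, d in zip(query, vec)))
              for img_id, vec in database.items()]
    return sorted(scores, key=lambda s: s[1], reverse=True)


if __name__ == "__main__":
    # Toy 2-D vectors in place of real Global/Object/Salient/SIFT outputs.
    def describe(g, o, s, sift_locals):
        return fuse({"global": g, "object": o, "salient": s,
                     "sift": aggregate_local(sift_locals)})

    db = {
        "img_a": describe([1.0, 0.0], [0.9, 0.1], [1.0, 0.2], [[1.0, 0.0], [0.8, 0.2]]),
        "img_b": describe([0.0, 1.0], [0.1, 0.9], [0.2, 1.0], [[0.0, 1.0], [0.2, 0.8]]),
    }
    query = describe([0.95, 0.05], [1.0, 0.0], [0.9, 0.1], [[1.0, 0.1]])
    print(rank(query, db))  # img_a ranks first
```

Normalizing each part before concatenation keeps a high-dimensional or large-magnitude descriptor from outweighing the others; the optional weights are a hypothetical knob for trading high-level semantics against low-level detail.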

Embodiment 2

[0128] An image retrieval method based on feature fusion. This embodiment is similar to Embodiment 1 above; the difference is that in this embodiment the high-level semantic features include global descriptors and object descriptors, and the low-level image features include SIFT descriptors.

[0129] For the specific implementation of this embodiment, reference may be made to the description in Embodiment 1 above, which will not be repeated here.

Embodiment 3

[0131] An image retrieval method based on feature fusion. This embodiment is similar to Embodiment 1 above; the difference is that in this embodiment the high-level semantic features include global descriptors, and the low-level image features include SIFT descriptors.

[0132] For the specific implementation of this embodiment, reference may be made to the description in Embodiment 1 above, which will not be repeated here.
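The three embodiments differ only in which descriptors are fused. The sketch below records those component sets as listed in the text and computes the resulting descriptor length, assuming fusion by concatenation (not confirmed by the source). The per-component dimensions in the usage example are hypothetical and chosen only for illustration; `EMBODIMENT_COMPONENTS`, `select_components` and `fused_length` are hypothetical names.

```python
from typing import Dict, List, Sequence

# Descriptor components fused in each embodiment, as listed in the text.
EMBODIMENT_COMPONENTS: Dict[int, List[str]] = {
    1: ["global", "object", "salient", "sift"],
    2: ["global", "object", "sift"],
    3: ["global", "sift"],
}


def select_components(embodiment: int,
                      descriptors: Dict[str, Sequence[float]]) -> Dict[str, Sequence[float]]:
    """Keep only the descriptors that the given embodiment fuses."""
    names = EMBODIMENT_COMPONENTS[embodiment]
    missing = [n for n in names if n not in descriptors]
    if missing:
        raise KeyError(f"missing descriptors: {missing}")
    return {n: descriptors[n] for n in names}


def fused_length(embodiment: int, dims: Dict[str, int]) -> int:
    """Length of the fused descriptor, assuming fusion by concatenation."""
    return sum(dims[n] for n in EMBODIMENT_COMPONENTS[embodiment])


if __name__ == "__main__":
    # Hypothetical per-component lengths, for illustration only.
    dims = {"global": 512, "object": 512, "salient": 512, "sift": 128}
    for emb in sorted(EMBODIMENT_COMPONENTS):
        print(emb, fused_length(emb, dims))  # 1 1664, then 2 1152, then 3 640
```

Under the concatenation assumption, each component dropped from Embodiment 1 shortens the stored descriptor, at the cost of the information that component carried.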

© 2025 PatSnap. All rights reserved.