A Self-learning Emotional Interaction Method Based on Multimodal Recognition

An interaction method based on multimodal recognition, applied in the field of human-computer interaction. It addresses problems such as insufficient interactive ability, improves the interactive experience, and provides human-like self-learning and self-adaptive ability.


Embodiment

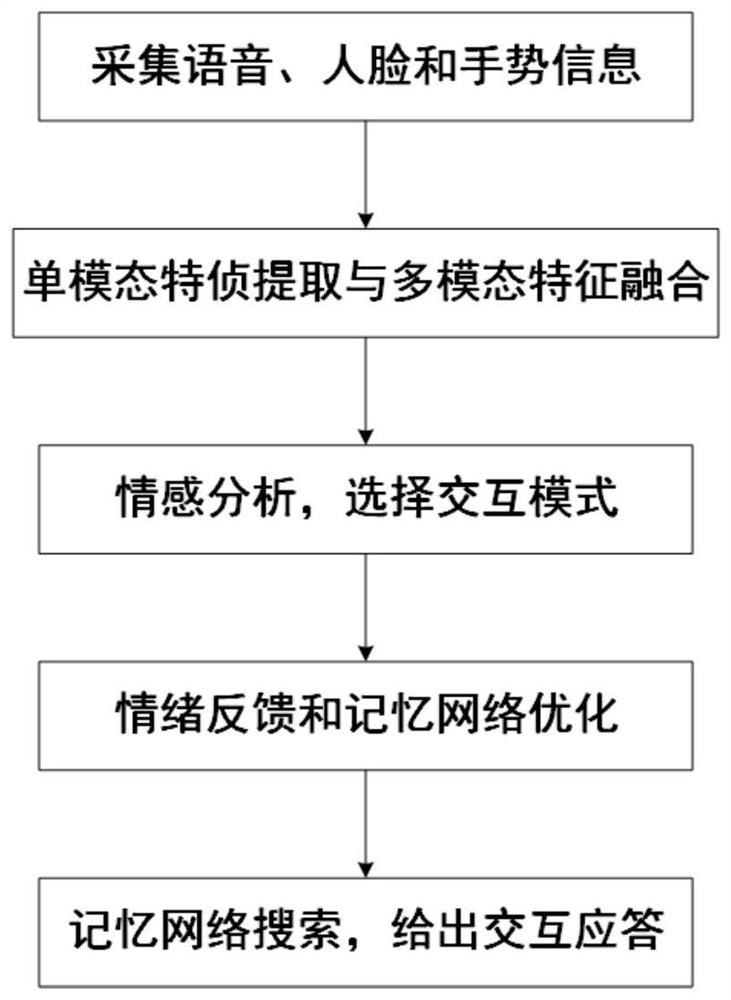

[0068] This embodiment discloses a self-learning emotion interaction method based on multimodal recognition which, as shown in Figure 1, comprises the following steps:

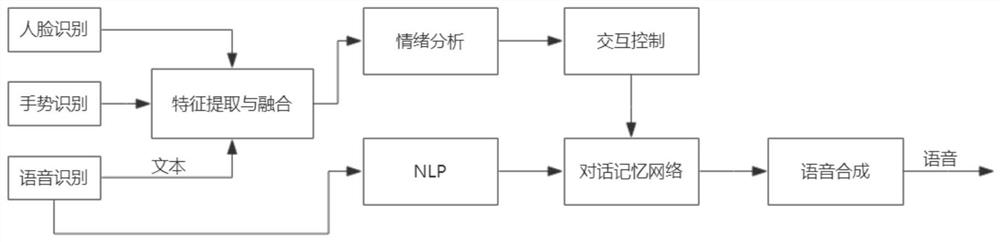

[0069] S1. Collect voice information through a microphone array, and face and gesture information through a camera, both of which are non-contact channels. As shown in the left half of Figure 2, the technologies used are face recognition, speech recognition, and gesture recognition: face recognition converts face image signals into face image information, speech recognition extracts voice information from voice signals, and gesture recognition converts gesture image signals into gesture information.
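Step S1 can be pictured as routing each raw channel to its own recognizer and bundling the results into one observation. The sketch below is only an illustration under stated assumptions: the recognizer functions, the feature names (`mean_intensity`, `rms_energy`, `motion_range`), the `MultimodalObservation` record, and the `collect` helper are all hypothetical stand-ins, not the patent's implementation, and real recognizers would wrap face-detection, speech-recognition, and hand-pose models.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


# Hypothetical stand-ins for the three recognizers named in S1. Each one turns
# a raw signal (here just a list of numbers) into a small feature dictionary.
def face_recognition(face_image_signal: List[float]) -> Dict[str, float]:
    # Placeholder "face image information": mean pixel intensity.
    return {"mean_intensity": sum(face_image_signal) / len(face_image_signal)}


def speech_recognition(voice_signal: List[float]) -> Dict[str, float]:
    # Placeholder "voice information": root-mean-square energy of the samples.
    energy = (sum(x * x for x in voice_signal) / len(voice_signal)) ** 0.5
    return {"rms_energy": energy}


def gesture_recognition(gesture_image_signal: List[float]) -> Dict[str, float]:
    # Placeholder "gesture information": range of motion in the signal.
    return {"motion_range": max(gesture_image_signal) - min(gesture_image_signal)}


# Which recognizer handles which non-contact channel (hypothetical keys).
RECOGNIZERS: Dict[str, Callable[[List[float]], Dict[str, float]]] = {
    "face": face_recognition,        # camera
    "voice": speech_recognition,     # microphone array
    "gesture": gesture_recognition,  # camera
}


@dataclass
class MultimodalObservation:
    """All modality features captured at one time step."""
    timestamp: float
    features: Dict[str, Dict[str, float]] = field(default_factory=dict)


def collect(timestamp: float, raw_signals: Dict[str, List[float]]) -> MultimodalObservation:
    """Run every non-empty, known raw signal through its recognizer."""
    obs = MultimodalObservation(timestamp)
    for modality, signal in raw_signals.items():
        if modality in RECOGNIZERS and signal:
            obs.features[modality] = RECOGNIZERS[modality](signal)
    return obs
```

For example, `collect(0.0, {"voice": [3.0, -4.0], "gesture": [1.0, 5.0, 2.0]})` yields an observation with only `voice` and `gesture` entries. Skipping missing or empty channels keeps the downstream step usable when, say, no hand is in view.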

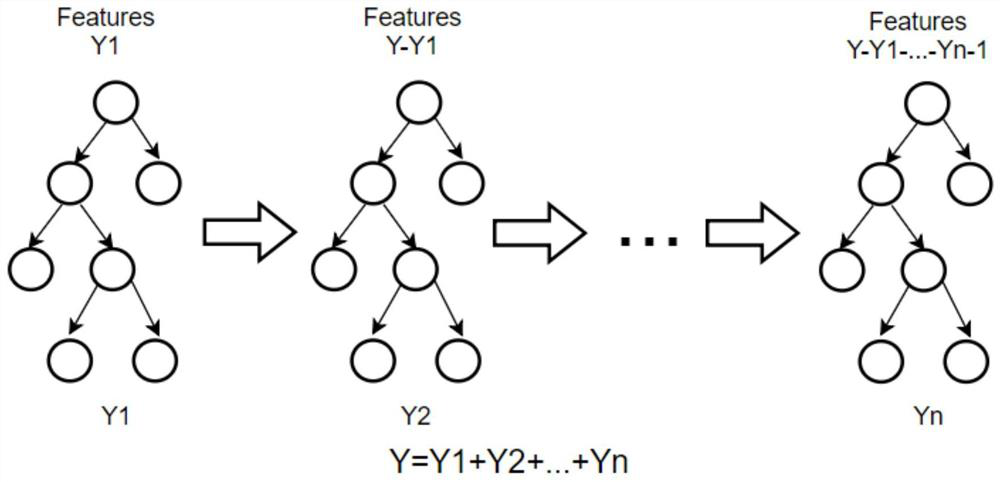

[0070] S2. Process the face image information, voice information, and gesture information through a multi-layer convolutional neural network. As shown in the right half of Figure 2, through emotion analysis technology and with the auxiliary processing of NLP, the speech em...
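The excerpt does not specify the network's architecture, so the following is only a minimal sketch of what "a multi-layer convolutional neural network" producing emotion scores could look like. It assumes each modality has already been turned into a short multi-channel feature sequence, and uses a 1-D simplification with two convolution layers, ReLU, max pooling, global average pooling, and a linear layer with softmax. The label set `EMOTIONS`, the layer sizes, the class `TinyEmotionCNN`, and the random untrained weights are all hypothetical; a real system would train the weights and would likely use 2-D convolutions on images.

```python
import math
import random
from typing import List

EMOTIONS = ["happy", "sad", "angry", "neutral"]  # hypothetical label set


def conv1d(x: List[List[float]], kernels: List[List[List[float]]], bias: List[float]) -> List[List[float]]:
    """Valid 1-D convolution. x: [in_ch][length]; kernels: [out_ch][in_ch][k]."""
    k = len(kernels[0][0])
    length = len(x[0]) - k + 1
    out = []
    for o, kern in enumerate(kernels):
        row = []
        for t in range(length):
            s = bias[o]
            for c, w in enumerate(kern):
                for j in range(k):
                    s += w[j] * x[c][t + j]
            row.append(s)
        out.append(row)
    return out


def relu(x: List[List[float]]) -> List[List[float]]:
    return [[max(0.0, v) for v in row] for row in x]


def max_pool(x: List[List[float]], size: int = 2) -> List[List[float]]:
    # Non-overlapping windows; a trailing partial window is dropped.
    return [[max(row[i:i + size]) for i in range(0, len(row) - size + 1, size)] for row in x]


def softmax(z: List[float]) -> List[float]:
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def random_kernels(out_ch: int, in_ch: int, k: int, rng: random.Random) -> List[List[List[float]]]:
    return [[[rng.uniform(-0.5, 0.5) for _ in range(k)] for _ in range(in_ch)] for _ in range(out_ch)]


class TinyEmotionCNN:
    """Two conv layers + global average pooling + linear layer (untrained)."""

    def __init__(self, in_ch: int, seed: int = 0):
        rng = random.Random(seed)
        self.k1, self.b1 = random_kernels(8, in_ch, 3, rng), [0.0] * 8
        self.k2, self.b2 = random_kernels(16, 8, 3, rng), [0.0] * 16
        self.w = [[rng.uniform(-0.5, 0.5) for _ in range(16)] for _ in EMOTIONS]
        self.b = [0.0] * len(EMOTIONS)

    def forward(self, x: List[List[float]]) -> List[float]:
        """x: [in_ch][length], length >= 8. Returns one probability per emotion."""
        h = max_pool(relu(conv1d(x, self.k1, self.b1)))  # layer 1
        h = relu(conv1d(h, self.k2, self.b2))             # layer 2
        pooled = [sum(row) / len(row) for row in h]       # global average pooling
        logits = [sum(wi * p for wi, p in zip(w_row, pooled)) + b
                  for w_row, b in zip(self.w, self.b)]
        return softmax(logits)
```

Usage: `probs = TinyEmotionCNN(in_ch=2).forward(features)` with a 2-channel sequence of at least 8 frames, then `EMOTIONS[probs.index(max(probs))]` gives the predicted label. Because the weights are random, the prediction only demonstrates the data flow; the numbers carry no meaning until the network is trained.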
