Ground-based cloud image classification method based on heterogeneous feature fusion network

A ground-based cloud image classification technique based on heterogeneous feature fusion, applied in the field of pattern recognition. It addresses the problems of human-eye cloud observation, which is time-consuming, unreliable, and costly, and achieves strong robustness, improved generalization ability, and good recognition results.


Embodiment Construction

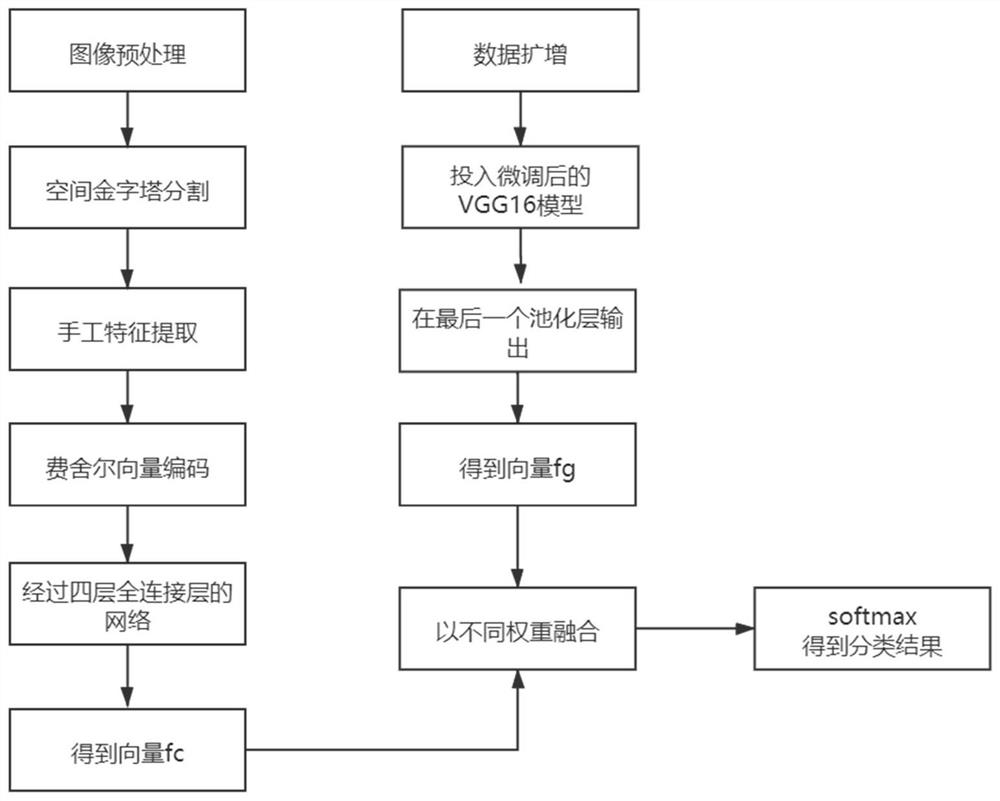

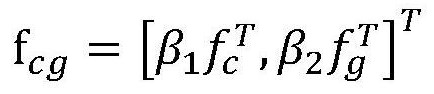

[0040] As shown in Figure 1, the ground-based cloud image classification method of the present invention is divided into two parts: the manual feature extraction process and the deep semantic feature extraction process. The manual feature extraction process first applies different preprocessing methods to images from different data sets, and then uses a spatial pyramid strategy to divide the images, in batches, into local regions of different sizes. It then extracts structural texture features (SIFT, local binary pattern, and gray-level co-occurrence matrix features) and color features based on the difference between the red and blue channels. The feature vectors are then transformed into Fisher vectors to solve the problem of inconsistent feature lengths of individual images. Finally, the feature vectors of all local regions of each image are concatenated to obtain the manual feature vector of each image, and the Fisher vector is put into ...
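The region-wise part of the manual feature extraction can be illustrated with a minimal sketch. It is not the patent's implementation: the pyramid layout (level l split into 2^l x 2^l cells), the basic 8-neighbour local binary pattern, the use of the mean red-minus-blue value as the color feature, and all function names are assumptions made for illustration. SIFT, gray-level co-occurrence matrix features, preprocessing, and Fisher vector encoding are omitted.

```python
from typing import List, Tuple

Channel = List[List[int]]          # 2-D image channel, values 0..255
Box = Tuple[int, int, int, int]    # (top, bottom, left, right), end-exclusive


def pyramid_regions(height: int, width: int, levels: int) -> List[Box]:
    """Boxes of a spatial pyramid; level l has 2**l x 2**l cells (assumed layout)."""
    boxes = []
    for level in range(levels):
        n = 2 ** level
        for i in range(n):
            for j in range(n):
                boxes.append((i * height // n, (i + 1) * height // n,
                              j * width // n, (j + 1) * width // n))
    return boxes


def lbp_histogram(gray: Channel, box: Box) -> List[float]:
    """Normalised 256-bin histogram of basic 8-neighbour LBP codes inside a box."""
    top, bottom, left, right = box
    h, w = len(gray), len(gray[0])
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    hist = [0.0] * 256
    count = 0
    # Skip the outermost image border so every pixel has eight neighbours.
    for y in range(max(top, 1), min(bottom, h - 1)):
        for x in range(max(left, 1), min(right, w - 1)):
            code = 0
            for bit, (dy, dx) in enumerate(offsets):
                if gray[y + dy][x + dx] >= gray[y][x]:
                    code |= 1 << bit
            hist[code] += 1
            count += 1
    return [v / count for v in hist] if count else hist


def red_blue_difference(red: Channel, blue: Channel, box: Box) -> float:
    """Mean of (red - blue) over a box; an assumed form of the color feature."""
    top, bottom, left, right = box
    total, n = 0, 0
    for y in range(top, bottom):
        for x in range(left, right):
            total += red[y][x] - blue[y][x]
            n += 1
    return total / n if n else 0.0


def handcrafted_vector(red: Channel, green: Channel, blue: Channel,
                       levels: int = 2) -> List[float]:
    """Concatenate per-region LBP histograms and red-blue differences."""
    h, w = len(red), len(red[0])
    gray = [[(red[y][x] + green[y][x] + blue[y][x]) // 3 for x in range(w)]
            for y in range(h)]
    features: List[float] = []
    for box in pyramid_regions(h, w, levels):
        features.extend(lbp_histogram(gray, box))
        features.append(red_blue_difference(red, blue, box))
    return features
```

With `levels=2` the sketch produces 1 + 4 = 5 regions, each contributing 257 values, so every image yields a vector of the same length. That is only true because these two features are fixed-length per region. A variable number of SIFT descriptors per image would not concatenate this way, which is the situation the Fisher vector step in the text is meant to handle.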
