Target detection method based on laser radar and image video fusion

A lidar and target detection technology, applied in image enhancement, image analysis, image data processing, etc. It addresses the problems of an increased false detection rate and decreased detection accuracy, with the effects of reducing the false detection rate, enhancing robustness, and improving detection accuracy.

Examples

Embodiment 1

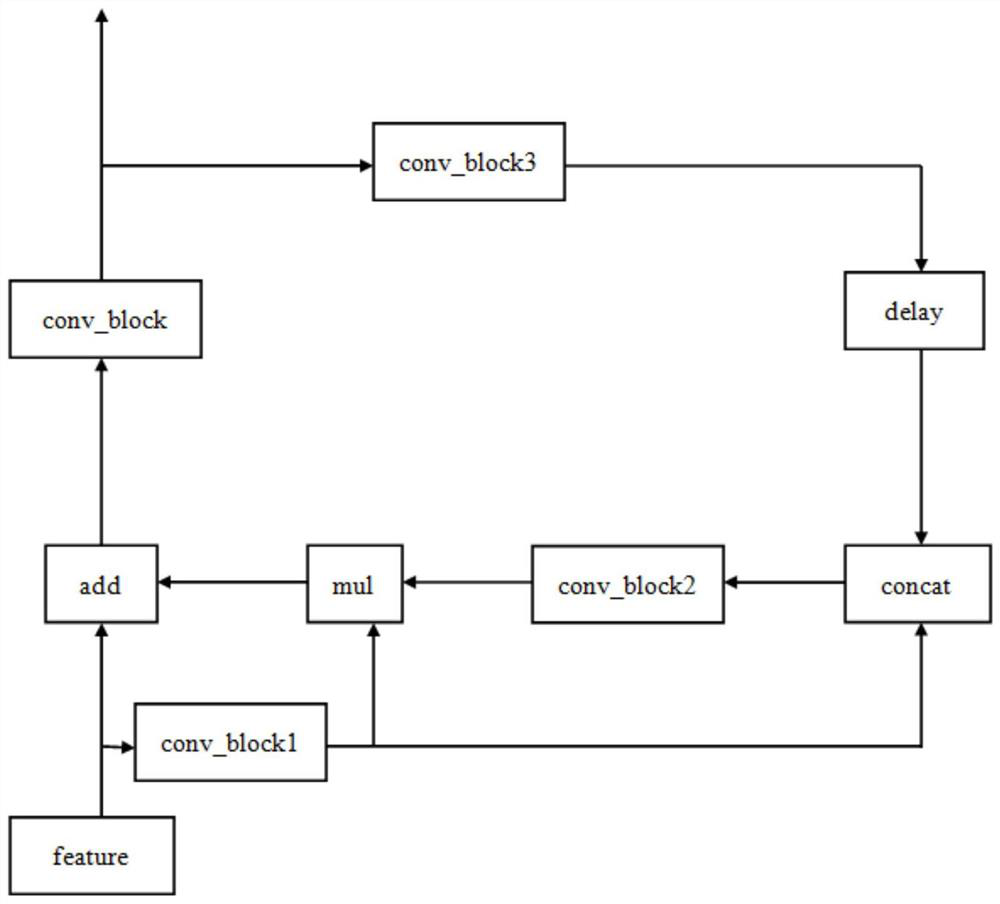

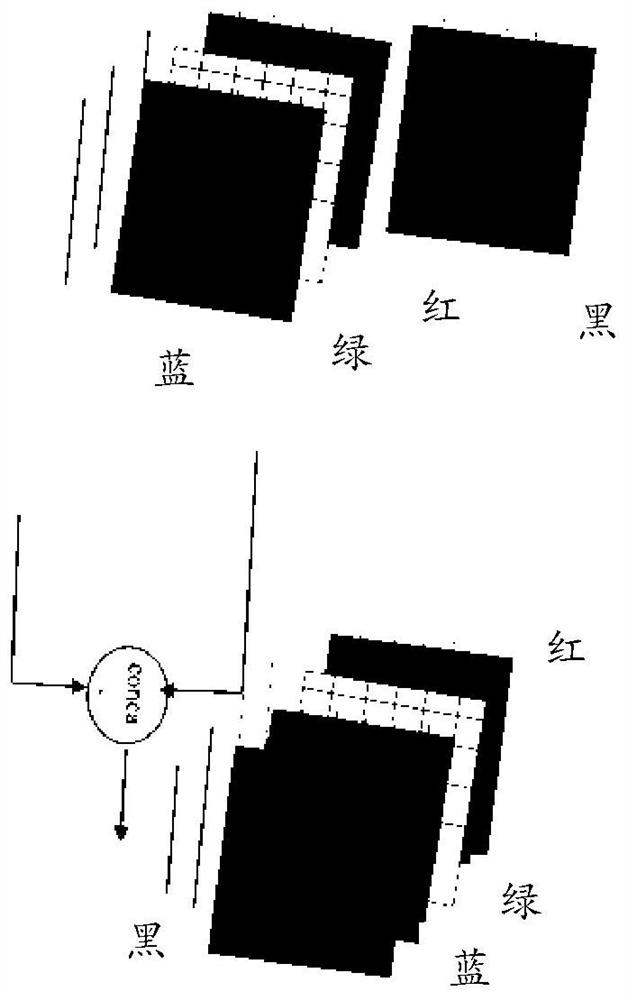

[0047] An embodiment of the lidar and image/video fusion target detection method of the present invention is shown in the attached Figure 1 and includes the following steps:

[0048] S1. Fix the relative position of the image sensor and the lidar, and ensure that the two sensors share a common field of view;

[0049] S2. The image sensor collects image and video data while the lidar collects 3D point cloud data, and the two acquisitions are kept synchronized in real time;

[0050] S3. Jointly calibrate the image/video data and the 3D point cloud data to obtain the mapping matrix T from the laser point cloud to the pixel plane;

[0051] S4. Read each frame of image data and point cloud data sequentially through the data interface in real time, run the detection algorithm, and compute the detection result by fusing the two data streams according to the mapping matrix T;

[0052] S5. Output the detection result.
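Steps S2 to S4 can be illustrated with a minimal pure-Python sketch. It assumes that T is a 3x4 projection matrix of the form K [R | t] (camera intrinsics times the lidar-to-camera extrinsics), so that a lidar point (x, y, z) maps to homogeneous pixel coordinates T (x, y, z, 1)^T = (u·w, v·w, w), where w is the depth along the camera axis. The function names, the nearest-timestamp pairing used for synchronization, and the fusion rule (keep a 2D detection box only if at least `min_points` projected lidar points fall inside it, and report their middle depth value) are hypothetical illustrations; the excerpt does not specify the patent's own fusion algorithm.

```python
from bisect import bisect_left

# Hypothetical sketch of steps S2-S4. Function names, thresholds and the
# fusion rule are illustrative assumptions, not the patent's algorithm.

def pair_by_timestamp(image_stamps, cloud_stamps, max_skew):
    """S2: pair each image frame with the lidar sweep nearest in time.

    Both lists hold timestamps in seconds, sorted ascending. Returns
    (image_index, cloud_index) pairs; frames with no sweep within
    max_skew seconds are dropped.
    """
    pairs = []
    for i, t in enumerate(image_stamps):
        j = bisect_left(cloud_stamps, t)
        # The nearest sweep is either just before or just after t.
        candidates = [k for k in (j - 1, j) if 0 <= k < len(cloud_stamps)]
        if not candidates:
            continue
        best = min(candidates, key=lambda k: abs(cloud_stamps[k] - t))
        if abs(cloud_stamps[best] - t) <= max_skew:
            pairs.append((i, best))
    return pairs


def project_points(T, points):
    """S3/S4: map lidar points (x, y, z) to pixels with a 3x4 matrix T.

    T is assumed to be K [R | t], so the third homogeneous coordinate w is
    the depth along the camera axis. Points with w <= 0 lie behind the
    camera and are discarded. Returns (u, v, depth) triples.
    """
    projected = []
    for x, y, z in points:
        uw, vw, w = (sum(r * p for r, p in zip(row, (x, y, z, 1.0))) for row in T)
        if w <= 0:
            continue
        projected.append((uw / w, vw / w, w))
    return projected


def fuse(detections, projected, min_points):
    """S4: keep a 2D box only if enough projected lidar points fall inside it.

    detections holds (u_min, v_min, u_max, v_max, score) boxes from any image
    detector. Returns (box, middle_depth) for each box with lidar support,
    where middle_depth is the middle value of the sorted in-box depths.
    """
    fused = []
    for box in detections:
        u0, v0, u1, v1, _score = box
        depths = sorted(d for u, v, d in projected if u0 <= u <= u1 and v0 <= v <= v1)
        if len(depths) >= min_points:
            fused.append((box, depths[len(depths) // 2]))
    return fused
```

For example, with T = [[500, 0, 320, 0], [0, 500, 240, 0], [0, 0, 1, 0]] (identity extrinsics), the point (0, 0, 10) projects to pixel (320, 240) at depth 10, and points with non-positive depth are dropped before fusion. Rejecting image detections that have too little lidar support is one simple way a fusion step could lower the false detection rate; a practical system would also need to handle lens distortion and occlusion.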

[0053] The sp...

Embodiment 2

[0072] The only difference from Embodiment 1 is the following. When the camera image suddenly goes out of focus because of motion, or when a dynamic target occupies a smaller area of the frame as it moves from near to far, a pre-judgment is made to determine whether the sudden blur or the shrinking target area is caused by movement of the lens or by movement of the photographed object. Specifically, a reference object is set in advance, and the method determines whether there is relative motion between the camera or the photographed object and the reference object: if there is relative motion between the camera and the reference object, the sudden blur or the smaller target area in the frame is caused by movement of the lens, and the camera is then adjusted to a static posture and held still; conversely, if there is relative motion between the captured ...
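The pre-judgment in Embodiment 2 can be sketched as follows, under assumptions the patent text does not state: the reference object is static in the scene, and axis-aligned pixel boxes (u0, v0, u1, v1) with non-zero area are available for both the reference object and the dynamic target in two consecutive frames. If the reference box shifts or rescales, the camera is taken to have moved; if the reference is steady but the target box moves or changes area, the object is taken to have moved. The thresholds `tol_px` and `tol_area` and all function names are hypothetical.

```python
import math

# Hypothetical sketch of the Embodiment 2 pre-judgment. Assumes the reference
# object is static in the scene and that pixel boxes (u0, v0, u1, v1) with
# non-zero area are available for the reference and the target in two frames.

def box_centre(box):
    """Centre (u, v) of an axis-aligned pixel box."""
    u0, v0, u1, v1 = box
    return ((u0 + u1) / 2.0, (v0 + v1) / 2.0)


def box_area(box):
    """Area of an axis-aligned pixel box, clamped at zero."""
    u0, v0, u1, v1 = box
    return max(0.0, u1 - u0) * max(0.0, v1 - v0)


def changed(prev, curr, tol_px, tol_area):
    """True if the box centre moved more than tol_px pixels or its area
    changed by more than the relative fraction tol_area."""
    shift = math.dist(box_centre(prev), box_centre(curr))
    scale = abs(box_area(curr) / box_area(prev) - 1.0)
    return shift > tol_px or scale > tol_area


def prejudge(ref_prev, ref_curr, tgt_prev, tgt_curr, tol_px=2.0, tol_area=0.05):
    """Attribute blur or a shrinking target to the lens ('lens'),
    the photographed object ('object'), or neither ('none')."""
    if changed(ref_prev, ref_curr, tol_px, tol_area):
        # A static reference that moves in the image implies camera motion;
        # per [0072] the camera is then held in a static posture.
        return "lens"
    # The reference is steady, so any change in the target box is attributed
    # to motion of the object itself.
    if changed(tgt_prev, tgt_curr, tol_px, tol_area):
        return "object"
    return "none"
```

Only the follow-up action for the "lens" case (holding the camera in a static posture) appears in the excerpt; the handling of the "object" case is truncated in the source and is not filled in here.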
