Target detection and motion state estimation method based on vision and laser radar

A vision and lidar (laser radar) technology for target detection and motion state estimation, applied in the field of intelligent vehicle environment perception. It addresses three problems: lidar lacks visual recognition capability, has low precision, and has a limited object detection range.


Detailed Description of the Embodiments

[0056] The technical solutions in the embodiments of the present invention will be described clearly and in detail below with reference to the drawings in the embodiments of the present invention. The described embodiments are only some of the embodiments of the invention.

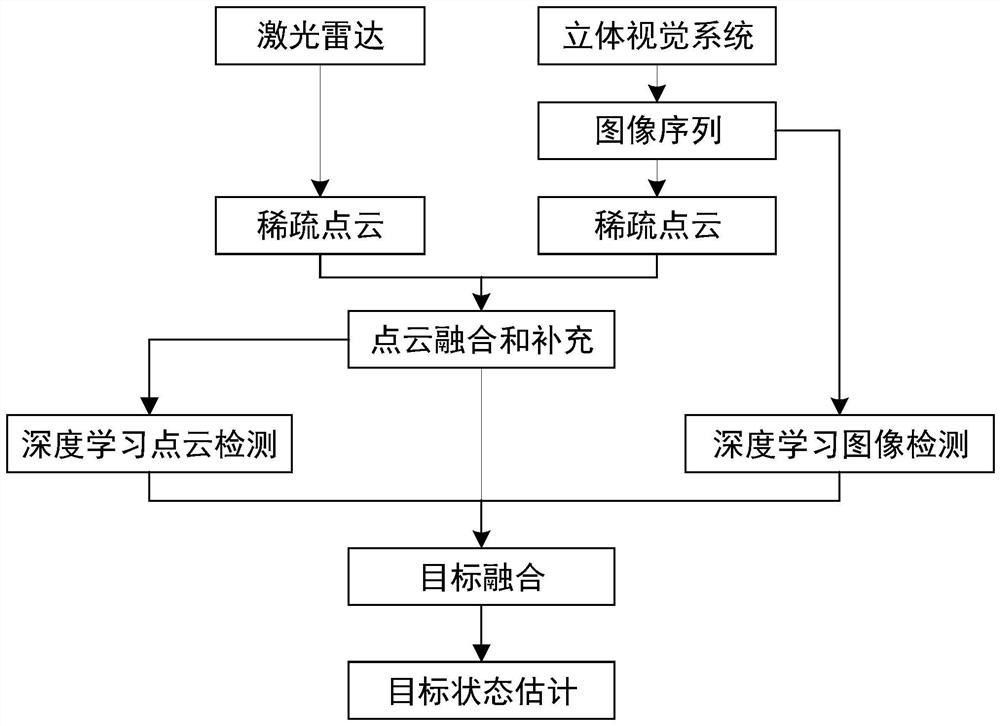

[0057] The technical solution by which the present invention solves the above technical problems is as follows:

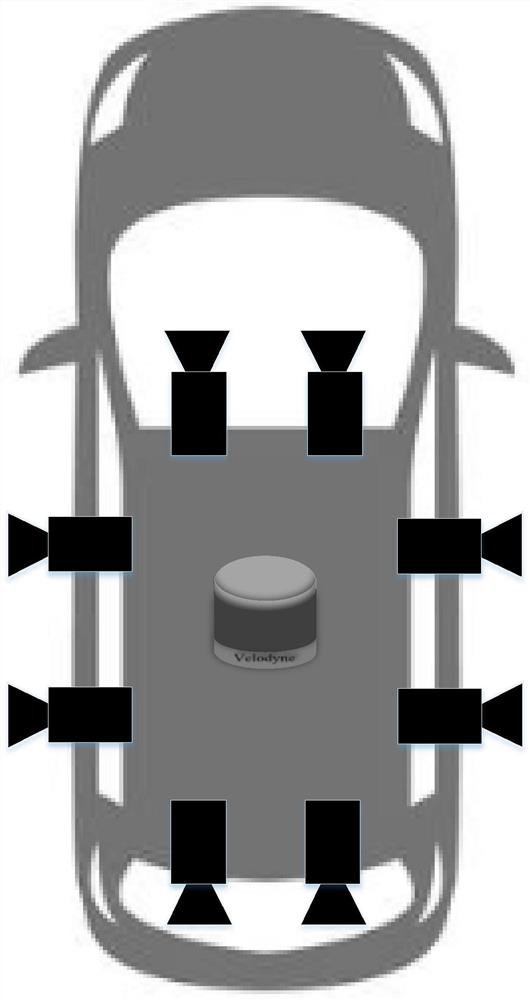

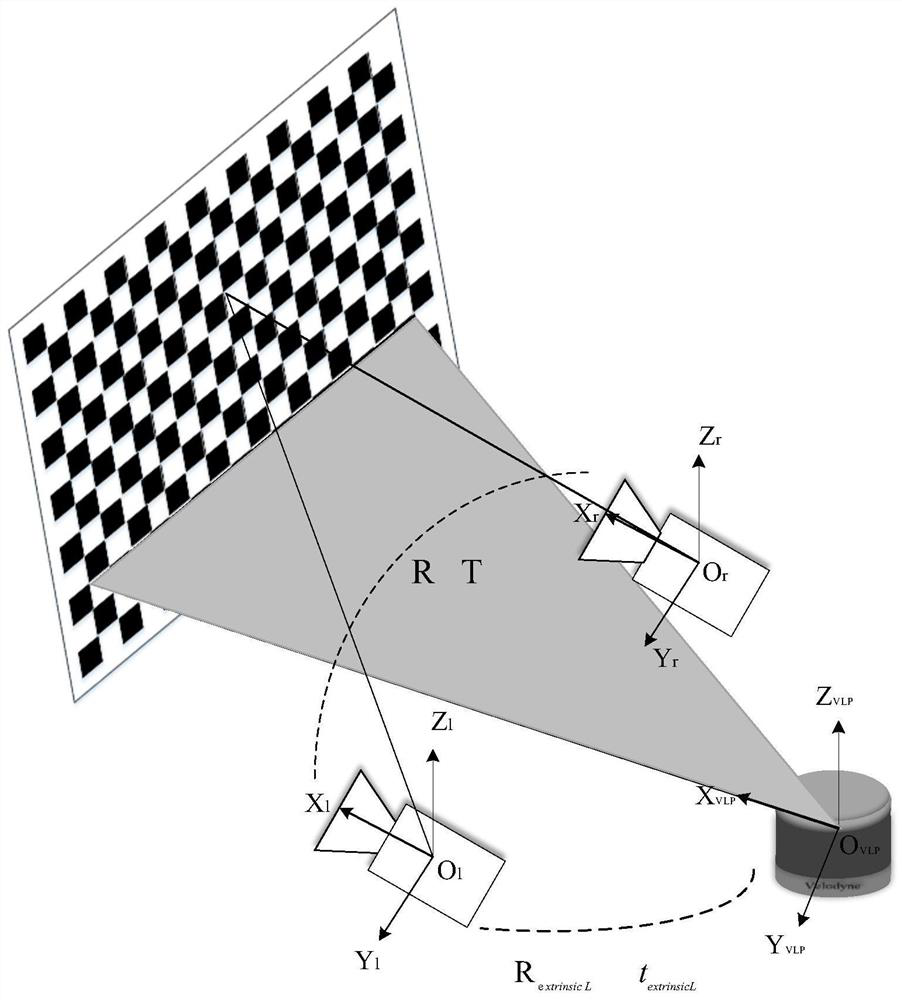

[0058] The object of the present invention is to provide a target detection and motion state estimation algorithm, based on vision and lidar, for targets such as vehicles and pedestrians under complex road conditions in mountainous areas. The system uses 8 cameras (2 each on the front, right, left, and rear sides of the car) and one lidar. It applies the principle of stereo vision together with lidar fusion to solve target detection and target motion state analysis on complex ro...
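This excerpt names the ingredients (stereo vision from paired cameras, fusion with lidar, motion state estimation) but gives no formulas. The Python below is a minimal sketch of the textbook version of each step, under stated assumptions: rectified camera pairs, a pinhole camera model, a known lidar-to-camera extrinsic `R`, `t`, and independent depth errors. It computes stereo depth Z = fB/d, projects a lidar point into the image, combines the two depth estimates by inverse-variance weighting, and fits a constant velocity to a track of positions. All function names, parameters, and numbers are hypothetical and do not come from the patent. The inverse-variance fusion and the least-squares velocity fit are generic stand-ins, not the claimed method.

```python
import math


def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth from a rectified stereo pair (assumed): Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def project_lidar_point(p_lidar, R, t, fx, fy, cx, cy):
    """Transform a lidar point into the camera frame (p_cam = R @ p + t),
    then project it with a pinhole model. Returns (u, v, depth), or None
    if the point lies behind the camera. R and t are hypothetical extrinsics."""
    x, y, z = (sum(R[i][j] * p_lidar[j] for j in range(3)) + t[i] for i in range(3))
    if z <= 0:
        return None
    return fx * x / z + cx, fy * y / z + cy, z


def fuse_depth(z_a, var_a, z_b, var_b):
    """Inverse-variance weighted average of two independent depth estimates.
    Returns the fused depth and its variance (a generic stand-in technique)."""
    w_a, w_b = 1.0 / var_a, 1.0 / var_b
    z = (w_a * z_a + w_b * z_b) / (w_a + w_b)
    return z, 1.0 / (w_a + w_b)


def estimate_velocity(times, positions):
    """Least-squares constant-velocity fit to a track of (x, y) positions.
    Returns (vx, vy): the slope of each coordinate against time."""
    n = len(times)
    t_mean = sum(times) / n
    denom = sum((t - t_mean) ** 2 for t in times)
    vel = []
    for axis in range(2):
        p_mean = sum(p[axis] for p in positions) / n
        num = sum((t - t_mean) * (p[axis] - p_mean) for t, p in zip(times, positions))
        vel.append(num / denom)
    return tuple(vel)


# Usage with made-up numbers: 700 px focal length, 0.5 m baseline, 10 px disparity.
z_stereo = stereo_depth(700.0, 0.5, 10.0)  # 35.0 m
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
uvz = project_lidar_point((2.0, 1.0, 35.0), identity, (0.0, 0.0, 0.0), 700.0, 700.0, 640.0, 360.0)
z_fused, var_fused = fuse_depth(z_stereo, 1.0, 34.0, 0.25)
vx, vy = estimate_velocity([0.0, 0.1, 0.2, 0.3], [(10.0, 0.0), (10.5, 0.1), (11.0, 0.2), (11.5, 0.3)])
speed = math.hypot(vx, vy)
print(z_stereo, uvz, z_fused, speed)
```

The identity extrinsic in the usage lines assumes the lidar frame is already aligned with the camera's optical frame; a real rig would need a calibrated `R` and `t`. Inverse-variance weighting is chosen here only because it is the simplest fusion rule that lets a low-variance lidar return dominate a stereo estimate whose error grows with range.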

