Video stabilization method based on iterative strategy of recurrent neural network

A technique combining recurrent neural networks and video stabilization, applied in the field of remote sensing image processing, that addresses the inability of existing methods to make good use of temporal information.


Embodiment Construction

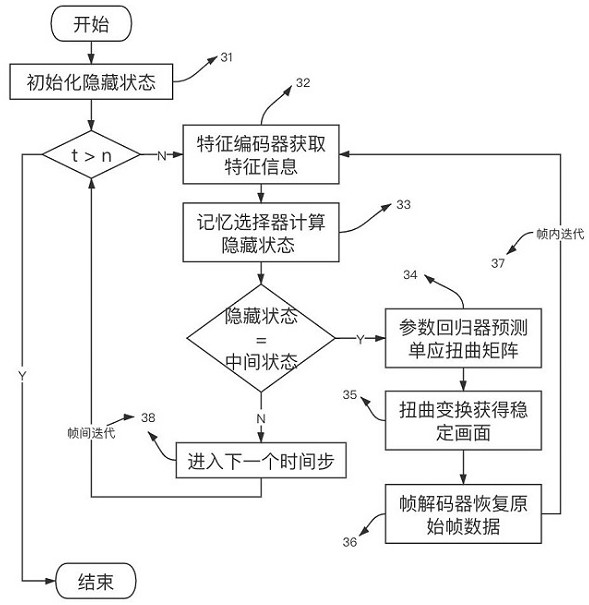

[0047] The present invention combines remote sensing image processing with deep learning to provide a video stabilization method based on an iterative strategy of a recurrent neural network, which stabilizes shaky image sequences and improves picture quality. The recurrent neural network can propagate the motion state between video frames across a long sequence and provide a reference for warping the current frame, making the stabilized picture more coherent and clear. The idea of the method is simple and clear: it avoids the unrealistic jitter artifacts caused by losing the temporal relationship between frames, and it updates the learned hidden state through the iterative strategy of the recurrent neural network, thereby effectively improving the stabilization effect.
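
As a rough illustration of how a hidden state carried from frame to frame can guide the correction of the current frame, the following minimal sketch may help. It is not the network described in this patent: the learned recurrent cell is replaced by a hand-written blending rule, and the names `recurrent_step`, `stabilize`, and `alpha`, as well as the reduction of camera motion to a 2D offset per frame, are all assumptions made for illustration only.

```python
from typing import List, Tuple

Vec = Tuple[float, float]  # hypothetical 2D camera offset (x, y) for one frame


def recurrent_step(hidden: Vec, motion: Vec, alpha: float = 0.8) -> Vec:
    """Toy stand-in for a learned recurrent cell (an assumption, not the patent's model).

    Blends the previous hidden state with the current frame's motion, so that
    information from earlier frames keeps influencing later ones.
    """
    return (
        alpha * hidden[0] + (1.0 - alpha) * motion[0],
        alpha * hidden[1] + (1.0 - alpha) * motion[1],
    )


def stabilize(camera_path: List[Vec], alpha: float = 0.8) -> List[Vec]:
    """Return one correction offset per frame.

    The hidden state is updated iteratively, frame by frame, and the
    correction moves each frame from its shaky position toward the
    position held in the hidden state.
    """
    if not camera_path:
        return []
    hidden = camera_path[0]  # initialise the state from the first frame
    corrections: List[Vec] = []
    for pos in camera_path:
        hidden = recurrent_step(hidden, pos, alpha)
        corrections.append((hidden[0] - pos[0], hidden[1] - pos[1]))
    return corrections


if __name__ == "__main__":
    shaky = [(0.0, 0.0), (2.0, -1.0), (-2.0, 1.0), (2.0, -1.0), (-2.0, 1.0)]
    for pos, corr in zip(shaky, stabilize(shaky)):
        print(pos, "->", (pos[0] + corr[0], pos[1] + corr[1]))
```

Because each correction depends on the hidden state rather than on the current frame alone, the corrected positions change far less from frame to frame than the input does. In a learned system the blending rule would presumably be replaced by trained recurrent weights, and the correction would be an image warp rather than a 2D offset.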

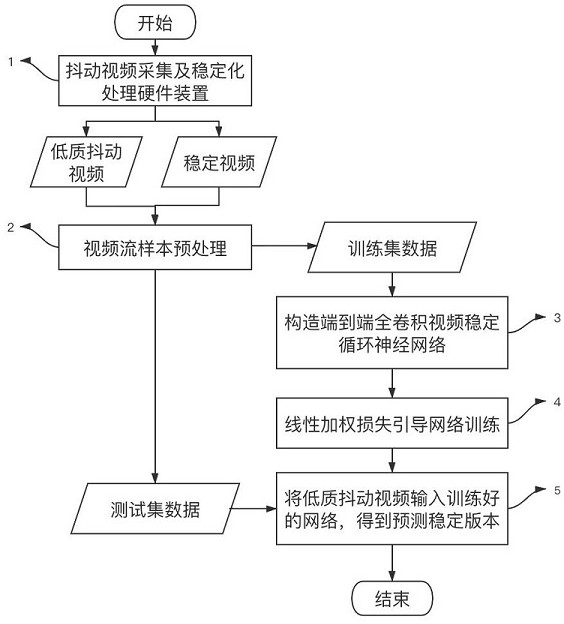

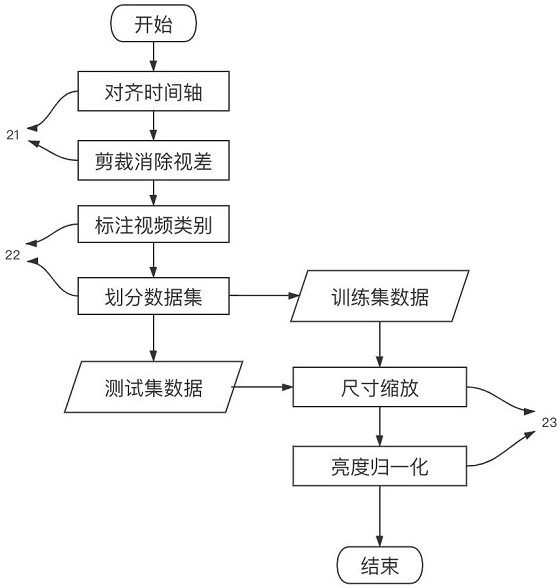

[0048] With reference to Figure 1, the main process steps of the method of the invention are detailed below:

[0049] Step 1: Use a jitter video acquisition and stabilization processing hardware device to obtain paired vi...
