Human body action recognition method

A human action recognition method based on action sequences, applied in the field of action recognition. It addresses problems such as the increased computational complexity of the learning model, the difficulty of traversing joint points in order, and slow practical application, and it achieves the effect of reducing the impact of data noise.

Embodiment 1

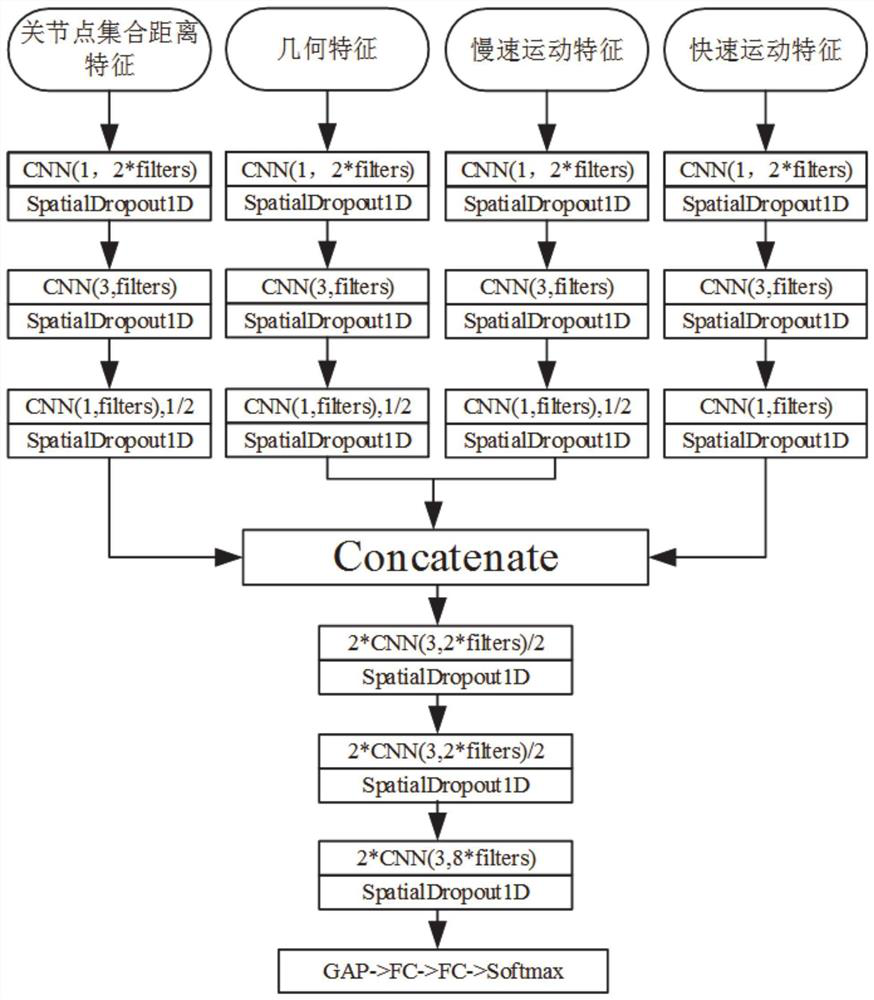

[0026] In a typical implementation of the present disclosure, as shown in Figure 1, a human action recognition method is proposed.

[0027] The method includes the following steps:

[0028] Obtain the coordinate data of the joint points, and construct the distance features, geometric features and motion features of the joint point set;

[0029] Model the spatio-temporal features of the action sequence from multiple perspectives, and use a one-dimensional temporal convolutional network to model the temporal information of the action sequence;

[0030] Classify and recognize human actions using the spatio-temporal features and the temporal information.
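The one-dimensional temporal convolution named in step [0029] can be illustrated with a minimal sketch. It assumes each frame has already been reduced to a fixed-length feature vector; the function name `temporal_conv1d`, the single output channel, and the absence of padding, stride, bias and activation are illustrative assumptions, not details from the disclosure. As in most deep learning frameworks, the "convolution" here is a sliding dot product (cross-correlation) along the time axis.

```python
from typing import List, Sequence


def temporal_conv1d(frames: Sequence[Sequence[float]],
                    kernel: Sequence[Sequence[float]]) -> List[float]:
    """Slide a kernel along the time axis of a feature sequence.

    frames: T frames, each a feature vector of length C.
    kernel: K taps, each a weight vector of length C (hypothetical weights).
    Returns T - K + 1 values (no padding, stride 1, one output channel).
    """
    t_len, k_len = len(frames), len(kernel)
    if k_len > t_len:
        raise ValueError("kernel is longer than the sequence")
    out = []
    for t in range(t_len - k_len + 1):
        acc = 0.0
        # Dot product between the kernel and the K frames starting at t.
        for k in range(k_len):
            acc += sum(w * x for w, x in zip(kernel[k], frames[t + k]))
        out.append(acc)
    return out


# Four frames with two features each; the kernel sums the first feature
# over two consecutive frames and ignores the second feature.
sequence = [[1.0, 0.0], [2.0, 0.0], [3.0, 0.0], [4.0, 0.0]]
summed = temporal_conv1d(sequence, [[1.0, 0.0], [1.0, 0.0]])
```

A trained network would stack several such layers with learned weights and many output channels; the sketch only shows how one kernel aggregates information from neighbouring frames.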

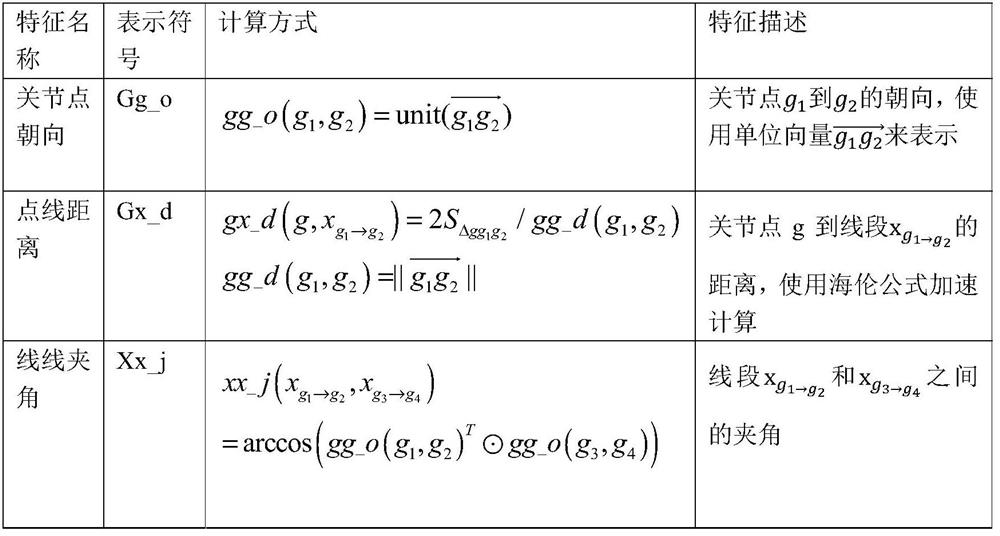

[0031] Specifically, the joint point-based human behavior feature representation includes joint point set distance feature representation, geometric feature representation and motion feature representation.

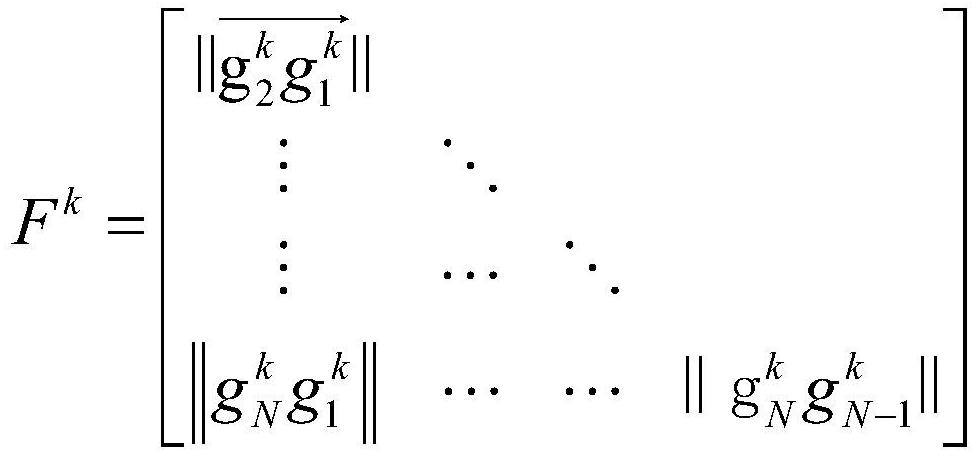

[0032] For the distance features of the joint point set:

[0033] First calculate the distance between two joint points to get a sy...
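The pairwise-distance step named in [0033] can be sketched as follows. The source text is truncated at this point, so the sketch covers only the distance computation itself. The function name `pairwise_joint_distances`, the use of Euclidean distance, and the choice to return one value per unordered pair are assumptions made for illustration.

```python
import math
from itertools import combinations
from typing import List, Sequence


def pairwise_joint_distances(joints: Sequence[Sequence[float]]) -> List[float]:
    """Euclidean distance for every unordered pair of joints in one frame.

    joints: N joint coordinates, for example (x, y, z) tuples.
    Returns N * (N - 1) / 2 distances, ordered as itertools.combinations
    yields the pairs: (0, 1), (0, 2), ..., (N - 2, N - 1).
    """
    return [math.dist(a, b) for a, b in combinations(joints, 2)]


# Three hypothetical joints in one frame.
frame = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (0.0, 0.0, 12.0)]
distances = pairwise_joint_distances(frame)
```

Because the distance from joint i to joint j equals the distance from j to i, computing each unordered pair once avoids storing redundant values.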
