Sparse matrix vector multiplication vectorization implementation method

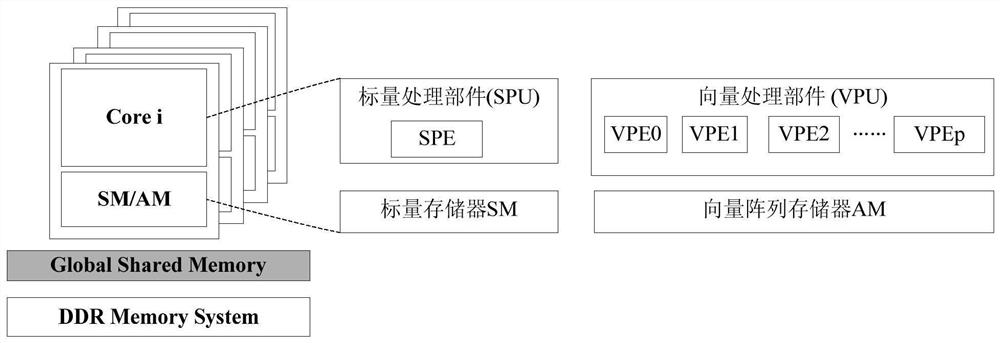

A sparse matrix-vector multiplication technique for multi-core vector processors. It addresses wasted computing resources and hardware overhead (large chip area, high power consumption, etc.). Its stated effects are fewer data reads, shorter computing time, and higher computing efficiency.

Embodiment Construction

[0046] The present invention will be further described below in conjunction with the accompanying drawings and specific preferred embodiments, but the protection scope of the present invention is not limited thereby.

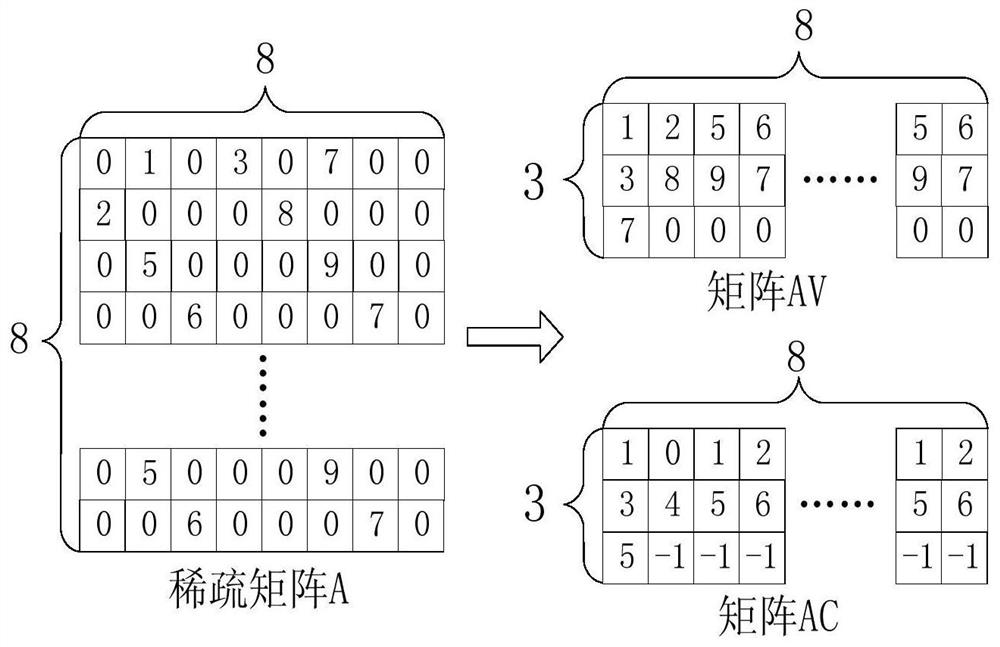

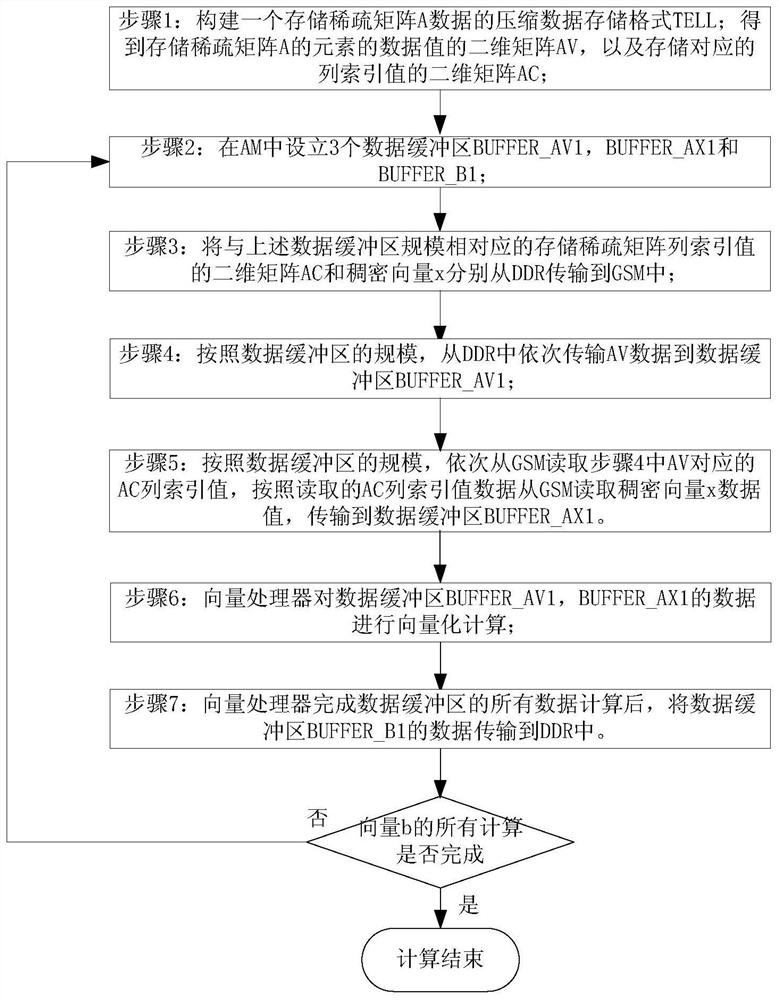

[0047] This embodiment assumes that the problem solved by the SPMV algorithm is Ax = b. Here A is the sparse matrix to be computed, with n rows and at most maxnonzeros non-zero elements per row. x is the dense vector to be computed, with n elements, and b is the result vector, also with n elements. As shown in image 3, the steps of the sparse matrix-vector multiplication vectorization method for multi-core vector processors in this embodiment are as follows:

[0048] Step 1. Store the data in the TELL (Transposed ELLPACK) format: construct the first matrix AV and the second matrix AC in DDR, read the data values of non-ze...
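The text breaks off before it says how AV and AC are filled. The Python sketch below therefore assumes the usual Transposed ELLPACK layout:

- AV[k][i] is the k-th non-zero value of row i.
- AC[k][i] is that value's column index.
- Rows with fewer than maxnonzeros non-zeros are padded with value 0 and column index 0.

Under that assumption the product is b[i] = sum over k of AV[k][i] * x[AC[k][i]]. For a fixed k, the n entries of AV[k] and AC[k] sit next to each other, so a vector unit can process many rows at once. The function names `to_tell` and `spmv_tell` are hypothetical. Plain Python loops stand in for the vector instructions and DDR transfers of the actual method.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def to_tell(a: Matrix) -> Tuple[Matrix, List[List[int]], int]:
    """Convert a dense n x n matrix into an assumed TELL layout.

    Hypothetical layout: av[k][i] is the k-th non-zero of row i and
    ac[k][i] is its column index. Short rows are padded with value 0.0
    and column index 0, so padded slots add nothing to the product.
    """
    n = len(a)
    # Collect (column, value) pairs for the non-zeros of each row.
    rows = [[(j, v) for j, v in enumerate(row) if v != 0] for row in a]
    maxnonzeros = max((len(r) for r in rows), default=0)
    av = [[0.0] * n for _ in range(maxnonzeros)]
    ac = [[0] * n for _ in range(maxnonzeros)]
    for i, r in enumerate(rows):
        for k, (j, v) in enumerate(r):
            av[k][i] = v  # transposed: slot k of every row forms row k of AV
            ac[k][i] = j
    return av, ac, maxnonzeros


def spmv_tell(av: Matrix, ac: List[List[int]], x: List[float],
              maxnonzeros: int) -> List[float]:
    """Compute b = A x from the assumed TELL arrays."""
    n = len(x)
    b = [0.0] * n
    for k in range(maxnonzeros):
        avk, ack = av[k], ac[k]
        # For fixed k, avk and ack are contiguous over all n rows; this
        # inner loop is the part a vector unit would run lane-parallel.
        for i in range(n):
            b[i] += avk[i] * x[ack[i]]
    return b


if __name__ == "__main__":
    A = [[4.0, 0.0, 1.0],
         [0.0, 3.0, 0.0],
         [2.0, 0.0, 5.0]]
    av, ac, m = to_tell(A)
    print(spmv_tell(av, ac, [1.0, 2.0, 3.0], m))  # [7.0, 6.0, 17.0]
```

Padding with column index 0 keeps every access to x in bounds. The trade-off is that padded slots still cost a multiply-add, which becomes significant when row lengths vary widely.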
