Neural network distributed training method for dynamically adjusting Batch-size

A neural network distributed training technique with dynamic Batch-size adjustment, applied in neural learning methods, biological neural network models, neural architectures, etc. It addresses problems such as computing power consumption and achieves the effects of reducing synchronization overhead and improving training efficiency.


Embodiment

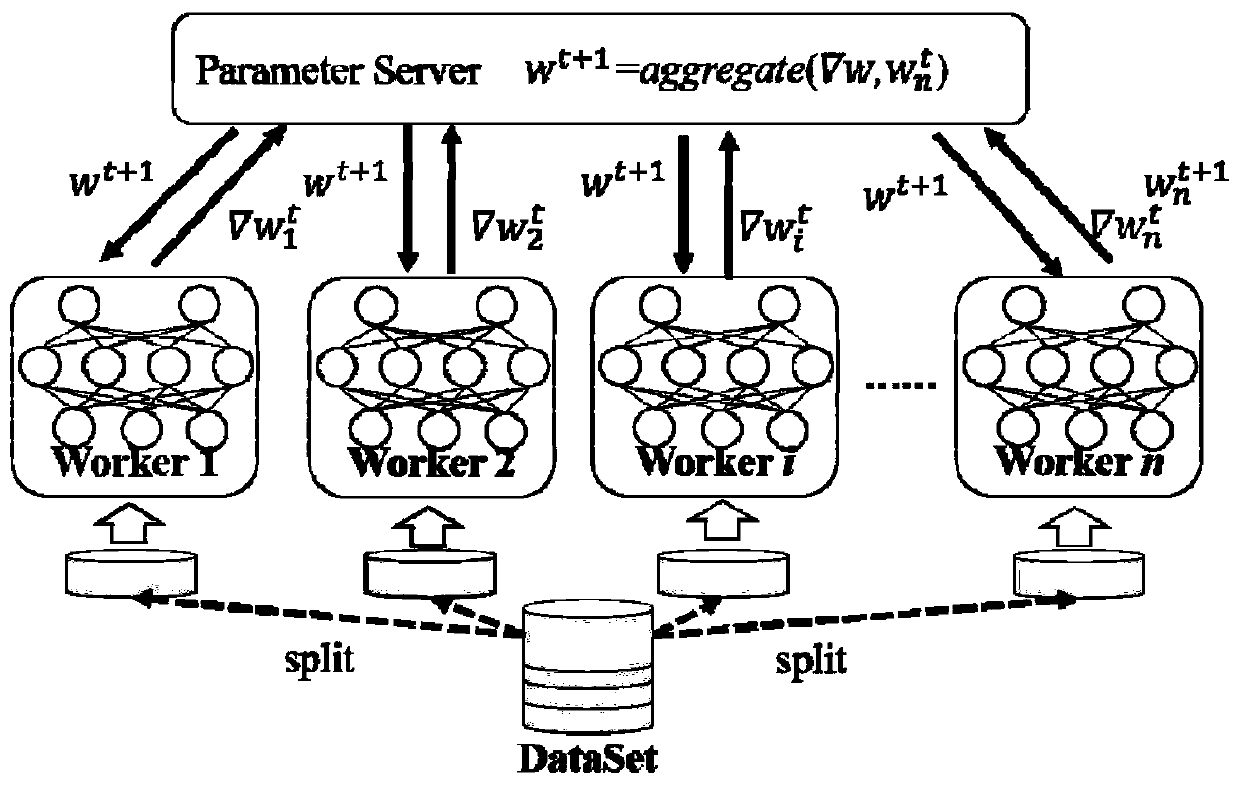

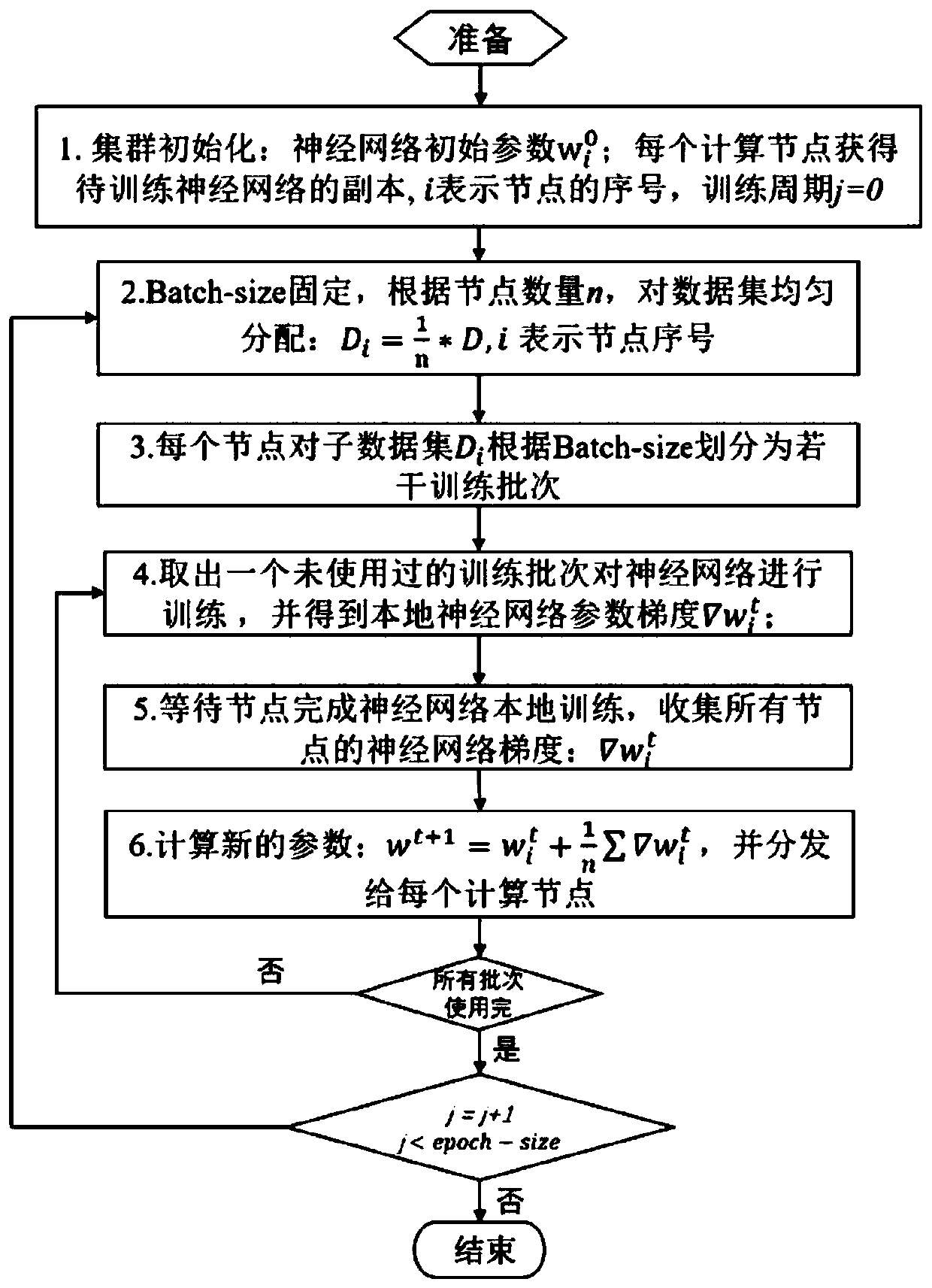

[0062] Referring to Figure 4, the neural network distributed training method with dynamic Batch-size adjustment of this embodiment comprises the following steps:

[0063] S1. Each computing node obtains the neural network with initialized parameters;

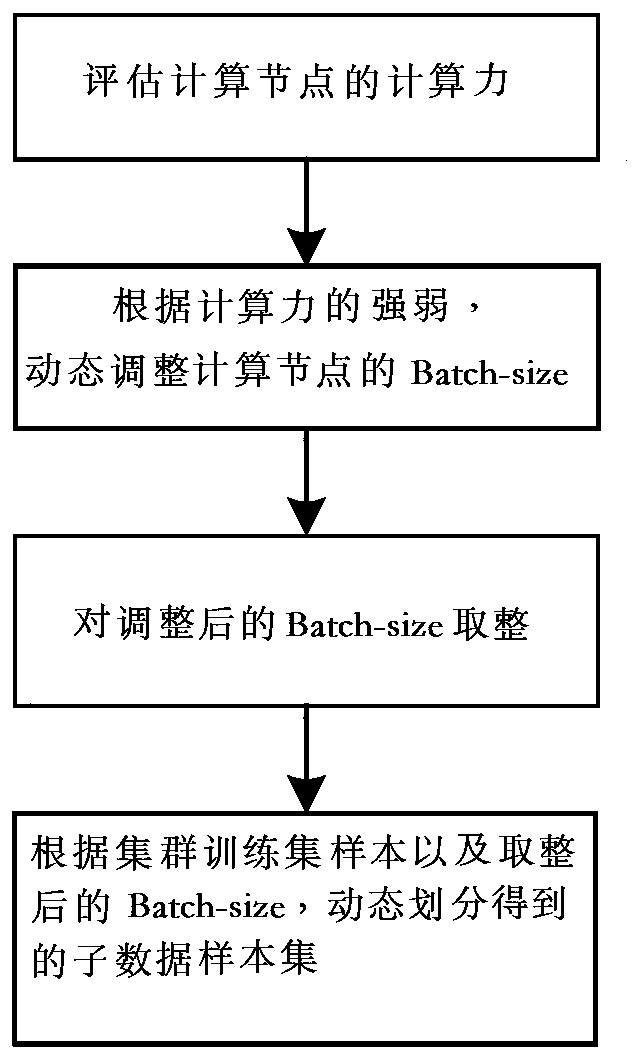

[0064] S2. For each computing node, dynamically adjust its Batch-size according to its computing power, and partition the cluster training set samples into sub-data sample sets according to the adjusted Batch-size;

[0065] S3. For each computing node, divide its local sub-data sample set into several training batch sample sets;

[0066] S4. For each computing node, take an unused training batch sample set, train the local neural network on it, and obtain the resulting gradient of the local neural network;

[0067] S5. Collect the gradients computed by the local neural networks of all computing nodes;

[0068] S6. Calculate new neural network parameters according to all trained gradients and current neural networ...
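Steps S1 to S6 can be illustrated with a single-process simulation of several computing nodes. This is a minimal sketch under assumptions that the source does not state: "computing power" is represented by a hypothetical per-node throughput value, each node's Batch-size is allocated in proportion to it, each node's sub-data sample set is sized in proportion to its Batch-size so that all nodes run the same number of steps, and, because S6 is truncated above, the new parameters are computed here with a Batch-size-weighted gradient average followed by a plain SGD step. The toy model is linear regression, and all function names (`allocate_batch_sizes`, `partition_dataset`, `local_gradient`, `train`) are hypothetical.

```python
import numpy as np

def allocate_batch_sizes(throughputs, global_batch):
    """S2 (assumed): split global_batch across nodes in proportion to each
    node's throughput, using largest-remainder rounding so the sizes sum
    exactly to global_batch."""
    t = np.asarray(throughputs, dtype=float)
    shares = global_batch * t / t.sum()
    sizes = np.floor(shares).astype(int)
    # Give leftover samples to the nodes with the largest fractional shares.
    leftover = global_batch - sizes.sum()
    order = np.argsort(-(shares - sizes))
    sizes[order[:leftover]] += 1
    return sizes

def partition_dataset(num_samples, sizes, rng):
    """S2 (assumed): give each node a shard proportional to its Batch-size,
    so every node runs the same number of steps per epoch."""
    idx = rng.permutation(num_samples)
    steps = num_samples // sizes.sum()
    shards, start = [], 0
    for b in sizes:
        shards.append(idx[start:start + steps * b])
        start += steps * b
    return shards, steps

def local_gradient(w, X, y):
    """S4: mean-squared-error gradient of a linear model on one batch."""
    residual = X @ w - y
    return 2.0 * X.T @ residual / len(y)

def train(X, y, throughputs, global_batch=64, lr=0.05, epochs=20, seed=0):
    rng = np.random.default_rng(seed)
    w = np.zeros(X.shape[1])  # S1: every node starts from the same parameters
    for _ in range(epochs):
        # Re-allocated every epoch; a real system would re-measure throughputs here.
        sizes = allocate_batch_sizes(throughputs, global_batch)
        shards, steps = partition_dataset(len(y), sizes, rng)
        # S3: split each node's shard into `steps` batches of its own Batch-size.
        batches = [np.split(shard, steps) for shard in shards]
        for s in range(steps):
            # S4: each node computes a gradient on its next unused batch.
            grads = [local_gradient(w, X[batches[i][s]], y[batches[i][s]])
                     for i in range(len(sizes))]
            # S5/S6 (assumed): Batch-size-weighted average, then one SGD step.
            g = sum(b * gi for b, gi in zip(sizes, grads)) / sizes.sum()
            w = w - lr * g
    return w, sizes

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = rng.normal(size=(1024, 3))
    true_w = np.array([1.5, -2.0, 0.5])
    w, sizes = train(X, X @ true_w, throughputs=[4.0, 2.0, 1.0, 1.0])
    print(sizes)  # [32 16  8  8]
    print(np.round(w, 3))
```

Weighting each node's gradient by its Batch-size makes the aggregate equal to the mean gradient over the whole global batch, so a node with a smaller Batch-size does not get an outsized say in the update. Faster nodes process more samples per step, which is one way to keep all nodes finishing a step at roughly the same time.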
