A virtual reference frame generation method based on parallax-guided fusion

A virtual reference frame technology, applied in digital video signal modification, image communication, electrical components, and related fields. It addresses the problems that coding performance needs further improvement, that the predictive coding process is not accurate enough, and that deep learning algorithms are lacking. Its effects are benefiting storage and transmission, reducing encoding bits, and reducing compression distortion.

Examples

Embodiment 1

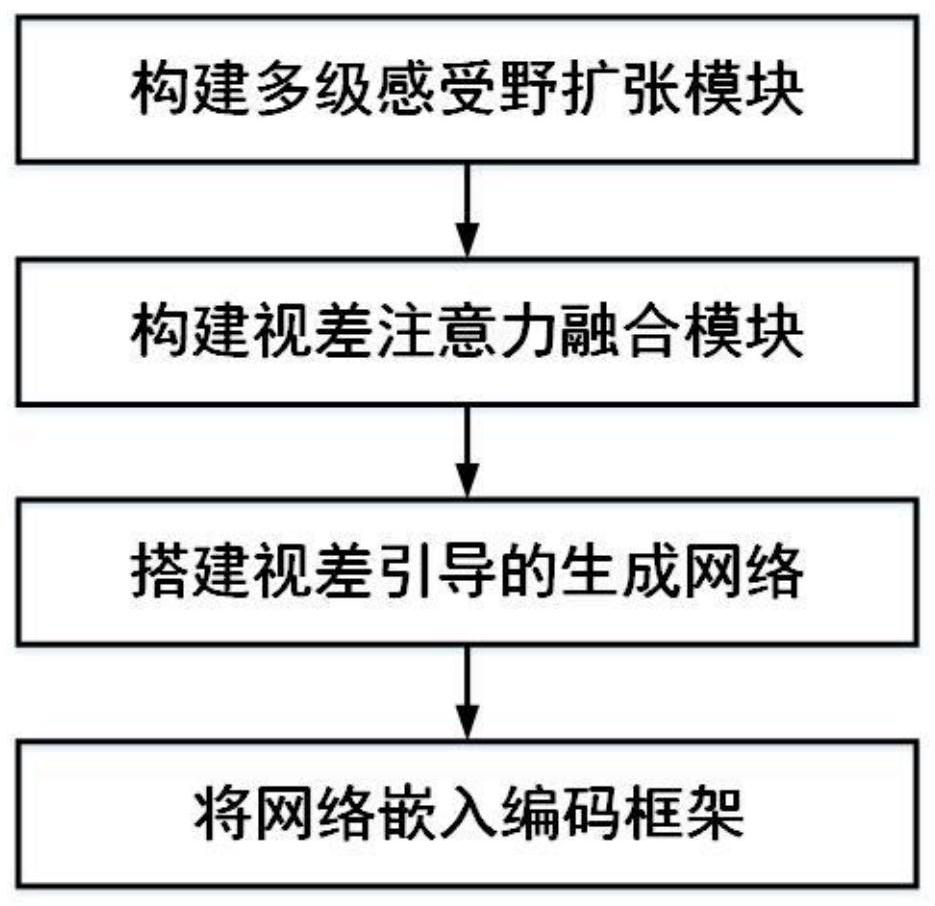

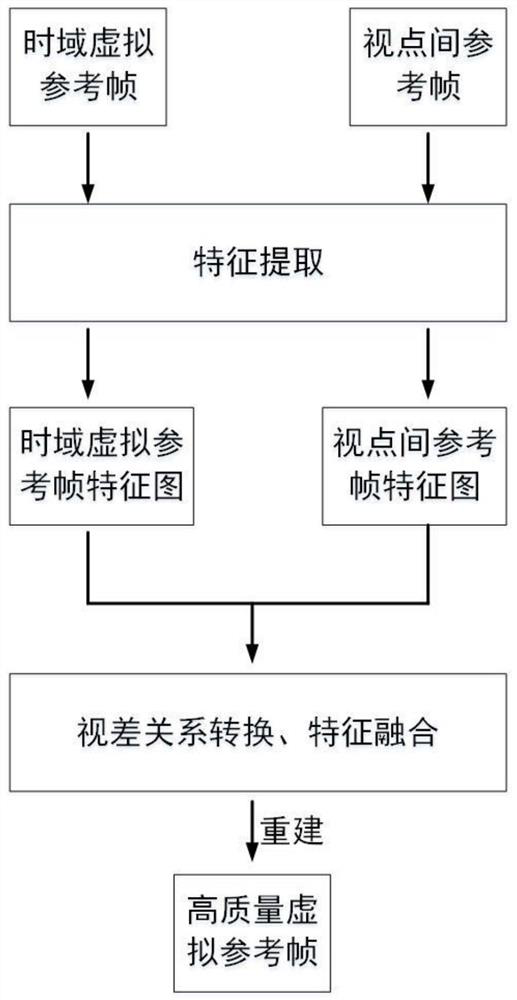

[0041] The present invention proposes a virtual reference frame generation method based on parallax-guided fusion. It builds a parallax-guided generation network that uses the learning capability of convolutional neural networks to learn and convert the parallax relationship between adjacent viewpoints and to generate a high-quality virtual reference frame. The virtual reference frame provides a high-quality reference for the frame currently being coded, which improves prediction accuracy and thus coding efficiency. The whole process is divided into four steps: 1) multi-level receptive field expansion; 2) parallax attention fusion; 3) parallax-guided generation network framework integration; 4) embedding into the coding framework. The specific implementation steps are as follows:
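To illustrate why a parallax-compensated reference can predict the current frame better than the raw adjacent view, here is a minimal sketch. It is not the generation network of the invention: it swaps in a fixed integer horizontal shift for the learned parallax conversion, works on a single row of made-up pixel values, and the names `warp_row_by_disparity` and `sum_abs_diff` are hypothetical.

```python
def warp_row_by_disparity(row, disparity, fill=0):
    """Shift one pixel row from an adjacent view by a fixed integer disparity.

    Assumption for illustration only: a single global disparity per row.
    The source describes a learned, CNN-based conversion instead.
    """
    width = len(row)
    warped = [fill] * width
    for x in range(width):
        src = x + disparity
        if 0 <= src < width:
            warped[x] = row[src]
    return warped


def sum_abs_diff(a, b):
    """Sum of absolute differences, a simple prediction-error measure."""
    return sum(abs(p - q) for p, q in zip(a, b))


# Toy data: the current view shows the same content as the adjacent view,
# displaced two pixels to the right.
adjacent_view = [10, 20, 30, 40, 50, 60]
current_view = [0, 0, 10, 20, 30, 40]

virtual_reference = warp_row_by_disparity(adjacent_view, disparity=-2)

error_without_warp = sum_abs_diff(adjacent_view, current_view)
error_with_warp = sum_abs_diff(virtual_reference, current_view)
```

In this toy case the warped row matches the current view exactly, so the residual left to encode drops to zero, whereas the unwarped adjacent view leaves a large residual.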

[0042] 1. Multi-level receptive field expansion

[0043] Feature representation with rich information is crucial for image reconstruction tasks. Considering that both multi-scale feature learning and multi-level receptive field...
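The paragraph above is truncated, and the source does not state how the receptive field is expanded. As a rough sketch under the assumption that stacked stride-1 convolutions with different dilation rates are used (a common approach, not confirmed by the source), the receptive field of such a stack is 1 plus the sum of (kernel size − 1) × dilation over the layers. The function name `receptive_field` and the layer configurations are hypothetical.

```python
def receptive_field(layers):
    """Receptive field of a stack of stride-1 convolutions.

    `layers` is a list of (kernel_size, dilation) pairs.
    Each layer widens the field by (kernel_size - 1) * dilation.
    """
    rf = 1
    for kernel_size, dilation in layers:
        rf += (kernel_size - 1) * dilation
    return rf


# Hypothetical configurations, chosen only to show the effect of dilation.
plain_stack = [(3, 1), (3, 1), (3, 1)]
dilated_stack = [(3, 1), (3, 2), (3, 4)]

plain_rf = receptive_field(plain_stack)      # 1 + 2 + 2 + 2
dilated_rf = receptive_field(dilated_stack)  # 1 + 2 + 4 + 8
```

With the same number of 3×3 layers, the dilated stack covers a field roughly twice as wide, which is one way a network can capture larger parallax without adding parameters.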

Embodiment 2

[0064] The method provided by the embodiment of the present invention is verified below through specific experiments, as described in the following:

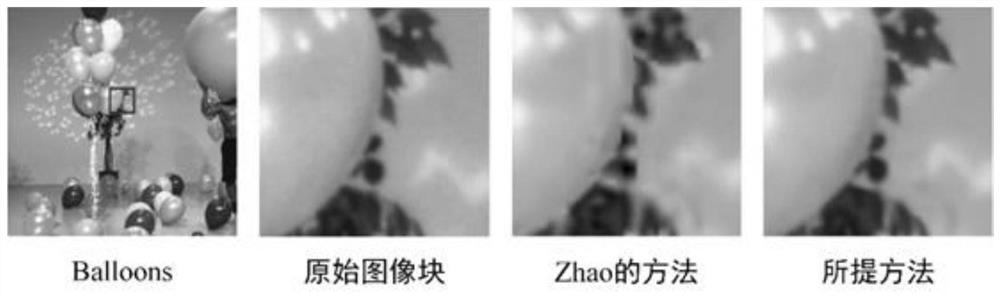

[0065] For comparison, Zhao et al.'s method, which uses a separable convolutional network to generate a virtual reference frame in the field of 2D video coding, is ported to the HTM16.2 platform and compared with the method proposed in the present invention. Compared with the 3D-HEVC baseline, the present invention achieves an average bit saving of 5.31%, while Zhao's method achieves an average bit saving of 3.77%. Compared with Zhao's method, the present invention therefore achieves an additional 1.54% bit saving, indicating that the proposed method is well suited to multi-view video coding. To verify the effectiveness of the proposed method more intuitively, Figure 2 shows the visualization results of different methods to genera...
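The additional saving quoted above follows directly from the two reported averages, since both are measured against the same 3D-HEVC baseline. A small check, using only the figures stated in the text (the variable names are hypothetical):

```python
# Average bit savings relative to the 3D-HEVC baseline, as reported in the text.
proposed_saving_pct = 5.31
zhao_saving_pct = 3.77

# Difference in percentage points; rounded to avoid floating-point noise.
additional_saving_pct = round(proposed_saving_pct - zhao_saving_pct, 2)
```

Because both savings share one baseline, the difference is expressed in percentage points of the baseline bit rate rather than as a saving relative to Zhao's bit rate.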
