Video recommendation method based on multi-modal video content and multi-task learning

A multi-task learning and video content technology, applied in the field of video recommendation based on multi-modal video content and multi-task learning. It addresses problems such as dependence, cold start, and video inaccuracy, and reduces the scale of the parameters.

Embodiment

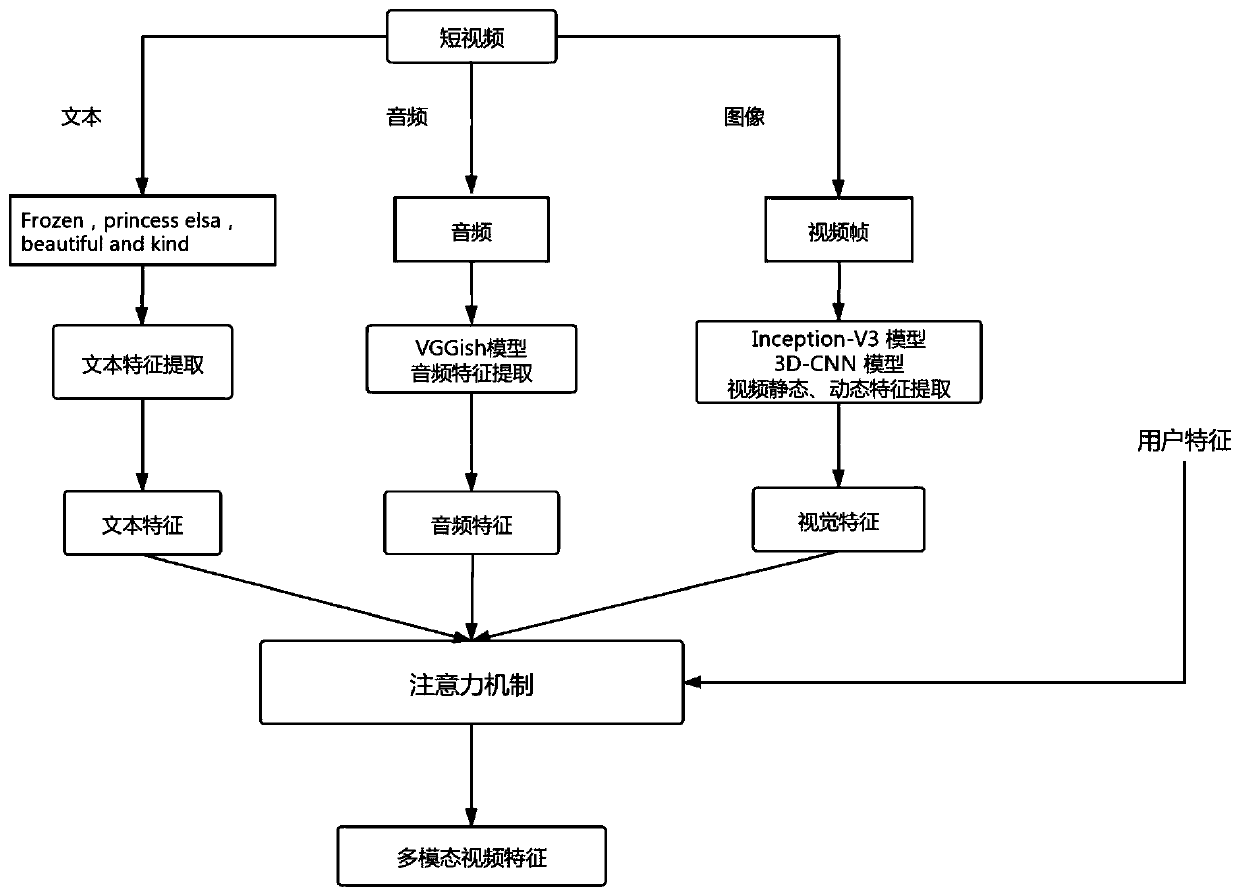

[0042] Figure 1 shows the flow chart of the video recommendation method based on multi-modal video content and multi-task learning disclosed in the present invention. The method specifically includes the following steps:

[0043] T1. Video multi-modal feature extraction:

[0044] a. Video frame extraction: video frame images are captured with OpenCV's video reading class cv2.VideoCapture and saved in the path folder, with frame numbering starting from 0. Because short videos are brief and compact, every frame of the video is captured; no frames are skipped.
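
The frame-capture step can be sketched as follows. This is a minimal illustration, not the inventors' code: the helper names `save_all_frames` and `extract_frames` and the zero-padded `000000.jpg` file naming are hypothetical. The read loop accepts any object with a `cv2.VideoCapture`-style `read()` method, so it can be exercised without a real video file; `extract_frames` wires it to `cv2.VideoCapture` and `cv2.imwrite`.

```python
import os
from typing import Any, Callable, List


def save_all_frames(capture: Any, out_dir: str,
                    write: Callable[[str, Any], Any]) -> List[str]:
    """Read every frame from `capture` (no frame skipping) and save each one.

    `capture` needs a cv2.VideoCapture-style read() returning (ok, frame);
    `write` has the call shape of cv2.imwrite(path, image) -> bool.
    """
    os.makedirs(out_dir, exist_ok=True)
    paths: List[str] = []
    index = 0  # frame numbering starts from 0, as in the text
    while True:
        ok, frame = capture.read()
        if not ok:  # end of the stream (or a read failure)
            break
        # Hypothetical naming scheme: zero-padded frame index, e.g. 000000.jpg
        path = os.path.join(out_dir, f"{index:06d}.jpg")
        if not write(path, frame):
            raise IOError(f"failed to write frame {index} to {path}")
        paths.append(path)
        index += 1
    return paths


def extract_frames(video_path: str, out_dir: str) -> List[str]:
    """Capture all frames of a video file with OpenCV and save them to out_dir."""
    import cv2  # imported lazily so the loop above can be tested without OpenCV

    capture = cv2.VideoCapture(video_path)
    if not capture.isOpened():
        raise IOError(f"cannot open video: {video_path}")
    try:
        return save_all_frames(capture, out_dir, cv2.imwrite)
    finally:
        capture.release()
```

Reading until `read()` returns `False` is what "no frame skipping" amounts to in OpenCV; a frame-sampling variant would instead keep only every k-th index.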

[0045] b. Video static feature extraction: each frame of the video is resized to [299, 299] and then input into the pre-trained Inception-V3 network. As shown in attached figure 1, the input is mapped to a 2048-dimensional feature vector, which serves as the static original feature vector of the video frame. In order to preserve the information of each frame of the vide...
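
A sketch of the per-frame static feature step, under stated assumptions: the source does not name a framework, so `build_inception_backbone` assumes TensorFlow/Keras, where `InceptionV3(include_top=False, pooling="avg")` global-average-pools the last convolutional block into one 2048-dimensional vector per 299x299 input. The helper names are hypothetical, and the [-1, 1] pixel scaling mirrors Keras's Inception-V3 preprocessing, which may differ from what the inventors used. The backbone and resize function are passed in so the shape logic can be checked without downloading weights.

```python
from typing import Any, Callable, Sequence

import numpy as np

INPUT_SIZE = (299, 299)  # Inception-V3 input resolution given in the text
FEATURE_DIM = 2048       # size of the pooled Inception-V3 feature vector


def build_inception_backbone() -> Any:
    """Pre-trained Inception-V3 without its classifier (assumes TensorFlow/Keras).

    pooling="avg" global-average-pools the final convolutional feature map,
    so each 299x299x3 input yields a single 2048-dimensional vector.
    """
    import tensorflow as tf  # lazy import: only needed for the real network

    return tf.keras.applications.InceptionV3(
        include_top=False, weights="imagenet",
        pooling="avg", input_shape=INPUT_SIZE + (3,))


def static_frame_features(
        frames: Sequence[np.ndarray],
        backbone: Callable[[np.ndarray], Any],
        resize: Callable[[np.ndarray, tuple], np.ndarray]) -> np.ndarray:
    """Return a (num_frames, 2048) matrix: one static feature vector per frame.

    resize(frame, (299, 299)) must return a 299x299x3 RGB image;
    backbone maps a (N, 299, 299, 3) float batch to N feature vectors.
    """
    batch = np.stack([resize(f, INPUT_SIZE) for f in frames]).astype(np.float32)
    # Same scaling as Keras inception_v3.preprocess_input: [0, 255] -> [-1, 1]
    batch = batch / 127.5 - 1.0
    features = np.asarray(backbone(batch))
    if features.shape != (len(frames), FEATURE_DIM):
        raise ValueError(f"unexpected feature shape {features.shape}")
    return features
```

With frames read by OpenCV (which are BGR), one could pass `resize=lambda f, size: cv2.resize(cv2.cvtColor(f, cv2.COLOR_BGR2RGB), size)` and `backbone=lambda b: model.predict(b, verbose=0)` with `model = build_inception_backbone()`; for long videos the batch would need to be split into chunks to bound memory.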
