Rapidly constructed facial action unit recognition method and system

A facial action unit recognition technology in the fields of character and pattern recognition, instruments, and computing. It addresses high demands on sample quality and quantity, long parameter-tuning time, and low accuracy. It achieves fast computation, short parameter-tuning time, and low requirements on sample quality and quantity.


Examples

Embodiment 1

[0065] The rapidly constructed facial action unit recognition method of this embodiment comprises the following steps:

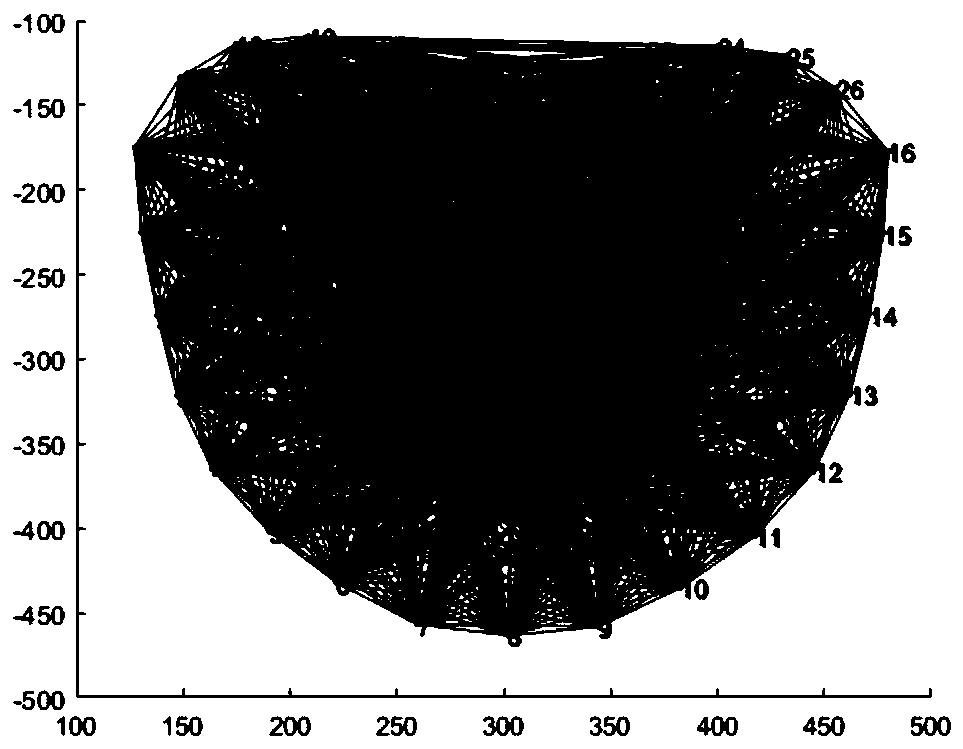

[0066] Input the face image to be recognized into the AU (action unit) recognition model to identify the AUs present in that image. The AU recognition model is constructed as follows:

[0067] S1. Generate a neutral frame for a sample from the face images in the sample library in which that sample shows a neutral expression within a preset time period (for example, within 1 s). Specifically, take each neutral-expression frame of the sample within the preset period and compute the median across these frames. From this median, generate the sample's neutral frame, which serves as the sample's reference face image and, correspondingly, as the reference metric vector for that sample's face images.
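Step S1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation. It assumes each frame has already been reduced to a fixed-length feature vector, for example flattened landmark coordinates. It also assumes each frame carries a timestamp in seconds and a boolean neutral-expression label. "The median of each neutral frame" is read here as an element-wise median taken across all neutral frames in the window. The function name `build_neutral_reference` and its parameters are hypothetical.

```python
import numpy as np


def build_neutral_reference(frames, timestamps, is_neutral, window_s=1.0, start_s=0.0):
    """Hypothetical helper: element-wise median of the neutral frames that fall
    inside the preset window [start_s, start_s + window_s).

    frames:     shape (n_frames, d); each row is one frame's feature vector
                (assumption: e.g. flattened facial landmark coordinates).
    timestamps: shape (n_frames,); capture time of each frame in seconds.
    is_neutral: shape (n_frames,); True where the frame shows a neutral expression.
    """
    frames = np.asarray(frames, dtype=float)
    timestamps = np.asarray(timestamps, dtype=float)
    is_neutral = np.asarray(is_neutral, dtype=bool)

    # Keep only neutral-expression frames captured inside the preset time window.
    in_window = (timestamps >= start_s) & (timestamps < start_s + window_s)
    selected = frames[in_window & is_neutral]
    if selected.shape[0] == 0:
        raise ValueError("no neutral frames in the preset time window")

    # Median across frames, computed independently for every feature dimension;
    # the result is used as the sample's reference (neutral) vector.
    return np.median(selected, axis=0)


# Usage: the frame at t = 1.5 s lies outside the 1 s window and is ignored.
reference = build_neutral_reference(
    frames=[[1.0, 10.0], [3.0, 30.0], [2.0, 20.0], [100.0, 100.0]],
    timestamps=[0.1, 0.4, 0.8, 1.5],
    is_neutral=[True, True, True, True],
)
print(reference.tolist())  # [2.0, 20.0]
```

A median, unlike a mean, is not pulled far off by a single atypical frame inside the window, such as a blink or a brief head movement. That property may make it a sensible choice for a reference face, although the source does not state its reasoning.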

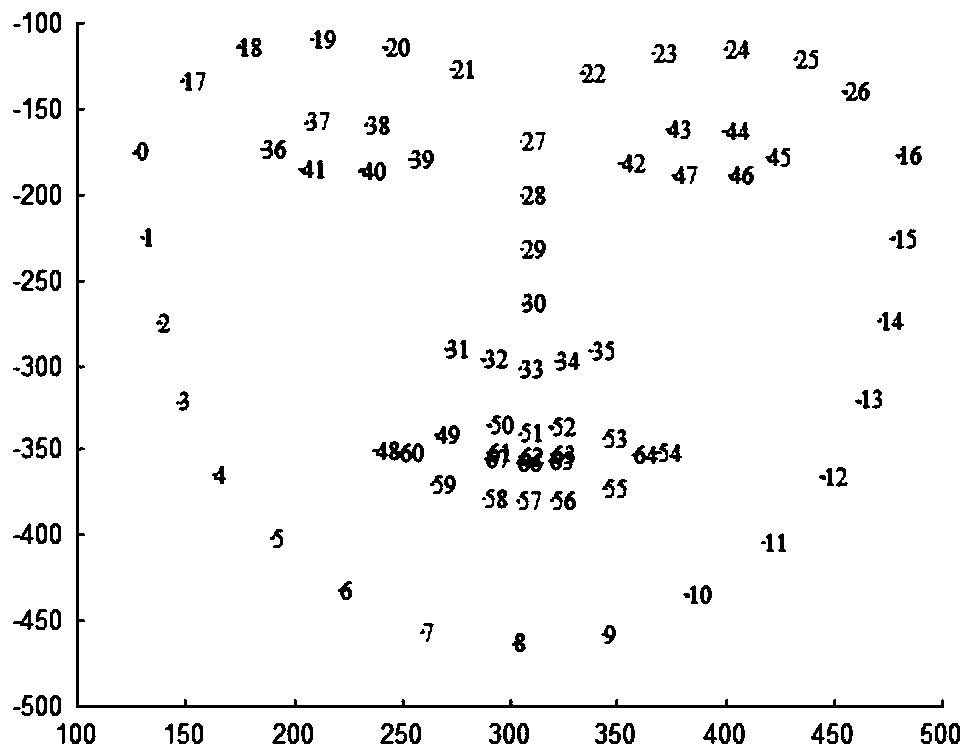

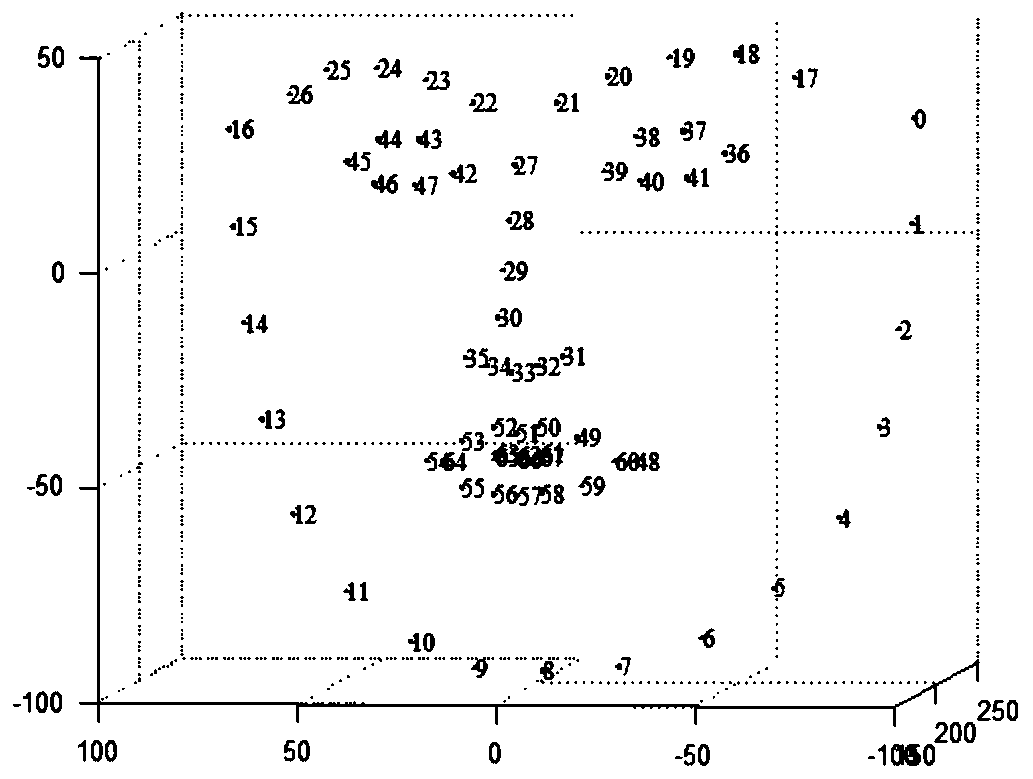

[0068] S2. Collect the key points of the samp...
