Data protection method, device and apparatus, and computer storage medium

A data protection method based on computer program technology, applied in digital data protection, computer security devices, computing, and related fields. It addresses problems such as the inflexibility of existing methods for protecting users' private data.


Examples

Example 1

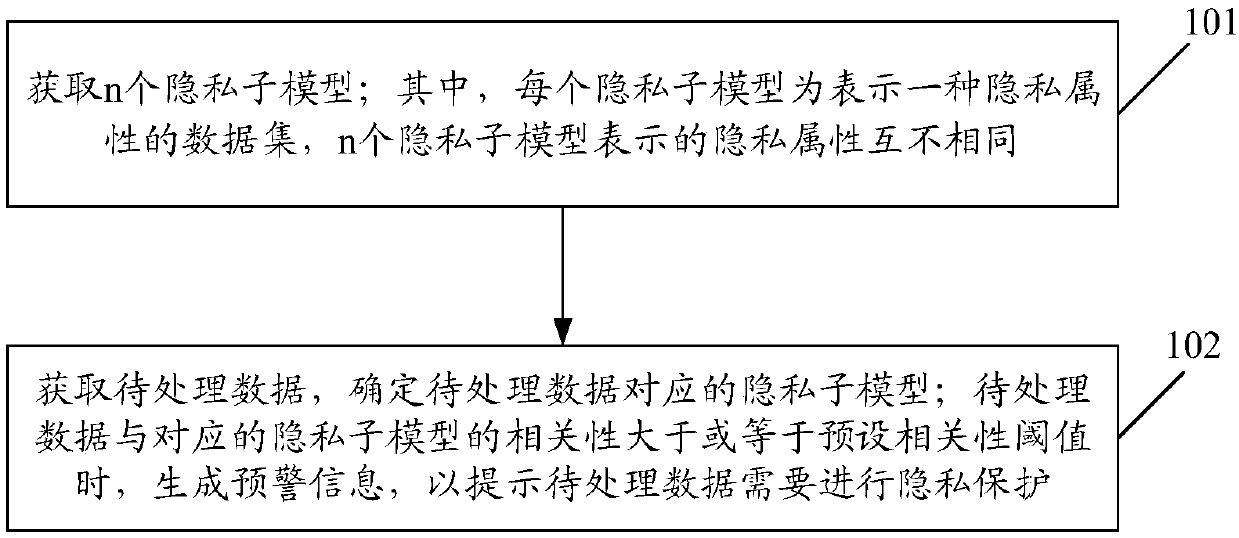

[0031] The first embodiment of the present invention describes a data protection method. Figure 1 is a flowchart of a data protection method in an embodiment of the present invention. As shown in Figure 1, the process can include:

[0032] Step 101: Obtain n privacy sub-models; wherein, each privacy sub-model is a data set representing a privacy attribute, and the privacy attributes represented by the n privacy sub-models are different from each other, and n is an integer greater than 1.

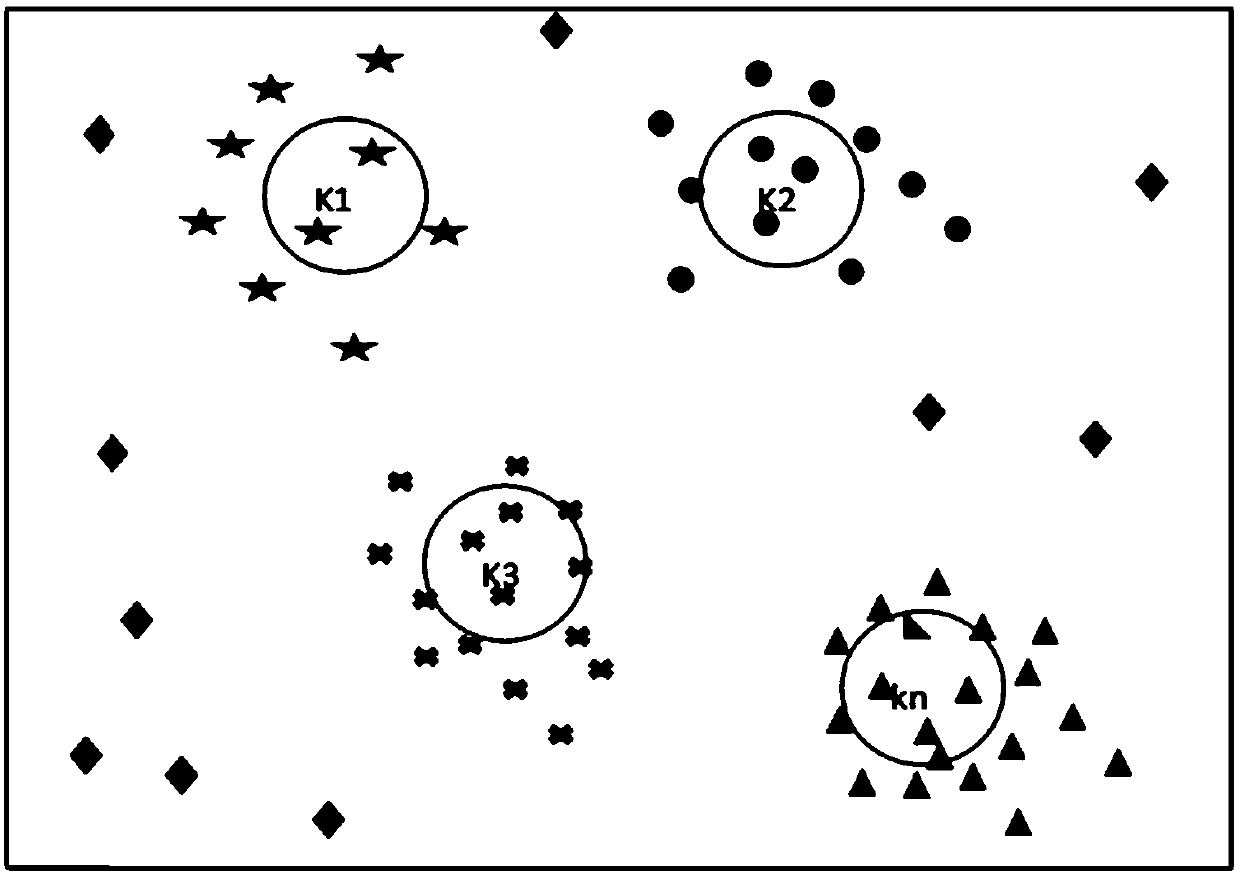

[0033] To implement this step, for example, training data may be obtained first, where the training data represents user data generated when the application is running. The training data is then clustered to obtain the n privacy sub-models.
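The clustering in Step 101 could be sketched as follows. This is only an illustration under stated assumptions: the source does not name a clustering algorithm, so plain k-means is used as a stand-in, the training data is assumed to be already encoded as numeric feature vectors, and the function name `cluster_into_sub_models` is hypothetical. Each returned cluster plays the role of one privacy sub-model, that is, a data set representing one privacy attribute.

```python
from typing import List, Sequence

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(points: List[Vector]) -> List[float]:
    """Component-wise mean of a non-empty list of vectors."""
    dim = len(points[0])
    return [sum(p[i] for p in points) / len(points) for i in range(dim)]


def cluster_into_sub_models(
    training_data: List[Vector], n: int, iterations: int = 20
) -> List[List[Vector]]:
    """Group training vectors into n clusters with plain k-means.

    Assumption: k-means is a stand-in; the source only says the
    training data "is clustered" into n privacy sub-models.
    """
    if n <= 1:
        raise ValueError("n must be an integer greater than 1")
    if len(training_data) < n:
        raise ValueError("need at least n training vectors")

    # Deterministic initialisation (an assumption): first n points.
    centroids = [list(p) for p in training_data[:n]]
    clusters: List[List[Vector]] = []

    for _ in range(iterations):
        clusters = [[] for _ in range(n)]
        for point in training_data:
            nearest = min(
                range(n), key=lambda i: squared_distance(point, centroids[i])
            )
            clusters[nearest].append(point)

        # Keep the old centroid if a cluster ends up empty.
        new_centroids = [
            mean(c) if c else centroids[i] for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # assignments are stable
        centroids = new_centroids

    return clusters
```

In practice the preprocessing mentioned in the description would produce the feature vectors, and a library implementation with a better initialisation strategy would likely replace this hand-written loop.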

[0034] In actual implementation, the original user data generated when the application is running can be obtained, and the above-mentioned original user data can be preprocessed to obtain training data; for example, at le...

Example 2

[0061] On the basis of the data protection methods proposed in the foregoing embodiments of the present invention, further illustrations are given.

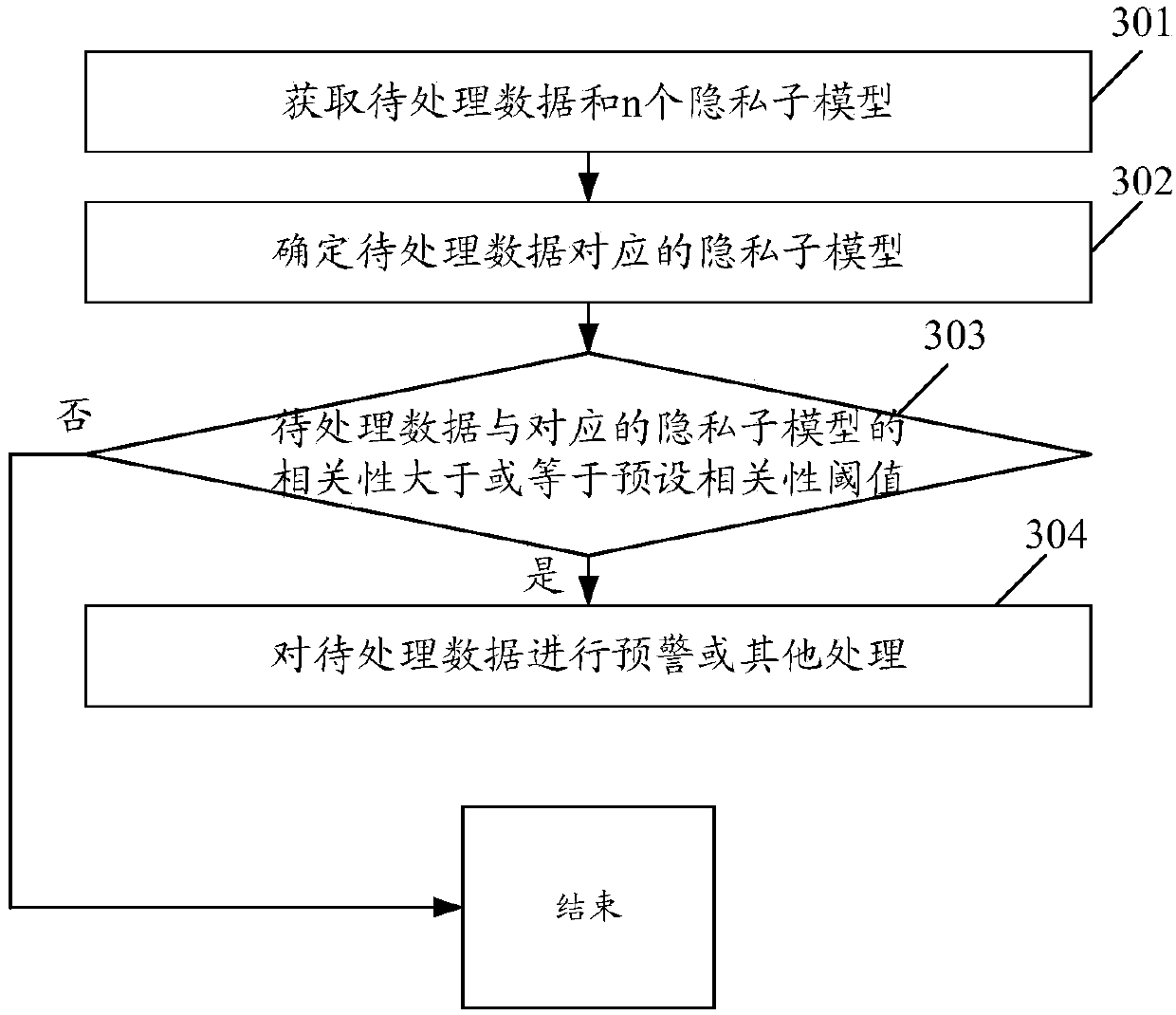

[0062] Figure 3 is a flowchart of another data protection method according to an embodiment of the present invention. As shown in Figure 3, the process can include:

[0063] Step 301: Obtain data to be processed and n privacy sub-models.

[0064] The implementation of this step has been described in the first embodiment, and will not be repeated here.

[0065] Step 302: Determine the privacy sub-model corresponding to the data to be processed.

[0066] The implementation of this step has been described in step 102, and will not be repeated here.

[0067] Step 303: Determine whether the correlation between the data to be processed and the corresponding privacy sub-model is greater than or equal to a preset correlation threshold, if yes, execute step 304, and if not, end the process.
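Steps 302 and 303 can be illustrated with a small sketch. Several things here are assumptions rather than details from the source: the correlation is modelled as cosine similarity between the data to be processed and the centroid of each sub-model, the corresponding sub-model is taken to be the one with the highest score, and the names `match_sub_model` and `meets_threshold` are hypothetical. The sketch stops at the decision in Step 303 and does not model what Step 304 does.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def centroid(points: List[Vector]) -> List[float]:
    """Component-wise mean of the vectors in one sub-model."""
    dim = len(points[0])
    return [sum(p[i] for p in points) / len(points) for i in range(dim)]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity, used here as an assumed correlation measure."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def match_sub_model(
    item: Vector, sub_models: Dict[str, List[Vector]]
) -> Tuple[str, float]:
    """Step 302 (sketch): pick the sub-model most correlated with the item."""
    scores = {
        name: cosine_similarity(item, centroid(points))
        for name, points in sub_models.items()
    }
    best = max(scores, key=scores.get)
    return best, scores[best]


def meets_threshold(
    item: Vector, sub_models: Dict[str, List[Vector]], threshold: float
) -> bool:
    """Step 303 (sketch): True means proceed to step 304, False means end."""
    _, score = match_sub_model(item, sub_models)
    return score >= threshold
```

The comparison uses `>=` to match the "greater than or equal to" wording of Step 303. The threshold value itself is a preset chosen by the implementer.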

[0068] Step 304: Carry out early ...

Example 3

[0072] On the basis of the data protection methods provided in the foregoing embodiments, a fourth embodiment of the present invention provides a data protection device.

[0073] Figure 4 is a schematic diagram of the composition and structure of a data protection device according to an embodiment of the present invention. As shown in Figure 4, the device includes an acquisition module 401 and a decision module 402, wherein:

[0074] The acquisition module 401 is used to obtain n privacy sub-models; wherein, each privacy sub-model is a data set representing a privacy attribute, and the privacy attributes represented by the n privacy sub-models are different from each other, and n is an integer greater than 1;

[0075] The decision module 402 is configured to acquire data to be processed, and determine a privacy sub-model corresponding to the data to be processed; when the correlation between the data to be processed and the corresponding privacy sub-model is greater t...

