Inter-frame prediction method and device, video encoder and video decoder

An inter-frame prediction technology based on preset positions, applied in the field of video coding and decoding. It addresses the problem of high complexity: it reduces the number of comparisons, improves the efficiency of inter-frame prediction, and improves coding and decoding performance.

Examples

example 1

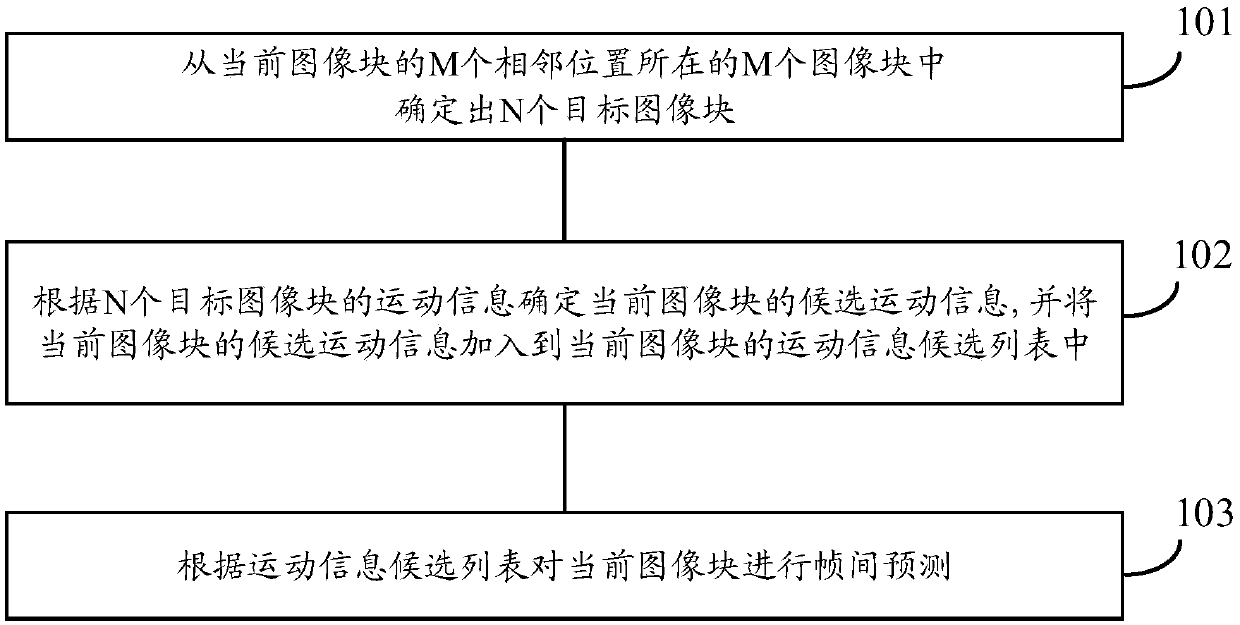

[0211] Example 1: Construction method 1 of the motion information candidate list in the affine mode.

[0212] In Example 1, every time an affine coding block that meets the requirements is found, the candidate motion information of the current image block will be determined according to the motion information of the control points of the affine coding block, and the obtained candidate motion information will be added to the motion information candidate list.
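A minimal Python sketch of the incremental construction in Example 1 follows. The source does not define what "meets the requirements" means. The sketch assumes it means the block is affine-coded and has not already contributed a candidate, tracked through a hypothetical `block_id`. `CodingBlock`, `build_list_incremental`, the `derive` callback and the default list length of 5 are illustrative assumptions, not definitions from the patent.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Set, Tuple

MV = Tuple[int, int]
Candidate = Tuple[MV, ...]

@dataclass(frozen=True)
class CodingBlock:
    block_id: int            # hypothetical: equal ids mean the same coding block
    is_affine: bool
    cpmvs: Tuple[MV, ...]    # control point motion vectors (affine blocks only)

def build_list_incremental(
    neighbor_blocks: Sequence[Optional[CodingBlock]],
    derive: Callable[[CodingBlock], Candidate],
    max_candidates: int = 5,
) -> List[Candidate]:
    """Example 1 style: derive and append a candidate as soon as a qualifying block is found."""
    candidates: List[Candidate] = []
    seen: Set[int] = set()
    for block in neighbor_blocks:            # one entry per adjacent position, in scan order
        if block is None or not block.is_affine:
            continue                         # unavailable position or non-affine block
        if block.block_id in seen:
            continue                         # assumed rule: each coding block contributes once
        seen.add(block.block_id)
        candidates.append(derive(block))     # derivation happens immediately, per block found
        if len(candidates) == max_candidates:
            break
    return candidates
```

Here `derive` stands in for the inherited control point derivation. Passing it as a callback keeps the list-building order separate from the motion model.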

[0213] In Example 1, the motion information of the candidate control points of the current image block is derived mainly by using the inherited control point motion vector prediction method, and the derived motion information of the candidate control points is added to the motion information candidate list. The specific process of Example 1 is shown in Figure 8, which includes steps 401 to 405; these steps are described in detail below.
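The inherited control point motion vector prediction method is commonly described with a 4-parameter affine model. The neighboring affine block's control point motion vectors v0 (top-left) and v1 (top-right) define a motion field, and that field is evaluated at the current block's corners to obtain candidate control point motion vectors. The sketch below assumes this 4-parameter model and works in floating point, without the MV precision and rounding a real codec applies. The function names are hypothetical.

```python
from typing import Tuple

Point = Tuple[float, float]
MV = Tuple[float, float]

def affine_mv_at(nb_pos: Point, nb_width: float, v0: MV, v1: MV, pos: Point) -> MV:
    """MV at `pos` under the neighbor's 4-parameter affine model.

    vx = v0x + a*(x - x0) - b*(y - y0)
    vy = v0y + b*(x - x0) + a*(y - y0)
    with a = (v1x - v0x) / W and b = (v1y - v0y) / W.
    """
    a = (v1[0] - v0[0]) / nb_width   # scaling term
    b = (v1[1] - v0[1]) / nb_width   # rotation term
    dx = pos[0] - nb_pos[0]
    dy = pos[1] - nb_pos[1]
    return (v0[0] + a * dx - b * dy, v0[1] + b * dx + a * dy)

def inherit_cpmvs(nb_pos: Point, nb_width: float, v0: MV, v1: MV,
                  cur_pos: Point, cur_width: float) -> Tuple[MV, MV]:
    """Candidate CPMVs at the current block's top-left and top-right corners."""
    top_left = affine_mv_at(nb_pos, nb_width, v0, v1, cur_pos)
    top_right = affine_mv_at(nb_pos, nb_width, v0, v1, (cur_pos[0] + cur_width, cur_pos[1]))
    return top_left, top_right
```

For a 16-wide neighbor at (0, 0) with v0 = (4, 2) and v1 = (8, 2), a current 8-wide block at (16, 0) inherits (8.0, 2.0) and (10.0, 2.0). This is the neighbor's pure scaling field extended to the right.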

[0214] 401. Ac...

example 2

[0234] Example 2: Construction method 2 of the motion information candidate list in the affine mode.

[0235] Unlike Example 1, Example 2 first constructs the coding block candidate list and then obtains the motion information candidate list from the coding block candidate list.

[0236] It should be understood that in Example 1, each time an affine coding block at an adjacent position is determined, the motion information of the candidate control points of the current image block is derived from the motion information of the control points of that affine coding block. In Example 2, all the affine coding blocks are determined first, and the motion information of the candidate control points of the current image block is then derived from all of them, so the candidate information in the motion information candidate list is generated in a single pass. Compared with the ...
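A Python sketch of the two-phase structure of Example 2 follows. Phase 1 builds the coding block candidate list from distinct affine blocks at the adjacent positions. Phase 2 then derives all candidate motion information from that list in one pass. The identity check on a hypothetical `block_id`, the names `build_coding_block_list` and `build_list_batched`, and the default list length are assumptions made for illustration only.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple

MV = Tuple[int, int]
Candidate = Tuple[MV, ...]

@dataclass(frozen=True)
class CodingBlock:
    block_id: int            # hypothetical: equal ids mean the same coding block
    is_affine: bool
    cpmvs: Tuple[MV, ...]    # control point motion vectors (affine blocks only)

def build_coding_block_list(
    neighbor_blocks: Sequence[Optional[CodingBlock]], max_blocks: int
) -> List[CodingBlock]:
    """Phase 1: collect distinct affine coding blocks covering the adjacent positions."""
    block_list: List[CodingBlock] = []
    for block in neighbor_blocks:
        if block is None or not block.is_affine:
            continue                                  # unavailable or non-affine
        if any(b.block_id == block.block_id for b in block_list):
            continue                                  # same coding block already listed (assumed rule)
        block_list.append(block)
        if len(block_list) == max_blocks:
            break
    return block_list

def build_list_batched(
    neighbor_blocks: Sequence[Optional[CodingBlock]],
    derive: Callable[[CodingBlock], Candidate],
    max_candidates: int = 5,
) -> List[Candidate]:
    """Phase 2: derive every candidate from the finished coding block list in one pass."""
    block_list = build_coding_block_list(neighbor_blocks, max_candidates)
    return [derive(b) for b in block_list]
```

The duplicate check in phase 1 compares coding blocks rather than derived motion information, so no derivation is spent on a block that would produce a repeated candidate.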

example 3

[0258] Example 3: Construction method 1 of the motion information candidate list in translation mode.

[0259] The specific process of Example 3 is shown in Figure 10, which includes steps 601 to 605; these steps are described below.

[0260] 601. Acquire a motion information candidate list of a current image block.

[0261] Step 601 is the same as step 401 in Example 1 and is not described again here.

[0262] 602. Traverse the adjacent positions of the current image block, and acquire the coding block where the current adjacent position is located.

[0263] Unlike step 402 in Example 1, the coding block found by traversing the adjacent positions here may be an ordinary translational block. The traversed coding blocks may also be affine coding blocks; this is not limited here.
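Steps 601 and 602 amount to mapping each adjacent position to the coding block that covers it. The sketch below assumes HEVC/VVC-style spatial neighbor positions A1, B1, B0, A0, B2, which the source does not list here. It also assumes a hypothetical rectangle-based block map. It shows that several adjacent positions can fall in the same coding block, and that the traversal may return translational or affine blocks.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

@dataclass(frozen=True)
class CodingBlock:
    block_id: int
    x: int
    y: int
    w: int
    h: int
    is_affine: bool = False   # step 602 may return translational or affine blocks

def adjacent_positions(x: int, y: int, w: int, h: int) -> List[Tuple[int, int]]:
    """Assumed neighbor positions of a w x h block at (x, y), in order A1, B1, B0, A0, B2."""
    return [
        (x - 1, y + h - 1),  # A1: left
        (x + w - 1, y - 1),  # B1: above
        (x + w, y - 1),      # B0: above-right
        (x - 1, y + h),      # A0: below-left
        (x - 1, y - 1),      # B2: above-left
    ]

def block_at(blocks: Sequence[CodingBlock], px: int, py: int) -> Optional[CodingBlock]:
    """Return the coding block covering (px, py), or None if none is available."""
    for b in blocks:
        if b.x <= px < b.x + b.w and b.y <= py < b.y + b.h:
            return b
    return None

def traverse_neighbors(blocks: Sequence[CodingBlock], x: int, y: int,
                       w: int, h: int) -> List[Optional[CodingBlock]]:
    """Step 602 sketch: the coding block at each adjacent position, in traversal order."""
    return [block_at(blocks, px, py) for px, py in adjacent_positions(x, y, w, h)]
```

For a 16x16 current block at (16, 16), with a 16x32 block (id 1) to its left and a 32x16 block (id 2) above it, the traversal returns blocks 1, 2, 2, unavailable, 1. Four available positions therefore map to only two distinct coding blocks.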

[0264] 603. Determine whether the motion information candidate list is em...
