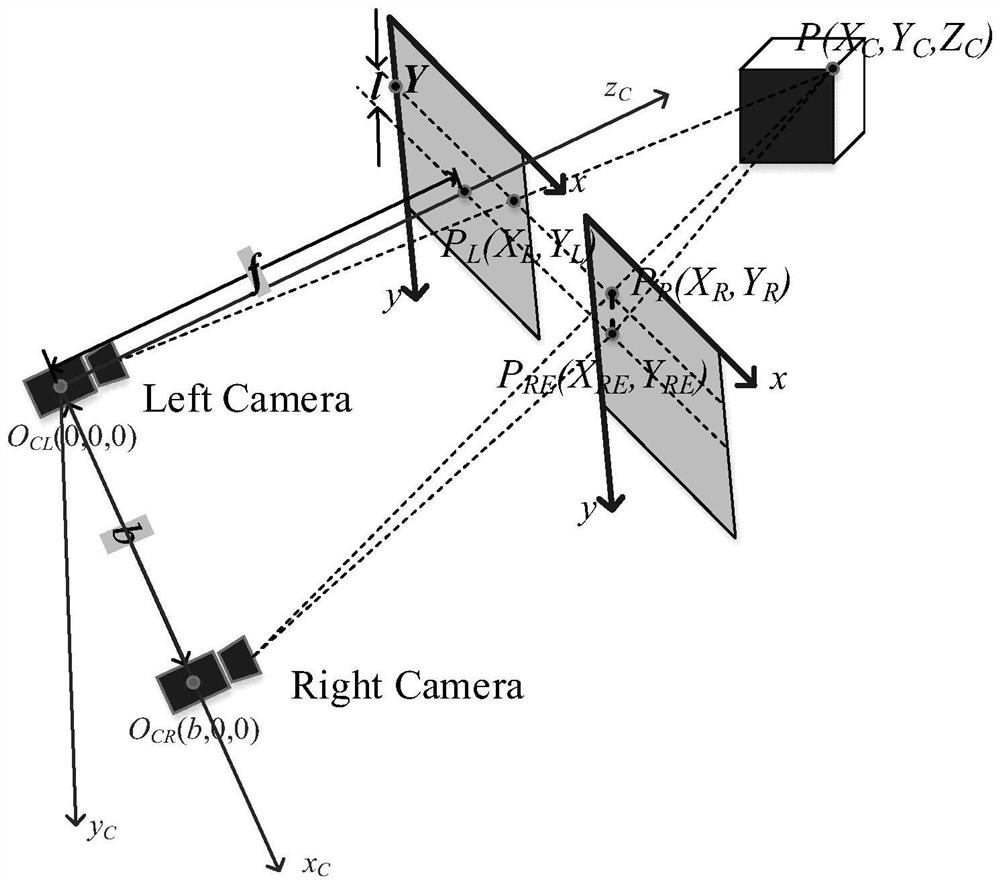

A depth imaging and information acquisition method based on binocular vision

A depth-information and binocular-vision technology, applied to image analysis, image enhancement, and image data processing. It addresses problems such as difficulty in adapting to two-dimensional parallax and achieves a wide range of applications.

Embodiment Construction

[0032] The technical scheme is further described below with reference to the accompanying drawings and specific embodiments:

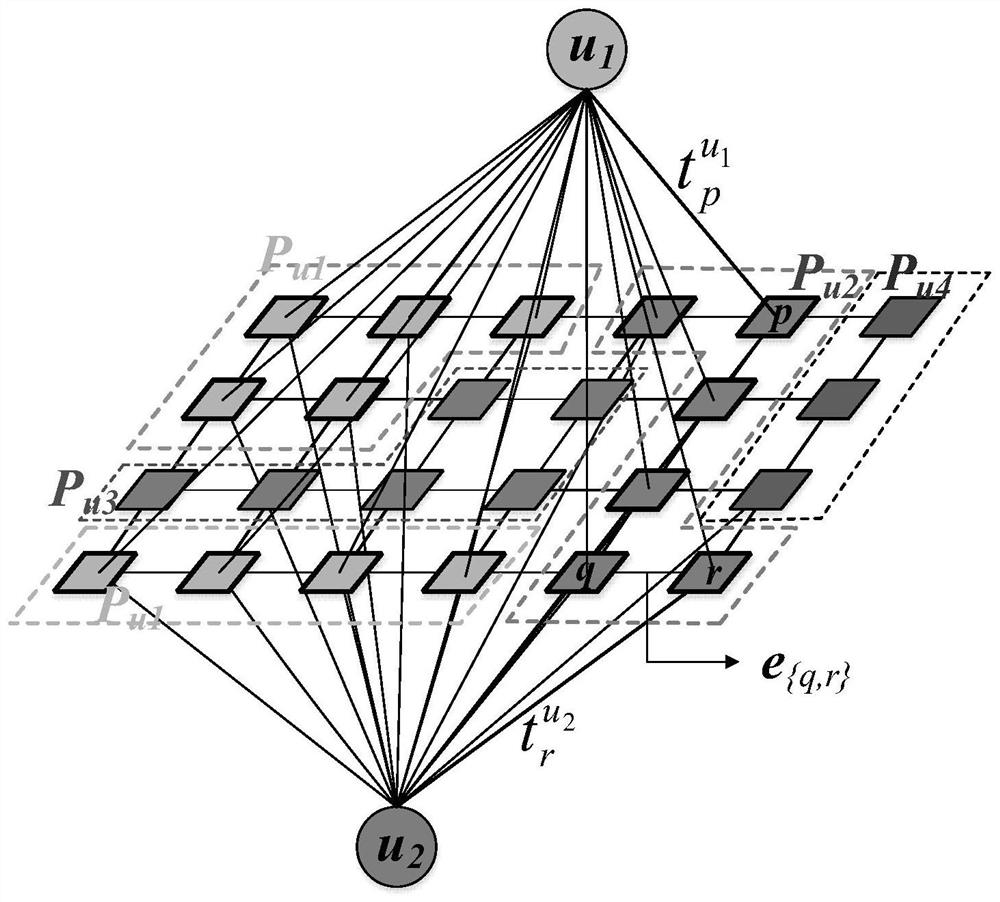

[0033] As shown in Figure 4, the depth imaging and information acquisition method based on binocular vision proceeds as follows. First, color image acquisition is performed to obtain a color image of the monitoring area, a suitable two-dimensional parallax range is set, and the maximum flow monitoring value is initialized. A two-dimensional disparity label set is then constructed according to the label set rules. In each iteration, a pair of two-dimensional disparity labels is selected, a network graph is constructed for that pair, and edge weights are designed. The maximum flow algorithm is then run to compute the network's maximum flow and minimum cut, and the maximum flow is compared with the maximum flow monitoring value to judge whether it has decreased. If it has not, neither the maximum flow monitoring value nor the labels are updated. If ...
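The iteration in [0033] resembles a pairwise label swap solved by maximum flow. The Python sketch below illustrates that idea under stated assumptions and is not the patented implementation. The data costs `data`, the truncated L1 smoothness term `smooth`, the Edmonds-Karp solver, and the function names are all hypothetical. The source text is truncated where it describes what happens when the flow decreases, so the acceptance rule here (update the monitoring value and the labels) is also an assumption. To make values comparable across label pairs, the monitored value is taken to be the maximum flow of the swap graph plus the constant energy of the pixels outside the swap.

```python
from collections import defaultdict, deque
from itertools import combinations

def smooth(a, b, lam=2, tau=2):
    # Assumed smoothness cost: truncated L1 distance between 2D disparity labels.
    return lam * min(abs(a[0] - b[0]) + abs(a[1] - b[1]), tau)

def energy(f, data, edges):
    # Total energy of labeling f: data costs plus pairwise smoothness costs.
    return (sum(data[p][l] for p, l in enumerate(f))
            + sum(smooth(f[p], f[q]) for p, q in edges))

def max_flow_min_cut(cap, s, t):
    # Edmonds-Karp on residual capacities cap[u][v] (mutated in place).
    # Returns the flow value and the nodes still reachable from s.
    flow = 0
    while True:
        parent, queue = {s: None}, deque([s])
        while queue and t not in parent:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if t not in parent:
            return flow, set(parent)
        push, v = float("inf"), t
        while parent[v] is not None:          # bottleneck along the path
            push, v = min(push, cap[parent[v]][v]), parent[v]
        v = t
        while parent[v] is not None:          # augment along the path
            u = parent[v]
            cap[u][v] -= push
            cap[v][u] = cap[v].get(u, 0) + push
            v = u
        flow += push

def swap_move(f, data, edges, a, b):
    # Build the network graph for label pair (a, b) and solve it by max flow.
    active = {p for p, l in enumerate(f) if l in (a, b)}
    t_a = {p: data[p][a] for p in active}     # cost paid if p takes label a
    t_b = {p: data[p][b] for p in active}     # cost paid if p takes label b
    const = sum(data[p][f[p]] for p in range(len(f)) if p not in active)
    cap = defaultdict(dict)
    for p, q in edges:
        if p in active and q in active:
            cap[p][q] = cap[q][p] = smooth(a, b)
        elif p in active or q in active:
            if q in active:
                p, q = q, p                   # now p is active, q is fixed
            t_a[p] += smooth(a, f[q])
            t_b[p] += smooth(b, f[q])
        else:
            const += smooth(f[p], f[q])
    for p in active:
        cap["s"][p] = t_a[p]
        cap[p]["t"] = t_b[p]
    flow, source_side = max_flow_min_cut(cap, "s", "t")
    g = list(f)
    for p in active:                          # min cut decides the new labels
        g[p] = b if p in source_side else a
    return flow + const, g

def optimise(f, data, edges, label_set, sweeps=5):
    monitor = float("inf")                    # maximum flow monitoring value
    for _ in range(sweeps):
        changed = False
        for a, b in combinations(label_set, 2):
            value, g = swap_move(f, data, edges, a, b)
            if value < monitor:               # assumed rule: update only on decrease
                monitor, f, changed = value, g, True
        if not changed:
            break
    return f, monitor

# Toy scanline of 4 pixels with 2D disparity range {0, 1} x {0, 1}.
label_set = [(dx, dy) for dx in range(2) for dy in range(2)]
preferred = [(0, 0), (0, 0), (1, 1), (1, 1)]
data = [{l: 0 if l == want else 5 for l in label_set} for want in preferred]
edges = [(0, 1), (1, 2), (2, 3)]
result, monitor = optimise([(0, 0)] * 4, data, edges, label_set)
```

In this toy setup the integer costs keep the flow arithmetic exact. A real stereo pipeline would derive `data` from left/right image matching costs and use a 4- or 8-connected pixel grid for `edges`.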
