A method and computer for expressing deep dynamic contextual words

A dynamic contextual word representation technology, applied in computing, natural language translation, instruments, and related fields, that addresses problems such as the high difficulty of building word representation models from small resources and the absence of context and dynamic concepts in existing word representations.


Embodiment Construction

[0039] To make the objectives, technical solutions, and advantages of the present invention clearer, the invention is described in further detail below in conjunction with the examples. The specific embodiments described here serve only to explain the present invention and do not limit it.

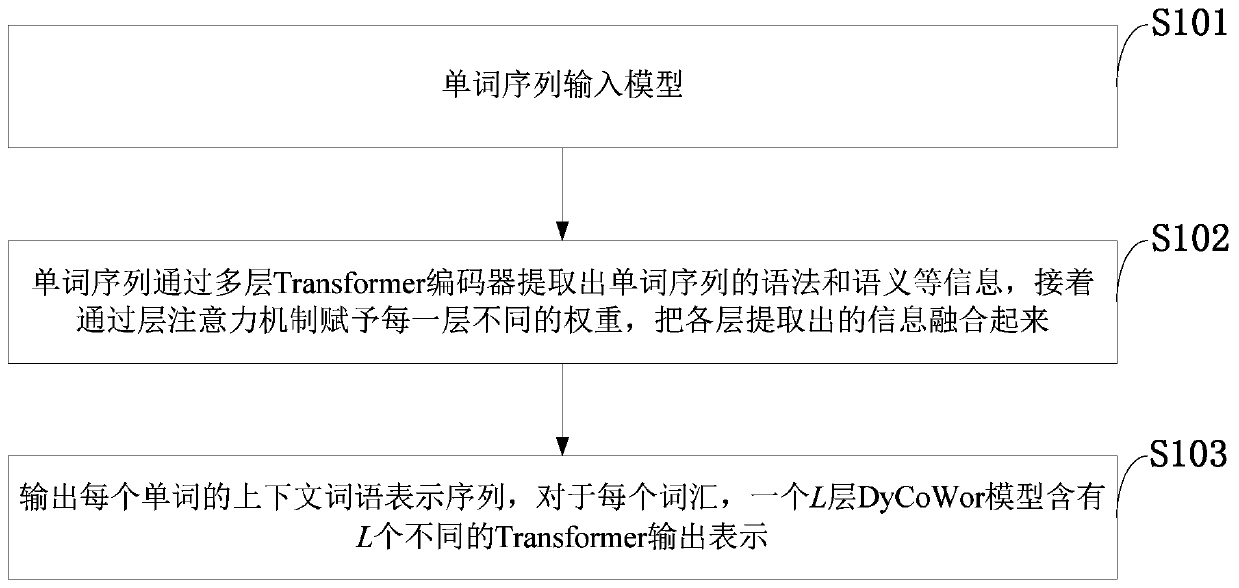

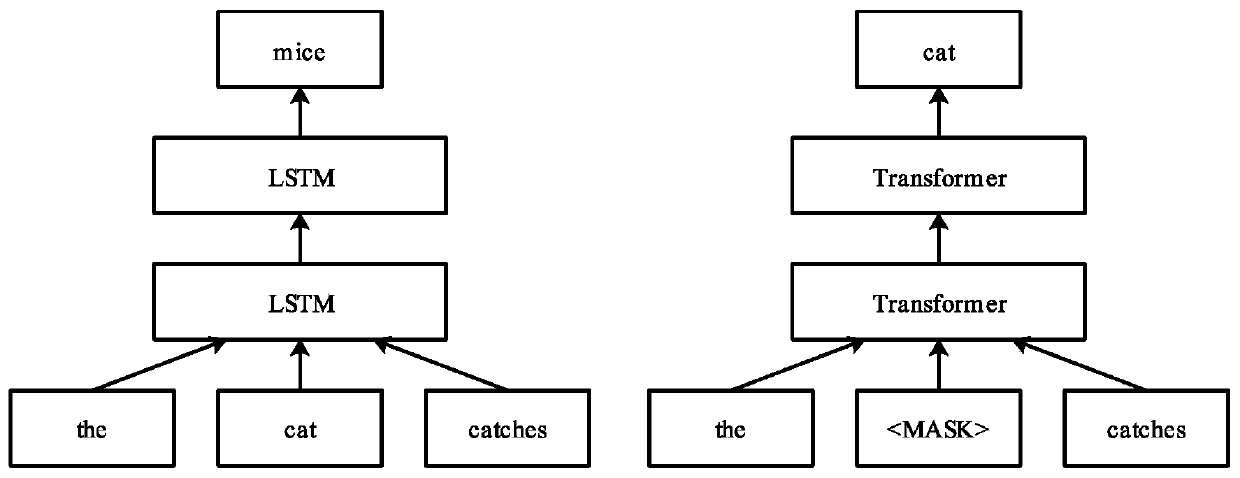

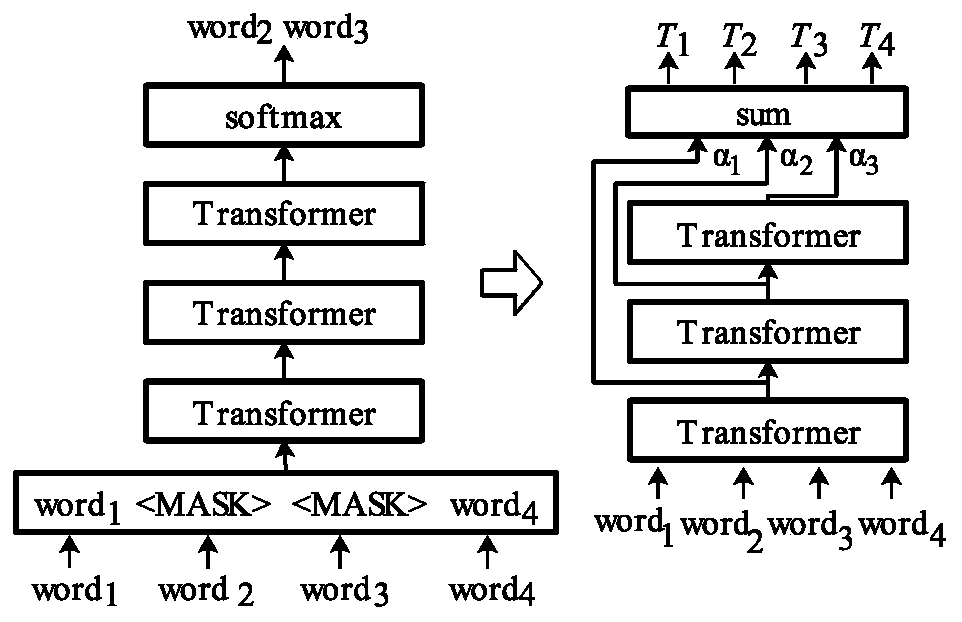

[0040] At present, mainstream word representation technology has no concept of context or dynamics. Using a fixed vector as the representation of a word cannot solve the problem of polysemy, which directly limits a computer's further understanding of natural language. The deep dynamic context word representation model of the present invention is a multi-layer deep neural network. Each layer of the model captures information (grammatical information, semantic information, etc.). The mechanism gives a different weight to each layer of the neural network and integrates semantic information at different levels to form a...
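The layer-weighting idea in paragraph [0040] can be illustrated with a minimal sketch. It is not the patented implementation: the excerpt does not say how the weights are produced, so softmax normalization of per-layer scores is an assumption here, and the names `softmax`, `mix_layers`, and `word_layers`, as well as all numbers, are hypothetical. The sketch only shows how one word's vectors from several layers could be combined into a single context-dependent vector.

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """Turn raw per-layer scores into positive weights that sum to 1.

    Assumption: the source only says each layer gets a different weight;
    softmax is one common way to normalize such weights.
    """
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mix_layers(layer_vectors: Sequence[Sequence[float]],
               layer_scores: Sequence[float]) -> List[float]:
    """Weighted sum of one word's vectors across all layers."""
    if len(layer_vectors) != len(layer_scores):
        raise ValueError("need exactly one score per layer")
    weights = softmax(layer_scores)
    dim = len(layer_vectors[0])
    return [
        sum(w * vec[i] for w, vec in zip(weights, layer_vectors))
        for i in range(dim)
    ]


# Hypothetical outputs of a 3-layer model for one word in one sentence.
word_layers = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Equal scores give every layer the same weight (a plain average).
equal_mix = mix_layers(word_layers, [0.0, 0.0, 0.0])

# A much larger score for the second layer makes that layer dominate.
second_layer_mix = mix_layers(word_layers, [0.0, 1000.0, 0.0])
```

Because the per-layer vectors depend on the surrounding sentence, the mixed vector for the same word differs between contexts, which is what allows such a representation to separate the senses of a polysemous word. In a trained model the scores would be learned rather than set by hand.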
