Indoor scene semantic annotation method

A technology for semantic labeling of indoor scenes, applied in the fields of multimedia technology and computer graphics. It addresses two shortcomings of existing feature learning: it does not enlarge the receptive field, and it does not improve the expressiveness of the learned features.
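Hole convolution, which this method applies in conv4_x and conv5_x, is more commonly called dilated or atrous convolution. The sketch below computes the receptive field of a stack of convolutions to show why dilation addresses the receptive-field problem. It uses the standard per-axis arithmetic in which a kernel of size k with dilation d spans d*(k-1)+1 input positions. The layer configurations are illustrative only and are not taken from this method.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ConvLayer:
    kernel: int        # square kernel size k (per axis)
    stride: int = 1
    dilation: int = 1  # d > 1 means a "hole" (dilated) convolution


def effective_kernel(layer: ConvLayer) -> int:
    # A dilated kernel of size k covers d*(k-1)+1 input positions per axis.
    return layer.dilation * (layer.kernel - 1) + 1


def receptive_field(layers: Sequence[ConvLayer]) -> int:
    """Receptive field (per axis, in input pixels) of the last layer's output."""
    rf, jump = 1, 1  # jump: input-pixel distance between adjacent outputs
    for layer in layers:
        rf += (effective_kernel(layer) - 1) * jump
        jump *= layer.stride
    return rf


def weight_count(layers: Sequence[ConvLayer]) -> int:
    # Weights per (input channel, output channel) pair; dilation adds none.
    return sum(layer.kernel ** 2 for layer in layers)


plain = [ConvLayer(3) for _ in range(3)]
dilated = [ConvLayer(3, dilation=d) for d in (1, 2, 4)]
print(receptive_field(plain), receptive_field(dilated))  # 7 15
print(weight_count(plain), weight_count(dilated))        # 27 27
```

With three 3x3 layers, dilation rates (1, 2, 4) raise the receptive field from 7 to 15 pixels while the number of weights stays the same, and with stride 1 the feature map is not downsampled.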


Detailed Description of Embodiments

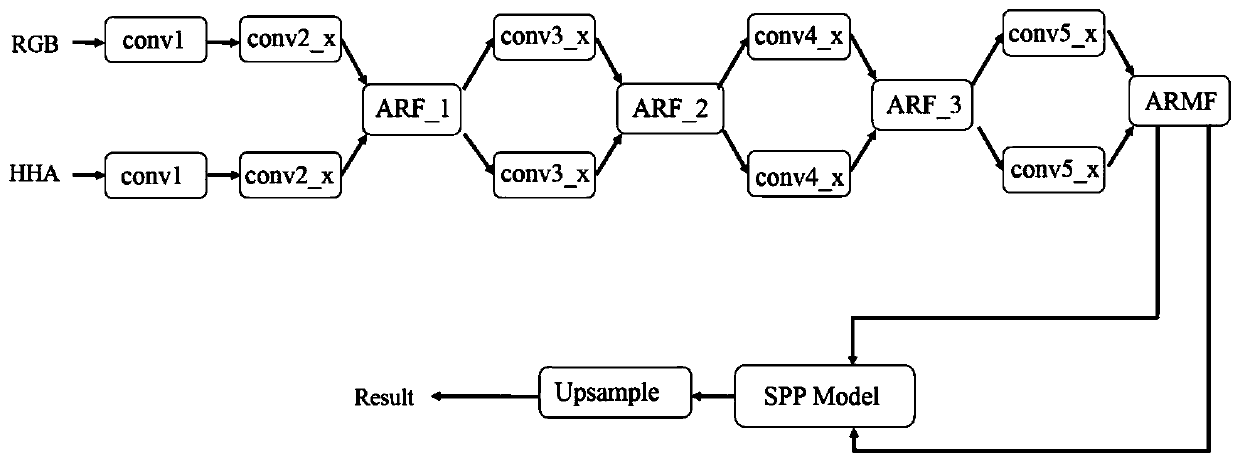

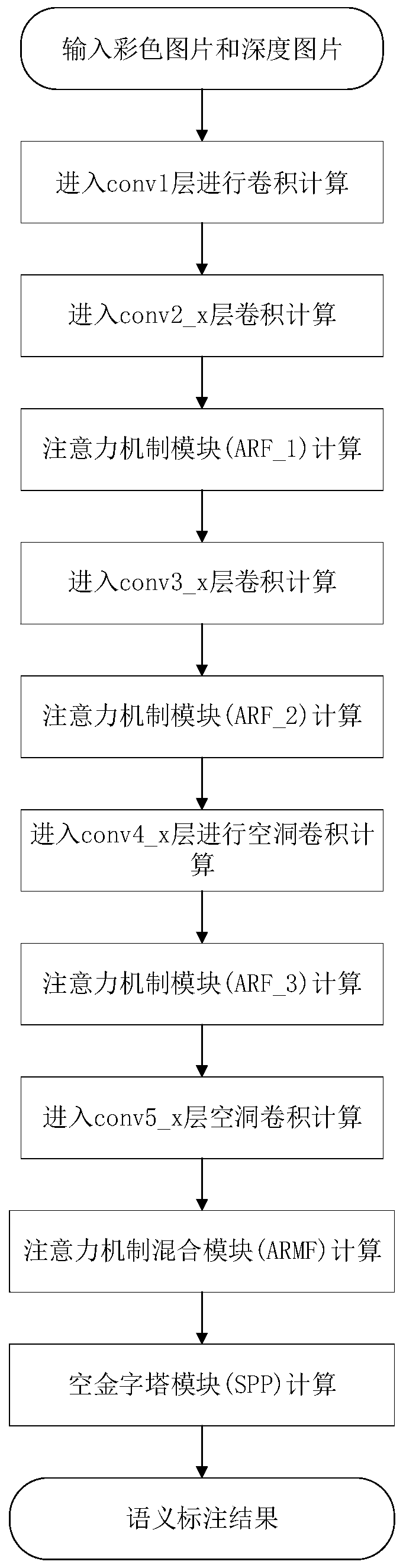

[0037] As shown in Figures 1 and 2, this indoor scene semantic annotation method includes the following steps:

[0038] (1) Input color picture and depth picture;

[0039] (2) Entering the neural network, the color picture and the depth picture pass through conv1 and conv2_x respectively;

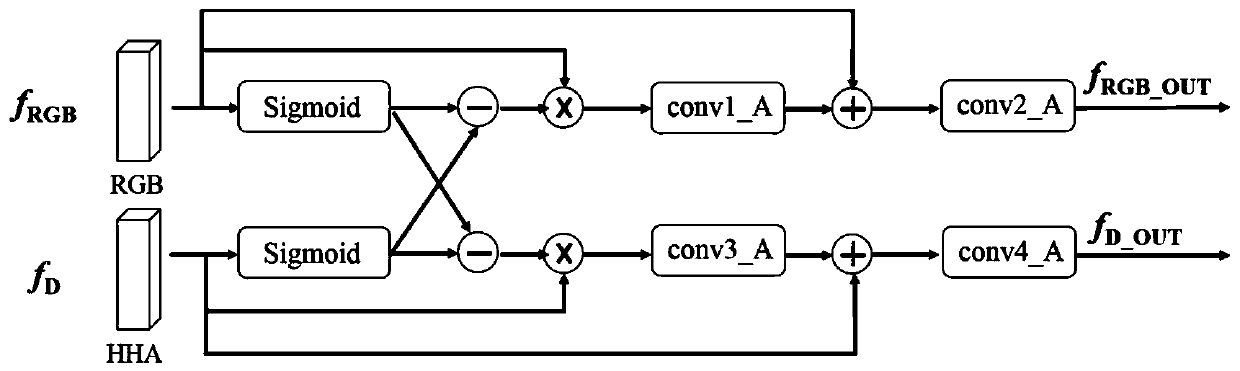

[0040] (3) Enter the first attention mechanism module ARF_1, and obtain the feature map through the calculation of ARF_1;

[0041] (4) Enter conv3_x for convolution calculation;

[0042] (5) Enter the second attention mechanism module ARF_2, and obtain the feature map through the calculation of ARF_2;

[0043] (6) Enter conv4_x to perform hole convolution calculation;

[0044] (7) Enter the third attention mechanism module ARF_3, and obtain the feature map through the calculation of ARF_3;

[0045] (8) Enter conv5_x to perform hole convolution calculation;

[0046] (9) Enter the attention mechanism mixing module ARMF for calculation;

[0047] (10) Enter the spatial pyramid module SPP...
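The order of the steps can be traced with a small structural sketch that records each stage and its feature-map size. This is not the patented network. The strides (a ResNet-style stem of stride 4, and stride 2 in conv3_x), the dilation rates 2 and 4, and the choice of ARF_1 as the point where the color and depth branches merge are all hypothetical, because the excerpt does not specify them. The truncated step (10) appears only as a single SPP entry.

```python
from dataclasses import dataclass
from typing import List, Tuple

Shape = Tuple[int, int]  # (height, width) of a feature map


@dataclass(frozen=True)
class Stage:
    name: str
    stride: int = 1    # spatial downsampling factor (ASSUMED values below)
    dilation: int = 1  # > 1 marks a "hole" (dilated) convolution stage


def apply_stage(stage: Stage, shape: Shape) -> Shape:
    """Output size with 'same' padding: ceil(size / stride).
    Dilation enlarges the receptive field but does not change the size."""
    h, w = shape
    return (-(-h // stage.stride), -(-w // stage.stride))


# Step (2): per-modality stem, run separately on the color and depth images.
# ASSUMPTION: conv1 = ResNet-style 7x7/2 conv plus 2x max-pool (stride 4 total).
BRANCH = [Stage("conv1", stride=4), Stage("conv2_x")]

# Steps (3)-(10). HYPOTHETICAL dilation rates; the source only states that
# conv4_x and conv5_x use hole convolution.
TRUNK = [
    Stage("ARF_1"),
    Stage("conv3_x", stride=2),
    Stage("ARF_2"),
    Stage("conv4_x", dilation=2),
    Stage("ARF_3"),
    Stage("conv5_x", dilation=4),
    Stage("ARMF"),
    Stage("SPP"),
]


def trace(color: Shape, depth: Shape) -> List[Tuple[str, Shape]]:
    """Return (stage name, feature-map size) pairs in execution order."""
    log: List[Tuple[str, Shape]] = []
    for tag, shape in (("color", color), ("depth", depth)):
        for stage in BRANCH:
            shape = apply_stage(stage, shape)
            log.append((f"{stage.name}[{tag}]", shape))
    # HYPOTHETICAL: treat ARF_1 as the merge point, which needs both branch
    # outputs (last color entry and last depth entry) to have the same size.
    color_out, depth_out = log[len(BRANCH) - 1][1], log[-1][1]
    if color_out != depth_out:
        raise ValueError("color and depth feature maps must match in size")
    shape = depth_out
    for stage in TRUNK:
        shape = apply_stage(stage, shape)
        log.append((stage.name, shape))
    return log


for name, size in trace((480, 640), (480, 640)):
    print(name, size)
```

Under these assumptions, a 480x640 input leaves every stage from conv3_x onward at 60x80. The hole-convolution stages enlarge the receptive field without further downsampling, which is the usual reason dilation is used in semantic segmentation networks.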
