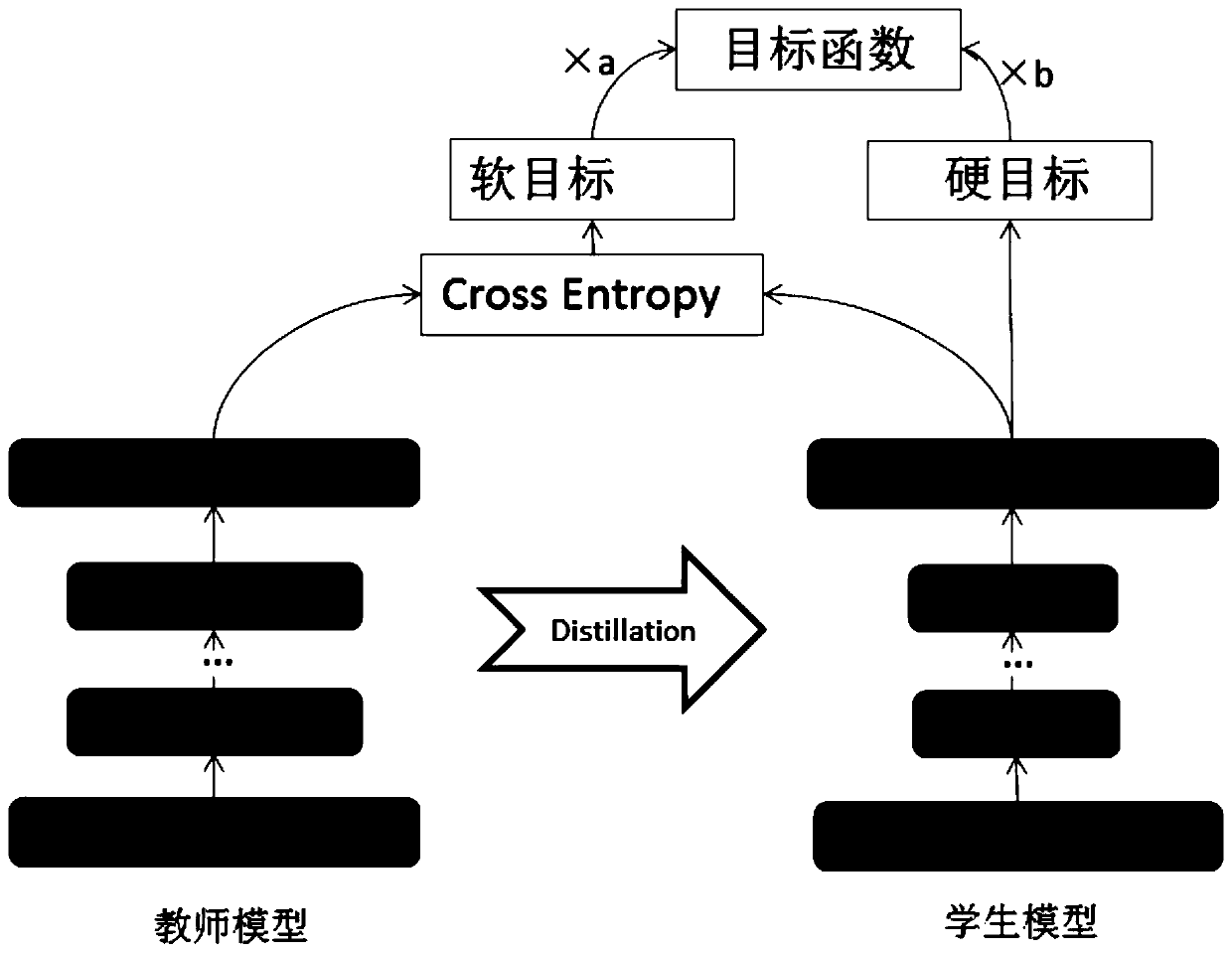

Neural network model compression and acceleration method based on entropy attention

The invention relates to neural network models and convolutional neural network technology in the field of neural networks. It addresses problems such as large parameter counts, long inference times, and long training times.


Embodiment Construction

[0047] To make the purpose, technical solution, and advantages of the present invention clearer, the invention is further described below using the Cifar10 target recognition task as an example.

[0048] Each Cifar10 training sample is a 32×32 optical image. Example images from the dataset are shown in Figure 5 of the accompanying drawings.

[0049] The Cifar10 experiments use ResNet-series networks. Networks of different depths and widths serve as the teacher network and the student network, respectively. The experimental results are shown in Table 1.
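The teacher-student setup above is compared against a KD baseline in Table 1. Assuming that column refers to conventional knowledge distillation, the standard temperature-scaled distillation loss can be sketched as shown below for orientation. This sketch is the conventional KD baseline, not the information entropy attention loss of the present invention, which this section does not describe. The temperature of 4, the weight alpha = 0.9, and the function names are hypothetical choices made only for illustration.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    # Temperature-scaled softmax; subtracting the max keeps exp() from overflowing.
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, labels, temperature=4.0, alpha=0.9):
    """Batch-averaged conventional knowledge distillation loss (sketch).

    Hypothetical defaults: temperature=4 and alpha=0.9 are illustrative,
    not values taken from the patent.
    alpha weights the soft-target KL(teacher || student) term, scaled by T^2
    so its magnitude stays comparable across temperatures; 1 - alpha weights
    the ordinary cross-entropy on the hard labels.
    """
    eps = 1e-12  # guards log(0)
    total = 0.0
    for s, t, y in zip(student_logits, teacher_logits, labels):
        p_t = softmax(t, temperature)  # softened teacher distribution
        p_s = softmax(s, temperature)  # softened student distribution
        kl = sum(pt * (math.log(pt + eps) - math.log(ps + eps))
                 for pt, ps in zip(p_t, p_s))
        ce = -math.log(softmax(s)[y] + eps)  # hard-label cross-entropy at T = 1
        total += alpha * temperature ** 2 * kl + (1.0 - alpha) * ce
    return total / len(labels)
```

If the student and teacher logits are identical, the KL term vanishes and only the weighted cross-entropy remains. This makes a quick sanity check on the sketch.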

[0050] Table 1: Comparison experiments on knowledge transfer based on information entropy attention on Cifar10 (R-d-k denotes a ResNet of depth d and width factor k; accuracies are in %)

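[0051]

| Teacher | Parameters (M) | Student | Parameters (M) | Teacher (%) | Student (%) | F_AT | EAT | KD | F_AT+KD | EAT+KD |
|---|---|---|---|---|---|---|---|---|---|---|
| R-16-2 | 0.69 | R-16-1 | 0.18 | 93.83 | 90.85 | 91.41 | 91.31 | 91.33 | 91.31 | 91.33 |
| R-40-2 | 2.2 | R-16-1 | 0.18 | 94.82 | 90.85 | 91.17 | 91.3... | | | |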
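To help read the table, the parameter and accuracy columns of the two rows can be converted into compression ratios and teacher-student accuracy gaps. This is plain arithmetic on the transcribed numbers. It assumes the student(%) column reports the student trained without any knowledge transfer. The `Pair` type and the `summarize` helper are hypothetical names introduced only for this illustration.

```python
from typing import NamedTuple, Tuple


class Pair(NamedTuple):
    teacher: str
    teacher_params_m: float  # teacher parameter count, millions
    student: str
    student_params_m: float  # student parameter count, millions
    teacher_acc: float       # teacher accuracy, %
    student_acc: float       # student accuracy without transfer (assumed), %


# Values transcribed from the two rows of Table 1 (method columns omitted).
PAIRS = [
    Pair("R-16-2", 0.69, "R-16-1", 0.18, 93.83, 90.85),
    Pair("R-40-2", 2.2, "R-16-1", 0.18, 94.82, 90.85),
]


def summarize(p: Pair) -> Tuple[float, float]:
    # Compression ratio: how many times fewer parameters the student has.
    ratio = p.teacher_params_m / p.student_params_m
    # Accuracy gap that knowledge transfer is trying to close, in points.
    gap = p.teacher_acc - p.student_acc
    return round(ratio, 1), round(gap, 2)


for p in PAIRS:
    ratio, gap = summarize(p)
    print(f"{p.teacher} -> {p.student}: {ratio}x fewer parameters, "
          f"teacher ahead by {gap} points")
```

Under these assumptions, the R-16-1 student has about 3.8 times fewer parameters than R-16-2 and about 12.2 times fewer than R-40-2. The corresponding teacher accuracy advantages are 2.98 and 3.97 points.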
