Image fusion method based on self-learning neural unit

A technology relating to neural units and image fusion, applied in the field of image fusion based on self-learning neural units.

Embodiment Construction

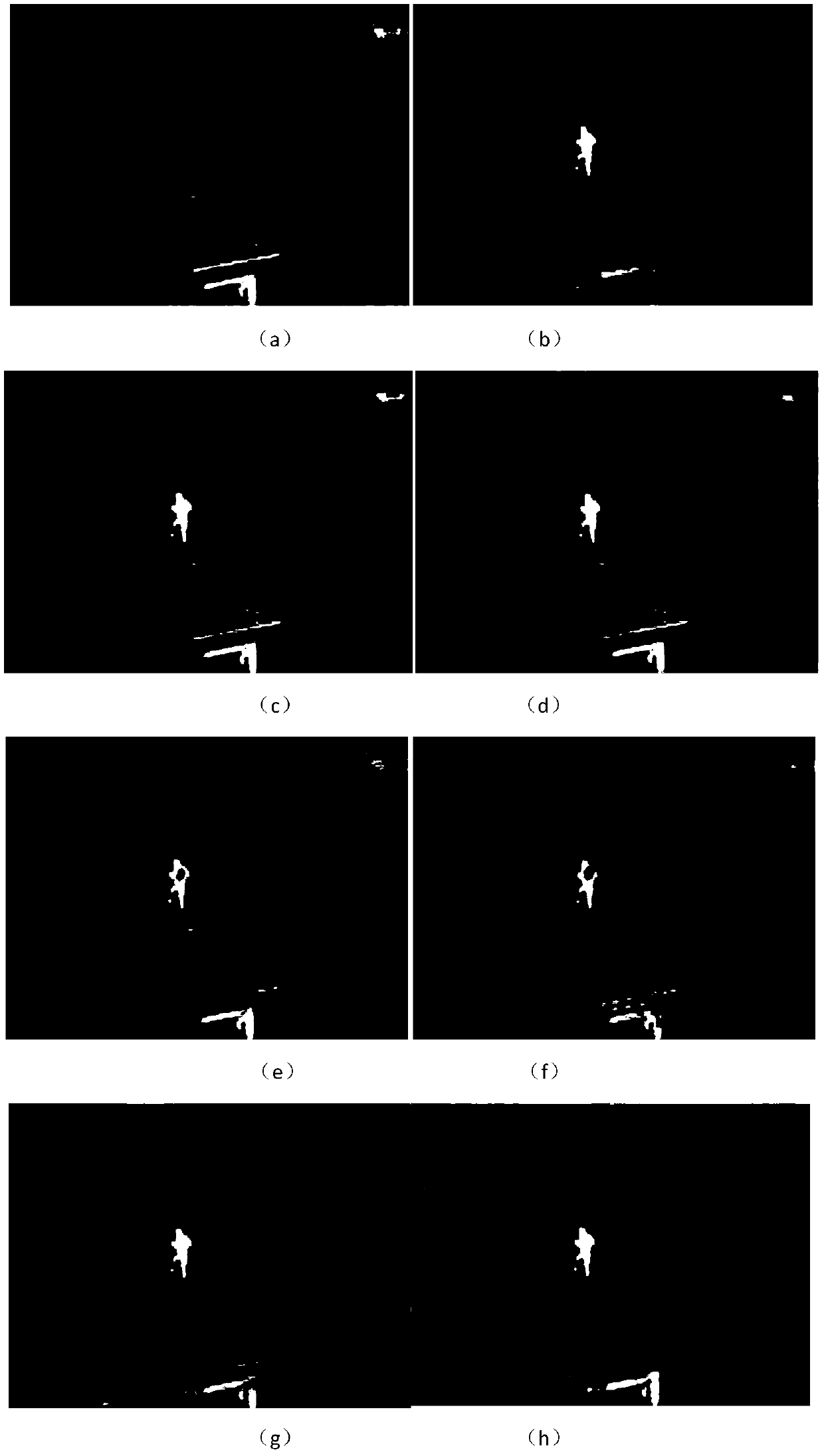

[0071] An embodiment of the present invention, applied to infrared and visible (IR-VIS) image pairs, is described in detail below with reference to the accompanying drawings. The embodiment is implemented on the basis of the technical solution of the present invention. As shown in Figure 1, the detailed implementation and specific operation steps are as follows:

[0072] Step 1. Apply Mask R-CNN to the infrared and visible images to obtain the corresponding mask images, mask matrices, category information, and score information. Based on a subjective visual check of whether the classification results are correct and relevant, the mask matrix of the infrared image whose category is "person" is selected.
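The selection in Step 1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented procedure: the embodiment selects the "person" mask by visual judgment, whereas this sketch replaces that manual check with a confidence threshold. It assumes detector outputs shaped like torchvision's Mask R-CNN (per-instance soft masks in [0, 1], integer labels, scores) with the mask channel squeezed to `(N, H, W)`, and that label id 1 means "person" as in torchvision's COCO indexing. The names `select_person_mask`, `score_thresh`, and `mask_thresh` are hypothetical.

```python
import numpy as np

# Assumption: COCO category id for "person" as used by torchvision's Mask R-CNN.
PERSON_LABEL = 1


def select_person_mask(masks, labels, scores, score_thresh=0.5, mask_thresh=0.5):
    """Merge all 'person' instance masks into a single binary H x W mask.

    masks:  float array (N, H, W), per-pixel probabilities in [0, 1]
    labels: int array (N,), category id per instance
    scores: float array (N,), detection confidence per instance
    The score threshold is a hypothetical stand-in for the manual visual check.
    """
    masks = np.asarray(masks, dtype=float)
    labels = np.asarray(labels)
    scores = np.asarray(scores, dtype=float)
    keep = (labels == PERSON_LABEL) & (scores >= score_thresh)
    if not keep.any():
        # No confident person detections: return an all-False mask.
        return np.zeros(masks.shape[1:], dtype=bool)
    # Binarize each kept instance, then take the union across instances.
    return (masks[keep] >= mask_thresh).any(axis=0)


# Synthetic example: one confident person, one car, one low-confidence person.
masks = np.zeros((3, 4, 4))
masks[0, :2, :2] = 0.9
masks[1, 2:, 2:] = 0.8
masks[2, 2:, :2] = 0.7
labels = np.array([1, 3, 1])
scores = np.array([0.95, 0.9, 0.3])
person_mask = select_person_mask(masks, labels, scores)
print(int(person_mask.sum()))  # → 4
```

Taking the union of instance masks yields one binary mask per infrared image, which is a convenient form for guiding a later fusion step; keeping instances separate would also be reasonable if each person were to be handled individually.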

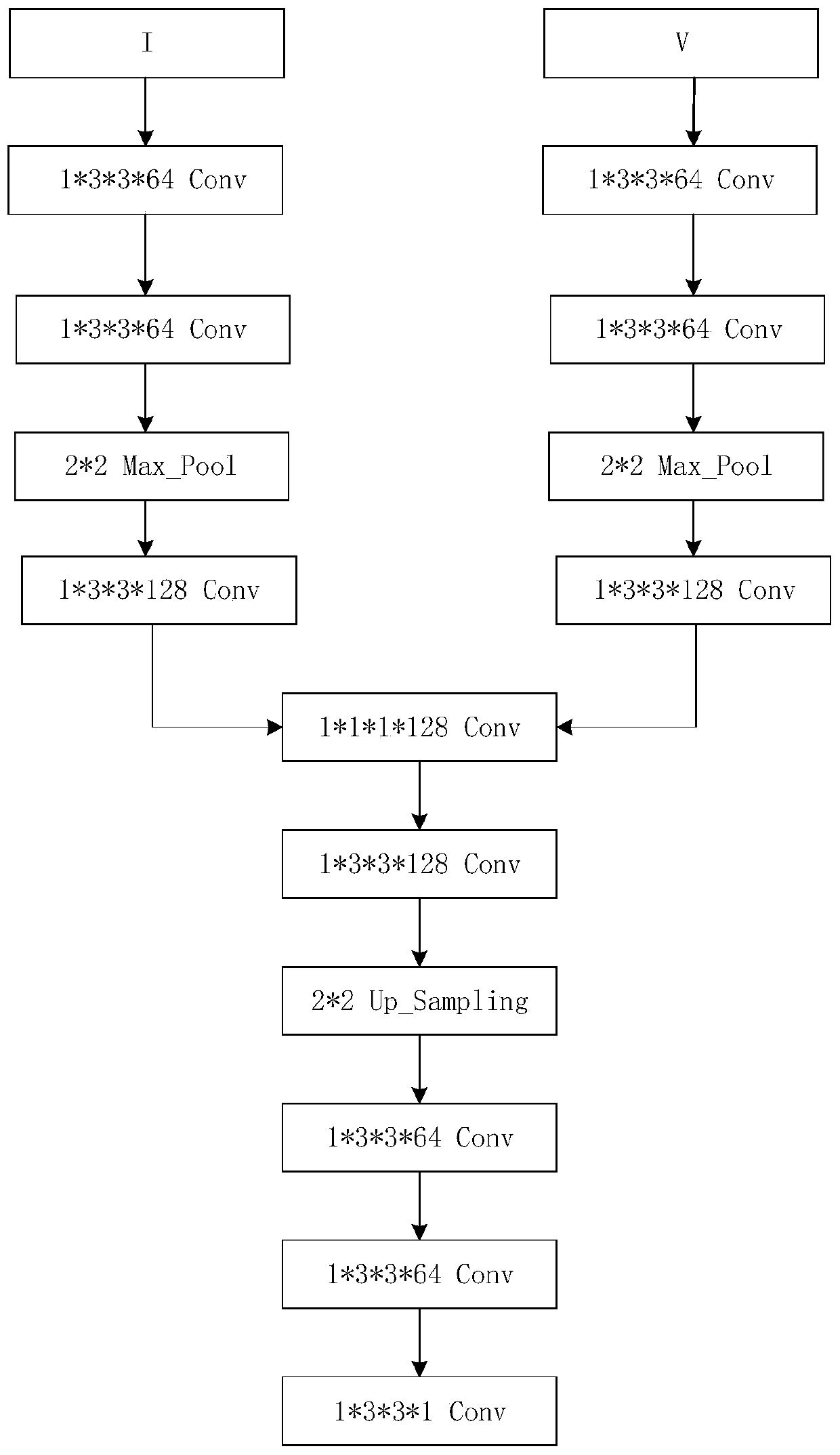

[0073] Step 2. Build an autoencoder network that uses a convolutional neural network (CNN) to select, fuse, and reconstruct image features. The autoencoder network consists of three parts: an encoding layer, a fusion layer, and a decoding...
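The three-part structure named in Step 2 (encoding layer, fusion layer, decoding layer) can be sketched in plain NumPy. The visible text is truncated before the layer configuration and fusion rule, so everything concrete here is an assumption: single 3x3 convolutions with ReLU, a hypothetical channel count of 8, random weights standing in for trained ones, and a hypothetical mask-guided fusion rule that takes infrared features inside the Step 1 "person" mask and visible features elsewhere. The names `conv2d` and `FusionAutoencoder` are illustrative only; this is not the patent's network.

```python
import numpy as np


def conv2d(x, w, b):
    """Zero-padded 'same' convolution (cross-correlation, as in CNN layers).

    x: (C_in, H, W), w: (C_out, C_in, kh, kw), b: (C_out,) -> (C_out, H, W)
    """
    _, _, kh, kw = w.shape
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.empty((w.shape[0], h, wd))
    for i in range(h):
        for j in range(wd):
            patch = xp[:, i:i + kh, j:j + kw]  # (C_in, kh, kw)
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2])) + b
    return out


class FusionAutoencoder:
    """Hypothetical encoder / fusion / decoder sketch with untrained weights."""

    def __init__(self, channels=8, seed=0):
        rng = np.random.default_rng(seed)
        # Random weights are placeholders for weights learned by training.
        self.enc_w = rng.normal(0.0, 0.1, (channels, 1, 3, 3))
        self.enc_b = np.zeros(channels)
        self.dec_w = rng.normal(0.0, 0.1, (1, channels, 3, 3))
        self.dec_b = np.zeros(1)

    def encode(self, img):
        # Encoding layer: grayscale (H, W) image -> (channels, H, W) features.
        return np.maximum(conv2d(img[None], self.enc_w, self.enc_b), 0.0)

    def fuse(self, f_ir, f_vis, mask):
        # Fusion layer (assumed rule): IR features inside the person mask,
        # visible-light features everywhere else.
        m = mask.astype(float)[None]
        return m * f_ir + (1.0 - m) * f_vis

    def decode(self, feats):
        # Decoding layer: (channels, H, W) features -> (H, W) fused image.
        return conv2d(feats, self.dec_w, self.dec_b)[0]

    def __call__(self, ir, vis, mask):
        return self.decode(self.fuse(self.encode(ir), self.encode(vis), mask))


rng = np.random.default_rng(1)
ir, vis = rng.random((16, 16)), rng.random((16, 16))
mask = np.zeros((16, 16), dtype=bool)
mask[4:12, 4:12] = True
model = FusionAutoencoder()
fused = model(ir, vis, mask)
print(fused.shape)  # → (16, 16)
```

Fusing in feature space rather than pixel space lets one shared encoder and decoder handle both modalities, with only the fusion layer deciding which source dominates in each region.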
