Moving target tracking method based on template matching and deep classification network

A moving target tracking method that combines template matching with a deep classification network, applied in the field of image processing. It addresses the problems that existing models cannot keep tracking the target accurately, cannot achieve long-term accurate tracking, and rely on classifiers whose recognition ability is not strong enough, and it achieves the effect of accurately tracking the target.

Embodiment Construction

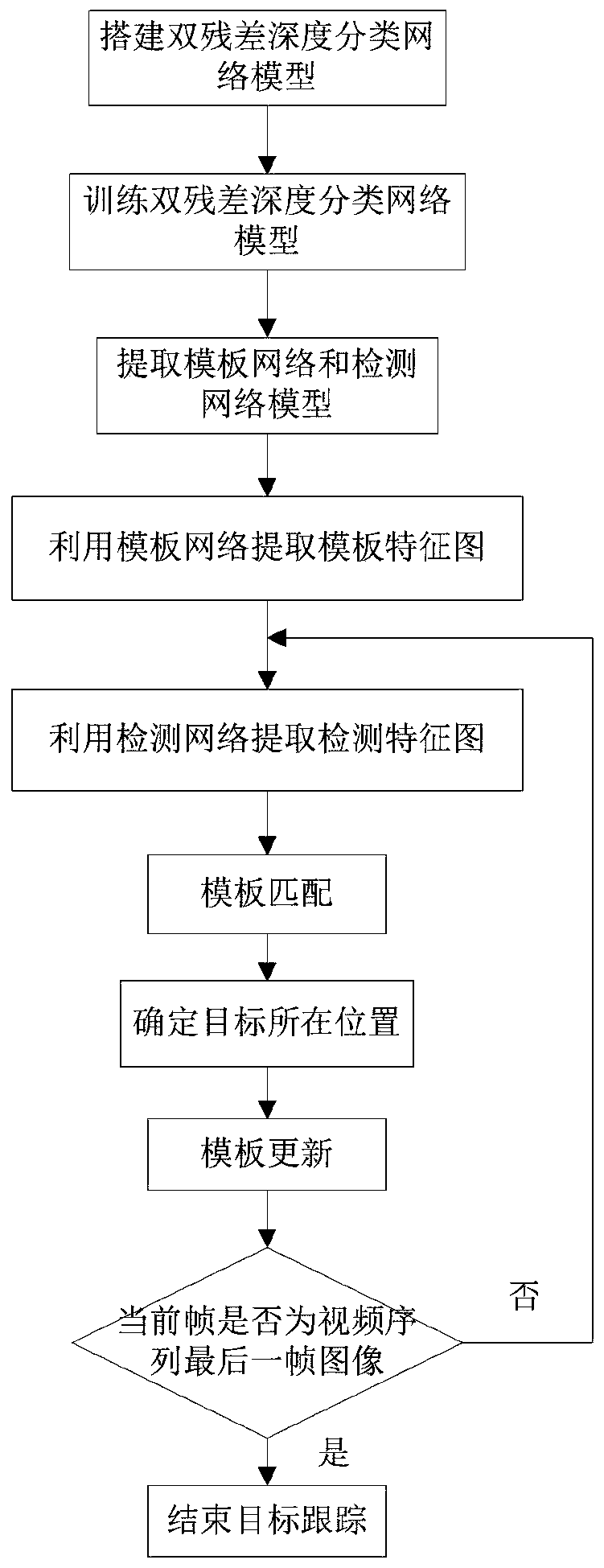

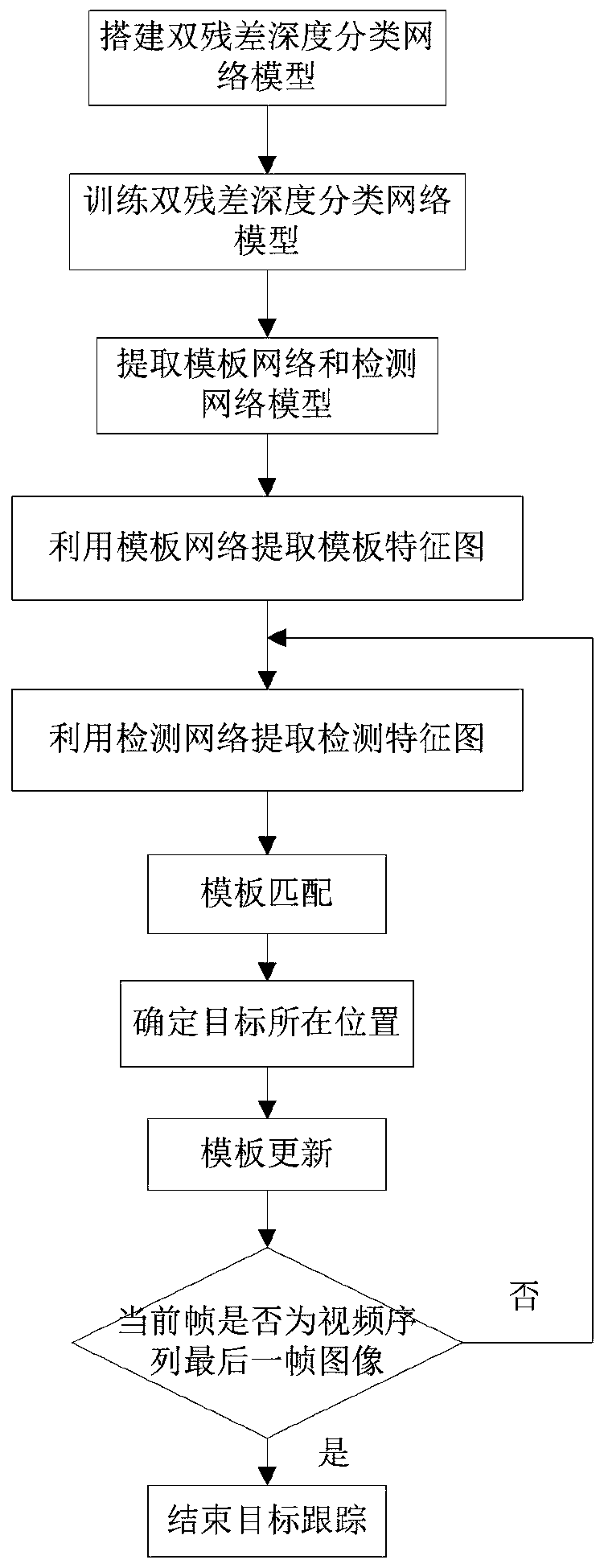

[0036] The embodiments and effects of the present invention will be further described below in conjunction with the accompanying drawings.

[0037] Referring to Figure 1, the concrete steps of the present invention are as follows.

[0038] Step 1, build a dual residual deep classification network model.

[0039] 1.1) Set up the front-end network:

[0040] Adjust the input-layer parameters of two existing deep residual neural networks (ResNet50): set the number of neurons in the input layer of the first network to 224×224×3 and that of the second network to 448×448×3, and leave the parameters of all other layers unchanged. These two deep residual neural networks serve as the front-end network of the dual residual deep classification network model;
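The effect of the two input sizes can be illustrated with a small shape calculation. The Python sketch below is not taken from the patent; it assumes the standard ResNet50 layout (a 7×7 stride-2 stem convolution, a 3×3 stride-2 max pool, four residual stages with strides 1, 2, 2, 2, and 2048 output channels), and the names `conv_out`, `BranchShape` and `resnet50_branch_shape` are hypothetical helpers introduced only for illustration.

```python
from dataclasses import dataclass


def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output length of a convolution or pooling window along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


@dataclass(frozen=True)
class BranchShape:
    input_hw: int        # input height and width (square input assumed)
    input_elements: int  # height x width x channels, the "input layer" size
    final_hw: int        # spatial size of the last residual stage's output
    final_channels: int  # channel count of the last residual stage's output


def resnet50_branch_shape(input_hw: int, channels: int = 3) -> BranchShape:
    """Trace spatial sizes through an assumed standard ResNet50 backbone."""
    size = conv_out(input_hw, kernel=7, stride=2, padding=3)  # stem convolution
    size = conv_out(size, kernel=3, stride=2, padding=1)      # max pooling
    for stage_stride in (1, 2, 2, 2):                         # four residual stages
        size = conv_out(size, kernel=3, stride=stage_stride, padding=1)
    return BranchShape(
        input_hw=input_hw,
        input_elements=input_hw * input_hw * channels,
        final_hw=size,
        final_channels=2048,  # assumption: standard ResNet50 output width
    )


first = resnet50_branch_shape(224)   # first front-end network
second = resnet50_branch_shape(448)  # second front-end network
print(first)
print(second)
```

Under these assumptions the second branch sees four times as many input values as the first and keeps a final feature map with twice the side length, so it retains finer spatial detail of the same image region. How the patent combines the two branches is described in the back-end step, not here.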

[0041] 1.2) Set up the back-end network:

[0042] Two three-layer fully connected networks are built as the back-end network of the dual residual deep cla...
