Mask pooling model training method for pedestrian re-identification, and pedestrian re-identification method

A pedestrian re-identification and model training technology, applied in the field of computer vision, that achieves a good training effect and improves the accuracy and efficiency of pedestrian re-identification

Embodiment 1

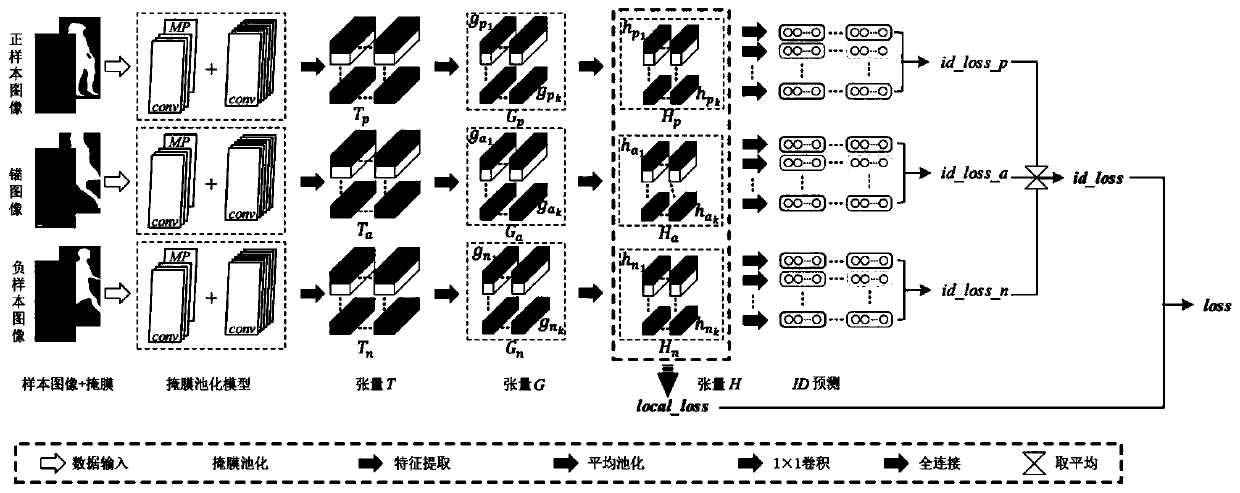

[0059] As shown in Figure 1, this embodiment provides a mask pooling model training method for pedestrian re-identification, comprising the following training steps:

[0060] S1. Obtain anchor image a, positive sample image p, and negative sample image n;

[0061] S2. Input a, p, n and their corresponding masks into the mask pooling model respectively, and obtain the corresponding tensors $T_a$, $T_p$, $T_n$;

[0062] S3. Perform a pooling operation and a convolution operation on each of $T_a$, $T_p$, $T_n$ to obtain the corresponding tensors $H_a$, $H_p$, $H_n$;

[0063] S4. Input $H_a$, $H_p$, $H_n$ into the classifier respectively to obtain the corresponding prediction results $R_a$, $R_p$, $R_n$;

[0064] S5. Calculate the loss value from the prediction results $R_a$, $R_p$, $R_n$;

[0065] S6. Train the mask pooling model according to the loss value.
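The data flow of steps S1 to S5 can be sketched in plain Python as follows. This is only an illustration under stated assumptions, not the patented implementation: the excerpt does not specify the network architecture or the loss function, so `mask_model` (elementwise masking), `avg_pool_2x2`, `conv_1x1`, the linear-softmax `classify`, and the summed cross-entropy in `total_loss` are all hypothetical stand-ins. Step S6 (the parameter update) would normally be delegated to an automatic-differentiation framework and is omitted here.

```python
import math
import random


def mask_model(image, mask):
    # S2 stand-in (assumption): suppress background pixels by elementwise masking.
    return [[v * m for v, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask)]


def avg_pool_2x2(t):
    # S3, pooling part: non-overlapping 2x2 average pooling (even sizes assumed).
    return [[(t[i][j] + t[i][j + 1] + t[i + 1][j] + t[i + 1][j + 1]) / 4.0
             for j in range(0, len(t[0]) - 1, 2)]
            for i in range(0, len(t) - 1, 2)]


def conv_1x1(t, weight, bias):
    # S3, convolution part: a single-channel 1x1 convolution.
    return [[weight * v + bias for v in row] for row in t]


def classify(h, weights, biases):
    # S4: flatten, apply a linear layer, then a numerically stable softmax.
    flat = [v for row in h for v in row]
    logits = [sum(w * x for w, x in zip(ws, flat)) + b
              for ws, b in zip(weights, biases)]
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def total_loss(samples, params):
    # S5 (assumption): sum of cross-entropy losses over the a, p, n branches.
    loss = 0.0
    for image, mask, label in samples:
        t = mask_model(image, mask)                          # S2: T
        h = conv_1x1(avg_pool_2x2(t),
                     params["conv_w"], params["conv_b"])     # S3: H
        r = classify(h, params["cls_w"], params["cls_b"])    # S4: R
        loss += -math.log(r[label])
    return loss


random.seed(0)


def rand_image():
    # Hypothetical 4x4 grayscale image with values in [0, 1).
    return [[random.random() for _ in range(4)] for _ in range(4)]


center_mask = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
# S1: anchor and positive share identity 0, the negative has identity 1.
samples = [(rand_image(), center_mask, 0),
           (rand_image(), center_mask, 0),
           (rand_image(), center_mask, 1)]
params = {
    "conv_w": 1.0, "conv_b": 0.0,
    "cls_w": [[random.uniform(-1, 1) for _ in range(4)] for _ in range(2)],
    "cls_b": [0.0, 0.0],
}
loss = total_loss(samples, params)
print(f"loss = {loss:.4f}")
```

All three samples pass through the same functions with the same parameters, which mirrors the shared model applied to a, p, and n in steps S2 to S4.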

[0066] The traditional triplet loss method takes three inputs: anchor image a, positive sample image p, and ...
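For reference, the traditional triplet loss mentioned above is commonly written as max(d(a, p) - d(a, n) + margin, 0), where d is a distance between feature vectors. A minimal sketch follows, assuming Euclidean distance and a hypothetical margin of 0.3; neither value is taken from the patent text, and the function operates on feature vectors rather than raw images.

```python
import math


def euclidean(u, v):
    # Euclidean distance between two equal-length feature vectors.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=0.3):
    # Zero once the negative is at least `margin` farther from the anchor
    # than the positive is; positive otherwise.
    return max(euclidean(anchor, positive)
               - euclidean(anchor, negative) + margin, 0.0)


# Well-separated triplet: the positive is close, the negative is far.
easy = triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 4.0])
# Violating triplet: the negative is closer to the anchor than the positive.
hard = triplet_loss([0.0, 0.0], [3.0, 4.0], [0.0, 1.0])
print(easy, hard)
```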

Embodiment 2

[0122] A pedestrian re-identification method, comprising: inputting a pedestrian image to be recognized into a mask pooling model, wherein the mask pooling model is trained using the mask pooling model training method described in Embodiment 1.

[0123] With the mask pooling model described in Embodiment 1, background features can be gradually removed and the most critical pedestrian features obtained. Figure 4 shows the result of removing background features from pedestrian images. The outline features of the pedestrians are largely preserved while the cluttered background is effectively removed, which improves the accuracy and efficiency of pedestrian re-identification.
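The excerpt does not describe how the model output is used to match identities. A common practice in re-identification, shown here purely as an assumption, is to compare the feature vector of the query image against a gallery of features from known pedestrians and rank the gallery by similarity. The names `cosine_similarity`, `rank_gallery`, and the gallery entries are hypothetical, and the feature vectors are assumed to be non-zero.

```python
import math


def cosine_similarity(u, v):
    # Cosine of the angle between two non-zero feature vectors.
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v)


def rank_gallery(query_feature, gallery):
    # Return gallery identities sorted from most to least similar to the query.
    scored = [(cosine_similarity(query_feature, feature), pid)
              for pid, feature in gallery.items()]
    scored.sort(reverse=True)
    return [pid for _, pid in scored]


# Hypothetical features, standing in for the outputs of the trained model.
gallery = {
    "id_1": [1.0, 0.0],
    "id_2": [0.0, 1.0],
    "id_3": [0.7, 0.7],
}
ranking = rank_gallery([0.9, 0.1], gallery)
print(ranking)
```

Under this assumption, removing background features matters because clutter shared between images of different people would otherwise raise their similarity scores.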
