Method for accurately positioning moving objects based on deep learning

A deep-learning-based technique for precisely positioning moving objects. It addresses the heavy computation and reduced real-time performance that keep existing approaches from being applied in practice, and it achieves real-time positioning.

Embodiment 1

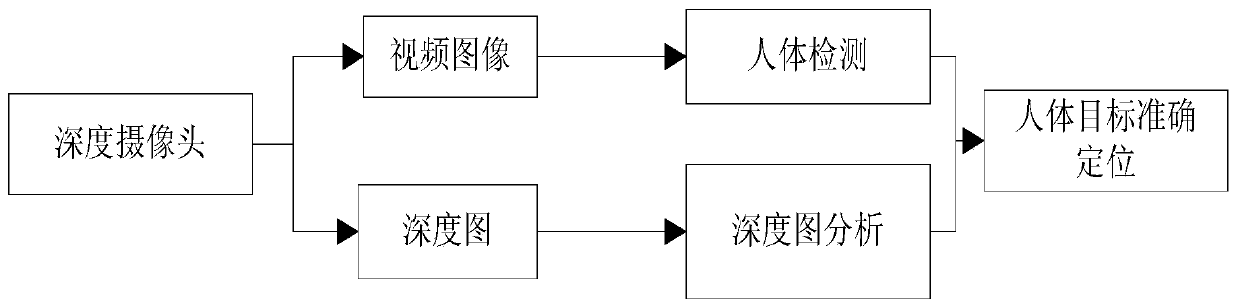

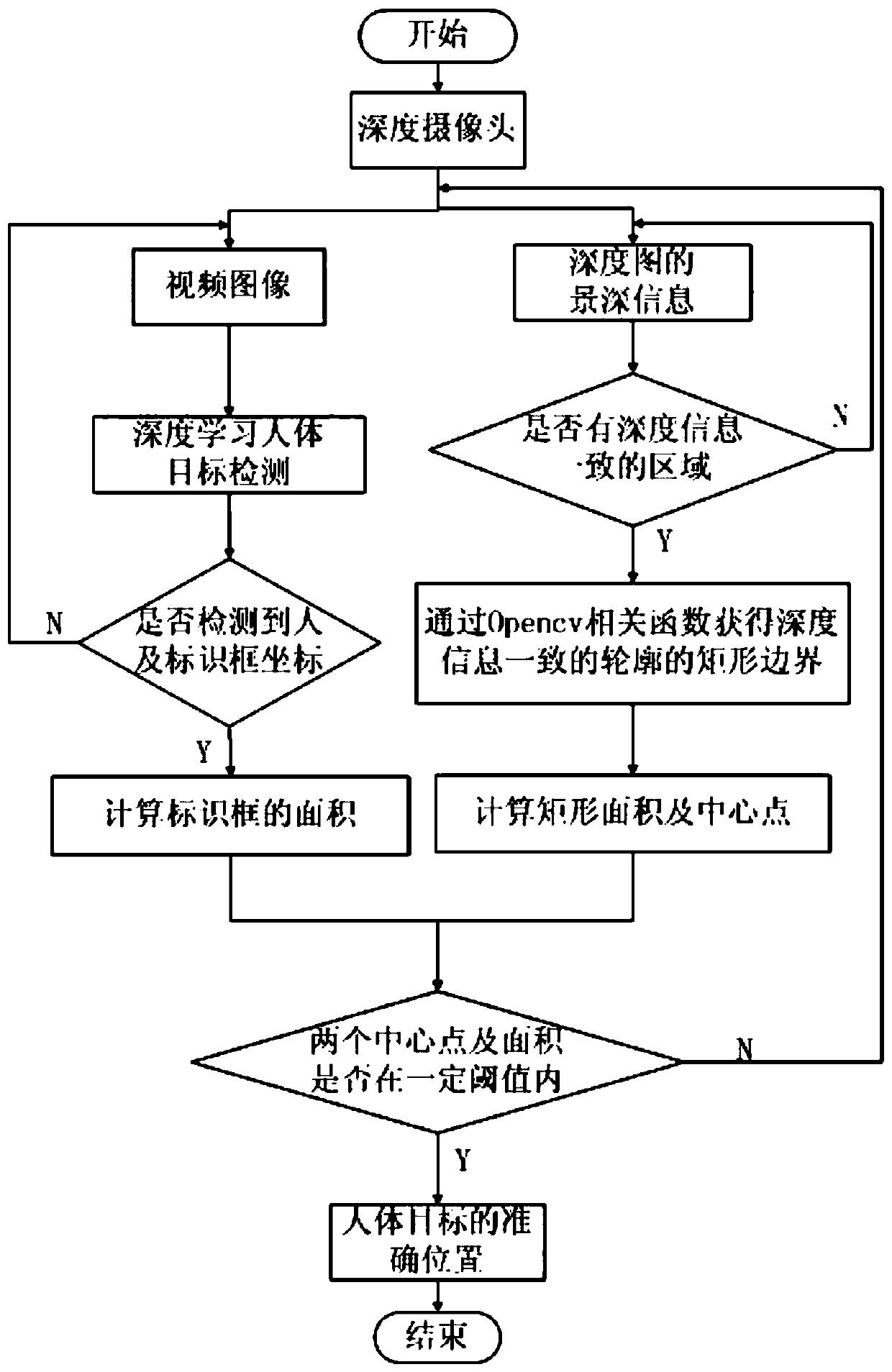

[0049] Example 1. A method for precisely positioning moving objects based on deep learning, as shown in Figures 1-5, proceeds as follows:

[0050] a. Obtain the video sequence to be detected and the corresponding depth map;

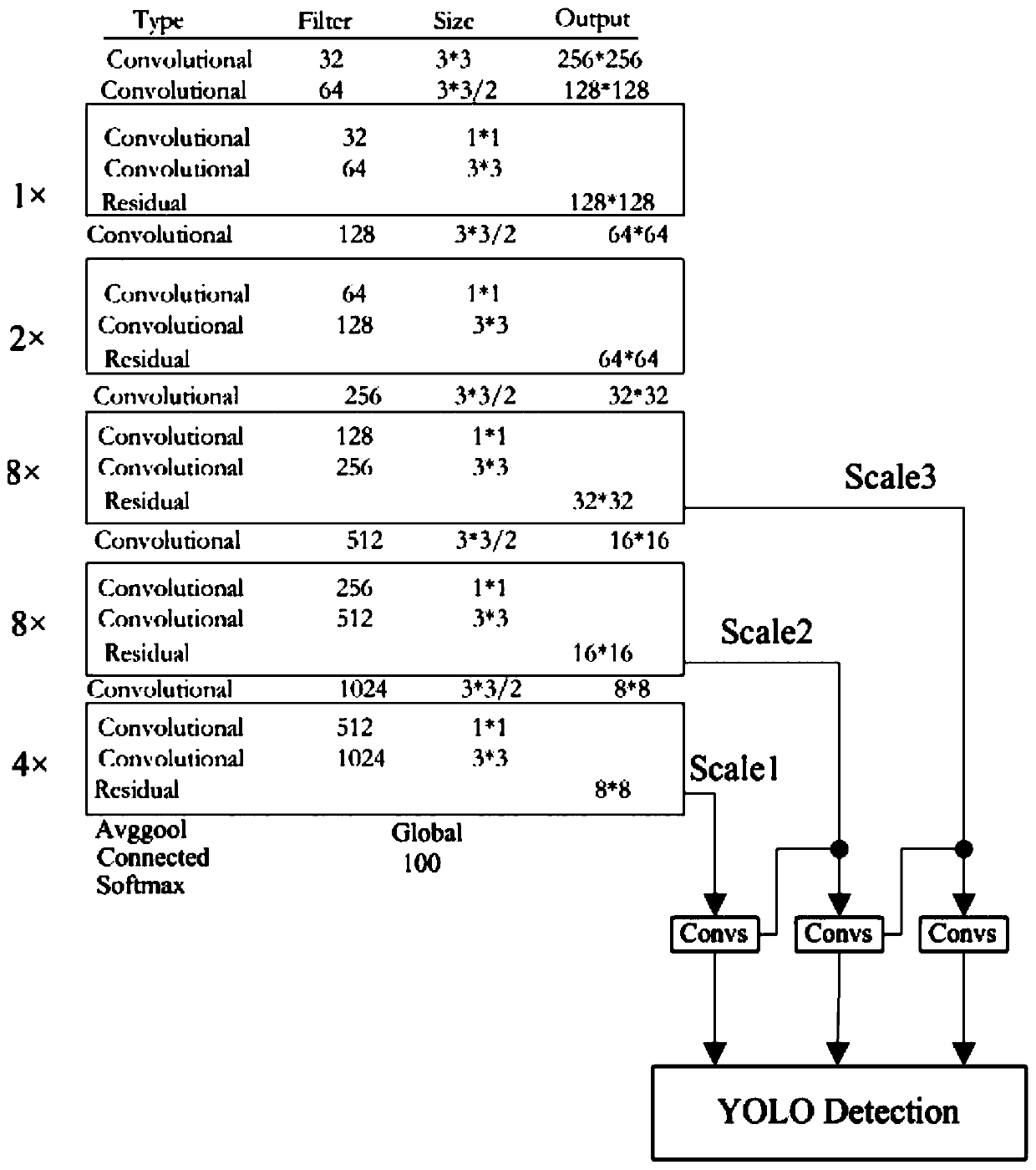

[0051] b. Use darknet-yolo-v3 to detect the moving target in the video sequence and draw a marked frame (bounding box) around it;

[0052] c. Using the depth information in the depth map, apply the relevant OpenCV functions to find the contour in the depth map, and draw the bounding rectangle that encloses the contour to obtain the rectangle of the region of interest;

[0053] d. Calculate the area and center point of the marked frame, and the area and center point of the rectangle;

[0054] e. Compare the area and center point of the marked frame with the area and center point of the rectangle. When the two match within a preset threshold range, the position of the marked f...
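Steps d and e can be sketched as follows. This is a minimal illustration, not the patent's implementation: the source does not define the matching criterion or the threshold values, so the area-ratio test, the center-distance test, and the names `Box`, `boxes_match`, `max_area_ratio`, and `max_center_dist` are all assumptions. Boxes are assumed to be given as a top-left corner plus width and height in pixels. Because the source text is cut off before it says what happens on a match, the function only reports whether the two boxes agree.

```python
from dataclasses import dataclass
from math import hypot
from typing import Tuple


@dataclass(frozen=True)
class Box:
    """Axis-aligned box in pixels: top-left corner (x, y), width w, height h."""
    x: float
    y: float
    w: float
    h: float

    @property
    def area(self) -> float:
        return self.w * self.h

    @property
    def center(self) -> Tuple[float, float]:
        return (self.x + self.w / 2.0, self.y + self.h / 2.0)


def boxes_match(marked: Box, rect: Box,
                max_area_ratio: float = 1.5,
                max_center_dist: float = 20.0) -> bool:
    """Step e (assumed criterion): areas and centers agree within thresholds.

    max_area_ratio bounds larger_area / smaller_area.
    max_center_dist bounds the Euclidean distance between centers, in pixels.
    Both defaults are placeholders, not values from the source.
    """
    if marked.area <= 0 or rect.area <= 0:
        return False  # degenerate boxes never match
    ratio = max(marked.area, rect.area) / min(marked.area, rect.area)
    (cx1, cy1), (cx2, cy2) = marked.center, rect.center
    dist = hypot(cx1 - cx2, cy1 - cy2)
    return ratio <= max_area_ratio and dist <= max_center_dist


# Hypothetical inputs: a detector's marked frame and two depth-map rectangles.
marked_frame = Box(100, 80, 60, 120)
depth_rect = Box(104, 86, 56, 110)   # overlaps the marked frame closely
far_rect = Box(300, 200, 20, 30)     # unrelated region elsewhere in the image

print(boxes_match(marked_frame, depth_rect))
print(boxes_match(marked_frame, far_rect))
```

A ratio test is used for the areas so that the threshold does not depend on how large the object appears in the image; an absolute pixel difference would be an equally valid reading of "preset threshold range".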
