Activation function parameterization improvement method based on recurrent neural network

A technique involving recurrent neural networks and activation functions, applied to neural learning methods, biological neural network models, neural architectures, and related fields. It addresses the problems that the activation function falls into its saturation region, that weights can no longer be corrected effectively, and that the recurrent neural network consequently becomes harder to train. The stated effects are preventing vanishing gradients and avoiding excessively small derivatives.

Embodiment Construction

[0032] The present invention will be further described below in conjunction with the accompanying drawings. The following examples are only used to illustrate the technical solution of the present invention more clearly, but not to limit the protection scope of the present invention.

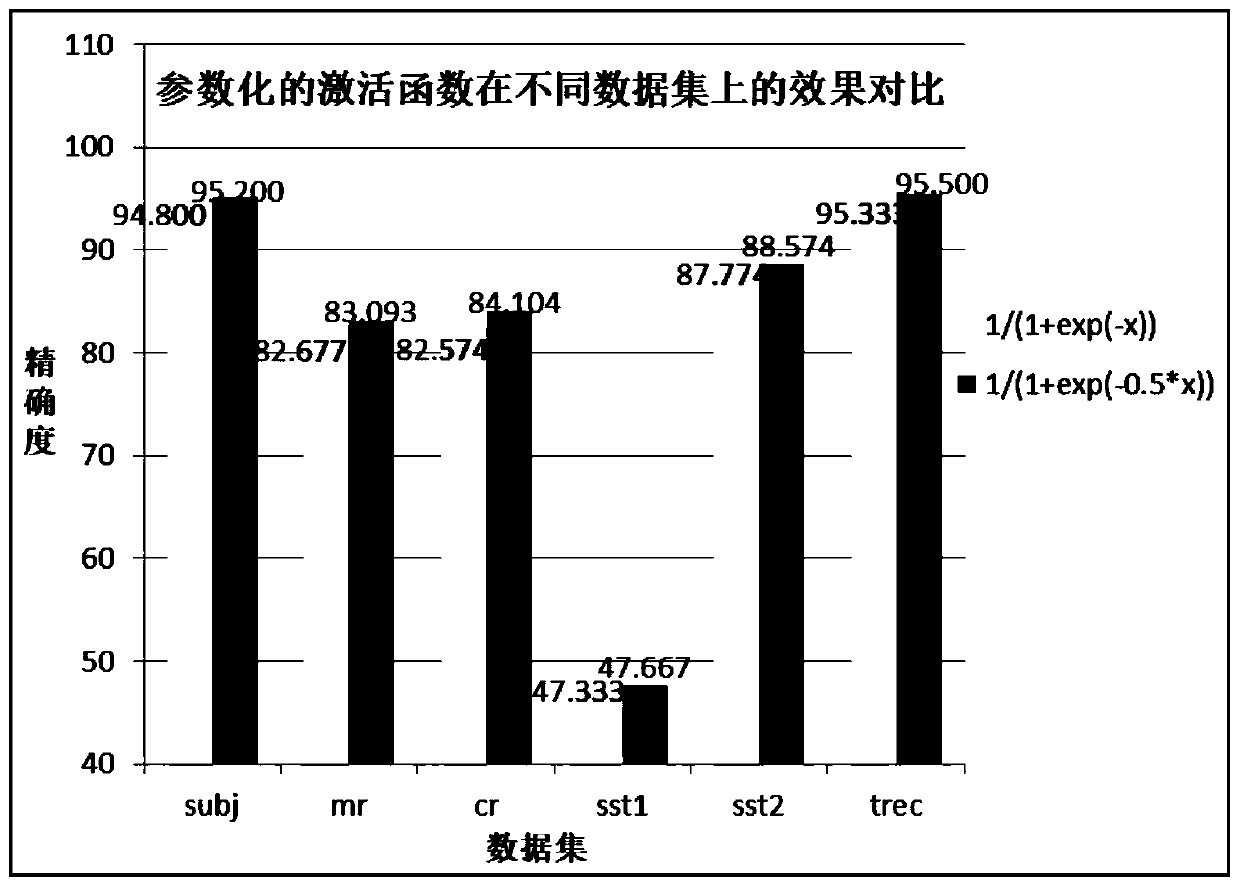

[0033] Based on the DC-Bi-LSTM network, the present invention designs a generalized Sigmoid activation function form, modifies the activation function expression in light of the internal structure of the LSTM, and settles the final form of the expression by testing different parameter combinations, thereby improving the accuracy of text classification.
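The excerpt does not give the generalized Sigmoid expression itself, so the following is only an illustrative sketch under an assumed form, a / (1 + exp(-b * (x - c))). The parameter names `a`, `b`, `c` and both function names are hypothetical and are not taken from the patent. The sketch shows how a parameter such as the slope `b` changes the derivative in the region where the standard Sigmoid saturates.

```python
import math


def param_sigmoid(x, a=1.0, b=1.0, c=0.0):
    """Assumed generalized sigmoid: a / (1 + exp(-b * (x - c))).

    a, b, c are hypothetical parameters (amplitude, slope, shift);
    a = b = 1 and c = 0 recovers the standard sigmoid.
    """
    z = b * (x - c)
    # Branch on the sign of z so that exp() never overflows.
    if z >= 0:
        return a / (1.0 + math.exp(-z))
    e = math.exp(z)
    return a * e / (1.0 + e)


def param_sigmoid_grad(x, a=1.0, b=1.0, c=0.0):
    """Derivative of the assumed form with respect to x: a * b * s * (1 - s)."""
    s = param_sigmoid(x, 1.0, b, c)  # unit-amplitude sigmoid value
    return a * b * s * (1.0 - s)


if __name__ == "__main__":
    # Compare the derivative at x = 4 for the standard and a flatter sigmoid.
    print(param_sigmoid_grad(4.0))
    print(param_sigmoid_grad(4.0, b=0.5))
```

Under this assumed form, a slope below 1 keeps the derivative larger at inputs where the standard Sigmoid is already nearly flat, which is the kind of behavior a parameter search over activation forms would compare.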

[0034] A method for improving parameterization of an activation function based on a recurrent neural network, comprising the following steps:

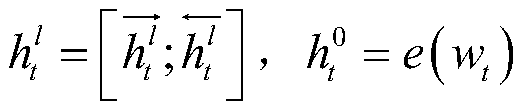

[0035] In step 1, a bidirectional long short-term memory network (Bi-LSTM) is constructed on the basis of a long short-term memory network (LSTM); in this embodiment, the Bi-LSTM has 15 layers.
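As a rough illustration of step 1, the sketch below builds a bidirectional layer from two ordinary LSTM passes (one over the sequence, one over its reverse) and stacks 15 such layers. It makes several simplifying assumptions that are not from the patent: each direction has a scalar hidden state, weights are random and untrained, the layers are stacked plainly without the dense connections of DC-Bi-LSTM, and all function names are hypothetical.

```python
import math
import random


def sigmoid(z):
    # tanh-based form of the standard sigmoid; avoids exp() overflow.
    return 0.5 * (1.0 + math.tanh(0.5 * z))


def make_cell(input_size, rng):
    # Per gate (i, f, o, g): input weights, one recurrent weight, a bias.
    return {g: ([rng.uniform(-0.5, 0.5) for _ in range(input_size)],
                rng.uniform(-0.5, 0.5), 0.0) for g in "ifog"}


def lstm_pass(cell, xs):
    """Run a scalar-state LSTM over xs (a list of input vectors)."""
    h, c, out = 0.0, 0.0, []
    for x in xs:
        pre = {g: sum(w * v for w, v in zip(cell[g][0], x))
                  + cell[g][1] * h + cell[g][2] for g in "ifog"}
        i, f, o = sigmoid(pre["i"]), sigmoid(pre["f"]), sigmoid(pre["o"])
        c = f * c + i * math.tanh(pre["g"])  # update the cell state
        h = o * math.tanh(c)                 # new hidden state
        out.append(h)
    return out


def bi_lstm_layer(fwd, bwd, xs):
    """Concatenate forward and backward hidden states at every time step."""
    hf = lstm_pass(fwd, xs)
    hb = lstm_pass(bwd, xs[::-1])[::-1]  # run reversed, then re-align
    return [[a, b] for a, b in zip(hf, hb)]


def stacked_bi_lstm(xs, num_layers=15, seed=0):
    """Plain stack of Bi-LSTM layers (dense connections omitted)."""
    rng = random.Random(seed)
    size = len(xs[0])
    for _ in range(num_layers):
        fwd, bwd = make_cell(size, rng), make_cell(size, rng)
        xs = bi_lstm_layer(fwd, bwd, xs)
        size = 2  # each layer emits [forward, backward] per time step
    return xs


if __name__ == "__main__":
    seq = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.5]]
    print(stacked_bi_lstm(seq))
```

The backward pass is what lets the representation at an early time step depend on later inputs, which a single forward LSTM cannot do.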

[0036...
