A system and method for complementing lidar three-dimensional point cloud targets

3D point cloud and lidar technology, applied in the field of lidar object detection and recognition. It addresses problems such as camera influence and the lack of depth information, and achieves enhanced point cloud density and uniformity, a good completion effect, and an improved ability to extract features.

Examples

Embodiment 1

[0029] This embodiment improves on the scheme proposed in the 2017 Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition:

[0030] "C. R. Qi, H. Su, K. Mo, and L. J. Guibas. PointNet: Deep learning on point sets for 3D classification and segmentation. Proc. Computer Vision and Pattern Recognition (CVPR), IEEE, 1(2):4, 2017".

[0031] In this embodiment, a system for complementing a lidar three-dimensional point cloud target includes a first encoding layer, a second encoding layer, and a third encoding layer;

[0032] The first encoding layer includes the first shared multi-layer perceptron and the first point-wise maximum pooling layer; the second encoding layer includes the second shared multi-layer perceptron and the second point-wise maximum pooling layer; the third encoding layer includes the third shared multi-layer perceptron and the third point-wise maximum pooling layer;

[0033] In the first encoding layer, the input data includes three-dimens...

Embodiment 2

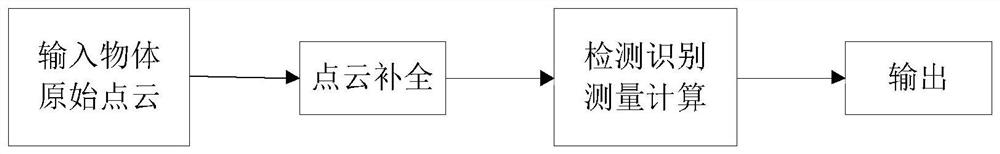

[0041] This embodiment provides a method for complementing the lidar 3D point cloud target:

[0042] Set the first encoding layer, including the first shared multi-layer perceptron and the first point-wise maximum pooling layer;

[0043] Set the second encoding layer, including the second shared multi-layer perceptron, the second point-wise maximum pooling layer;

[0044] Set the third encoding layer, including the third shared multi-layer perceptron and the third point-wise maximum pooling layer;

[0045] In the first encoding layer, the input data consists of the three-dimensional coordinates of m points, formatted as an m×3 matrix P in which each row is the three-dimensional coordinate p_k = (x, y, z) of one point. The input data is first passed through the first shared multi-layer perceptron to obtain the point feature matrix Point feature i, in which each point feature is f_1k; the point feature matrix Point feature i then yields the global feature matrix Global feature i ...
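The data flow of a single encoding layer, as described in [0045], can be sketched roughly as follows. This is a minimal NumPy illustration and not the patented implementation: the layer widths, the random weights, the ReLU activation, and the function names `shared_mlp` and `pointwise_max_pool` are all assumptions introduced here, since the source text does not specify them.

```python
import numpy as np

def shared_mlp(points, weights, biases):
    """Apply the same MLP to every row (point) of the input independently.

    ReLU is an assumed activation; the source does not name one.
    """
    features = points
    for W, b in zip(weights, biases):
        features = np.maximum(features @ W + b, 0.0)
    return features

def pointwise_max_pool(point_features):
    """Take the maximum over all points for each feature channel."""
    return point_features.max(axis=0)

rng = np.random.default_rng(0)

m = 5                                # number of input points
P = rng.normal(size=(m, 3))          # m x 3 matrix, one (x, y, z) per row

layer_sizes = [3, 16, 32]            # hypothetical widths, not from the source
weights = [rng.normal(size=(a, b)) * 0.1
           for a, b in zip(layer_sizes[:-1], layer_sizes[1:])]
biases = [np.zeros(b) for b in layer_sizes[1:]]

point_feature = shared_mlp(P, weights, biases)        # shape (m, 32), rows are f_1k
global_feature = pointwise_max_pool(point_feature)    # shape (32,)

# Reordering the input points leaves the pooled feature unchanged.
perm = rng.permutation(m)
global_feature_perm = pointwise_max_pool(shared_mlp(P[perm], weights, biases))
```

Because the perceptron weights are shared across points and the pooling takes a per-channel maximum, the pooled feature does not depend on the order of the rows in P, which is the property that makes this kind of layer suitable for unordered point sets.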
