Model training method, customer service system, data labeling system and readable storage medium

A technology for model training and training data sets, applied in the field of data processing. It addresses the high cost of acquiring labeled data, the low quality of classification models, and the poor response quality of customer service systems, with the effects of improving classification accuracy and quality, reducing cost and workload, and increasing quality and data.

Embodiment Construction

[0056] It should be understood that the specific embodiments described here are intended only to explain the present invention, not to limit it.

[0057] The technical idea of the present invention is briefly described below.

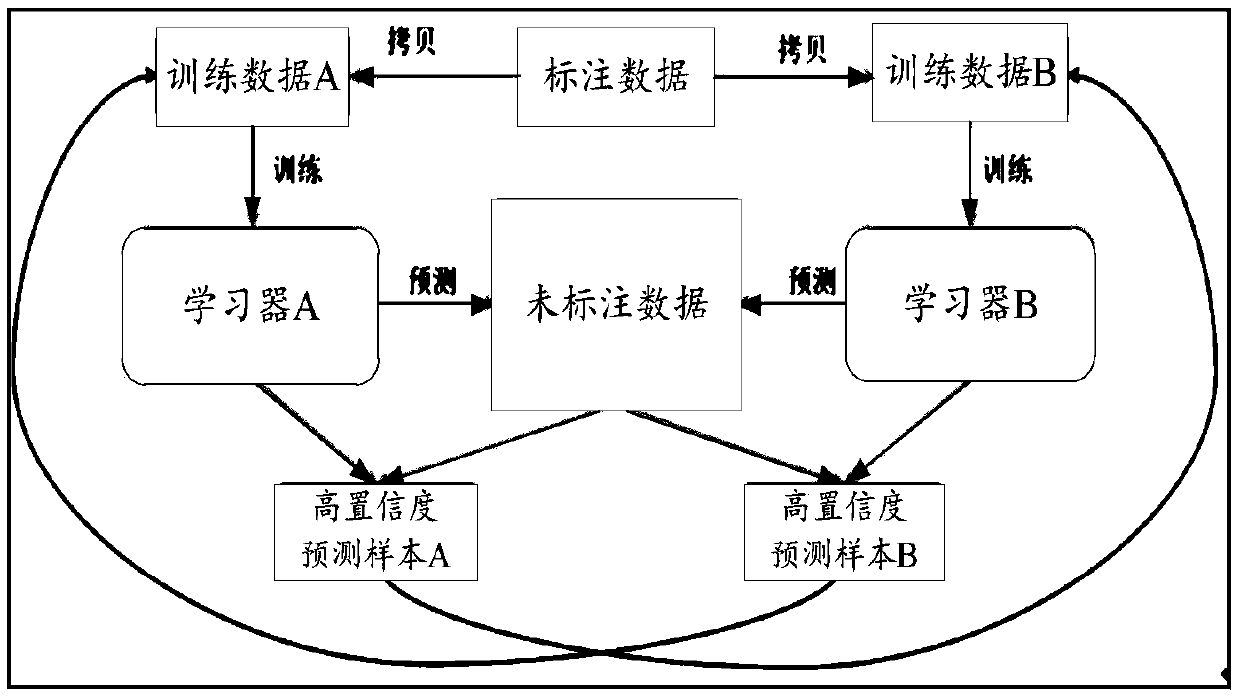

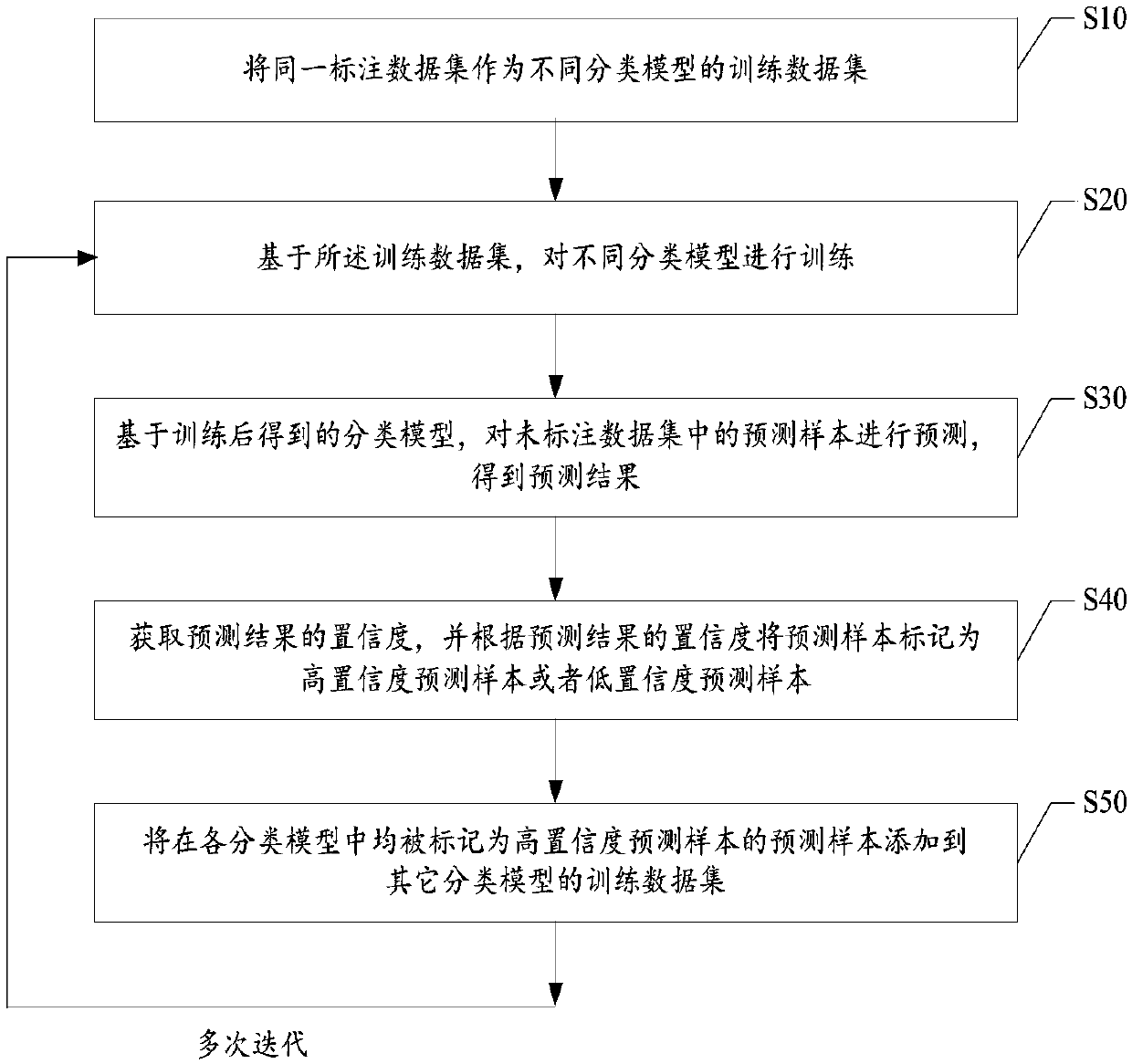

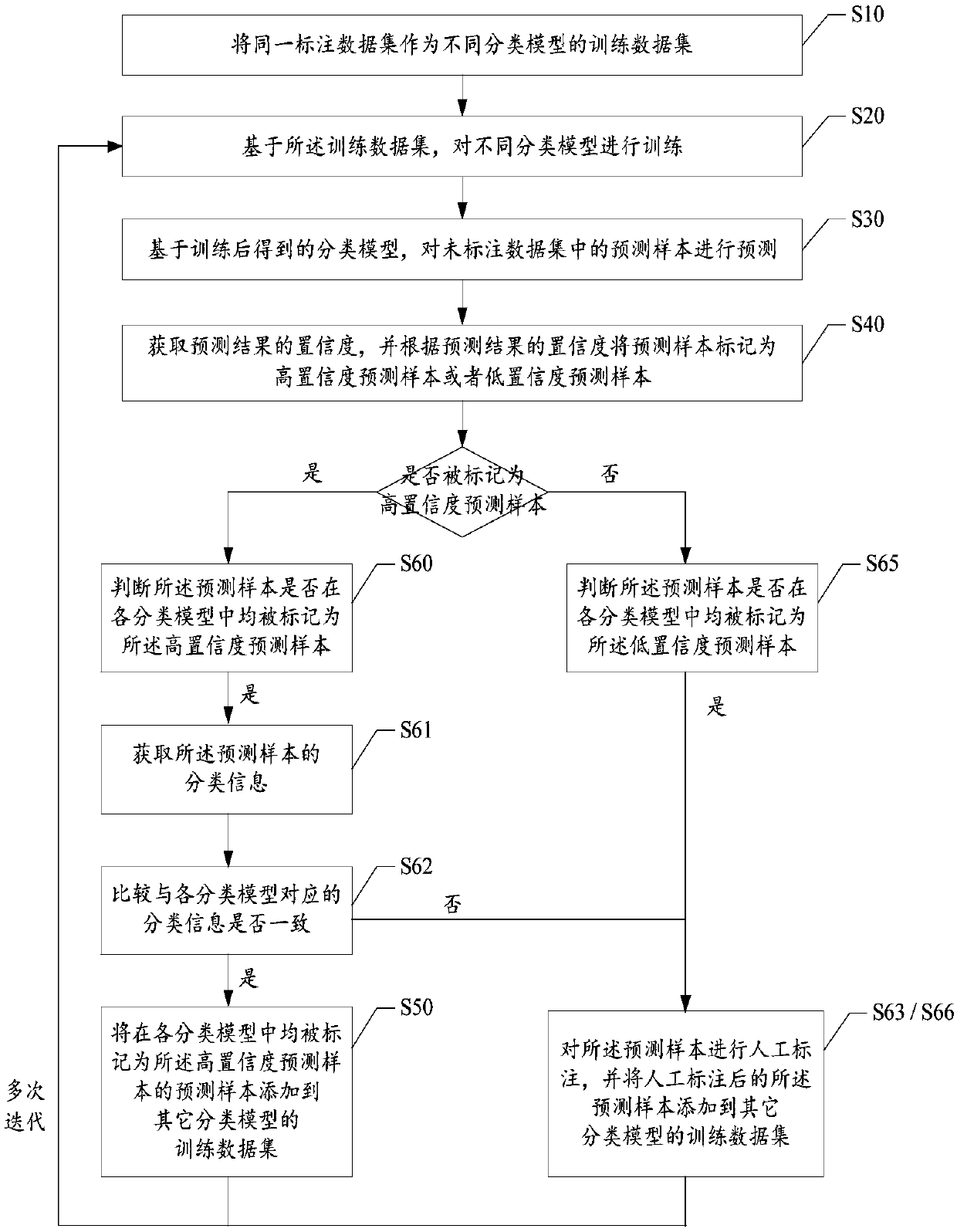

[0058] When training a classification model, the training data set generally has to consist of labeled data. Denote the labeled training data set as $D_k=\{(x_1,y_1),(x_2,y_2),\ldots,(x_k,y_k)\}$. These $k$ samples have been labeled, i.e., their categories $y_i$ are known; they are the labeled samples in the labeled data set of this embodiment. In addition, there is a data set $D_u=\{(x_{k+1},y_{k+1}),(x_{k+2},y_{k+2}),\ldots,(x_{k+u},y_{k+u})\}$ with $k<u$. These $u$ samples have not been labeled, i.e., their categories are unknown; they are the unlabeled samples in the unlabeled data set of this embodiment.
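As a minimal sketch of the two data sets defined above, the following splits a pool of samples into $D_k$ (category known) and $D_u$ (category unknown) and checks the condition $k<u$. The representation is an assumption for illustration only: each $x$ is a customer-service query string, an unknown category is stored as `None`, and the helper `split_datasets` and the example queries are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

# Hypothetical representation: x is a query string, y is its category,
# and y is None when the category is unknown (unlabeled sample).
Sample = Tuple[str, Optional[str]]


def split_datasets(samples: Sequence[Sample]) -> Tuple[List[Sample], List[Sample]]:
    """Split samples into D_k (labeled) and D_u (unlabeled), preserving order."""
    d_k = [(x, y) for x, y in samples if y is not None]
    d_u = [(x, y) for x, y in samples if y is None]
    return d_k, d_u


# Illustrative pool: two labeled queries and three unlabeled ones.
samples: List[Sample] = [
    ("reset my password", "account"),
    ("where is my parcel", "logistics"),
    ("how do I change my address", None),
    ("refund not received", None),
    ("cannot log in", None),
]

d_k, d_u = split_datasets(samples)
k, u = len(d_k), len(d_u)
# The embodiment assumes fewer labeled than unlabeled samples.
assert k < u
```

Keeping the unknown category as `None` mirrors the notation above, where samples in $D_u$ still carry a $y$ slot whose value is simply not known.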

[0059] If a traditional supervised learning method is adopted, only $D_k$ can be used for th...
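To illustrate why a supervised learner can only consume $D_k$, here is a sketch using a toy word-vote classifier. The classifier, the names `train_supervised` and `predict`, and the example data are all hypothetical stand-ins, not the model used in this embodiment; the point is only that every training step needs a known category $y$, so the $u$ samples of $D_u$ go unused.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional, Sequence, Tuple

# Hypothetical sample format: (query text, category or None if unknown).
Sample = Tuple[str, Optional[str]]


def train_supervised(d_k: Sequence[Sample]) -> Dict[str, Counter]:
    """Fit a toy word-vote classifier from labeled samples only.

    A sample with an unknown category (y is None) provides no training
    target, so it is rejected rather than silently used.
    """
    word_votes: Dict[str, Counter] = defaultdict(Counter)
    for x, y in d_k:
        if y is None:
            raise ValueError("supervised training requires labeled samples only")
        for word in x.lower().split():
            word_votes[word][y] += 1
    return dict(word_votes)


def predict(model: Dict[str, Counter], x: str) -> Optional[str]:
    """Sum per-word category votes; return the top category, or None if no word is known."""
    total: Counter = Counter()
    for word in x.lower().split():
        total.update(model.get(word, Counter()))
    if not total:
        return None
    return total.most_common(1)[0][0]


d_k = [("reset my password", "account"), ("where is my parcel", "logistics")]
d_u = [("how do I change my address", None), ("refund not received", None), ("cannot log in", None)]

model = train_supervised(d_k)  # only the k labeled samples are usable
usable = len(d_k) / (len(d_k) + len(d_u))  # fraction of data a supervised learner can use
print(predict(model, "parcel delayed"))  # prints "logistics"
```

With $k=2$ and $u=3$ here, a purely supervised learner uses only 40% of the available samples; the larger $u$ is relative to $k$, the more data it leaves unused.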
