An Adaptive Removal Method of Video Compression Artifacts Based on Deep Learning

A technology involving video compression and deep learning, applied in the field of video processing. It addresses the problems of increased coding complexity, lack of adaptive ability and weak robustness. Its effects are to enhance nonlinear expressive ability, alleviate the vanishing-gradient problem, and strengthen communication and reuse (multiplexing).
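
The summary names these effects but not the mechanism that produces them. Relief from vanishing gradients is usually credited to residual connections. Stronger communication and reuse is usually credited to dense connections. The worked equations below show why, assuming (this text does not confirm it) that the network uses such connections. The symbols $\mathcal{F}$, $H_l$, $W_l$ and the training loss $\mathcal{E}$ are illustrative and are not taken from the patent.

$$
\mathbf{x}_{l+1} = \mathbf{x}_l + \mathcal{F}(\mathbf{x}_l; W_l)
\quad\Longrightarrow\quad
\mathbf{x}_L = \mathbf{x}_l + \sum_{i=l}^{L-1} \mathcal{F}(\mathbf{x}_i; W_i)
$$

$$
\frac{\partial \mathcal{E}}{\partial \mathbf{x}_l}
= \frac{\partial \mathcal{E}}{\partial \mathbf{x}_L}
\left( I + \frac{\partial}{\partial \mathbf{x}_l} \sum_{i=l}^{L-1} \mathcal{F}(\mathbf{x}_i; W_i) \right)
$$

$$
\mathbf{x}_l = H_l\big([\mathbf{x}_0, \mathbf{x}_1, \dots, \mathbf{x}_{l-1}]\big),
\qquad [\,\cdot\,] = \text{channel-wise concatenation}
$$

In the residual case, the identity term $I$ carries the gradient from layer $L$ back to layer $l$ without repeated multiplication, so the gradient does not shrink with depth. In the dense case, every layer reads all earlier feature maps directly, which is the sense in which features are shared and reused.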

Embodiment Construction

[0035] As shown in Figures 1-5, and so that those skilled in the art can better understand the technical solution of the present invention, the technical solutions in the embodiments of the present application are described clearly and completely below with reference to the accompanying drawings. The described embodiments are only some, not all, of the embodiments of the present application. All other embodiments obtained by those skilled in the art from the embodiments described in this application without creative effort fall within the protection scope of this application.

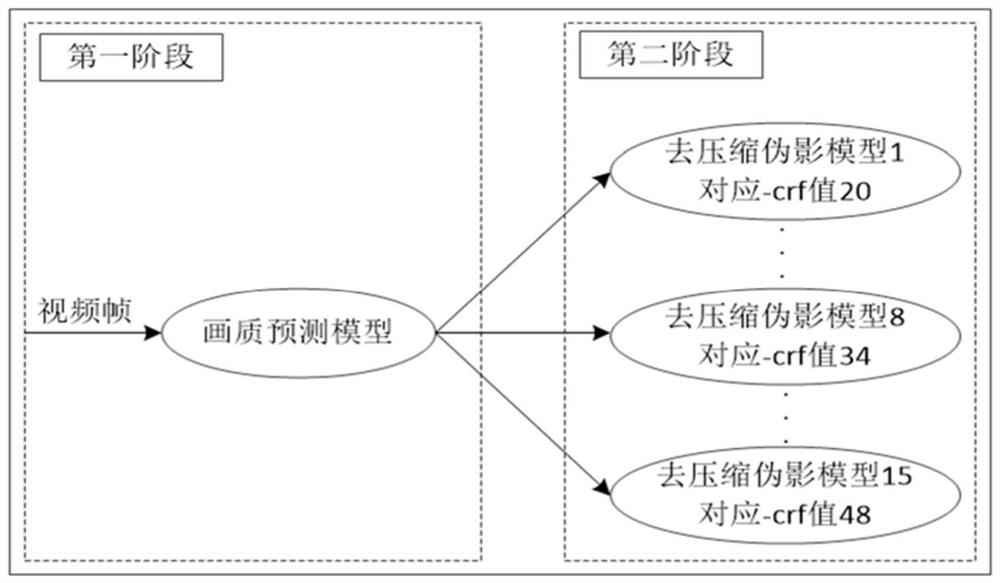

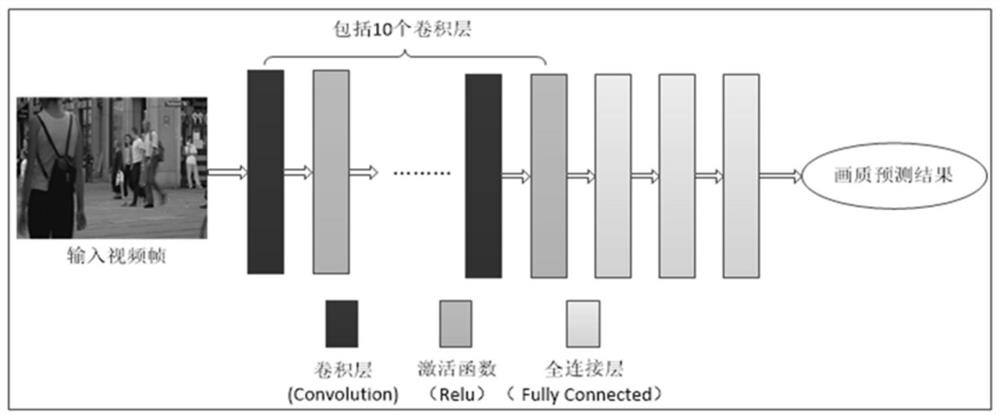

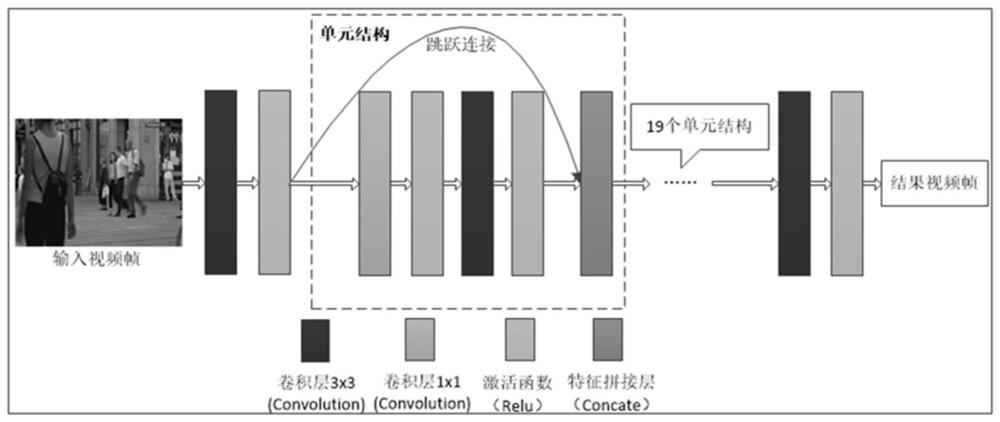

[0036] As can be seen from Figure 1, the present invention comprises two implementation stages: an image quality prediction stage and an artifact removal stage. The invention discloses a method for adaptively removing video compression artifacts based on deep learning, which includes the fo...
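
The two-stage structure can be sketched as a dispatcher. Stage one assigns each frame a discrete quality level. Stage two routes the frame to an artifact-removal model intended for that level. This is a minimal stdlib-only sketch under stated assumptions:

- The patent's quality predictor is a deep network. It is replaced here by a hypothetical `blockiness` heuristic.
- The per-level removal models are hypothetical box-filter placeholders (`smooth`, `MODELS`) standing in for trained networks.
- The thresholds `(2.0, 8.0)` and the three-level split are also assumptions, not values from the patent.

```python
from typing import Callable, Dict, List, Sequence

Frame = List[List[float]]  # grayscale frame: a list of rows of pixel values


def blockiness(frame: Frame, block: int = 8) -> float:
    """HYPOTHETICAL stand-in for the quality-prediction network.

    Returns the mean absolute jump across vertical block boundaries minus the
    mean jump at all other horizontal neighbours; larger means more blocking.
    """
    boundary: List[float] = []
    interior: List[float] = []
    for row in frame:
        for x in range(1, len(row)):
            diff = abs(row[x] - row[x - 1])
            (boundary if x % block == 0 else interior).append(diff)
    if not boundary or not interior:
        return 0.0
    return sum(boundary) / len(boundary) - sum(interior) / len(interior)


def predict_quality_level(frame: Frame,
                          thresholds: Sequence[float] = (2.0, 8.0)) -> int:
    """Stage 1 (sketch): map the score to a level, 0 = mild ... 2 = severe."""
    score = blockiness(frame)
    return sum(score > t for t in thresholds)  # count of thresholds exceeded


def smooth(frame: Frame, radius: int) -> Frame:
    """PLACEHOLDER removal model: horizontal box filter of the given radius."""
    if radius == 0:
        return [row[:] for row in frame]  # level 0: pass the frame through
    out: Frame = []
    for row in frame:
        n = len(row)
        new_row = []
        for x in range(n):
            lo, hi = max(0, x - radius), min(n, x + radius + 1)
            new_row.append(sum(row[lo:hi]) / (hi - lo))
        out.append(new_row)
    return out


# HYPOTHETICAL: one removal model per predicted quality level.
MODELS: Dict[int, Callable[[Frame], Frame]] = {
    0: lambda f: smooth(f, 0),
    1: lambda f: smooth(f, 1),
    2: lambda f: smooth(f, 2),
}


def remove_artifacts(frame: Frame) -> Frame:
    """Stage 2 (sketch): run the model selected by the predicted level."""
    level = predict_quality_level(frame)
    return MODELS[level](frame)


if __name__ == "__main__":
    blocky = [[0.0] * 8 + [20.0] * 8 for _ in range(4)]
    print(predict_quality_level(blocky))  # → 2
```

Routing on a predicted level, rather than running one fixed model on every frame, is what makes the removal adaptive in this sketch. A flat frame passes through unchanged, while a frame with a sharp jump at a block boundary receives the strongest filter.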
