A positioning and three-dimensional wireframe structure reconstruction method and system fusing a binocular camera and an IMU

A technology involving binocular cameras and camera coordinate systems, applied in fields such as surveying and navigation, image enhancement, and instruments. It addresses problems such as the inability to describe the environment, the loss of key structural information, and high computational cost.

Embodiment Construction

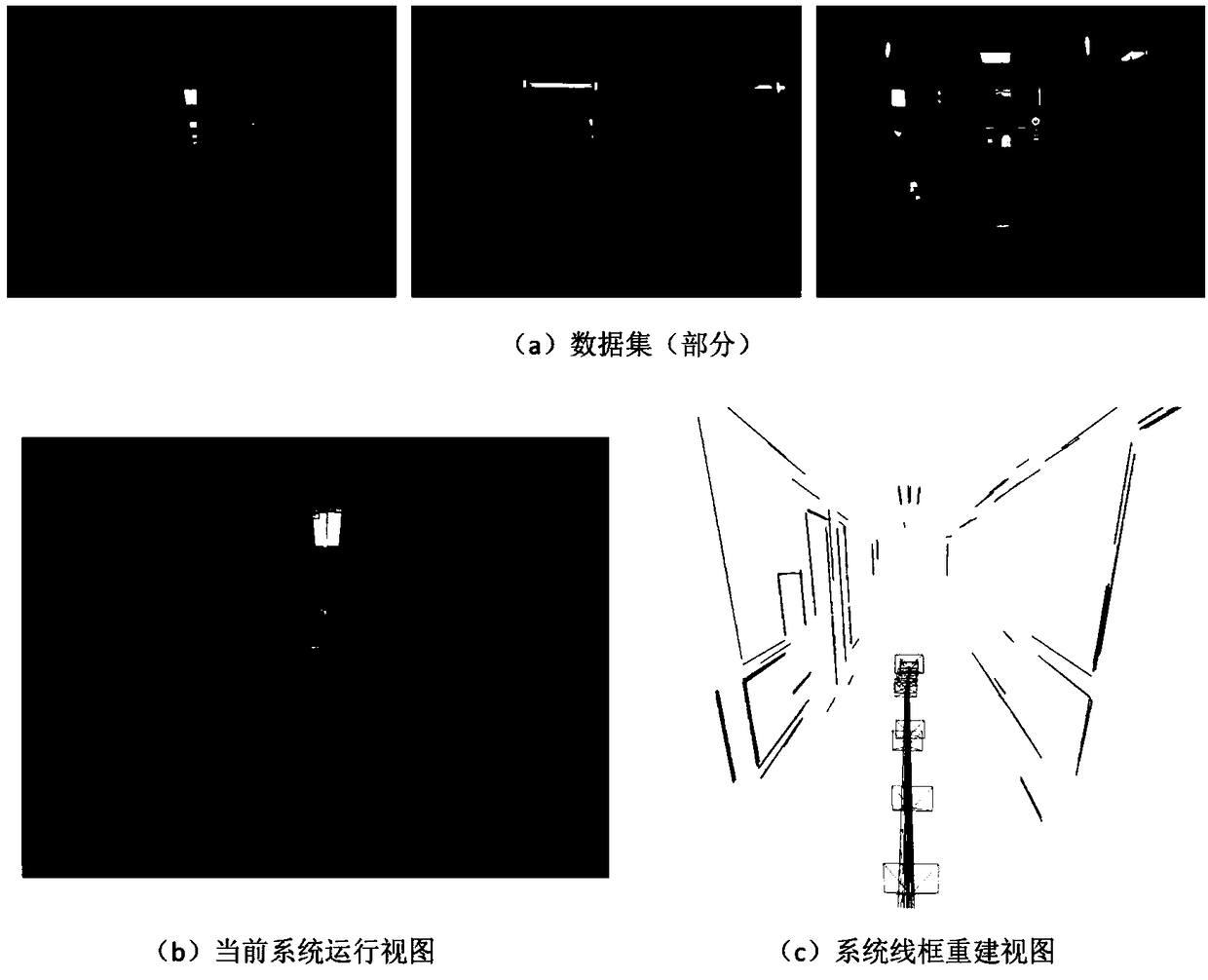

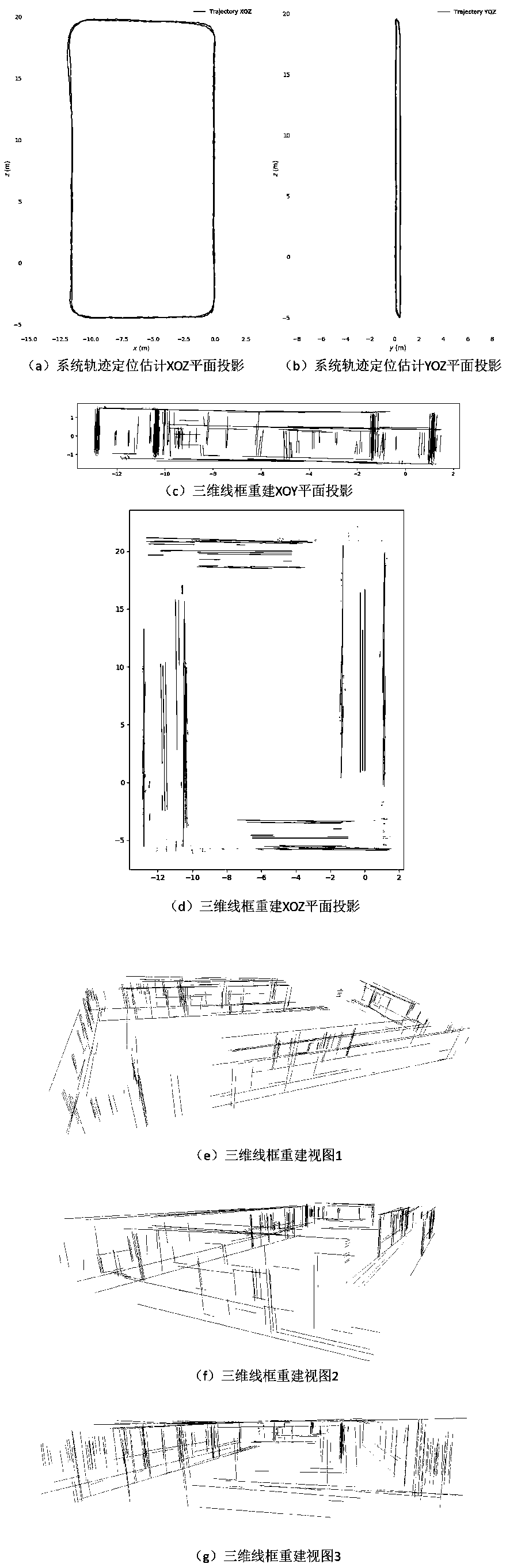

[0120] The technical solutions of the present invention will be further described below in conjunction with the accompanying drawings and embodiments.

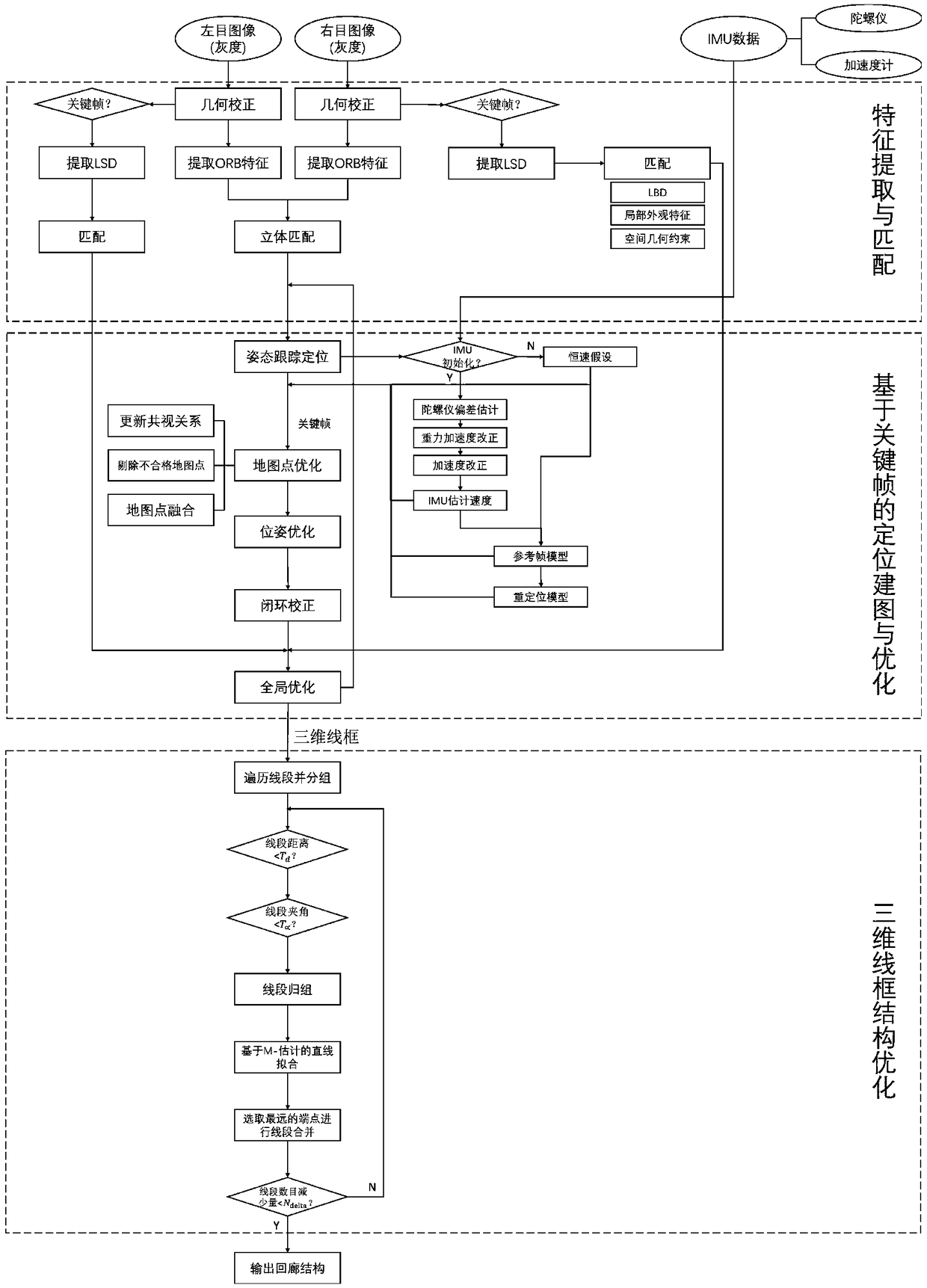

[0121] The embodiment of the present invention provides a positioning and three-dimensional wireframe structure reconstruction system that fuses a binocular camera and an IMU, including the following modules:

[0122] The data acquisition module reads and preprocesses the low-frequency image stream captured by the binocular camera and the high-frequency data collected by the accelerometer and gyroscope in the inertial measurement unit;
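One common preprocessing step for streams at different rates is to associate each pair of consecutive image frames with the IMU samples recorded between them. The following is a minimal sketch of that association only, not the patent's implementation; the function name `group_imu_between_frames`, the integer millisecond timestamps, and the half-open interval convention are all assumptions made for illustration.

```python
from bisect import bisect_right


def group_imu_between_frames(frame_times, imu_times):
    """Group IMU sample indices by the image-frame interval they fall in.

    Both inputs are sorted lists of timestamps in the same unit.
    For each consecutive frame pair (t0, t1), the returned group holds
    the indices of IMU samples with t0 < t <= t1 (assumed convention).
    """
    groups = []
    for t0, t1 in zip(frame_times, frame_times[1:]):
        lo = bisect_right(imu_times, t0)  # first sample strictly after t0
        hi = bisect_right(imu_times, t1)  # one past the last sample <= t1
        groups.append(list(range(lo, hi)))
    return groups


# Hypothetical rates: frames every 100 ms, IMU samples every 25 ms.
frame_times = [0, 100, 200]
imu_times = list(range(0, 201, 25))
imu_groups = group_imu_between_frames(frame_times, imu_times)
```

With these hypothetical rates, each frame interval receives four IMU samples, which is the batch that would be handed to whatever integration step follows.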

[0123] The feature extraction and matching module extracts and matches feature points in the left and right images, calculates the disparity, and recovers the three-dimensional position of each point in the camera coordinate system; it extracts the key frames in the image stream and the straight line segment features in the key frames, and then based on the local features...
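The disparity-to-position step can be illustrated with the standard pinhole relation for a rectified stereo pair, Z = f·b/d. The sketch below assumes rectified images and a pinhole model; the function name `triangulate_from_disparity` and the intrinsic parameters `fx`, `fy`, `cx`, `cy`, and `baseline` are illustrative and do not come from the patent text.

```python
def triangulate_from_disparity(u_left, v, u_right, fx, fy, cx, cy, baseline):
    """Recover a 3D point in the left camera coordinate system.

    Assumes a rectified stereo pair, so a matched point lies on the same
    image row v in both images and disparity is purely horizontal.
    fx, fy, cx, cy are in pixels; baseline is in metres.
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a valid match")
    z = fx * baseline / disparity  # depth along the optical axis
    x = (u_left - cx) * z / fx     # lateral offset from the optical axis
    y = (v - cy) * z / fy          # vertical offset from the optical axis
    return (x, y, z)


# Hypothetical calibration and one matched feature point.
point = triangulate_from_disparity(
    u_left=370.0, v=240.0, u_right=360.0,
    fx=500.0, fy=500.0, cx=320.0, cy=240.0, baseline=0.1,
)
```

Because depth is inversely proportional to disparity, small disparities (distant points) amplify matching errors, which is one reason such a pipeline would reject non-positive disparities outright.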
