A three-dimensional reconstruction method, apparatus, device and storage medium

A 3D reconstruction technique based on 3D grids, applicable to 3D modeling, image analysis, image enhancement, and related fields. It addresses the problem that GPU-dependent reconstruction is not portable, and it improves portability while reducing complexity.


Examples

Embodiment 1

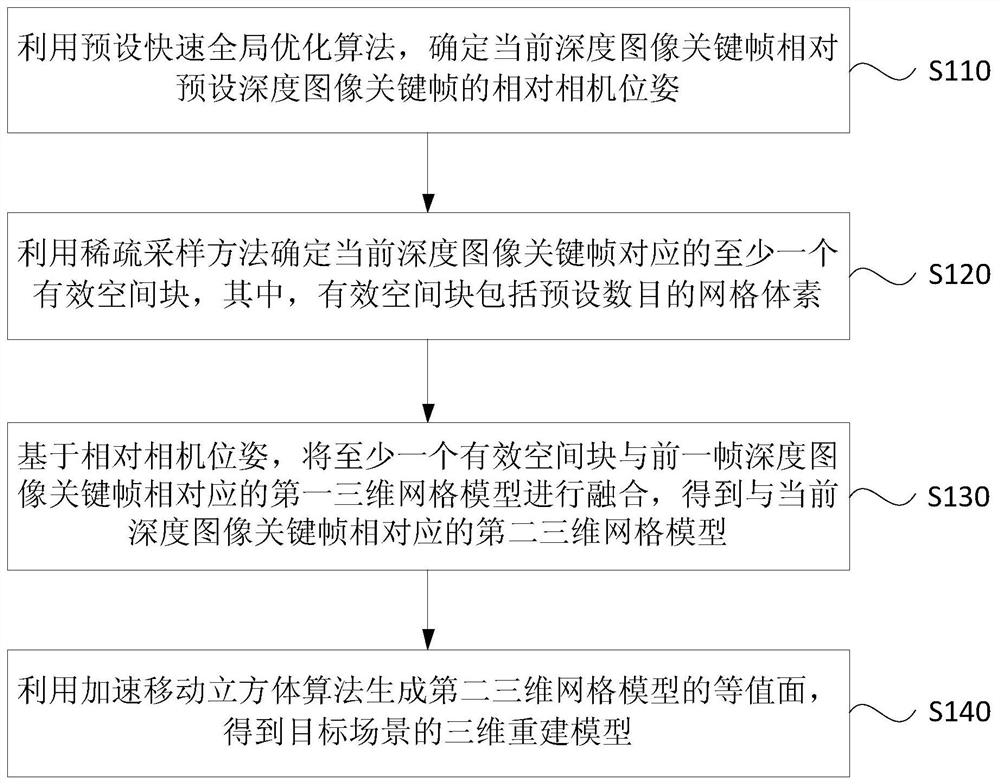

[0030] Figure 1 is a flow chart of a 3D reconstruction method provided by Embodiment 1 of the present invention. This embodiment is applicable to real-time 3D reconstruction of a target scene based on a depth camera. The method can be performed by a 3D reconstruction device, which can be implemented in software and/or hardware and can be integrated into a smart terminal (mobile phone, tablet computer) or a 3D visual interaction device (VR glasses, wearable helmet). As shown in Figure 1, the method specifically includes:

[0031] S110. Using a preset fast global optimization algorithm, determine the camera pose of the current depth image key frame relative to the preset depth image key frame.

[0032] Preferably, the current depth image key frame corresponding to the current target scene is acquired by the depth camera. The target scene may preferably be an indoor space s...
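The excerpt does not describe the fast global optimization algorithm itself, so the following is only a minimal sketch of what a relative camera pose is, not of how S110 computes it. It assumes, purely for illustration, that each key frame already has a known 4x4 rigid camera-to-world transform; the function names `invert_rigid`, `matmul4` and `relative_pose` are hypothetical.

```python
def invert_rigid(T):
    """Invert a 4x4 rigid transform [R | t; 0 0 0 1] given as nested lists."""
    R = [row[:3] for row in T[:3]]
    t = [row[3] for row in T[:3]]
    # For a rotation matrix the inverse is the transpose.
    Rt = [[R[j][i] for j in range(3)] for i in range(3)]
    # The inverse translation is -R^T * t.
    t_inv = [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [Rt[i] + [t_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def matmul4(A, B):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def relative_pose(T_ref, T_cur):
    """Pose of the current key frame expressed in the reference key frame.

    Assumption: T_ref and T_cur are camera-to-world transforms.
    """
    return matmul4(invert_rigid(T_ref), T_cur)


# Usage: two cameras that differ only by a translation.
T_ref = [[1.0, 0.0, 0.0, 1.0],
         [0.0, 1.0, 0.0, 0.0],
         [0.0, 0.0, 1.0, 0.0],
         [0.0, 0.0, 0.0, 1.0]]
T_cur = [[1.0, 0.0, 0.0, 1.0],
         [0.0, 1.0, 0.0, 2.0],
         [0.0, 0.0, 1.0, 0.0],
         [0.0, 0.0, 0.0, 1.0]]
rel = relative_pose(T_ref, T_cur)
```

In a real pipeline the absolute poses are usually not known in advance; the optimization step estimates the relative pose directly from the depth data.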

Embodiment 2

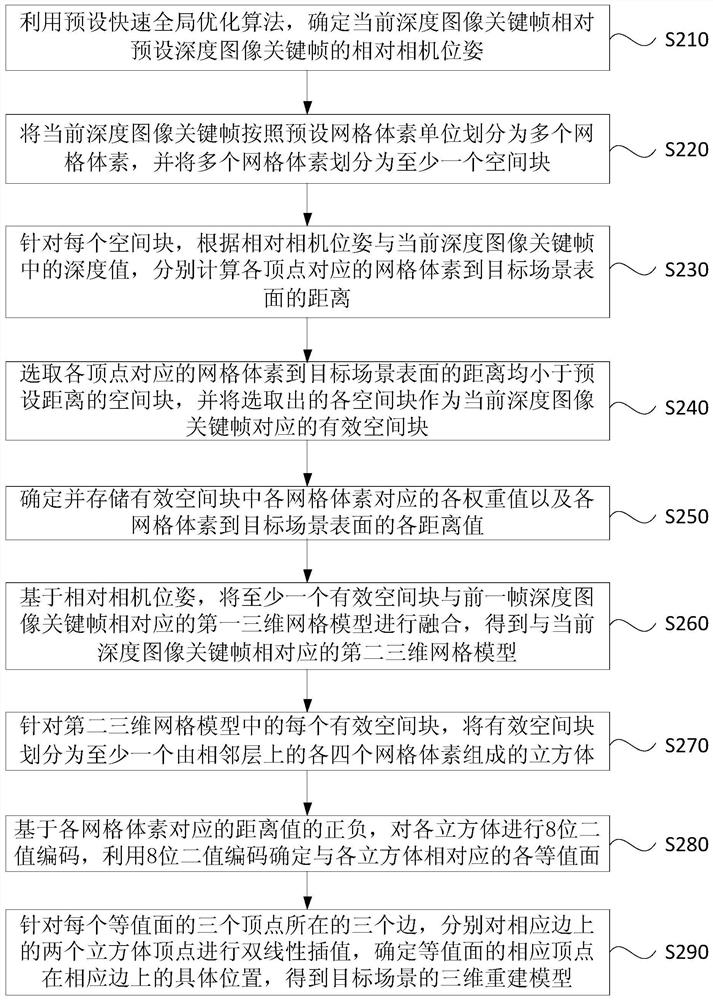

[0096] Figure 2 is a flow chart of a 3D reconstruction method provided by Embodiment 2 of the present invention. This embodiment is a further optimization of the foregoing embodiments. As shown in Figure 2, the method specifically includes:

[0097] S210. Using a preset fast global optimization algorithm, determine the camera pose of the current depth image key frame relative to the preset depth image key frame.

[0098] S220. Divide the key frame of the current depth image into multiple grid voxels according to the preset grid voxel unit, and divide the multiple grid voxels into at least one spatial block.

[0099] The preset grid voxel unit is preferably chosen according to the accuracy of the 3D model required for real-time 3D reconstruction. For example, to reconstruct a 3D model on a CPU at 30 Hz with a grid voxel precision of 5 mm, you can use 5 mm as the preset grid voxel unit to con...
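The division in S220 can be sketched as follows. This is a minimal illustration, not the patent's implementation: it assumes the depth key frame has already been back-projected into 3D points in metres, uses the 5 mm voxel unit from the example above, and picks a block edge of 8 voxels as a hypothetical value (the excerpt does not state a block size). All function names are illustrative.

```python
import math
from collections import defaultdict

VOXEL_SIZE_M = 0.005  # 5 mm grid voxel unit, taken from the example in the text
BLOCK_EDGE = 8        # voxels per block edge; hypothetical, not from the source


def voxel_index(point, voxel_size=VOXEL_SIZE_M):
    """Integer grid coordinates of the voxel containing a 3D point (metres)."""
    return tuple(math.floor(c / voxel_size) for c in point)


def block_index(voxel, block_edge=BLOCK_EDGE):
    """Integer coordinates of the spatial block containing a voxel.

    Floor division keeps negative coordinates in the correct block.
    """
    return tuple(v // block_edge for v in voxel)


def group_into_blocks(points, voxel_size=VOXEL_SIZE_M, block_edge=BLOCK_EDGE):
    """Map each occupied block to the set of occupied voxels inside it."""
    blocks = defaultdict(set)
    for p in points:
        v = voxel_index(p, voxel_size)
        blocks[block_index(v, block_edge)].add(v)
    return dict(blocks)


# Usage: three points, two of which fall into the same block.
points = [(0.012, 0.001, 0.001), (0.043, 0.001, -0.002), (0.013, 0.001, 0.001)]
blocks = group_into_blocks(points)
```

Grouping voxels into blocks lets later steps accept or reject a whole block at once instead of visiting every voxel, which is one common way to keep a CPU-only pipeline within a real-time budget.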

Embodiment 3

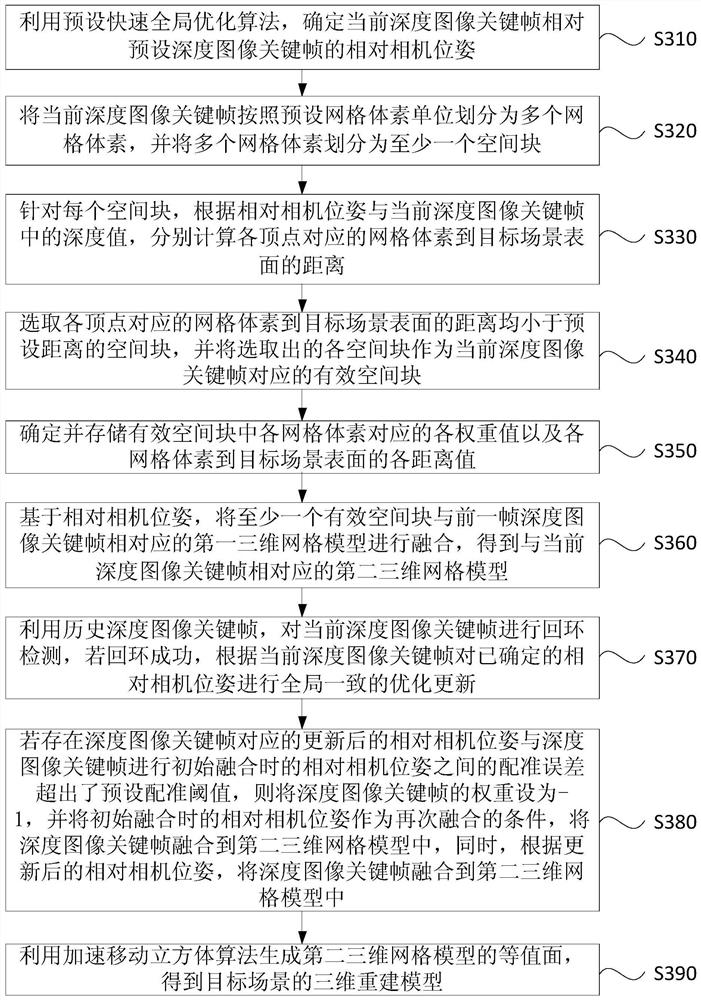

[0119] Figure 3 is a flow chart of a 3D reconstruction method provided by Embodiment 3 of the present invention. This embodiment is a further optimization of the foregoing embodiments. As shown in Figure 3, the method specifically includes:

[0120] S310. Using a preset fast global optimization algorithm, determine the camera pose of the current depth image key frame relative to the preset depth image key frame.

[0121] S320. Divide the key frame of the current depth image into multiple grid voxels according to the preset grid voxel unit, and divide the multiple grid voxels into at least one spatial block.

[0122] S330. For each spatial block, according to the relative camera pose and the depth value in the key frame of the current depth image, respectively calculate the distance from the grid voxel corresponding to each vertex to the surface of the target scene.

[0123] S340. Select space blocks whose distances from gr...
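The per-vertex distance computation in S330 can be sketched as below. The excerpt does not give the distance formula, so this sketch assumes a projective signed distance (measured depth minus the vertex depth along the camera axis), a pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, and a 4x4 transform `T_ref_to_cam` derived from the relative camera pose that maps reference-frame coordinates into the current camera frame. The block edge of 8 voxels is also an assumption. The selection rule of S340 is truncated in the text and is not reproduced here.

```python
from itertools import product


def block_vertices(block, block_edge=8, voxel_size=0.005):
    """Coordinates (metres) of the 8 corner vertices of a cubic spatial block."""
    side = block_edge * voxel_size
    return [tuple((b + o) * side for b, o in zip(block, offs))
            for offs in product((0, 1), repeat=3)]


def transform(T, p):
    """Apply a 4x4 rigid transform (nested lists) to a 3D point."""
    x, y, z = p
    return tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3]
                 for i in range(3))


def projective_distance(p, T_ref_to_cam, depth, fx, fy, cx, cy):
    """Signed distance from point p to the observed surface, or None.

    Positive values lie in front of the surface, negative values behind it.
    Returns None when the point is behind the camera, projects outside the
    image, or hits a pixel with no valid depth (encoded here as <= 0).
    """
    x, y, z = transform(T_ref_to_cam, p)
    if z <= 0:
        return None
    u = int(round(fx * x / z + cx))
    v = int(round(fy * y / z + cy))
    if not (0 <= v < len(depth) and 0 <= u < len(depth[0])):
        return None
    d = depth[v][u]
    if d <= 0:
        return None
    return d - z


def vertex_distances(block, T_ref_to_cam, depth, fx, fy, cx, cy):
    """Distances for all 8 vertices of one spatial block."""
    return [projective_distance(p, T_ref_to_cam, depth, fx, fy, cx, cy)
            for p in block_vertices(block)]


# Usage: a flat wall 1 m in front of an identity-pose camera.
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
depth = [[1.0] * 5 for _ in range(5)]  # 5x5 depth image, metres
dists = vertex_distances((0, 0, 10), identity, depth,
                         fx=1.0, fy=1.0, cx=2.0, cy=2.0)
```

Evaluating only the 8 block vertices, rather than every voxel in the block, gives a cheap bound on how close the block is to the surface before any per-voxel work is done.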
