First-person interactive action recognition method based on global and local network fusion

Relates to local networks and action recognition technology, applied in character and pattern recognition, computing components, image analysis, etc.; it addresses problems such as the inability to achieve high-precision recognition.

Detailed Description of Embodiments

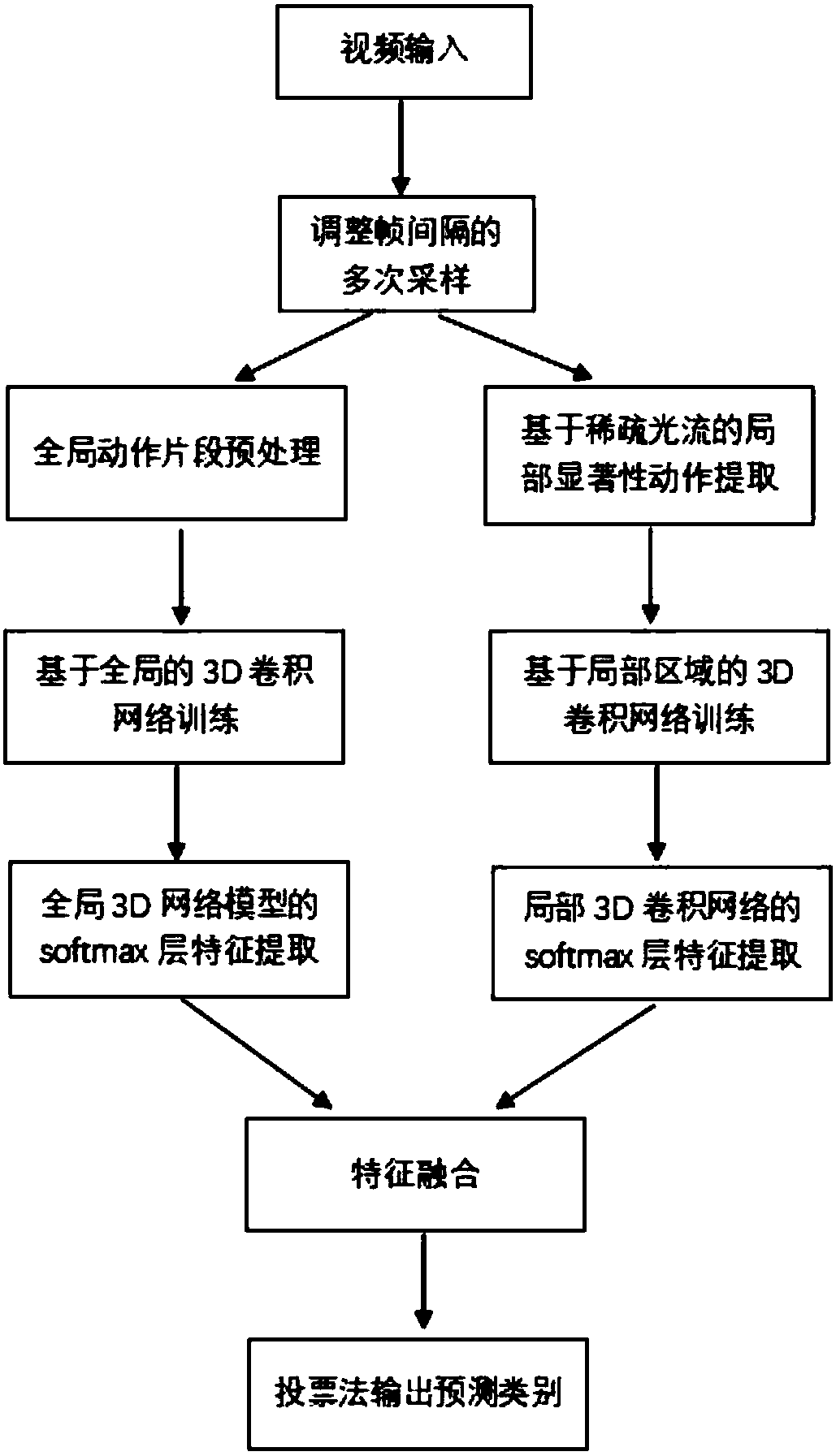

[0018] With reference to Figure 1, a method for recognizing actions in first-person human-computer interaction video based on global and local network fusion includes the following steps:

[0019] Step 1: Sample the video to obtain segments of different actions, taking 16 frames to form each action sample;
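Step 1 fixes the clip length at 16 frames but does not say how those frames are chosen from a longer segment. The sketch below assumes uniform temporal sampling, with segments shorter than 16 frames padded by cycling through their frames; the function names `sample_clip_indices` and `make_action_sample` are hypothetical and not taken from the patent.

```python
def sample_clip_indices(num_frames: int, clip_len: int = 16) -> list[int]:
    """Return clip_len frame indices spread evenly across a segment.

    Assumption (not from the patent): uniform spacing, and segments shorter
    than clip_len are padded by cycling through their frames.
    """
    if num_frames <= 0:
        raise ValueError("segment must contain at least one frame")
    if num_frames >= clip_len:
        # i * num_frames // clip_len is strictly increasing and stays below num_frames.
        return [i * num_frames // clip_len for i in range(clip_len)]
    # Too few frames: repeat them in order until clip_len indices are collected.
    return [i % num_frames for i in range(clip_len)]


def make_action_sample(frames: list, clip_len: int = 16) -> list:
    """Build one action sample (a list of clip_len frames) from a segment."""
    return [frames[i] for i in sample_clip_indices(len(frames), clip_len)]


# Usage: a 40-frame segment becomes a 16-frame action sample.
segment = [f"frame_{k:03d}" for k in range(40)]
sample = make_action_sample(segment)
print(len(sample), sample[:3])
```

Uniform spacing keeps the whole temporal extent of the action in view; a random offset per epoch would be an equally plausible alternative that the excerpt neither confirms nor rules out.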

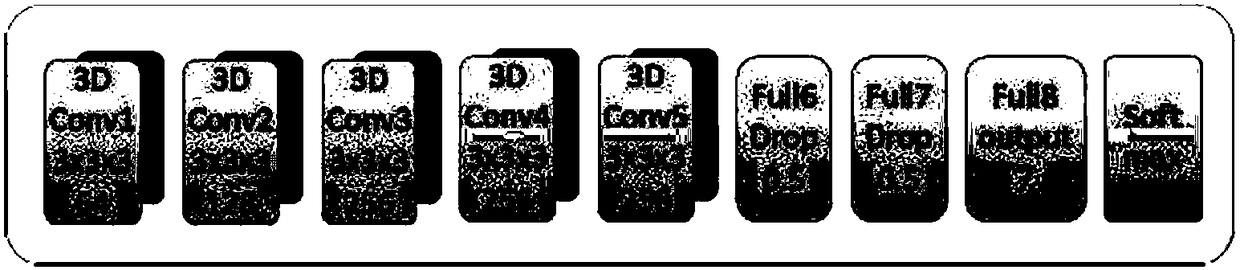

[0020] Step 2: Resize the sampled action segments to a uniform size, apply data augmentation, and train a 3D convolutional network that takes the global image as input to learn the spatiotemporal features of the global action, yielding a network classification model;
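What distinguishes the 3D convolutional network in Step 2 from a per-frame 2D network is that each kernel also spans the time axis, so a single filter can respond to change between frames. The pure-Python sketch below shows only the forward pass of one single-channel 3D convolution (stride 1, no padding, computed as cross-correlation, the way deep-learning libraries implement convolution). It is an illustration, not the patent's network: the architecture, input size, and augmentation scheme are not given in this excerpt, and a trainable model would stack many multi-channel kernels with nonlinearities and pooling. The name `conv3d_valid` is hypothetical.

```python
Volume = list[list[list[float]]]  # indexed [t][y][x]


def conv3d_valid(clip: Volume, kernel: Volume) -> Volume:
    """Single-channel 3D cross-correlation with stride 1 and no padding."""
    T, H, W = len(clip), len(clip[0]), len(clip[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    if kt > T or kh > H or kw > W:
        raise ValueError("kernel is larger than the input clip")
    out: Volume = []
    for t in range(T - kt + 1):
        plane = []
        for y in range(H - kh + 1):
            row = []
            for x in range(W - kw + 1):
                # Sum over a kt x kh x kw window: time and space are treated alike.
                acc = 0.0
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            acc += kernel[dt][dy][dx] * clip[t + dt][y + dy][x + dx]
                row.append(acc)
            plane.append(row)
        out.append(plane)
    return out


# Usage: a two-frame temporal-difference kernel fires only where pixels change over time.
clip = [
    [[0.0, 0.0], [0.0, 0.0]],
    [[1.0, 0.0], [0.0, 0.0]],
    [[3.0, 0.0], [0.0, 0.0]],
]
diff_kernel = [[[-1.0]], [[1.0]]]  # shape (kt=2, kh=1, kw=1)
print(conv3d_valid(clip, diff_kernel))
```

A 2D kernel applied to one frame at a time could not produce this response, because it never sees two frames at once; that is the sense in which the network learns "spatiotemporal" features.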

[0021] Step 3: Use sparse optical flow to locate the local region of the action segment in which the salient action occurs;
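Step 3 names sparse optical flow but not how the tracked points become a local region. The sketch below assumes one simple rule: keep the points that moved at least a threshold distance between two frames, take their bounding box, grow it by a margin, and merge the per-frame-pair boxes into one box for the whole clip. In practice the point pairs could come from OpenCV's `cv2.goodFeaturesToTrack` followed by `cv2.calcOpticalFlowPyrLK` (pyramidal Lucas-Kanade); here they are plain coordinate lists so the code runs without OpenCV. The threshold, margin, and the names `salient_box` and `union_boxes` are hypothetical.

```python
import math
from typing import Optional, Sequence

Point = tuple[float, float]
Box = tuple[int, int, int, int]  # (x0, y0, x1, y1); x1 and y1 are exclusive


def salient_box(prev_pts: Sequence[Point], next_pts: Sequence[Point],
                frame_w: int, frame_h: int,
                min_motion: float = 1.0, margin: int = 8) -> Optional[Box]:
    """Bound the tracked points that moved at least min_motion pixels.

    Both the old and new positions of each moving point are included; the box
    is grown by margin pixels and clipped to the frame. Returns None when no
    point moved enough. Threshold and margin are hypothetical defaults.
    """
    moving: list[Point] = []
    for p, q in zip(prev_pts, next_pts):
        if math.dist(p, q) >= min_motion:
            moving.extend((p, q))
    if not moving:
        return None
    xs = [x for x, _ in moving]
    ys = [y for _, y in moving]
    return (
        max(0, math.floor(min(xs)) - margin),
        max(0, math.floor(min(ys)) - margin),
        min(frame_w, math.floor(max(xs)) + 1 + margin),
        min(frame_h, math.floor(max(ys)) + 1 + margin),
    )


def union_boxes(boxes: Sequence[Optional[Box]]) -> Optional[Box]:
    """Merge per-frame-pair boxes into one box covering the whole clip."""
    valid = [b for b in boxes if b is not None]
    if not valid:
        return None
    return (
        min(b[0] for b in valid),
        min(b[1] for b in valid),
        max(b[2] for b in valid),
        max(b[3] for b in valid),
    )


# Usage: the first point barely moves and is ignored; the other two define the region.
prev = [(10.0, 10.0), (50.0, 50.0), (100.0, 20.0)]
nxt = [(10.0, 10.2), (55.0, 50.0), (100.0, 26.0)]
print(salient_box(prev, nxt, 200, 100, min_motion=1.0, margin=5))
```

Merging into a single clip-level box keeps the local crop at the same image position in all 16 frames, which preserves the motion signal that the local network is meant to learn.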

[0022] Step 4: After processing the local regions of the different actions uniformly, adjust the network hyperparameters and train a 3D convolutional network that takes local images as input to learn local salient action features, yielding a network classification model;
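Step 4 says the local regions are processed uniformly before training but leaves that processing unspecified. One plausible reading, assumed here, is to cut the same box out of every frame of the action sample (for instance a clip-level box from Step 3) and rescale it to a fixed size, so that local clips from different actions share one input shape. Nearest-neighbor resizing, the 112 x 112 default, and the names `crop_and_resize` and `local_clip` are hypothetical choices for illustration.

```python
Frame = list[list[float]]  # indexed [y][x]
Box = tuple[int, int, int, int]  # (x0, y0, x1, y1); x1 and y1 are exclusive


def crop_and_resize(frame: Frame, box: Box, out_h: int, out_w: int) -> Frame:
    """Crop box from frame and rescale it to out_h x out_w by nearest-neighbor sampling."""
    x0, y0, x1, y1 = box
    if not (0 <= x0 < x1 <= len(frame[0]) and 0 <= y0 < y1 <= len(frame)):
        raise ValueError("box must be non-empty and lie inside the frame")
    crop_h, crop_w = y1 - y0, x1 - x0
    # Output pixel (r, c) copies the source pixel at the proportional position in the crop.
    return [
        [frame[y0 + r * crop_h // out_h][x0 + c * crop_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def local_clip(clip: list[Frame], box: Box,
               out_h: int = 112, out_w: int = 112) -> list[Frame]:
    """Apply the same crop to every frame so the local clip stays temporally aligned.

    The 112 x 112 default output size is a hypothetical choice, not from the patent.
    """
    return [crop_and_resize(frame, box, out_h, out_w) for frame in clip]


# Usage: upscale the central 2 x 2 region of a 4 x 4 frame to 4 x 4.
frame = [[10.0 * y + x for x in range(4)] for y in range(4)]
print(crop_and_resize(frame, (1, 1, 3, 3), 4, 4))
```

Because every local clip ends up with the same shape regardless of how large the salient region was, a single local network can be trained on all actions; the hyperparameter adjustment the step mentions would then be tuned on these fixed-size inputs.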

[0023] Step 5: Fuse the global...

© 2025 PatSnap. All rights reserved.